Method and device for allocating and controlling buffer space of multiple queues

The technology concerns cache space shared by multiple queues and is applied to the allocation and control of multi-queue cache space. It addresses the problems of low cache utilization, complex algorithms, and lack of adaptability, with the effects of improving cache utilization, saving hardware resources, and being well suited to hardware implementation.

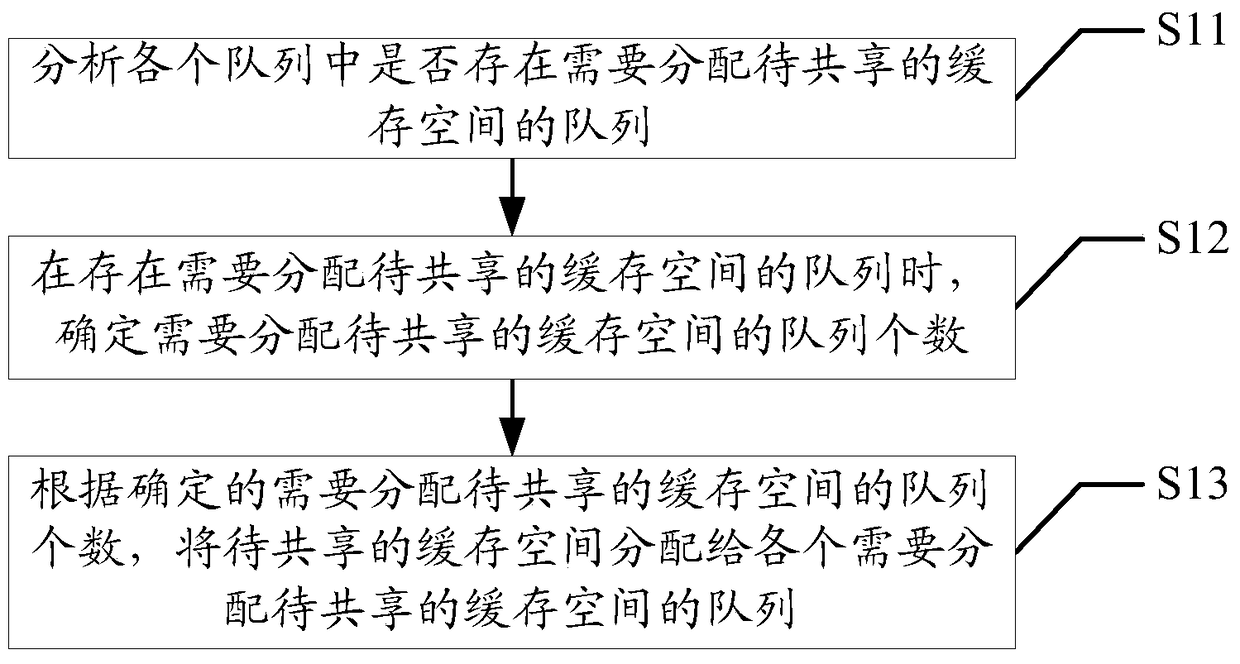

Examples

Example 1

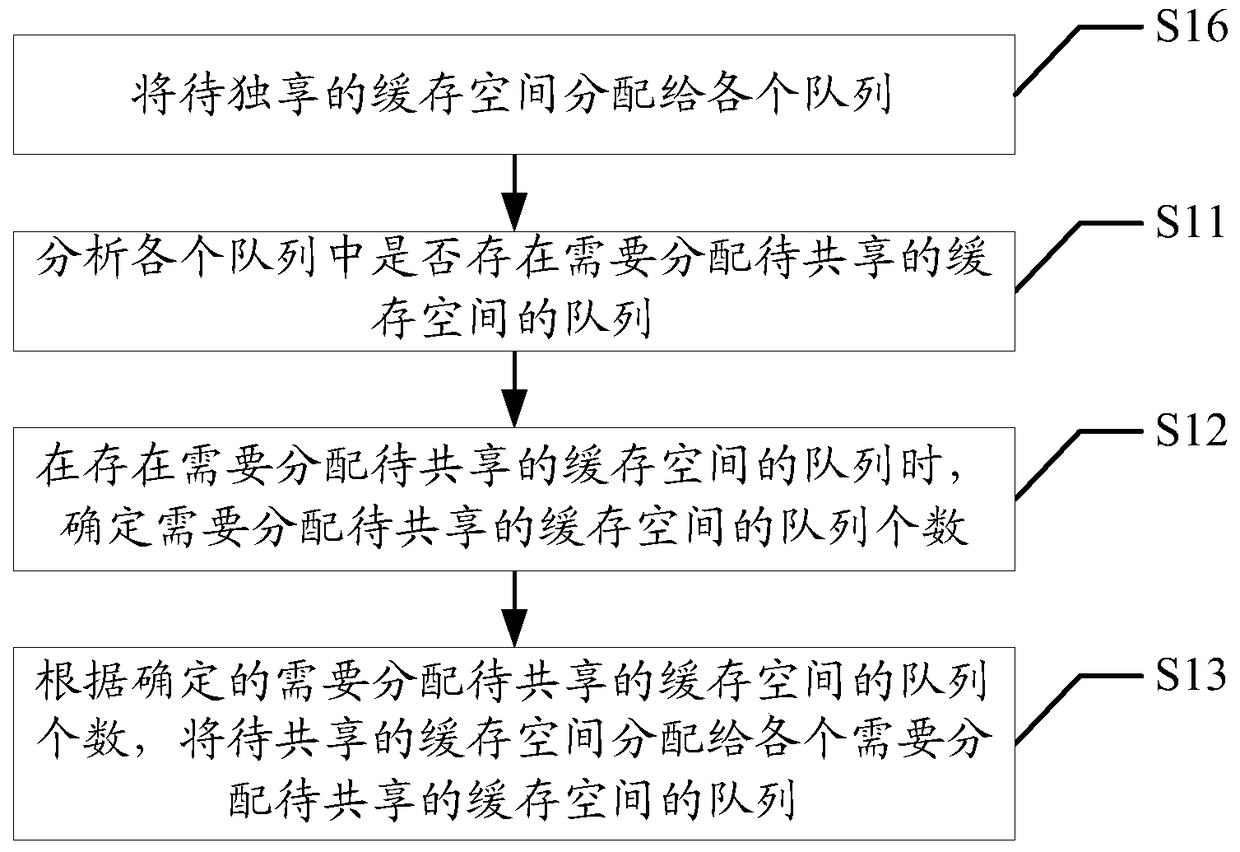

[0056] Based on the first embodiment above, step S13 includes:

[0057] Step S14: reserve a cache space of a preset value within the cache space to be shared, and use the cache space remaining after the reservation as the cache space to be allocated;

[0058] Step S15: according to the determined number of queues that need a portion of the cache space to be shared, allocate the cache space to be allocated among those queues.

[0059] Specifically, obtain the size of the cache space to be shared, that is, the size of the shared cache space allocated for the multiple queues; for example, it can be 100M or any other preset size. Reserve a cache space of a preset value within the cache space to be shared; the preset value can be 10M, 15M, 30M, or any other size set in advance, and the cache space remaining after the reservation is used as the cache space to be allocated. Then, according to the determined number of queues that need a portion of the cache space to be shared, allocate the cache s...
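Steps S14 and S15 can be illustrated with a minimal sketch. The source text is truncated before it states how the cache space to be allocated is divided among the queues, so the equal split used here is only an assumption for illustration, not the patented rule. The function name `plan_shared_cache`, its parameters, and the queue identifiers are likewise hypothetical; sizes are plain integers in the same unit as the example figures (M).

```python
def plan_shared_cache(shared_size, reserved_size, queue_ids):
    """Sketch of steps S14 and S15 (hypothetical helper, not from the patent).

    shared_size   -- size of the cache space to be shared (e.g. 100)
    reserved_size -- preset value held back in step S14 (e.g. 10)
    queue_ids     -- queues determined to need shared cache space
    """
    if not 0 <= reserved_size <= shared_size:
        raise ValueError("reserved size must lie within the shared size")

    # Step S14: what remains after the reservation is the space to be allocated.
    allocatable = shared_size - reserved_size

    if not queue_ids:
        return allocatable, {}

    # Step S15: divide the allocatable space by the number of queues.
    # ASSUMPTION: an equal integer split; the source is cut off before
    # it specifies the actual division rule.
    share = allocatable // len(queue_ids)
    return allocatable, {qid: share for qid in queue_ids}


if __name__ == "__main__":
    allocatable, shares = plan_shared_cache(100, 10, ["q0", "q1", "q2"])
    print(allocatable, shares)
```

With the example figures from the text (100M shared, 10M reserved) and three queues needing space, the space to be allocated is 90M, which under the assumed equal split gives each queue 30M.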
