Method and device for generating a topic model, and method and device for obtaining a topic distribution

A topic-model and topic-distribution technique in the computer field that addresses the low accuracy and poor stability of topic distributions.

Examples

Embodiment 1

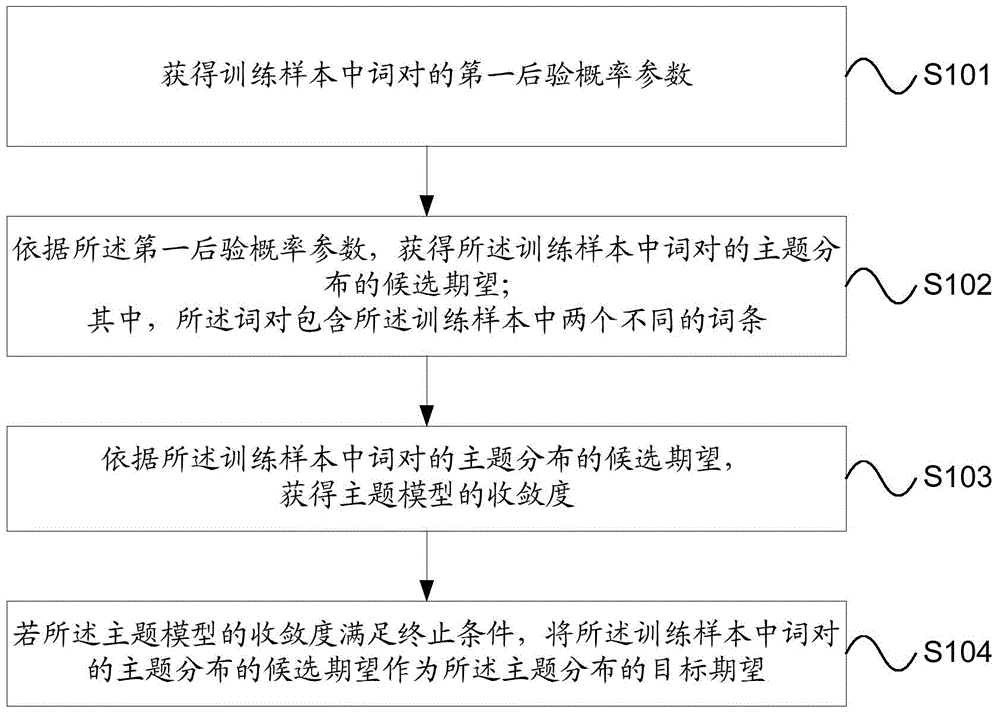

[0077] This embodiment of the present invention provides a method for generating a topic model. Figure 1 is a schematic flowchart of Embodiment 1 of that method. As shown in the figure, the method includes the following steps:

[0078] S101. Obtain a first posterior probability parameter of a word pair in a training sample.

[0079] Specifically, the prior probability parameter of the Dirichlet distribution of the word pairs in the training sample is obtained. The first posterior probability parameter of that Dirichlet distribution is then computed as the sum of a random number and the prior probability parameter, and is used as the first posterior probability parameter of the word pairs in the training sample.

[0080] Or, according to the number of occurrences of the word pairs in the t...
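Paragraph [0079] describes the first posterior probability parameter as the sum of a random number and the Dirichlet prior parameter. A minimal sketch of that initialization follows. The function name `init_first_posterior`, the default prior value, the uniform random draw on [0, 1), and the use of a single scalar per word pair are all assumptions made for illustration; the excerpt does not specify the random distribution or the shape of the parameter.

```python
import random


def init_first_posterior(word_pairs, prior=0.01, seed=0):
    """Return {word_pair: first posterior parameter}.

    Each value is prior + a random number, following paragraph [0079].
    The uniform draw on [0, 1) and the scalar shape are assumptions.
    """
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    return {pair: prior + rng.random() for pair in word_pairs}


if __name__ == "__main__":
    # Hypothetical word pairs taken from a training sample.
    pairs = [("cheap", "phone"), ("phone", "price")]
    for pair, value in init_first_posterior(pairs).items():
        print(pair, round(value, 4))
```

Adding a random number to the prior gives each word pair a different starting value, which is one common way to break symmetry before iterative updates.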

Embodiment 2

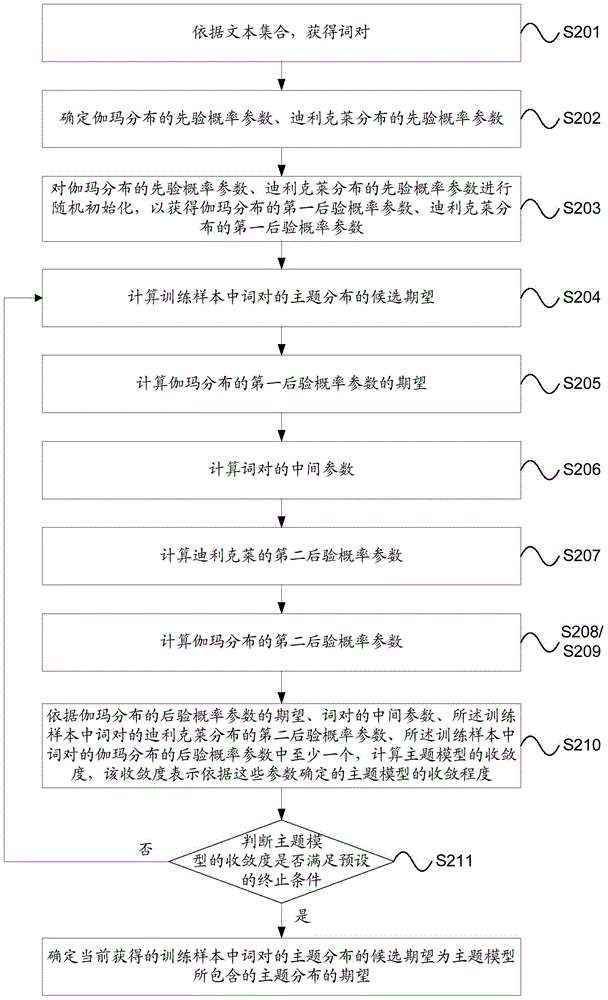

[0093] Building on Embodiment 1, this embodiment describes steps S101 to S104 of Embodiment 1 in detail. Figure 2 is a schematic flowchart of Embodiment 2 of the method for generating a topic model. As shown in the figure, the method includes the following steps:

[0094] S201. Obtain word pairs according to the text set.

[0095] Preferably, the short texts in the training samples are traversed and word segmentation is performed on each one, yielding a set of terms for each short text. A word pair is formed from any two different terms in the term set of the same short text; a word pair is therefore a combination of any two terms that occur in the same short text.

[0096] If a word pair contains punctuation marks, numbers, or stop words, that word pair is removed.
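The word-pair extraction in paragraphs [0095] and [0096] can be sketched roughly as below. This is an illustration under stated assumptions, not the patented implementation: whitespace splitting stands in for word segmentation (a real system would plug in a proper segmenter), and the `STOP_WORDS` set and the function names are hypothetical.

```python
from itertools import combinations
import string

# Hypothetical stop-word list; a real system would load a full one.
STOP_WORDS = {"the", "a", "of", "is"}


def is_valid_token(token):
    """Reject stop words, pure numbers, and pure punctuation."""
    if token.lower() in STOP_WORDS:
        return False
    if token.isdigit():
        return False
    if all(ch in string.punctuation for ch in token):
        return False
    return True


def extract_word_pairs(short_text, tokenize=str.split):
    """Return every unordered pair of distinct tokens in one short text,
    dropping pairs that contain punctuation, numbers, or stop words."""
    tokens = sorted(set(tokenize(short_text)))  # distinct tokens, stable order
    return [
        (a, b)
        for a, b in combinations(tokens, 2)
        if is_valid_token(a) and is_valid_token(b)
    ]


if __name__ == "__main__":
    print(extract_word_pairs("the cheap phone , 2024 price"))
```

Pairs are built per short text, so two tokens that never share a text never form a pair.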

[0097] Prefera...

Embodiment 3

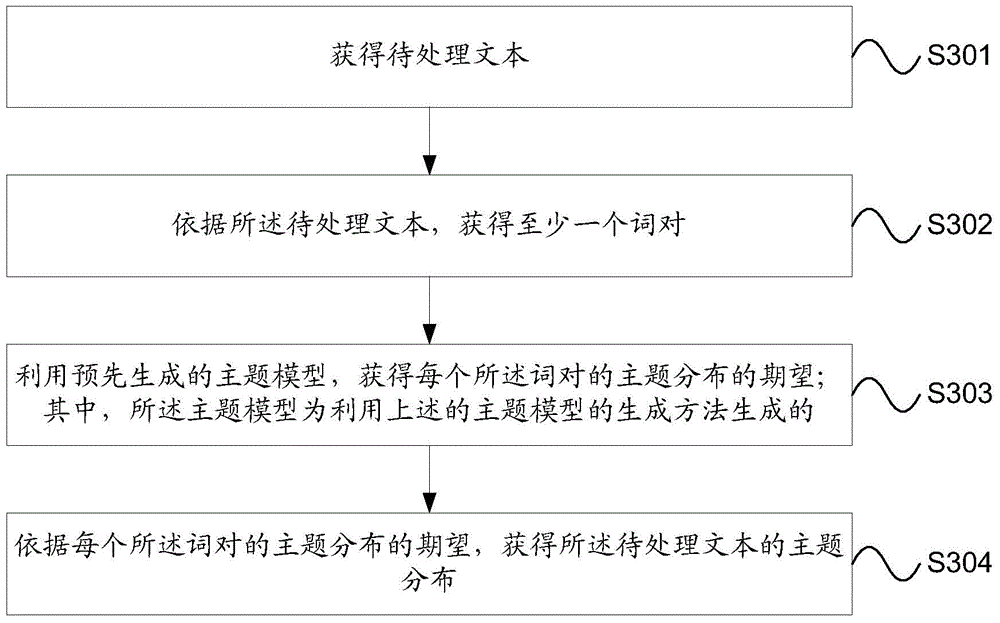

[0152] Based on Embodiments 1 and 2 above, this embodiment of the present invention provides a method for obtaining a topic distribution. Figure 3 is a schematic flowchart of that method. As shown in the figure, the method includes the following steps:

[0153] S301. Acquire text to be processed.

[0154] S302. Obtain at least one word pair according to the text to be processed.

[0155] S303. Using a pre-generated topic model, obtain an expectation of a topic distribution of each word pair; wherein, the topic model is generated by using the above-mentioned method for generating a topic model.

[0156] S304. Obtain the topic distribution of the text to be processed according to the expectation of the topic distribution of each word pair.
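Steps S303 and S304 can be illustrated with a small sketch. The excerpt does not say how the per-word-pair expectations are combined, so the code below makes two labeled assumptions: the model is represented as a hypothetical dictionary `pair_posteriors` mapping each word pair to its Dirichlet posterior parameters over topics, and the text's topic distribution is the unweighted average of the word-pair expectations. The expectation itself uses the standard Dirichlet mean, where component k equals its parameter divided by the sum of all parameters.

```python
def dirichlet_mean(params):
    """Expectation of a Dirichlet distribution: params[k] / sum(params)."""
    total = sum(params)
    return [p / total for p in params]


def text_topic_distribution(word_pairs, pair_posteriors):
    """Average the expected topic distributions of a text's word pairs.

    pair_posteriors is a hypothetical model representation:
    {word_pair: [posterior parameter for each topic]}.
    Uniform averaging is an assumption, not taken from the source.
    """
    expectations = [
        dirichlet_mean(pair_posteriors[pair])
        for pair in word_pairs
        if pair in pair_posteriors  # skip pairs the model has never seen
    ]
    if not expectations:
        raise ValueError("no word pair of the text is covered by the model")
    num_topics = len(expectations[0])
    return [
        sum(e[k] for e in expectations) / len(expectations)
        for k in range(num_topics)
    ]


if __name__ == "__main__":
    model = {("cheap", "phone"): [3.0, 1.0], ("phone", "price"): [1.0, 1.0]}
    print(text_topic_distribution(list(model), model))
```

Because each expectation sums to one, their average is also a valid probability distribution over topics.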

[0157] Preferably, the text to be processed may include, but is not limited to, query text, comment information, microblogs, e...
