Method for computing a three-dimensional bare-body model of a dressed human body using a single Kinect

A three-dimensional bare-body modeling technology for the human body, applied in computing, image data processing, instruments, and related fields. It addresses the problem that existing approaches cannot be fast, economical, and accurate at the same time.

Embodiment Construction

[0072] The present invention will be further described below in conjunction with the accompanying drawings and embodiments.

[0073] Embodiments of the present invention are as follows:

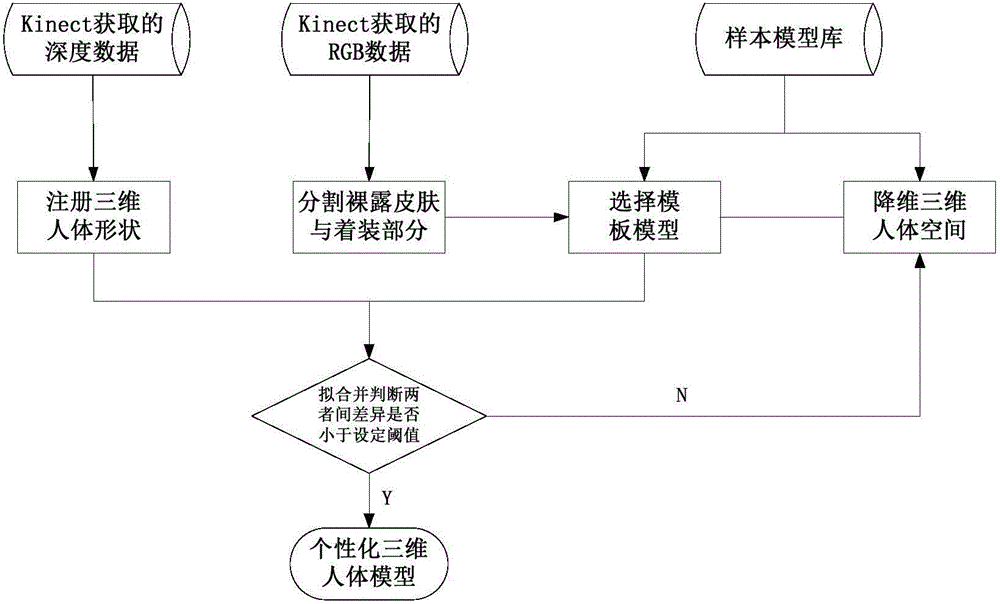

[0074] Step 1: Set up the experimental equipment as shown in Figure 1. The dressed subject stands about 2 meters from the Kinect and rotates through one full turn while the motion is recorded at about 25 frames per second. The recorded data comprise about 200 frames of RGB-D images together with the skeleton pose. Each depth image, which is a function of pixel coordinates, is transformed into a three-dimensional coordinate system (Figure 3(a)), and the bounding box method is used to separate the human body depth data from the background (Figure 3(b)).
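The coordinate transformation and bounding-box separation in Step 1 can be illustrated with a minimal sketch. It assumes a pinhole camera model; the function names `depth_to_points` and `crop_to_box`, the intrinsic parameters (`fx`, `fy`, `cx`, `cy`), the image size, and the box limits are all hypothetical placeholders rather than values from the patent, and a synthetic depth image stands in for a recorded Kinect frame.

```python
import numpy as np

def depth_to_points(depth_m, fx, fy, cx, cy):
    """Back-project a depth image (in meters) to an (H, W, 3) array of
    camera-space points using a pinhole model (an assumption here)."""
    h, w = depth_m.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # pixel column and row grids
    z = depth_m
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.stack([x, y, z], axis=-1)

def crop_to_box(points, box_min, box_max):
    """Keep points with positive depth that lie inside an axis-aligned box."""
    pts = points.reshape(-1, 3)
    valid = pts[:, 2] > 0  # zero depth is treated as a missing measurement
    inside = np.all((pts >= box_min) & (pts <= box_max), axis=1)
    return pts[valid & inside]

# Synthetic frame: a wall at 4 m with a rectangular "subject" at 2 m.
depth = np.full((424, 512), 4.0)
depth[100:300, 200:300] = 2.0

# Placeholder intrinsics, not calibrated values for any real sensor.
points = depth_to_points(depth, fx=365.0, fy=365.0, cx=256.0, cy=212.0)

# Hypothetical box around the subject's standing position (about 2 m away).
body = crop_to_box(points,
                   box_min=np.array([-1.0, -1.5, 1.5]),
                   box_max=np.array([1.0, 1.5, 2.5]))
```

In this sketch the box limits along the depth axis do most of the work: because the subject stands at a known distance of about 2 meters, a fixed box around that position is enough to discard the background in the synthetic frame.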

[0075] Step 2: Denoise the human body depth image frame by frame using the implicit surface method. The denoising results are shown in Figure 4. Since th...
