Multi-level cache method based on historical upgrading and downgrading frequency

A cache and frequency technology, applied in the field of data reading, writing, and storage in computer systems. It addresses problems such as the insufficient description of data block status and the neglect of valuable implicit historical information, and achieves reduced average response time, small space consumption, and reduced bandwidth usage.


Embodiment Construction

[0032] In order to make the above objects, features and advantages of the present invention more comprehensible, the present invention will be further described in detail below in conjunction with the accompanying drawings and specific embodiments.

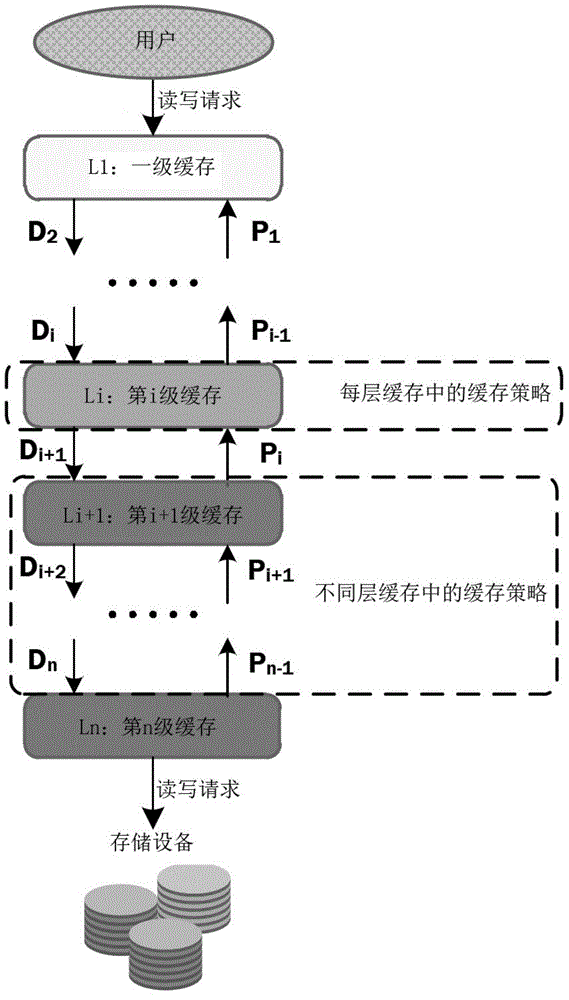

[0033] The present invention provides a multi-level caching method based on historical upgrade and downgrade frequency, including:

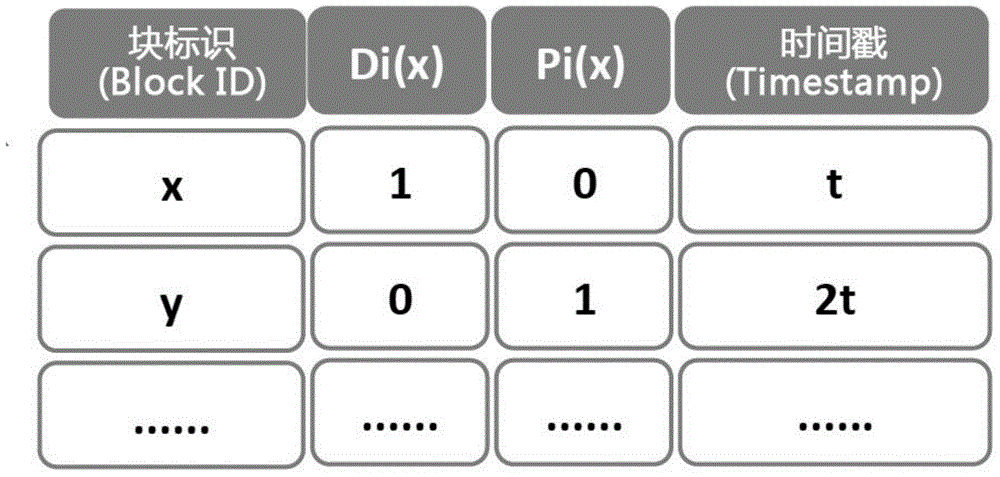

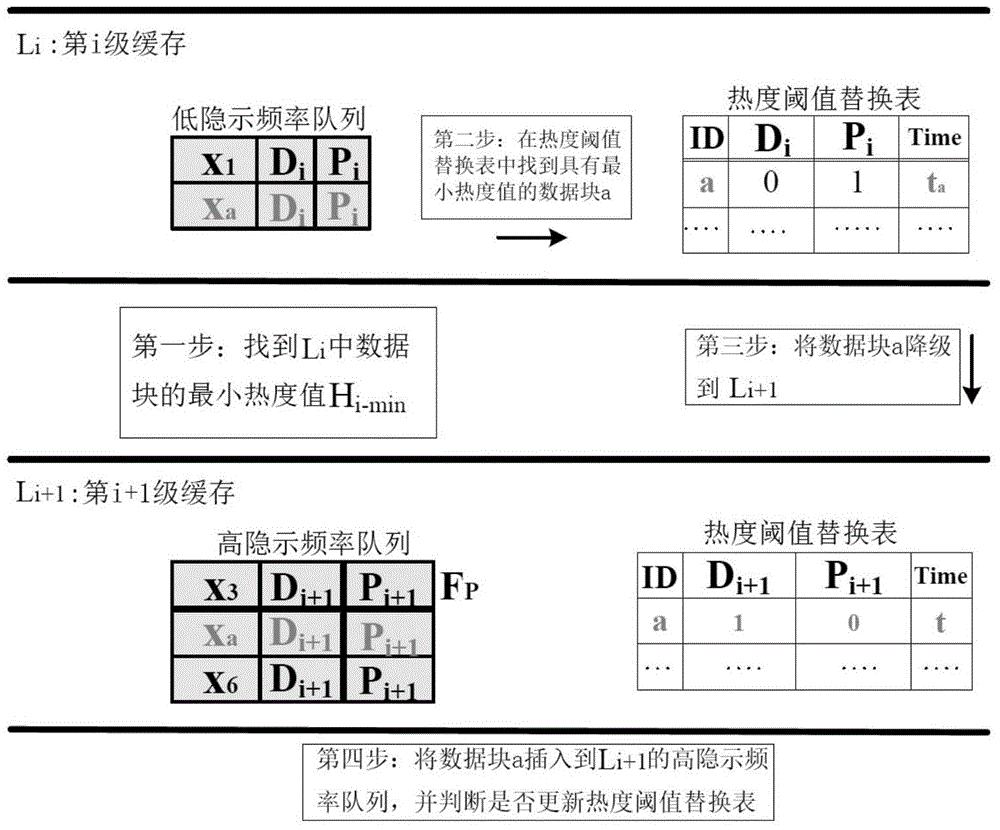

[0034] Step S1: determine the implicit frequency of each data block in each cache level, where the implicit frequency is the sum of the number of times the data block is upgraded and downgraded per unit time;
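As a rough illustration of the Step S1 definition, the sketch below counts each block's upgrades and downgrades inside a sliding window and divides by the window length. The `MoveEvent` record, the `implicit_frequency` function, and the choice of a sliding window as the "unit time" are hypothetical assumptions for illustration, not details taken from the patent.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Literal


# Hypothetical event record (assumption): a data block was moved up
# (upgraded) or down (downgraded) between cache levels at a given time.
@dataclass(frozen=True)
class MoveEvent:
    time: float  # seconds
    block_id: int
    kind: Literal["upgrade", "downgrade"]


def implicit_frequency(
    events: Iterable[MoveEvent], now: float, window: float
) -> Dict[int, float]:
    """Return (upgrades + downgrades) per unit time for every block that
    moved during the window (now - window, now]."""
    if window <= 0:
        raise ValueError("window must be positive")
    counts: Dict[int, int] = defaultdict(int)
    for ev in events:
        if now - window < ev.time <= now:
            # Upgrades and downgrades both add to the same count.
            counts[ev.block_id] += 1
    return {block: n / window for block, n in counts.items()}


events = [
    MoveEvent(1.0, 9, "upgrade"),
    MoveEvent(3.0, 7, "upgrade"),
    MoveEvent(3.5, 7, "downgrade"),
]
print(implicit_frequency(events, now=4.0, window=2.0))  # {7: 1.0}
```

With a 2-second window ending at t = 4.0, block 9's move at t = 1.0 falls outside the window, so only block 7 (two moves in 2 seconds) is reported.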

[0035] Step S2: establish a high implicit frequency queue and a low implicit frequency queue in each cache level, where the high implicit frequency queue stores data blocks with high implicit frequency and the low implicit frequency queue stores data blocks with low implicit frequency; here, in each level of cache of the multi-level cache system, tw...
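One way to picture the two queues of Step S2 is the sketch below, which keeps a high and a low implicit frequency queue for a single cache level. The source text is truncated before it describes how the queues are used, so the fixed `threshold` split, the insertion-order queues, and the `pick_victim` rule that prefers the low queue are all hypothetical assumptions, not the patent's method.

```python
from collections import OrderedDict
from typing import Optional


class CacheLevel:
    """One cache level holding a high and a low implicit-frequency queue.

    Assumptions (not from the source): a fixed threshold splits blocks
    between the two queues, each queue keeps blocks in the order they
    were last placed, and victims are taken from the low queue first.
    """

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold
        self.high: "OrderedDict[int, float]" = OrderedDict()
        self.low: "OrderedDict[int, float]" = OrderedDict()

    def place(self, block_id: int, freq: float) -> None:
        """Insert or re-file a block according to its implicit frequency."""
        self.high.pop(block_id, None)
        self.low.pop(block_id, None)
        target = self.high if freq >= self.threshold else self.low
        target[block_id] = freq  # most recently placed blocks go to the end

    def pick_victim(self) -> Optional[int]:
        """Return the oldest-placed block in the low queue, else in the high
        queue, or None if the level is empty."""
        for queue in (self.low, self.high):
            if queue:
                return next(iter(queue))
        return None


level = CacheLevel(threshold=0.5)
level.place(7, 1.0)
level.place(9, 0.25)
level.place(11, 0.1)
print(level.pick_victim())  # 9
level.place(9, 0.8)  # block 9's frequency rises, so it moves to the high queue
print(level.pick_victim())  # 11
```

Re-filing a block whenever its implicit frequency is recomputed keeps each queue consistent with the current Step S1 values. Under this assumed policy, blocks that rarely move between levels are the first candidates for replacement.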
