Video saliency detection method based on global motion estimation

A video saliency detection method based on global motion estimation, applied in fields such as digital video signal modification, electrical components, and image communication. It addresses problems of existing approaches, such as ignoring the influence of global motion, failing to make full use of the detection results, and obstacles to practical application, and it achieves high robustness, a reasonable design, and strong scalability.

Detailed Description of the Embodiments

[0057] Embodiments of the present invention are described in further detail below in conjunction with the accompanying drawings:

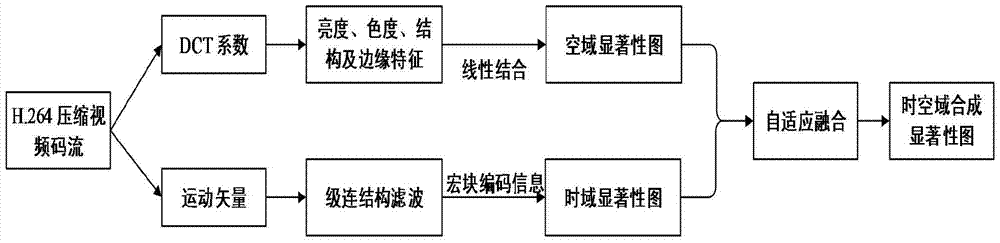

[0058] A video saliency detection method based on global motion estimation, as shown in Figure 1, includes the following steps:

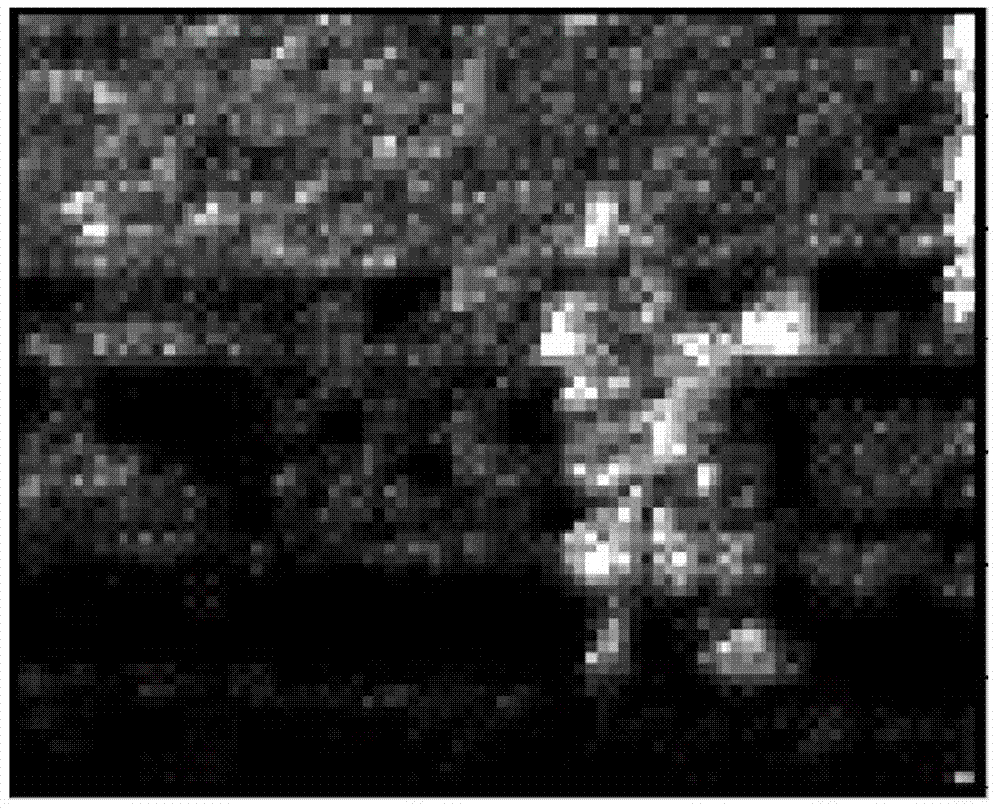

[0059] Step 1. Extract the spatial-domain features and temporal-domain features from the compressed bitstream, and use a two-dimensional Gaussian weight function together with the spatial-domain features to obtain the spatial-domain saliency map.

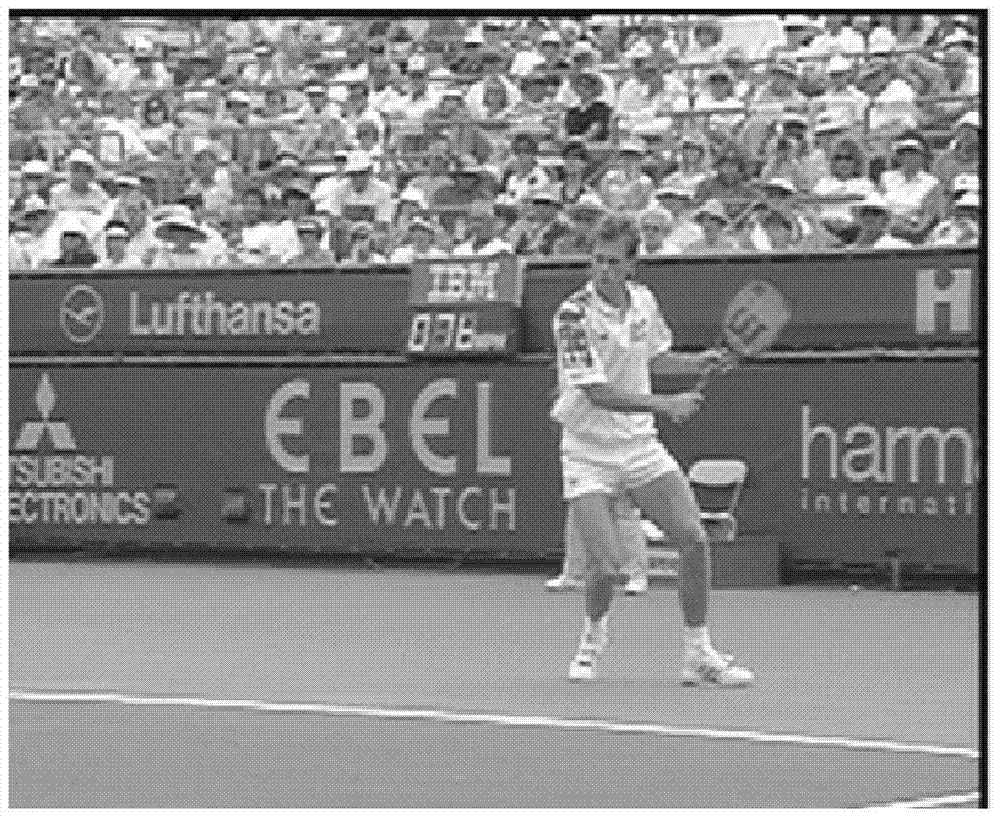
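The text of Step 1 visible here does not give the formula that combines the two-dimensional Gaussian weight function with the spatial-domain features. The sketch below is therefore an assumption, not the patented formula: it uses one common form, in which the saliency of a block is the sum of its absolute feature differences to all other blocks, each weighted by a Gaussian of the distance between the blocks. The function name `gaussian_weighted_contrast`, the parameter `sigma`, and the toy feature map are hypothetical.

```python
import math

def gaussian_weighted_contrast(feature, sigma=5.0):
    """Return a saliency map with the same shape as `feature` (list of lists).

    Assumed form (not taken from the patent text):
        S(i) = sum_j exp(-d(i, j)^2 / (2 * sigma^2)) * |f(i) - f(j)|
    normalized so the largest value is 1. The cost is quadratic in the
    number of blocks, so this is for illustration on small grids only.
    """
    rows, cols = len(feature), len(feature[0])
    cells = [(r, c) for r in range(rows) for c in range(cols)]
    sal = [[0.0] * cols for _ in range(rows)]
    for r, c in cells:
        total = 0.0
        for r2, c2 in cells:
            d2 = (r - r2) ** 2 + (c - c2) ** 2           # squared block distance
            weight = math.exp(-d2 / (2.0 * sigma ** 2))  # 2-D Gaussian weight
            total += weight * abs(feature[r][c] - feature[r2][c2])
        sal[r][c] = total
    peak = max(max(row) for row in sal)
    if peak > 0:
        sal = [[v / peak for v in row] for row in sal]
    return sal

# Toy 6x6 feature map (for example, one DCT-derived feature per block)
# in which a single block differs from its surroundings.
feature = [[0.0] * 6 for _ in range(6)]
feature[2][3] = 1.0
saliency = gaussian_weighted_contrast(feature, sigma=2.0)
```

In this toy case the outlier block receives the highest saliency, because it contrasts with every neighbour, while each other block contrasts only with the outlier.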

[0060] In this step, the original video is compressed with the H.264 reference software JM 18.5, and each frame is divided into 4×4 blocks. For CIF sequences (352×288 pixels), each frame is divided into 88×72 blocks. The motion vector and the DCT coefficients corresponding to each block are extracted: the motion vector represents the temporal-domain information, and the DCT coefficients of each block comprise one direct-current component (DC) and fifteen alternating-current components (AC1 to AC15), extract t...
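The block arithmetic and the DC/AC split described above can be checked with a short sketch. It rests on several assumptions: the method itself reads the coefficients from the H.264 bitstream, whereas this example recomputes a floating-point orthonormal DCT-II from pixel values purely for illustration (H.264 uses an integer approximation of the transform), and the AC components are listed in raster order rather than the codec's scan order. The helper names `block_grid`, `dct_4x4`, and `split_dc_ac` are hypothetical.

```python
import math

BLOCK = 4  # block size in pixels, as stated in the text

def block_grid(width, height, block=BLOCK):
    """Number of blocks per row and per column for a frame."""
    return width // block, height // block

def dct_4x4(block):
    """Orthonormal 2-D DCT-II of a 4x4 block (floating-point illustration)."""
    n = BLOCK

    def alpha(k):
        return math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)

    out = [[0.0] * n for _ in range(n)]
    for u in range(n):
        for v in range(n):
            s = 0.0
            for x in range(n):
                for y in range(n):
                    s += (block[x][y]
                          * math.cos((2 * x + 1) * u * math.pi / (2 * n))
                          * math.cos((2 * y + 1) * v * math.pi / (2 * n)))
            out[u][v] = alpha(u) * alpha(v) * s
    return out

def split_dc_ac(coeffs):
    """Split 16 coefficients into the DC term and 15 AC terms (raster order)."""
    flat = [c for row in coeffs for c in row]
    return flat[0], flat[1:]

# CIF frames are 352x288 pixels, which gives the 88x72 block grid.
grid = block_grid(352, 288)

# A flat block has all of its energy in the DC component.
flat_block = [[100.0] * BLOCK for _ in range(BLOCK)]
dc, ac = split_dc_ac(dct_4x4(flat_block))
```

For a flat block the fifteen AC components are zero up to rounding error, which matches the usual reading of DC as the block's average intensity and AC as its texture content.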
