Three-dimensional convolutional neural network based video classifying method

This method applies three-dimensional convolutional neural networks to video processing. It addresses the shortage of video data resources and reduces the learning complexity of the three-dimensional convolutional neural network, which lowers network complexity, improves classification performance, and increases speed.

Embodiment Construction

[0040] The invention is further described below with reference to the accompanying drawings:

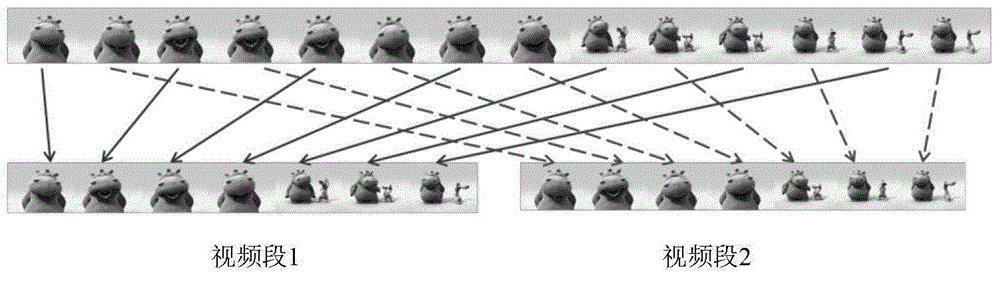

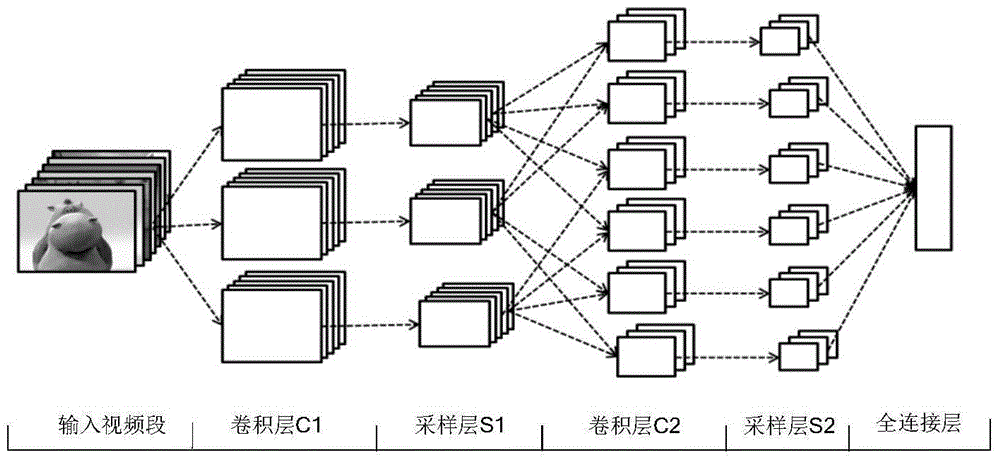

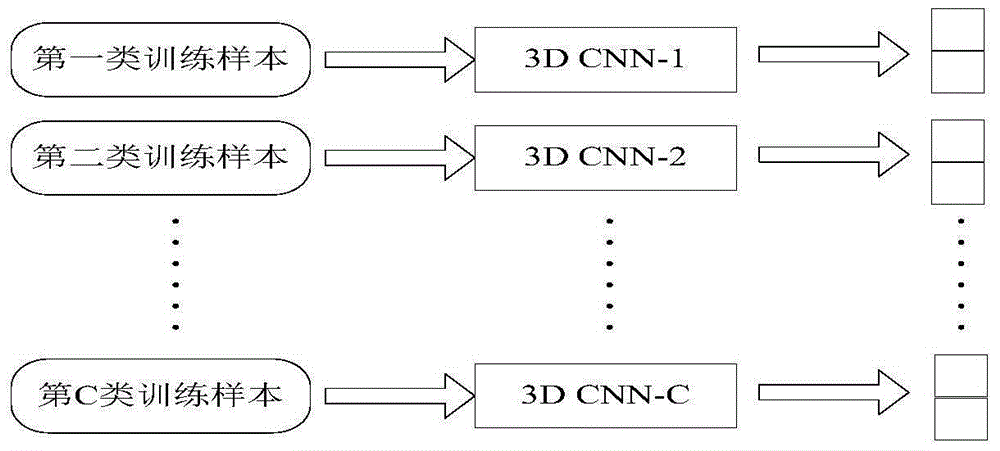

[0041] According to the present invention, a video classification method is provided. First, the videos in the video library are read and their frames are converted to grayscale. Second, each grayscale video is sampled at equal intervals into video segments with a fixed number of frames. For each video class, separate training and test data sets are built with the video segment as the unit, and each segment is given one of two labels: belonging to the class or not belonging to the class. A 3D CNN is initialized for each video class and trained with the training samples of that class, so that it can perform binary classification of video segments as inside or outside the class. The trained 3D CNNs are then connected in parallel, a classification mechanism is set at the end, and the category of...
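The preprocessing and labeling steps in paragraph [0041] can be sketched in NumPy as follows. This is a minimal illustration, not the patented implementation. The function names, the BT.601 luma weights used for grayscale conversion, and the reading of "equal-interval sampling" as "keep every `stride`-th frame, then cut the result into non-overlapping segments of `seg_len` frames" are all assumptions made for the example. The 3D CNNs and the final classification mechanism are not shown.

```python
import numpy as np


def to_gray(frames):
    """Convert RGB frames of shape (T, H, W, 3) to grayscale of shape (T, H, W).

    The BT.601 luma weights are an assumed choice; the source only says
    that the frames are converted to grayscale.
    """
    weights = np.array([0.299, 0.587, 0.114], dtype=np.float32)
    return frames.astype(np.float32) @ weights


def sample_segments(gray, stride, seg_len):
    """Sample frames at equal intervals and group them into fixed-length segments.

    Assumed interpretation: keep every `stride`-th frame, then split the
    sampled frames into non-overlapping segments of `seg_len` frames,
    dropping any incomplete trailing segment.
    Returns an array of shape (num_segments, seg_len, H, W).
    """
    sampled = gray[::stride]
    num_segments = len(sampled) // seg_len
    usable = sampled[: num_segments * seg_len]
    return usable.reshape(num_segments, seg_len, *gray.shape[1:])


def one_vs_rest_labels(segment_classes, target_class):
    """Label each segment 1 if it belongs to `target_class`, otherwise 0.

    One such label vector would be built per class, matching the
    two-label scheme (in the class / not in the class) described above.
    """
    return (np.asarray(segment_classes) == target_class).astype(np.int64)
```

For example, under these assumptions a 20-frame clip with `stride=2` and `seg_len=3` yields three segments of three frames each, and the tenth sampled frame is discarded. Each per-class binary 3D CNN would then be trained on the segments together with the label vector built for its own class.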
