Coastal monitoring and defense decision-making system based on multi-dimensional space fusion and method thereof

A spatial fusion and decision-making technology, applied in multi-dimensional databases, structured data retrieval, resources, etc., that addresses problems such as the inability to carry out collaborative defense and achieves the effect of improving accuracy.

Embodiment Construction

[0063] The present invention will be further described below in conjunction with the accompanying drawings and specific embodiments.

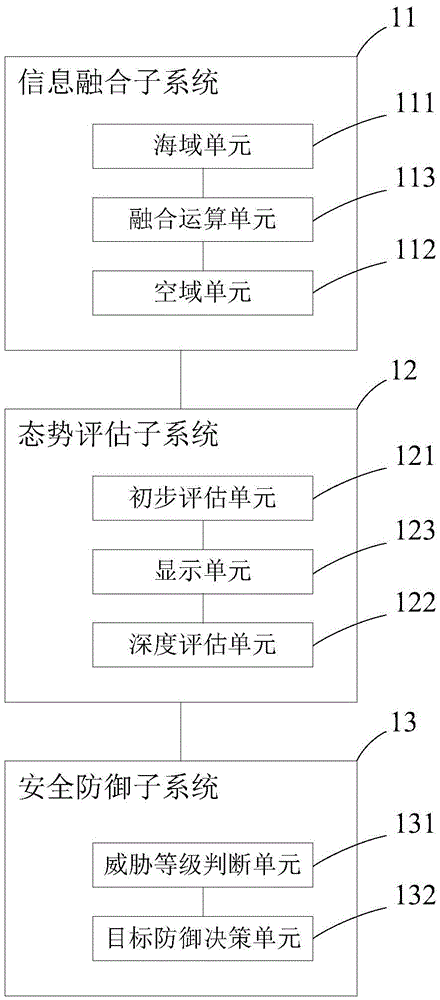

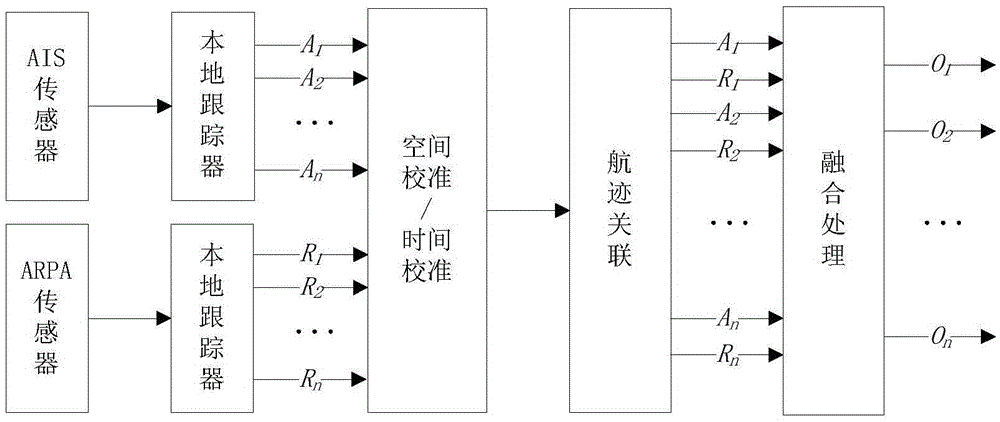

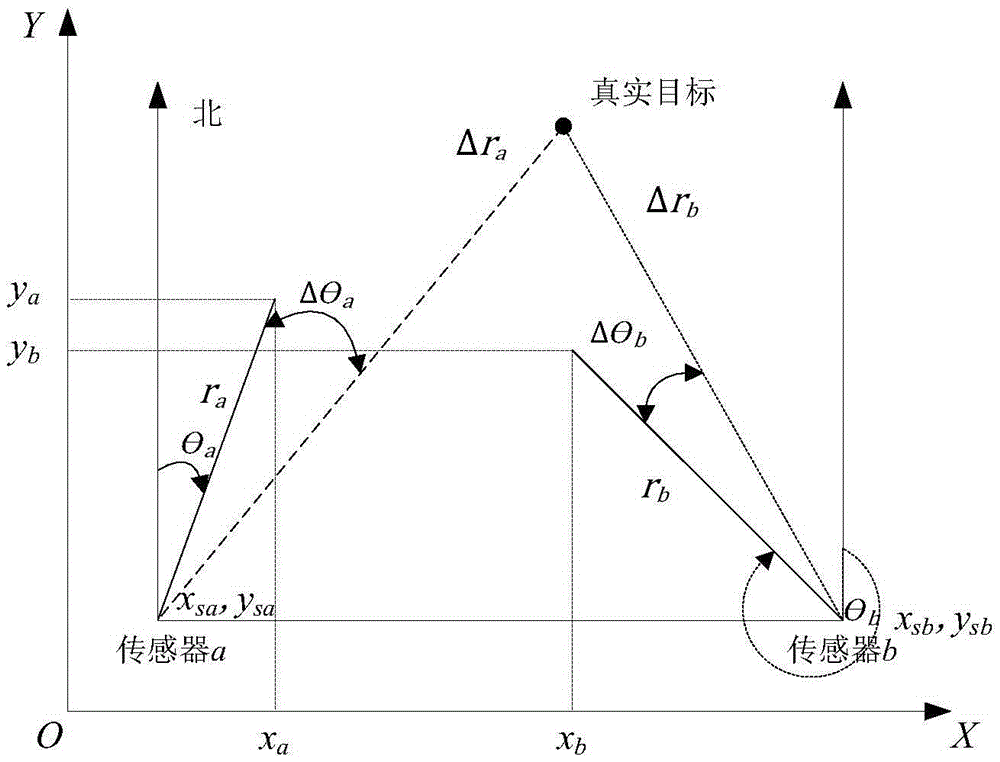

[0064] The present invention provides a coastal monitoring and defense decision-making system based on multi-dimensional space fusion. The system integrates and comprehensively processes multi-platform, multi-sensor monitoring information and data from coastal waters and airspace to provide situation display and threat assessment, thereby supporting decision-making for protecting national sovereignty, safeguarding national security, military defense, marine development, marine management, and the like. The system mainly includes an information fusion subsystem, a situation assessment subsystem, and a security defense subsystem. The information fusion subsystem uses a spatial basis to realize the integrated acquisition, transmission, processing, and networked sharing of multi-dimensional and multi-source ...
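The three-subsystem arrangement described above can be illustrated with a minimal Python sketch. It is not the patented implementation: the names `Report`, `Track`, `fuse`, `assess`, `recommend`, and `run_pipeline`, the position-averaging fusion rule, the 20 km alert radius, and the action strings are all assumptions introduced here only to show how fused data might flow from fusion to assessment to a defense recommendation.

```python
from collections import defaultdict
from dataclasses import dataclass
from math import hypot


@dataclass(frozen=True)
class Report:
    """One observation of a target from one sensor (hypothetical schema)."""
    target_id: str
    sensor: str
    x_km: float  # east offset from the protected site
    y_km: float  # north offset from the protected site


@dataclass(frozen=True)
class Track:
    """Fused estimate for one target."""
    target_id: str
    x_km: float
    y_km: float
    sources: int  # number of reports merged into this track


def fuse(reports):
    """Information fusion stage: average all reports that share a target_id."""
    grouped = defaultdict(list)
    for report in reports:
        grouped[report.target_id].append(report)
    tracks = []
    for target_id, group in sorted(grouped.items()):
        n = len(group)
        tracks.append(Track(
            target_id,
            sum(r.x_km for r in group) / n,
            sum(r.y_km for r in group) / n,
            n,
        ))
    return tracks


def assess(track, alert_radius_km=20.0):
    """Situation assessment stage: threat level from distance to the site."""
    distance_km = hypot(track.x_km, track.y_km)
    return "high" if distance_km <= alert_radius_km else "low"


def recommend(level):
    """Security defense stage: map a threat level to an illustrative action."""
    return {"high": "dispatch patrol", "low": "continue monitoring"}[level]


def run_pipeline(reports):
    """Chain the three stages and return one recommendation per target."""
    return {t.target_id: recommend(assess(t)) for t in fuse(reports)}
```

A real system would replace the averaging step with a proper track-association and filtering method and would weigh far more than distance when assessing threat; the sketch only fixes the order of the stages and the data handed between them.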
