Real-time driver emotion identification method fusing facial expressions and voice

A facial expression and voice recognition technology, applied in speech recognition, character and pattern recognition, and speech analysis, that achieves high-precision, real-time recognition of a driver's negative emotions with high accuracy.


Embodiment Construction

[0029] The present invention will be further described below in conjunction with the accompanying drawings and specific embodiments.

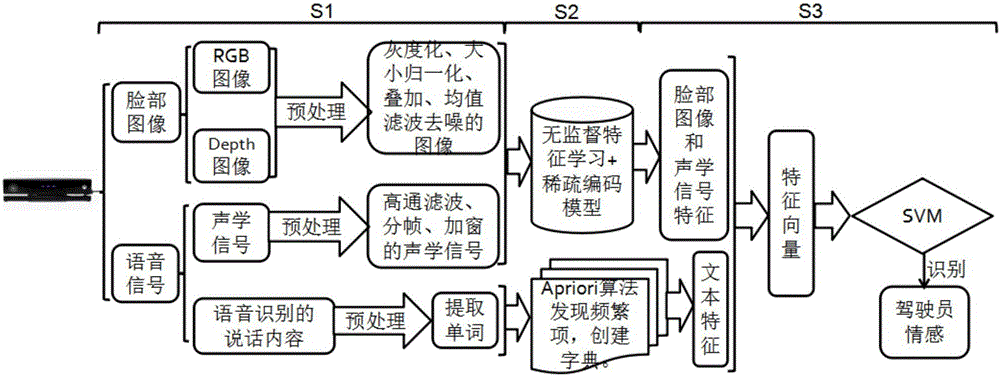

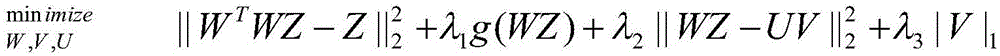

[0030] As shown in Figure 1, which is the flow chart of the method of the present invention, the driver's face is first tracked in real time with the Kinect SDK to obtain the driver's face images (an RGB image and a depth image) and voice signal (comprising the acoustic signal and the speech content). The face images (RGB and depth) and the acoustic signal are then preprocessed, and a feature extraction model based on unsupervised feature learning and sparse coding is trained according to a given objective function. Once the model has been obtained, the preprocessed data are input into the feature extraction model to obtain emotional features based on the facial images and the acoustic signal. In addition, words are extracted from the speech content, a dictionary is built from the frequent words obtained with the Apriori algorithm, and text-based emotional ...
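The paragraph above does not state the objective function used to train the feature extraction model. As a minimal sketch, assuming the standard L1-regularised sparse coding objective ½‖x − Dz‖² + λ‖z‖₁ with a fixed, already-learned dictionary D, the encoding step that turns a preprocessed face or acoustic feature vector x into a sparse code z can be computed with ISTA (iterative shrinkage-thresholding). The objective, the function names, and the parameters `lam` and `step` are illustrative assumptions, not the patent's; learning the dictionary itself is omitted.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # row-major, shape (n_features, n_atoms)


def soft_threshold(v: float, t: float) -> float:
    """Proximal operator of t*|v|: shrink v toward zero by t."""
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0


def mat_vec(D: Matrix, z: Vector) -> Vector:
    """Compute D @ z."""
    return [sum(d_ij * z_j for d_ij, z_j in zip(row, z)) for row in D]


def mat_t_vec(D: Matrix, r: Vector) -> Vector:
    """Compute D^T @ r."""
    n_atoms = len(D[0])
    return [sum(D[i][j] * r[i] for i in range(len(D))) for j in range(n_atoms)]


def ista(x: Vector, D: Matrix, lam: float, step: float, n_iter: int = 200) -> Vector:
    """Assumed objective: minimise 0.5*||x - D z||^2 + lam*||z||_1.

    `step` should be at most 1 / L, where L is the largest eigenvalue of D^T D,
    for the iteration to converge.
    """
    z = [0.0] * len(D[0])
    for _ in range(n_iter):
        residual = [dz - xi for dz, xi in zip(mat_vec(D, z), x)]  # D z - x
        grad = mat_t_vec(D, residual)                             # D^T (D z - x)
        # Gradient step on the quadratic term, then shrinkage for the L1 term.
        z = [soft_threshold(zj - step * gj, step * lam) for zj, gj in zip(z, grad)]
    return z
```

With an identity dictionary the solution reduces to soft-thresholding x, which makes a convenient check: `ista([3.0, 0.2, -1.5], [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]], lam=0.5, step=1.0)` returns `[2.5, 0.0, -1.0]`; small components are driven exactly to zero, which is what makes the resulting features sparse.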
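The dictionary-building step can be sketched with a plain Apriori implementation, assuming (this is not stated in the source) that the set of words in each utterance forms one transaction and that the dictionary keeps every single word whose support reaches a threshold. `apriori` follows the standard level-wise scheme of candidate generation, subset pruning and support counting; `build_dictionary`, `min_support` and the sample utterances are hypothetical.

```python
from itertools import combinations
from typing import Dict, FrozenSet, List, Set


def apriori(transactions: List[Set[str]], min_support: int) -> Dict[FrozenSet[str], int]:
    """Return every itemset contained in at least `min_support` transactions."""
    # Level 1: count single words.
    counts: Dict[FrozenSet[str], int] = {}
    for t in transactions:
        for word in t:
            key = frozenset([word])
            counts[key] = counts.get(key, 0) + 1
    frequent = {s: c for s, c in counts.items() if c >= min_support}
    result = dict(frequent)
    k = 2
    while frequent:
        # Candidate generation: join frequent (k-1)-itemsets into k-itemsets.
        prev = list(frequent)
        candidates: Set[FrozenSet[str]] = set()
        for i in range(len(prev)):
            for j in range(i + 1, len(prev)):
                union = prev[i] | prev[j]
                # Prune: every (k-1)-subset of a candidate must itself be frequent.
                if len(union) == k and all(
                    frozenset(sub) in frequent for sub in combinations(union, k - 1)
                ):
                    candidates.add(union)
        # Support counting for the surviving candidates.
        frequent = {}
        for c in candidates:
            support = sum(1 for t in transactions if c <= t)
            if support >= min_support:
                frequent[c] = support
        result.update(frequent)
        k += 1
    return result


def build_dictionary(utterances: List[List[str]], min_support: int) -> List[str]:
    """Hypothetical helper: keep the words that are frequent on their own."""
    transactions = [set(words) for words in utterances]
    itemsets = apriori(transactions, min_support)
    return sorted(next(iter(s)) for s in itemsets if len(s) == 1)
```

For example, with the utterances `[["so", "angry", "traffic"], ["angry", "traffic", "jam"], ["happy", "road"], ["angry", "jam"]]` and `min_support=2`, `build_dictionary` returns `["angry", "jam", "traffic"]`; the frequent pairs `{angry, traffic}` and `{angry, jam}` are also mined, while `{traffic, jam}` (support 1) is discarded.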
