Scene light source estimation accuracy improving method based on camera response function

A technology involving camera response functions and light sources, applied in the fields of computer vision and image processing. It addresses the problem that existing methods cannot effectively improve the accuracy of scene light source estimation, and achieves improved estimation accuracy.

AI Technical Summary

Problems solved by technology

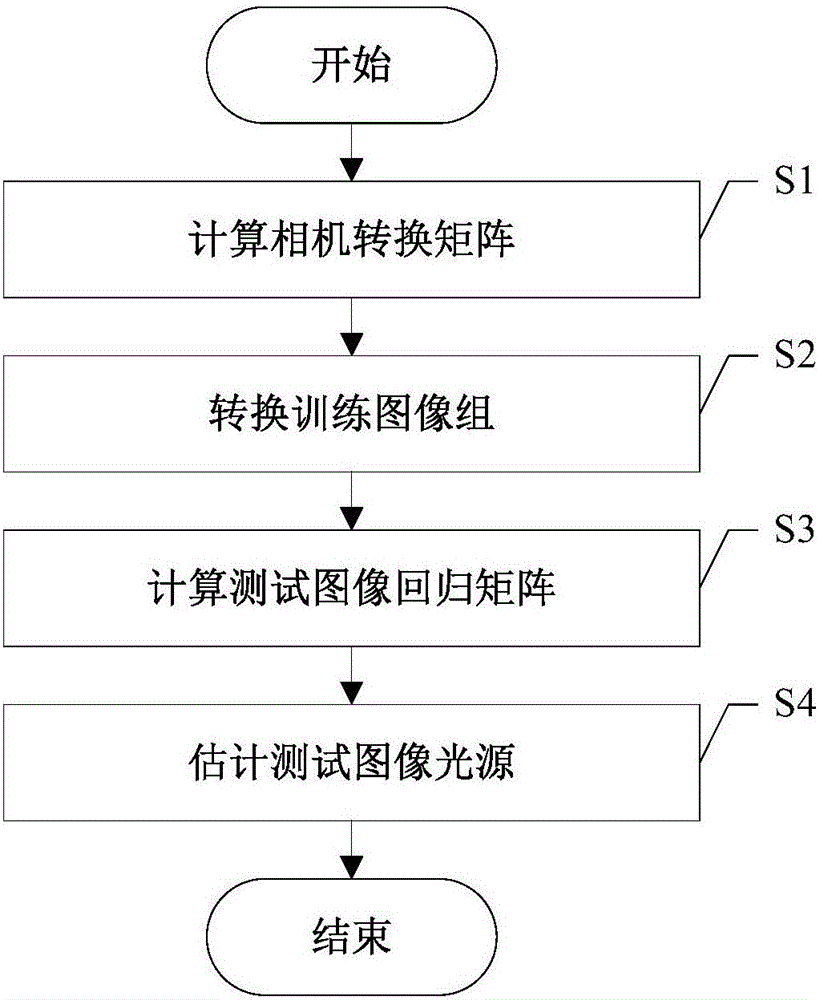

Examples

Embodiment 1

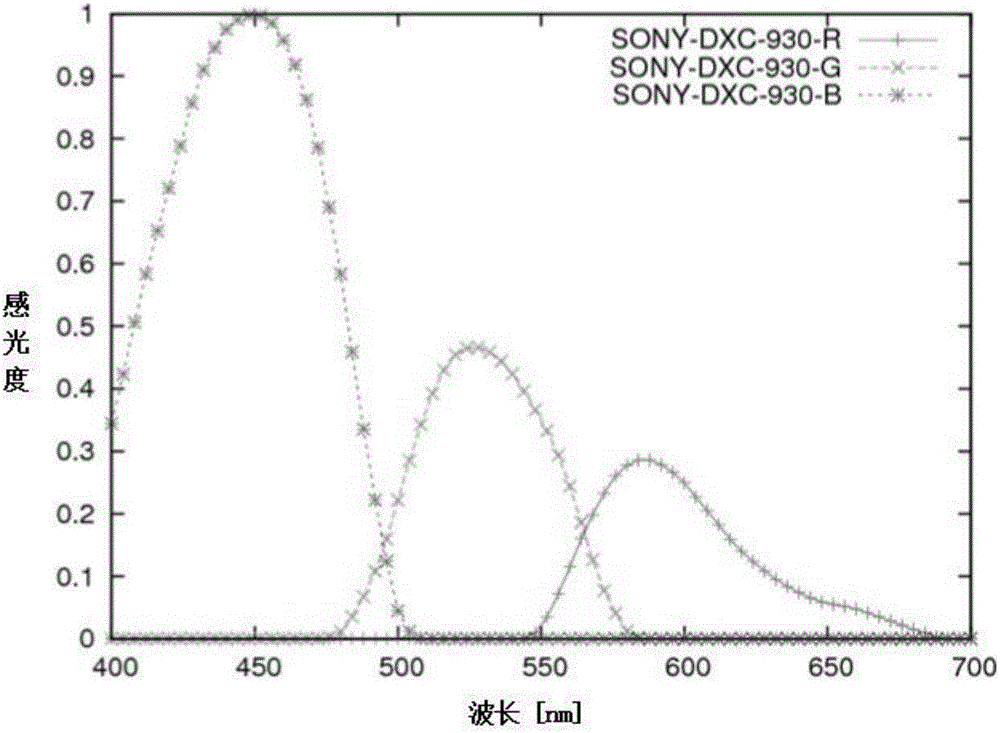

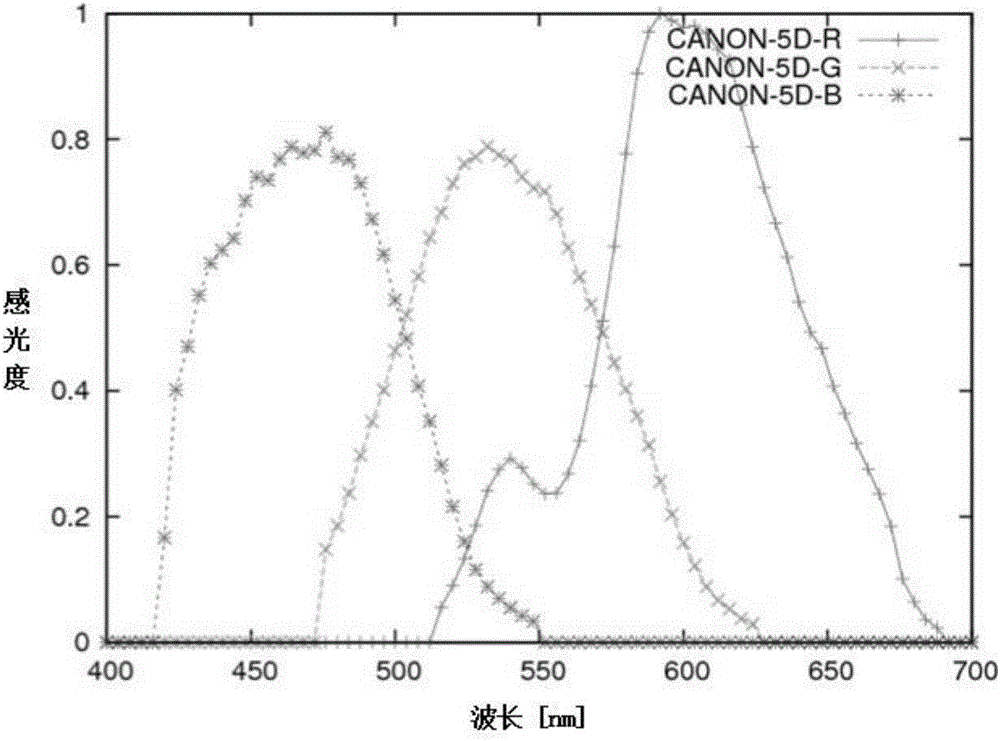

[0031] From a currently internationally recognized image library website for scene light source color estimation, download the synthetic surface reflectance set S, together with the 321 color-cast images T captured by that image library with a SONY DXC 930 camera and their ground-truth light sources L, to serve as the training set. In addition, download the image IMG_0332.png, captured with a CANON 5D camera, from another image library to serve as the test image; its size is 1460×2193 pixels. Neither the training images nor the test image has undergone any in-camera preprocessing (such as white balance or gamma correction). The detailed steps of the present invention are as follows:

[0032] S1. Calculate the camera conversion matrix: for the same given surface reflectances, compute the conversion from the color sensitivity response function of the camera used for the training images (SONY DXC930) to that of the camera used for the test image (CANON 5D) by the least squares meth...
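Because the description of step S1 is truncated, the following is only a minimal sketch of one common way to fit a camera conversion matrix by least squares, assuming the conversion is a 3×3 linear map between the two cameras' RGB responses to the same surfaces. The function names `camera_responses` and `camera_conversion_matrix` and the array layouts are hypothetical, not taken from the patent.

```python
import numpy as np


def camera_responses(sensitivity, reflectance, illuminant):
    """RGB responses of a camera to a set of surfaces (hypothetical helper).

    sensitivity: (W, 3) spectral sensitivities sampled at W wavelengths
    reflectance: (N, W) surface reflectance spectra
    illuminant:  (W,)   spectral power distribution of the light
    Returns an (N, 3) array: sum over wavelengths of sensitivity * reflectance * light.
    """
    return (np.asarray(reflectance) * np.asarray(illuminant)) @ np.asarray(sensitivity)


def camera_conversion_matrix(resp_train, resp_test):
    """Least-squares 3x3 matrix M such that resp_train @ M approximates resp_test.

    Assumption: the conversion is linear in RGB; both inputs are (N, 3)
    responses of the two cameras to the same N surfaces.
    """
    resp_train = np.asarray(resp_train, dtype=float)
    resp_test = np.asarray(resp_test, dtype=float)
    if resp_train.shape != resp_test.shape or resp_train.shape[1] != 3:
        raise ValueError("expected two (N, 3) arrays of matching shape")
    # Minimizes the Frobenius norm ||resp_train @ M - resp_test|| column by column.
    M, *_ = np.linalg.lstsq(resp_train, resp_test, rcond=None)
    return M
```

In use, one would evaluate `camera_responses` for both cameras on the synthetic surfaces S and pass the two results to `camera_conversion_matrix`. A linear 3×3 model is chosen here only because it is the simplest least-squares fit. The actual formulation in the patent may differ.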

Embodiment 2

[0071] Correct the pixel values of each color component of the original input image using the light source color value of that component calculated in step S4. Taking the test-image pixel value (0.459, 0.545, 0.472) input in step S4 as an example, the corrected result is (0.459 / 0.3765, 0.545 / 0.3435, 0.472 / 0.2800) = (1.2191, 1.5866, 1.6857). Multiplying these corrected values by the standard white light factor (the stated results correspond to a factor of about 0.5773, i.e. 1/√3) gives (0.7038, 0.9160, 0.9732) as the pixel value of the final corrected output image. Performing the same calculation for every other pixel of the original input image yields the corrected color image.
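The per-channel correction in [0071] can be sketched as a diagonal (per-channel) scaling. This is a minimal sketch, not the patent's code. It assumes the "standard white light factor" is 1/√3, a value inferred only because it reproduces the worked numbers above. The name `correct_image` is hypothetical.

```python
import numpy as np

# Assumption: standard white light factor taken as 1/sqrt(3); inferred from the
# worked example, not stated explicitly in the source text.
WHITE_FACTOR = 1.0 / np.sqrt(3.0)


def correct_image(image, illuminant, white_factor=WHITE_FACTOR):
    """Divide each color channel by the estimated light source color, then scale.

    image:      (..., 3) array of RGB values, e.g. one pixel or an (H, W, 3) image
    illuminant: (3,) estimated light source color per channel (from step S4)
    """
    image = np.asarray(image, dtype=float)
    illuminant = np.asarray(illuminant, dtype=float)
    if illuminant.shape != (3,) or np.any(illuminant <= 0):
        raise ValueError("illuminant must be three positive values")
    # Broadcasting applies the division along the last (channel) axis.
    return image / illuminant * white_factor


# Worked example from [0071]: one pixel of the test image.
pixel = correct_image([0.459, 0.545, 0.472], [0.3765, 0.3435, 0.2800])
```

Computed without intermediate rounding, the result agrees with the values stated in [0071] to within about 1e-4. The same call works unchanged on a full (H, W, 3) image array.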

[0072] Figure 4 shows the original image to be corrected, and Figure 5 shows the image after color-tone correction using the light source color values calculated in step S4.
