A GPU-accelerated batch processing method for multiplying full vectors by homogeneous sparse matrices

A sparse matrix processing technology, applied in processor architecture/configuration, complex mathematical operations, and similar fields. It addresses the problem that multiplications of full vectors by homogeneous sparse matrices have not been batch-processed, so programs cannot fully exploit the advantages of GPUs. The method improves parallelism, shortens solution time, and reduces computing time.

Embodiment Construction

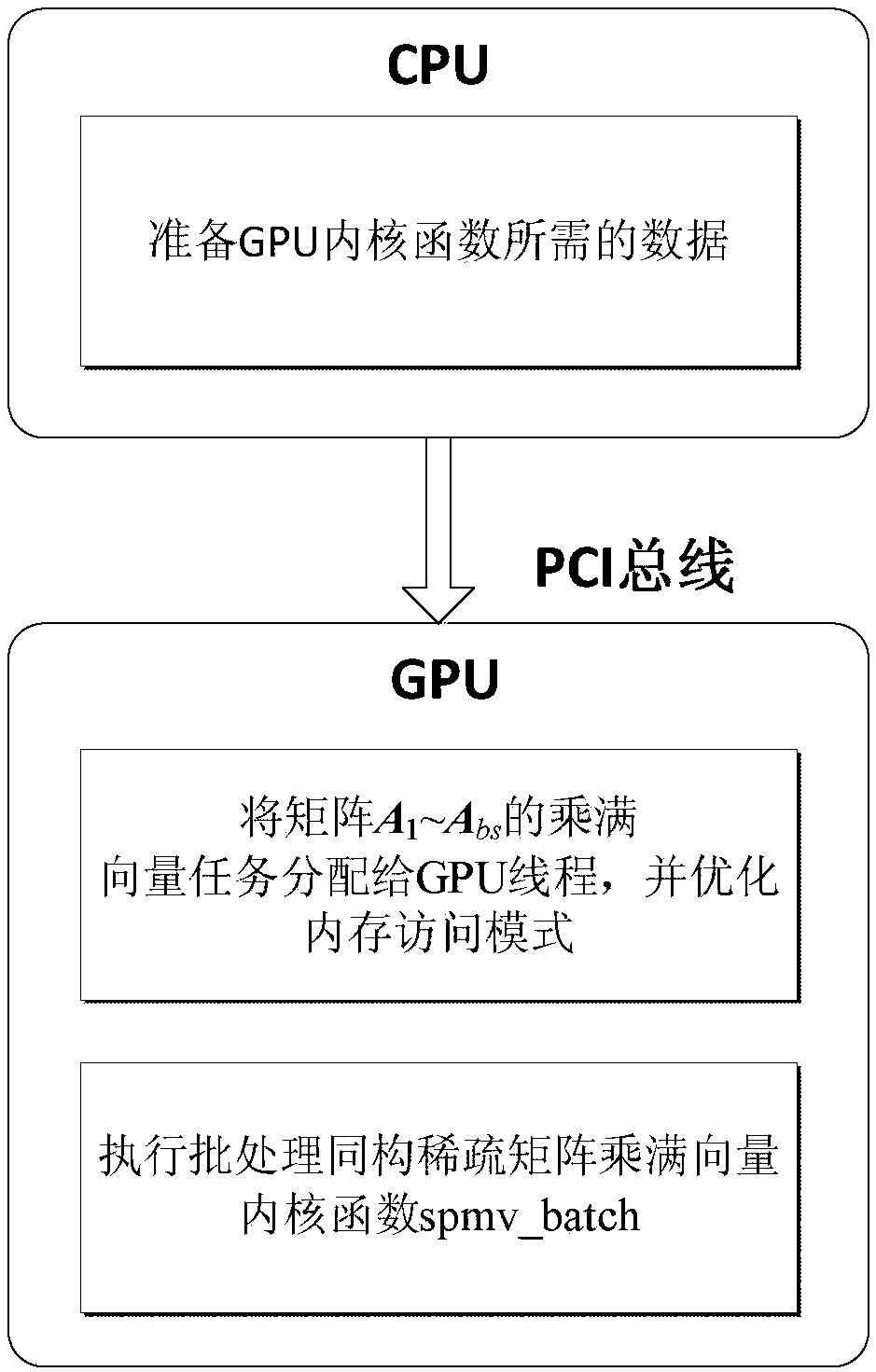

[0034] As shown in Figure 3, the present invention provides a GPU-accelerated batch processing method for multiplying homogeneous (isomorphic) sparse matrices by full vectors. A large number of homogeneous sparse matrices $A_1, \ldots, A_{bs}$ are each multiplied by a full vector: $A_1 x_1 = b_1, \ldots, A_{bs} x_{bs} = b_{bs}$, where $x_1, \ldots, x_{bs}$ are the multiplied full vectors, $b_1, \ldots, b_{bs}$ are the resulting full vectors, and $bs$ is the number of matrices to be batch-processed. The method includes the following steps:
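
Here "homogeneous" (isomorphic) means that all $A_i$ have the same dimensions and the same nonzero pattern, differing only in the values stored at those positions; step (1) relies on this to share one set of index arrays. The following is a minimal, non-authoritative Python sketch, assuming small dense row-major lists as input. The helper names `shape`, `nonzero_pattern`, `is_homogeneous`, and `batch_matvec_dense` are hypothetical and serve only as a sequential reference for what the batch computes, not as the patented method.

```python
from typing import List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]


def shape(a: Matrix) -> Tuple[int, int]:
    """(rows, cols) of a rectangular row-major matrix."""
    return (len(a), len(a[0]) if a else 0)


def nonzero_pattern(a: Matrix) -> List[List[int]]:
    """Column indices of the nonzero entries, row by row."""
    return [[j for j, v in enumerate(row) if v != 0] for row in a]


def is_homogeneous(mats: Sequence[Matrix]) -> bool:
    """True if all matrices share the first one's shape and nonzero pattern."""
    if not mats:
        return True
    ref_shape, ref_pattern = shape(mats[0]), nonzero_pattern(mats[0])
    return all(
        shape(m) == ref_shape and nonzero_pattern(m) == ref_pattern
        for m in mats[1:]
    )


def batch_matvec_dense(
    mats: Sequence[Matrix], xs: Sequence[Sequence[float]]
) -> List[List[float]]:
    """Sequential reference: b_i = A_i x_i for every matrix i in the batch."""
    return [
        [sum(a_rj * x_j for a_rj, x_j in zip(row, x)) for row in a]
        for a, x in zip(mats, xs)
    ]
```

For example, `[[4, 0, 1], [0, 2, 0], [3, 0, 5]]` and `[[1, 0, 2], [0, 7, 0], [6, 0, 8]]` are homogeneous (same positions, different values), whereas replacing the first row of the second matrix with `[1, 1, 0]` breaks the shared pattern.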

[0035] (1) In the CPU, all matrices $A_1, \ldots, A_{bs}$ are stored in compressed sparse row (CSR) format. The matrices $A_1, \ldots, A_{bs}$ share the same row offset array CSR_Row and column index array CSR_Col; the row offset element CSR_Row[k] stores the total number of nonzero elements before the k-th row of the matrix, with k ranging from 1 to n+1. The numeric values of each matrix are stored in its own value array $\mathrm{CSR\_Val}_1, \ldots, \mathrm{CSR\_Val}_{bs}$, the multiplied vectors are stored in arrays $x_1, \ldots, x_{bs}$, and the resulting full vectors are stored in arrays $b_1, \ldots, b_{bs}$...
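
With the shared CSR arrays, and in 0-based indexing, entry $r$ of each result is $b_i[r] = \sum_{p=\mathrm{CSR\_Row}[r]}^{\mathrm{CSR\_Row}[r+1]-1} \mathrm{CSR\_Val}_i[p]\, x_i[\mathrm{CSR\_Col}[p]]$, and every (matrix, row) pair can be computed independently. The sketch below is a CPU-side Python illustration under stated assumptions, not the patent's GPU kernel: it uses 0-based indexing (the text counts rows from 1), the function names `to_shared_csr` and `batch_spmv` are hypothetical, and the one-thread-per-(matrix, row) mapping imitated by the flat index `tid` is an assumed, common choice rather than the method's documented thread layout.

```python
from typing import List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]


def to_shared_csr(
    mats: Sequence[Matrix],
) -> Tuple[List[int], List[int], List[List[float]]]:
    """Build one shared (csr_row, csr_col) pair plus one value array per matrix.

    0-based: csr_row has n + 1 entries and csr_row[r] counts the nonzeros
    before row r. Assumes every matrix shares mats[0]'s nonzero pattern.
    """
    ref = mats[0]
    csr_row: List[int] = [0]
    csr_col: List[int] = []
    for row in ref:
        csr_col.extend(j for j, v in enumerate(row) if v != 0)
        csr_row.append(len(csr_col))
    # Each matrix is sampled at the same shared (row, col) positions,
    # so only the value arrays differ across the batch.
    csr_vals = [
        [m[r][csr_col[p]] for r in range(len(ref)) for p in range(csr_row[r], csr_row[r + 1])]
        for m in mats
    ]
    return csr_row, csr_col, csr_vals


def batch_spmv(
    csr_row: Sequence[int],
    csr_col: Sequence[int],
    csr_vals: Sequence[Sequence[float]],
    xs: Sequence[Sequence[float]],
) -> List[List[float]]:
    """Compute b_i = A_i x_i for all i using the shared index arrays."""
    n = len(csr_row) - 1
    bs = len(csr_vals)
    out = [[0.0] * n for _ in range(bs)]
    # Flat work index tid -> (matrix i, row r). Iterations are independent,
    # imitating one GPU thread per (matrix, row) pair (assumed mapping).
    for tid in range(bs * n):
        i, r = divmod(tid, n)
        acc = 0.0
        for p in range(csr_row[r], csr_row[r + 1]):
            acc += csr_vals[i][p] * xs[i][csr_col[p]]
        out[i][r] = acc
    return out
```

Because `csr_row` and `csr_col` are built once and read for every matrix, only the `bs` value arrays and vectors grow with the batch size, and work items handling row r of different matrices read the same index entries.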

© 2025 PatSnap. All rights reserved.