Eye and mouth state recognition method based on convolutional neural network

A state recognition technique based on a convolutional neural network, applied in the field of image recognition. It addresses the problems of recognition accuracy that is hard to improve further, few applicable scenarios, and low detection efficiency. It is easy to port and deploy, has a wide range of applications, and improves robustness.

Embodiment Construction

[0030] To help the examiner further understand the structure, features, and other purposes of the present invention, preferred embodiments are described in detail below. The described preferred embodiments only illustrate the technical solutions of the present invention and do not limit it.

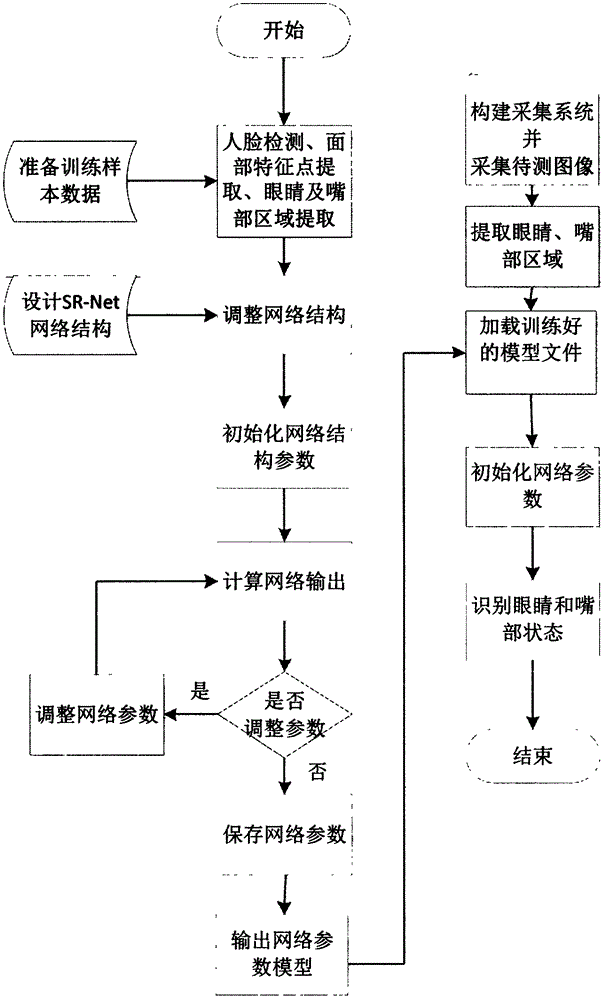

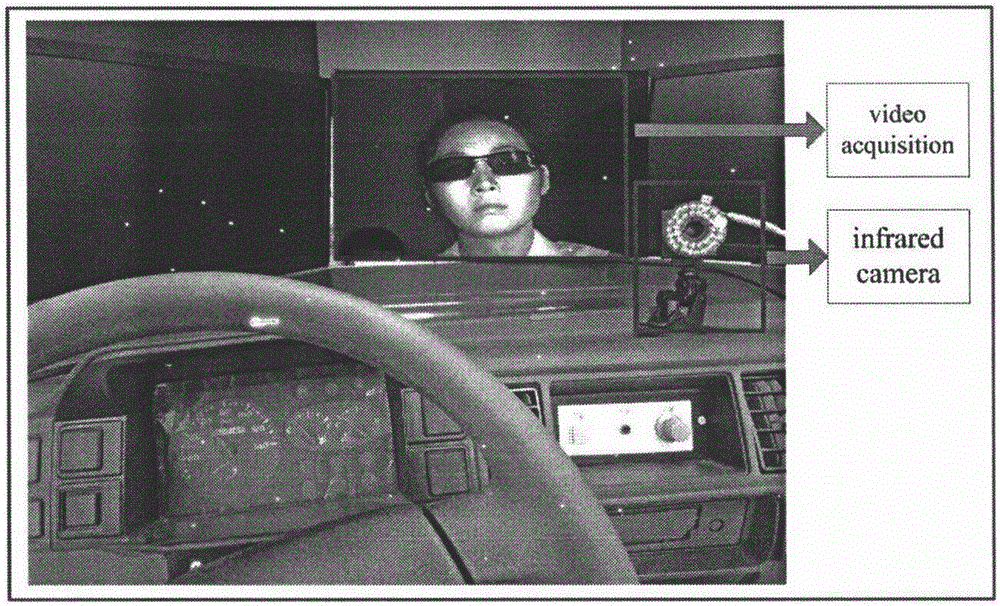

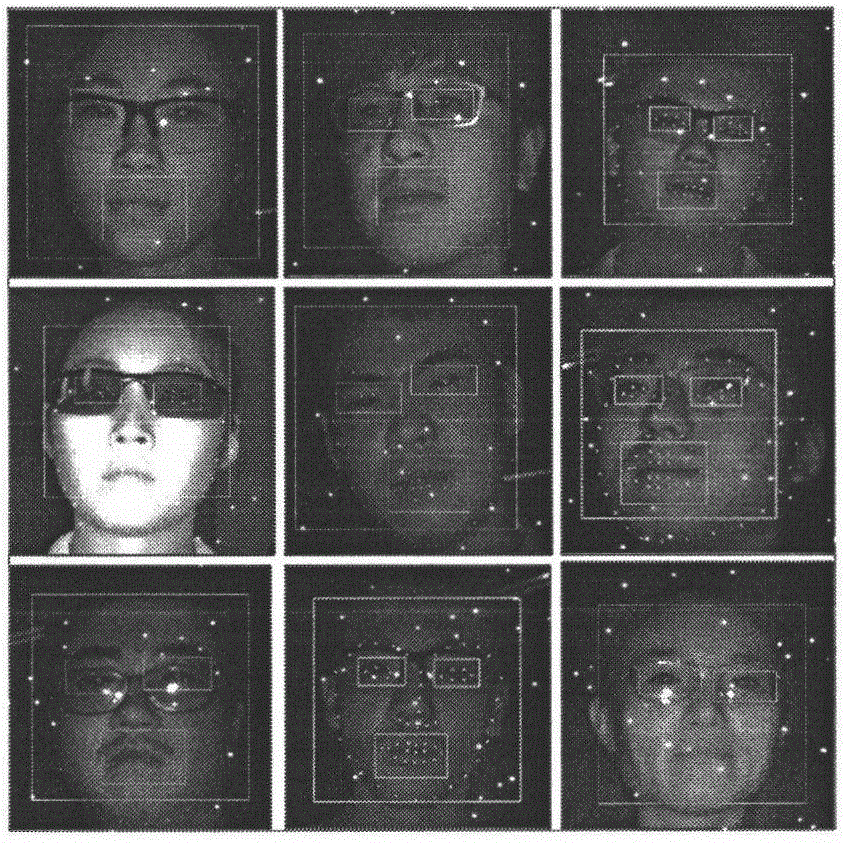

[0031] The process flow of the present invention is shown in Figure 1. First, the face region of interest is detected using Haar features combined with the AdaBoost algorithm (or other methods). Facial feature points are then located from the preliminary face detection result by a combination of random forest and linear regression, and the eye and mouth regions are extracted. Next, following the basic structure of a convolutional neural network (convolutional layers, downsampling layers, and fully connected layers) and the LeNet-5 network structure, the neural network is optimized by convolution of the local ...
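The two steps described in this paragraph (cropping a region around detected feature points, then passing it through a LeNet-5-style stack of convolutional, downsampling, and fully connected layers) can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented network: the layer sizes follow the classic LeNet-5 layout, the 32x32 input and the two output classes (for example open/closed) are assumed, and the helper names `landmark_box` and `lenet5_shapes` are hypothetical. Face and landmark detection are not shown.

```python
# Sketch only: sizes follow the classic LeNet-5 layout, not the patent's
# optimized network, whose details are not given in the text above.

def landmark_box(points, margin, width, height):
    """Bounding box (left, top, right, bottom) around feature points,
    padded by `margin` pixels and clipped to the image bounds."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    left = max(0, min(xs) - margin)
    top = max(0, min(ys) - margin)
    right = min(width, max(xs) + margin)
    bottom = min(height, max(ys) + margin)
    return left, top, right, bottom


def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a convolution over a square input."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, window=2):
    """Output side length of non-overlapping downsampling."""
    return size // window


def lenet5_shapes(input_size=32, num_classes=2):
    """Return (layer name, output shape) pairs for a LeNet-5-style stack.

    input_size and num_classes are assumptions made for illustration.
    """
    shapes = []
    s = conv_out(input_size, 5)              # C1: 6 maps, 5x5 kernels
    shapes.append(("C1 conv", (6, s, s)))
    s = pool_out(s)                          # S2: 2x2 downsampling
    shapes.append(("S2 downsample", (6, s, s)))
    s = conv_out(s, 5)                       # C3: 16 maps, 5x5 kernels
    shapes.append(("C3 conv", (16, s, s)))
    s = pool_out(s)                          # S4: 2x2 downsampling
    shapes.append(("S4 downsample", (16, s, s)))
    shapes.append(("flatten", (16 * s * s,)))
    shapes.append(("C5 fully connected", (120,)))
    shapes.append(("F6 fully connected", (84,)))
    shapes.append(("output", (num_classes,)))
    return shapes


if __name__ == "__main__":
    # Hypothetical eye-corner points in a 100x100 face crop.
    print(landmark_box([(10, 20), (30, 25)], margin=5, width=100, height=100))
    for name, shape in lenet5_shapes():
        print(name, shape)
```

Tracking shapes this way makes it easy to check that a cropped eye or mouth patch, once resized to the assumed input size, still yields a valid feature map after each convolution and downsampling stage.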
