Modulation identification method based on convolutional neural network

This modulation recognition technique, based on a convolutional neural network and applied in the field of signal modulation recognition, addresses the problem of poor modulation recognition performance.

Examples

Embodiment 1

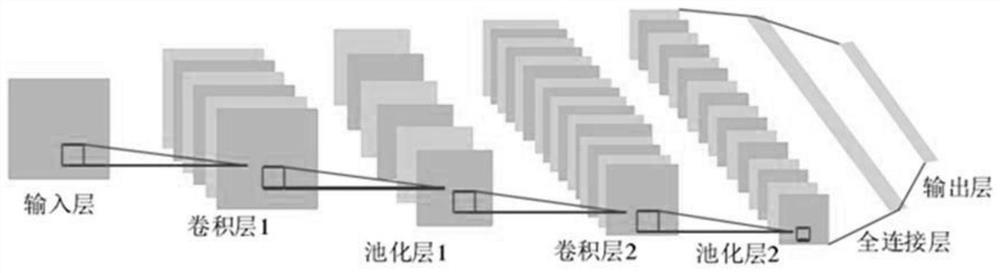

[0075] As shown in Figures 1-8, this embodiment relates to a modulation recognition method based on a convolutional neural network. The method comprises the following steps:

[0076] S1: Select the modulated signal data set and design the convolutional neural network model structure;

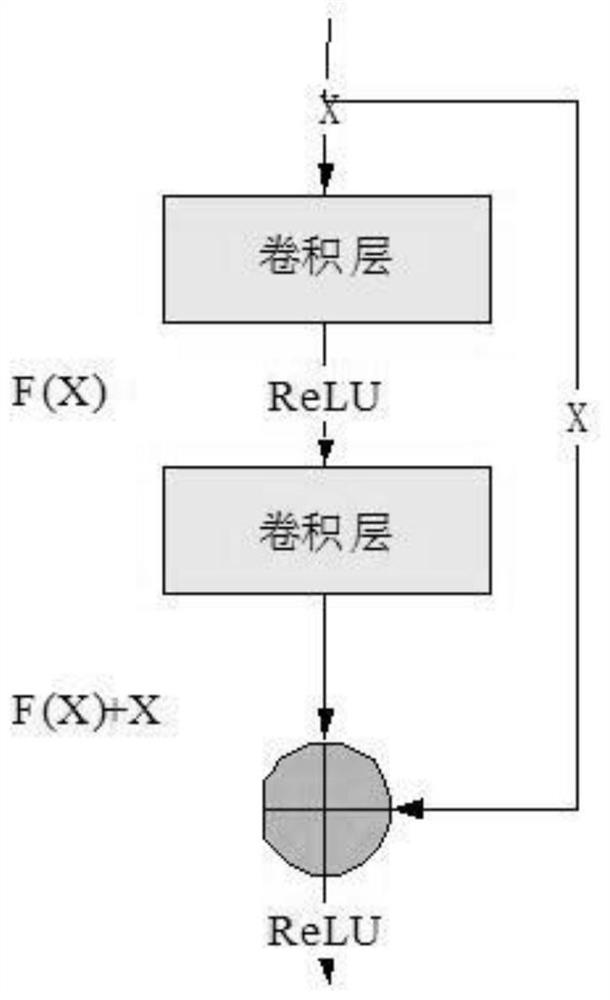

[0077] S2: Construct residual units in the convolutional neural network model using residual (skip) connections;

[0078] S3: Apply batch normalization, one mini-batch at a time, to the data in the network layers of the convolutional neural network model;

[0079] S4: Set the convolutional neural network parameters;

[0080] S5: Train the convolutional neural network on the training set, applying random dropout;

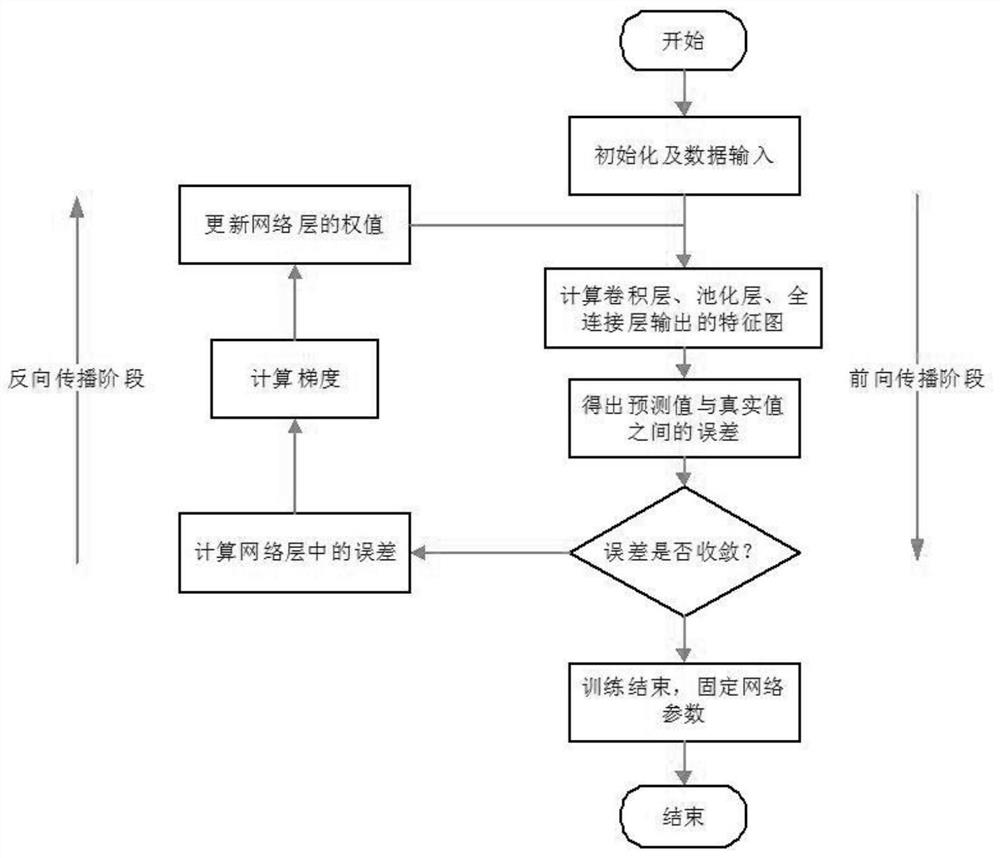

[0081] S5.1: Initialize network parameters;

[0082] S5.2: Calculate the feature maps output by the convolutional layer, pooling layer, and fully connected layer;

[0083] S5.3: Obtain the error between the predicted value and the actual value;

[0084] S5.4: Determine whether the error has converged...
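The S5.1 to S5.4 loop can be illustrated with a minimal NumPy sketch. It rests on several assumptions that do not come from the patent. A single dense softmax layer stands in for the convolutional, pooling and fully connected layers. Plain gradient descent replaces the Adam optimizer. "Converged" is taken to mean that the change in cross-entropy between iterations falls below a tolerance `tol`. The names `train_until_converged`, `lr`, `tol` and `max_iters` are hypothetical.

```python
import numpy as np


def softmax(z):
    # Subtract the row-wise max for numerical stability before exponentiating.
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def cross_entropy(probs, labels):
    # Mean negative log-likelihood of the true class.
    n = labels.shape[0]
    return -np.mean(np.log(probs[np.arange(n), labels] + 1e-12))


def train_until_converged(x, y, num_classes, lr=0.5, tol=1e-6, max_iters=5000, seed=0):
    rng = np.random.default_rng(seed)
    # S5.1: initialise parameters (small random weights, zero bias).
    w = rng.normal(scale=0.01, size=(x.shape[1], num_classes))
    b = np.zeros(num_classes)
    prev_loss = np.inf
    for it in range(max_iters):
        # S5.2: forward pass; one dense layer stands in for the
        # convolutional / pooling / fully connected stack (assumption).
        probs = softmax(x @ w + b)
        # S5.3: error between predicted and actual labels.
        loss = cross_entropy(probs, y)
        # S5.4: stop once the error has converged (change below tol).
        if abs(prev_loss - loss) < tol:
            break
        prev_loss = loss
        # Not converged: gradient of mean cross-entropy w.r.t. the logits,
        # then a plain gradient-descent update (the patent uses Adam).
        grad = probs.copy()
        grad[np.arange(len(y)), y] -= 1.0
        grad /= len(y)
        w -= lr * (x.T @ grad)
        b -= lr * grad.sum(axis=0)
    return w, b, loss, it
```

On a toy problem such as two well-separated Gaussian clusters, the loop exits once the loss flattens out. `np.argmax(x @ w + b, axis=1)` then gives the predicted classes.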

Embodiment 2

[0115] As shown in Figures 1-8, this embodiment builds on Embodiment 1, and the parameter settings of the proposed convolutional neural network are given in Table 2. Table 2 lists the parameters of the first residual unit (Unit1), the second residual unit (Unit2), the global average pooling layer (GAP), and the Softmax classification layer. The remaining three residual units use the same parameters as the second residual unit. The number of convolution kernels is set to 32. The first convolution kernel in each residual unit is 1×1. The subsequent convolution kernels are 2×3 in the first residual unit and 1×3 in the remaining residual units. Note that edge expansion (padding) is applied in both convolutional layers. Dropout layers are connected after the second and fourth residual units to improve the generalization pe...
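Table 2 is not reproduced here, so the following NumPy forward-pass sketch only mirrors the settings stated in the text:

- five residual units with 32 kernels each;
- a 1×1 convolution at the start of every unit;
- 2×3 kernels in Unit1 and 1×3 kernels in the other units;
- size-preserving padding;
- dropout after units 2 and 4;
- global average pooling followed by softmax.

Several details are assumptions and are not taken from the patent:

- where the skip connection sits (around the two padded convolutions, starting from the 1×1 output);
- batch normalization after each padded convolution, in training mode only;
- ReLU activations;
- a dropout rate of 0.5;
- He-style initialization;
- treating each 2×128 I/Q sample as a one-channel image;
- 11 output classes, which is the number of modulation types in RML2016.10a.

All function names are hypothetical.

```python
import numpy as np


def conv2d_same(x, kernels, bias):
    """Stride-1 2-D convolution (cross-correlation, as in CNN frameworks) with
    zero padding so the output keeps the input height and width.
    x: (N, C_in, H, W), kernels: (C_out, C_in, kh, kw), bias: (C_out,)."""
    n, _, h, w = x.shape
    c_out, _, kh, kw = kernels.shape
    ph, pw = kh - 1, kw - 1
    # Edge expansion: for even kernel sizes the extra row/column goes bottom/right.
    xp = np.pad(x, ((0, 0), (0, 0), (ph // 2, ph - ph // 2), (pw // 2, pw - pw // 2)))
    out = np.empty((n, c_out, h, w))
    for i in range(h):
        for j in range(w):
            patch = xp[:, :, i:i + kh, j:j + kw]  # (N, C_in, kh, kw)
            out[:, :, i, j] = np.einsum("ncij,ocij->no", patch, kernels) + bias
    return out


def batch_norm(x, gamma, beta, eps=1e-5):
    """Training-mode batch normalization: each channel is normalized with the
    mean/variance of the current mini-batch (running averages omitted)."""
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    x_hat = (x - mean) / np.sqrt(var + eps)
    return gamma.reshape(1, -1, 1, 1) * x_hat + beta.reshape(1, -1, 1, 1)


def relu(x):
    return np.maximum(x, 0.0)


def dropout(x, rate, rng, training=True):
    """Inverted dropout: zero each activation with probability `rate` during
    training and rescale the survivors by 1 / (1 - rate)."""
    if not training or rate == 0.0:
        return x
    keep = rng.random(x.shape) >= rate
    return x * keep / (1.0 - rate)


def init_unit(rng, c_in, kernel_hw, filters=32):
    """Hypothetical parameter layout for one residual unit (He-style init)."""
    kh, kw = kernel_hw

    def conv(ci, h, w):
        std = np.sqrt(2.0 / (ci * h * w))
        return rng.normal(scale=std, size=(filters, ci, h, w)), np.zeros(filters)

    return {"proj": conv(c_in, 1, 1),
            "conv_a": conv(filters, kh, kw), "conv_b": conv(filters, kh, kw),
            "bn_a": (np.ones(filters), np.zeros(filters)),
            "bn_b": (np.ones(filters), np.zeros(filters))}


def residual_unit(x, p):
    # 1x1 convolution maps the input to `filters` channels; its output is the shortcut.
    shortcut = conv2d_same(x, *p["proj"])
    y = relu(batch_norm(conv2d_same(shortcut, *p["conv_a"]), *p["bn_a"]))
    y = batch_norm(conv2d_same(y, *p["conv_b"]), *p["bn_b"])
    # Residual connection: add the shortcut before the final activation.
    return relu(y + shortcut)


def build(rng, num_classes=11, filters=32):
    # Unit1 uses 2x3 kernels; the remaining four units use 1x3 kernels.
    units = [init_unit(rng, 1, (2, 3), filters)]
    units += [init_unit(rng, filters, (1, 3), filters) for _ in range(4)]
    dense_w = rng.normal(scale=0.01, size=(filters, num_classes))
    return units, dense_w, np.zeros(num_classes)


def forward(x, units, dense_w, dense_b, rng, rate=0.5, training=True):
    for k, p in enumerate(units, start=1):
        x = residual_unit(x, p)
        if k in (2, 4):  # dropout after the 2nd and 4th residual units
            x = dropout(x, rate, rng, training)
    gap = x.mean(axis=(2, 3))  # global average pooling -> (N, filters)
    logits = gap @ dense_w + dense_b
    logits -= logits.max(axis=1, keepdims=True)
    e = np.exp(logits)
    return e / e.sum(axis=1, keepdims=True)  # softmax class probabilities
```

Calling `forward` on a batch of shape `(N, 1, 2, 128)` returns an `(N, 11)` array of class probabilities. Because the padding preserves size, every residual unit keeps the 2×128 spatial grid, and only the global average pooling step collapses it.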

Embodiment 3

[0120] In this embodiment, the experimental environment is 64-bit Ubuntu 16.04 LTS. The model is built with the Keras deep learning framework on a TensorFlow backend, and an NVIDIA GTX 1070Ti graphics card accelerates training. The public modulation signal dataset RML2016.10a is used as the experimental data: 60% of the data is used as the training set, 20% as the test set, and the remaining 20% as the validation set. Training uses the cross-entropy loss function and the Adam optimizer, with the number of training iterations set to 120. Early stopping controls whether the model continues to iterate: if the recognition rate does not change appreciably over 10 consecutive rounds of training, iteration stops. During training of the convolutional neural network, different batch sizes may affect the recognition rate and training ti...
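The data split and stopping rule can be sketched in plain Python. The text supplies the 60/20/20 proportions, the cap of 120 iterations and the 10-round window. The following are assumptions:

- each training round is one epoch;
- the monitored quantity is validation accuracy;
- "does not change appreciably" is read as failing to improve by more than a hypothetical `min_delta=1e-3`.

All function and class names are hypothetical. In the Keras setup described above, the built-in `keras.callbacks.EarlyStopping` callback with `patience=10` plays a comparable role.

```python
import random


def split_indices(n, seed=0, train_pct=60, test_pct=20):
    """Shuffle sample indices and split them into training, test and
    validation subsets (60% / 20% / remaining 20% by default)."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_train = n * train_pct // 100  # integer arithmetic avoids float rounding
    n_test = n * test_pct // 100
    return idx[:n_train], idx[n_train:n_train + n_test], idx[n_train + n_test:]


class EarlyStopping:
    """Signal a stop once the monitored recognition rate has failed to improve
    by more than `min_delta` for `patience` consecutive rounds."""

    def __init__(self, patience=10, min_delta=1e-3):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("-inf")
        self.wait = 0

    def update(self, accuracy):
        """Record one round's validation accuracy; return True to stop."""
        if accuracy > self.best + self.min_delta:
            self.best = accuracy
            self.wait = 0
        else:
            self.wait += 1
        return self.wait >= self.patience


def rounds_until_stop(val_accuracies, max_rounds=120, patience=10):
    """Replay a precomputed accuracy history and report how many rounds run."""
    stopper = EarlyStopping(patience=patience)
    for rnd, acc in enumerate(val_accuracies[:max_rounds], start=1):
        if stopper.update(acc):
            return rnd
    return min(len(val_accuracies), max_rounds)
```

RML2016.10a contains 220,000 samples, so `split_indices(220000)` yields 132,000 training, 44,000 test and 44,000 validation indices.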
