Human action recognition method based on depth motion maps with fuzzy boundary segmentation

A fuzzy-boundary action segmentation technique, applied in character and pattern recognition, instruments, computer components, and related fields. It addresses the loss of temporal information about actions and achieves higher classification accuracy, efficient segmentation, and improved robustness.


Embodiment Construction

[0036] To better illustrate the purpose, specific steps, and characteristics of the present invention, the invention is described in further detail below with reference to the accompanying drawings, taking the MSRAction3D data set as an example:

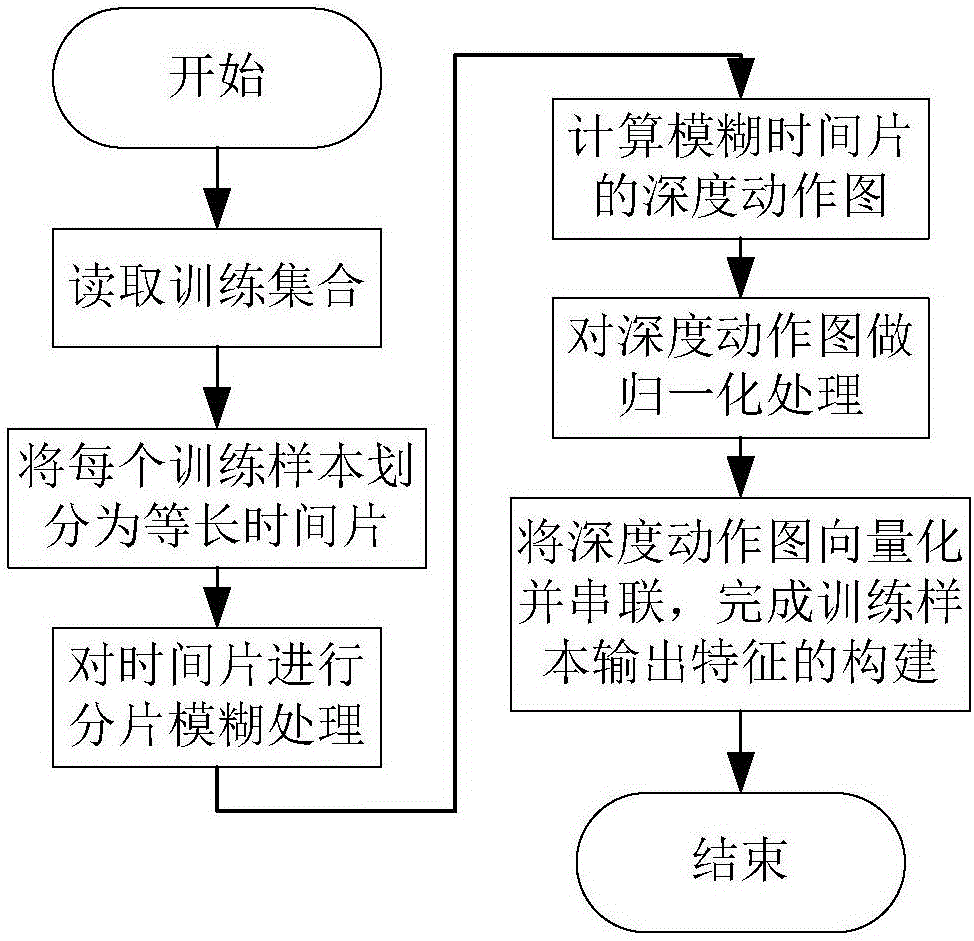

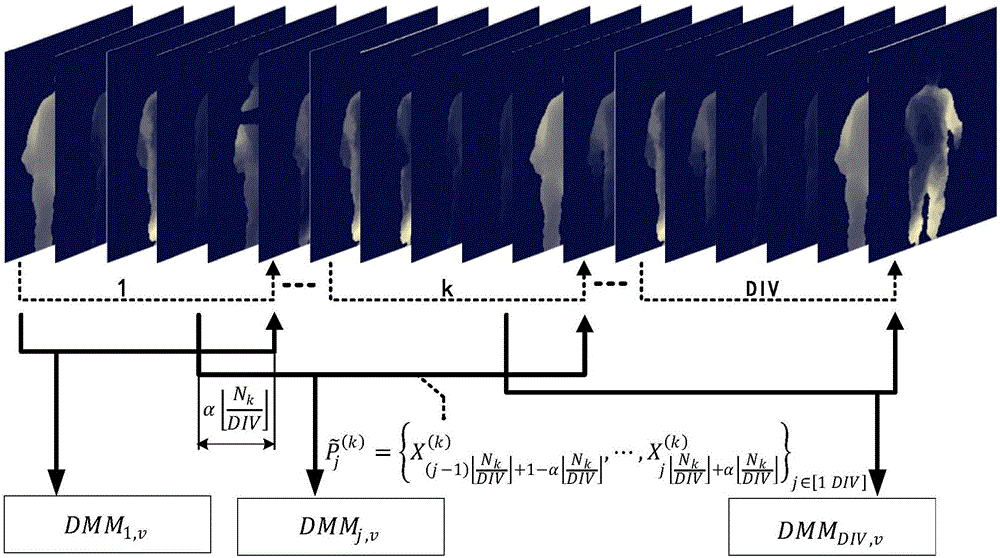

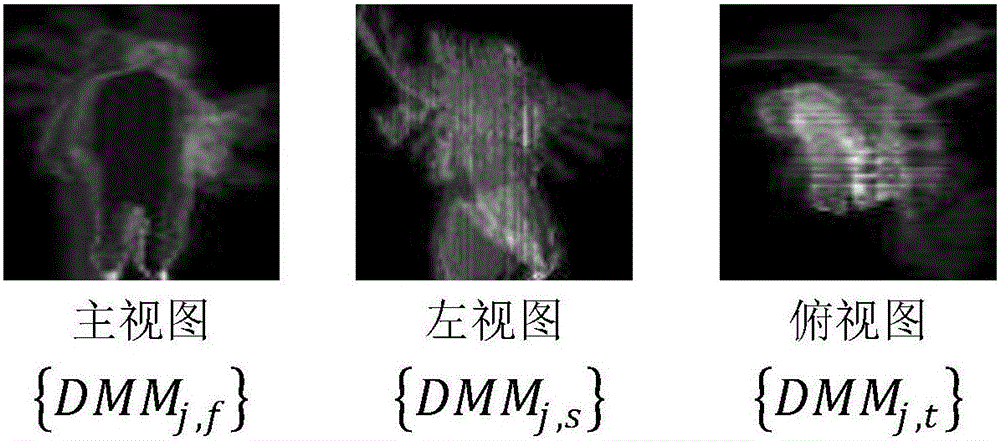

[0037] The present invention proposes a human behavior recognition method based on depth motion maps with fuzzy boundary segmentation; the flow chart of its feature extraction is shown in Figure 1. First, each sample is divided into equal segments to determine the boundaries, and the degree of blurring of each boundary is then determined by the parameter α. For each segmented video sub-sequence, its depth motion map (DMM) is calculated. The DMMs of all samples are resized to the same size and normalized, and the features of the sub-sequences are obtained after serial vectorization, which completes the construction of the output features of the training samples.
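The feature-extraction flow in [0037] can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the patented procedure. The text above does not say how α sets the blurring, so the sketch assumes each boundary is extended by α times the segment length into the neighbouring segment. It also assumes the DMM is the accumulated absolute difference between consecutive depth frames of a single (front) view, and that normalization divides by the maximum value. The function names, the default `n_segments`, `alpha`, and `size` values, and the nearest-neighbour resize are all hypothetical choices made for illustration.

```python
import numpy as np


def fuzzy_segments(n_frames, n_segments, alpha):
    """Return (start, end) frame ranges for equal segments with blurred boundaries.

    Assumption: each boundary is extended by alpha * segment_length frames
    into the neighbouring segment; the source does not give the exact rule.
    """
    seg_len = n_frames / n_segments
    overlap = int(round(alpha * seg_len))
    ranges = []
    for k in range(n_segments):
        start = max(0, int(round(k * seg_len)) - overlap)
        end = min(n_frames, int(round((k + 1) * seg_len)) + overlap)
        ranges.append((start, end))
    return ranges


def depth_motion_map(frames):
    """Accumulate absolute differences between consecutive depth frames (assumed DMM form)."""
    frames = np.asarray(frames, dtype=np.float64)
    return np.abs(np.diff(frames, axis=0)).sum(axis=0)


def resize_nearest(image, size):
    """Resize a 2-D array to `size` by nearest-neighbour sampling."""
    rows = np.arange(size[0]) * image.shape[0] // size[0]
    cols = np.arange(size[1]) * image.shape[1] // size[1]
    return image[np.ix_(rows, cols)]


def extract_features(frames, n_segments=3, alpha=0.5, size=(32, 32)):
    """Concatenate the resized, normalized DMM of every fuzzy segment into one vector."""
    frames = np.asarray(frames, dtype=np.float64)
    parts = []
    for start, end in fuzzy_segments(len(frames), n_segments, alpha):
        dmm = resize_nearest(depth_motion_map(frames[start:end]), size)
        peak = dmm.max()
        if peak > 0:  # leave an all-zero map unchanged to avoid dividing by zero
            dmm = dmm / peak
        parts.append(dmm.ravel())
    return np.concatenate(parts)
```

Under these assumptions, a 30-frame sample split into 3 segments with α = 0.5 yields the overlapping ranges (0, 15), (5, 25), and (15, 30), so frames near each boundary contribute to both neighbouring sub-sequences. With α = 0 the ranges reduce to plain equal segmentation.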

[0038] A human behavior reco...
