Interactive three-dimensional facial expression animation editing method, system, and extension method

A technology for 3D facial animation editing, applied in animation production, instruments, computing, and related fields. It addresses three problems: face model control elements that are poorly suited to interactive editing, the need for a large amount of offline processing and operations, and unnatural expressions.

Embodiment Construction

[0068] The following will clearly and completely describe the technical solutions in the embodiments of the present invention with reference to the accompanying drawings in the embodiments of the present invention. Obviously, the described embodiments are only some, not all, embodiments of the present invention.

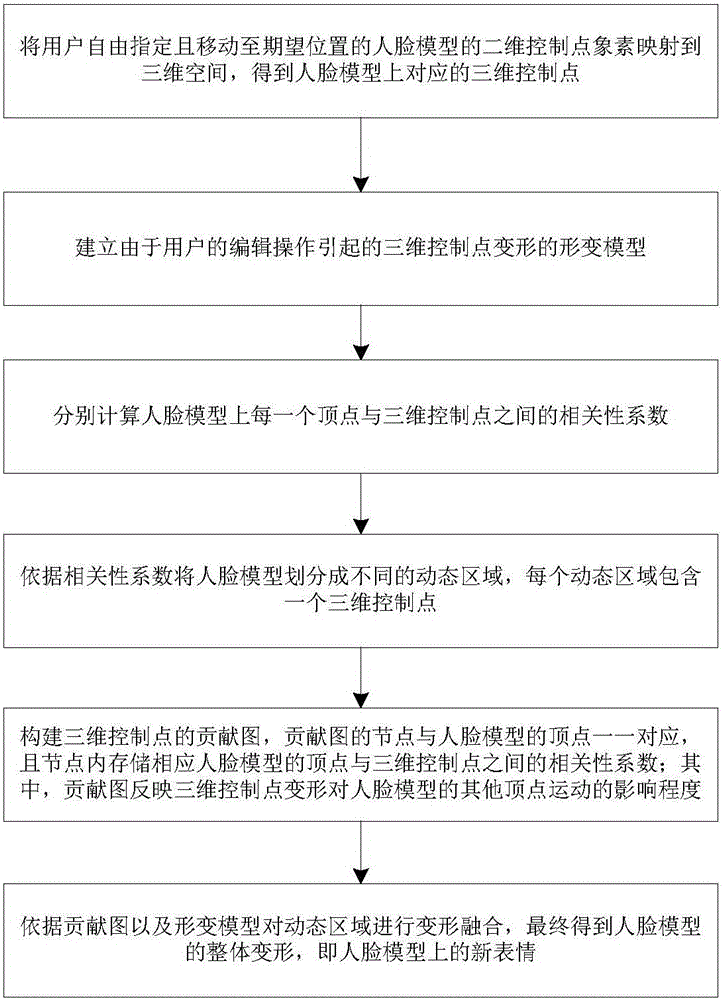

[0069] Figure 1 is a flowchart of the interactive three-dimensional facial expression animation editing method of the present invention. As shown in the figure, the method is executed on a server and specifically includes:

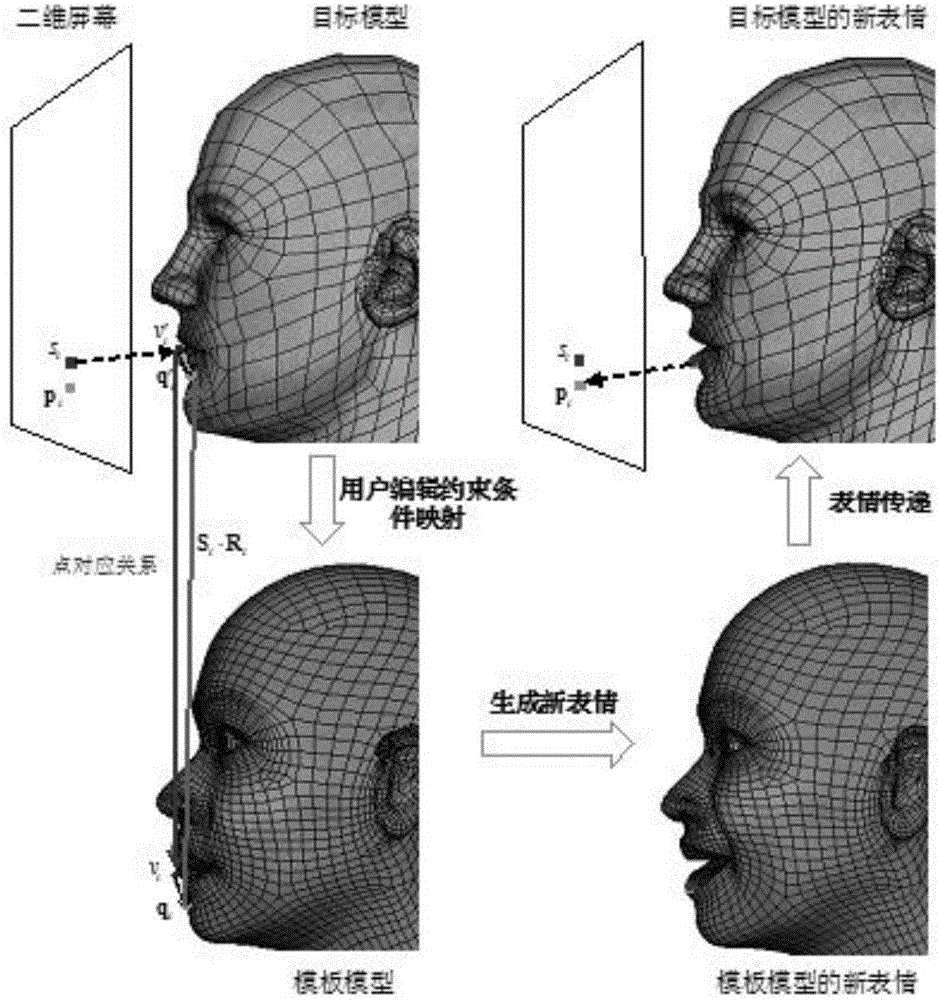

[0070] Step 1: The user freely designates two-dimensional control points on the face model and moves them to the desired positions. Map the pixels of these two-dimensional control points into three-dimensional space to obtain the corresponding three-dimensional control points on the face model.

[0071] Specifically, in order to provide users with an intuitive and convenient interaction method, the present ...
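The mapping in Step 1 can be illustrated with a minimal sketch. The text available here does not state how the mapping is computed, so everything below is an assumption made for illustration: a pinhole camera with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`, a known camera-space depth at the picked pixel, and a nearest-vertex lookup to snap the back-projected point onto the face mesh. The function names `unproject` and `nearest_vertex` are likewise hypothetical, and the method of the invention may differ.

```python
from math import dist


def unproject(u, v, depth, fx, fy, cx, cy):
    """Back-project pixel (u, v) at a known camera-space depth to a 3D point.

    Assumes a pinhole camera: fx, fy are focal lengths in pixels and
    (cx, cy) is the principal point. These parameters are illustrative.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def nearest_vertex(point, vertices):
    """Return the index of the mesh vertex closest to `point`."""
    return min(range(len(vertices)), key=lambda i: dist(point, vertices[i]))


# Hypothetical face-mesh vertices in camera space (not from the patent).
vertices = [(0.0, 0.0, 2.0), (0.5, 0.0, 2.0), (0.0, 0.5, 2.0)]

# Hypothetical camera intrinsics.
fx = fy = 100.0
cx, cy = 64.0, 64.0

# The user clicks pixel (88, 65); the depth there is assumed to be 2.0.
picked = unproject(88.0, 65.0, 2.0, fx, fy, cx, cy)

# Snap the back-projected point to the mesh to get the 3D control point.
control_index = nearest_vertex(picked, vertices)
```

In this sketch the same back-projection would be applied to the pixel where the user releases the control point, giving a 3D target position for the selected vertex.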
