Control instruction generation method based on face recognition and electronic equipment

This technology concerns control instructions and normalization and is applied in the field of image processing. It addresses problems such as a single operation mode, the failure to meet users' demand for more diverse and engaging operation modes, and the failure to meet the needs of mobile terminal applications.

Examples

Embodiment 1

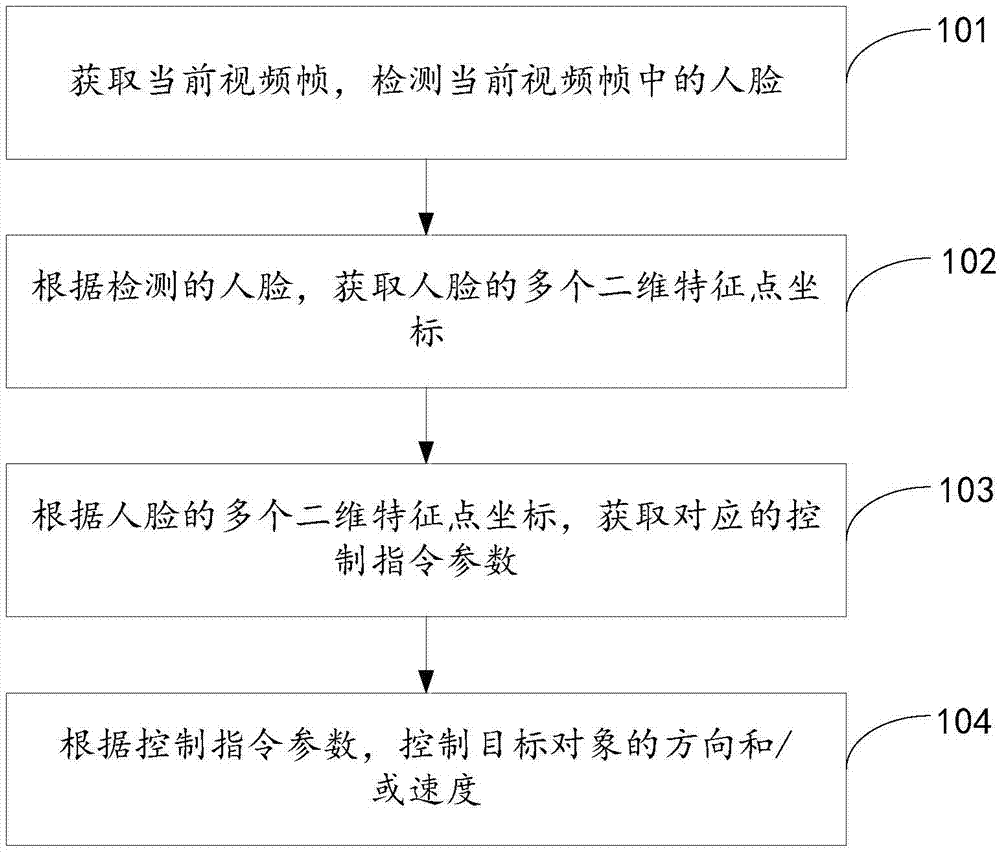

[0106] An embodiment of the present invention provides a method for generating control instructions based on face recognition. As shown in Figure 1, the method flow includes:

[0107] 101. Acquire a current video frame, and detect a human face in the current video frame.

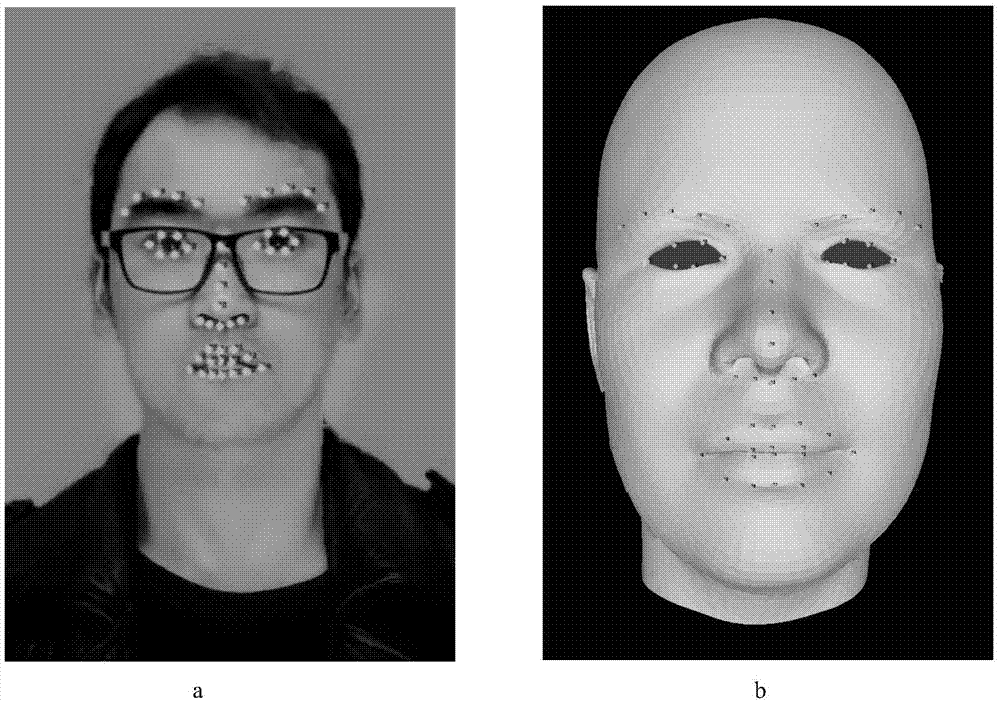

[0108] 102. Acquire multiple two-dimensional feature point coordinates of the human face according to the detected human face.

[0109] 103. According to the coordinates of multiple two-dimensional feature points of the human face, corresponding control instruction parameters are acquired.

[0110] Wherein, the control instruction parameters include the rotation angle of the human face and / or the distance between the upper lip and the lower lip of the human face.
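The lip-distance parameter can be illustrated with a minimal sketch. It assumes the two-dimensional feature points are `(x, y)` pixel coordinates and that one point each is available for the upper and lower lip. The function name `lip_distance` and the optional division by face height (so the value does not depend on how close the face is to the camera) are assumptions made for illustration, not details stated in the text above.

```python
import math


def lip_distance(upper_lip, lower_lip, face_top=None, face_bottom=None):
    """Distance between an upper-lip and a lower-lip feature point.

    All points are (x, y) tuples in pixels. If face_top and face_bottom are
    both given, the result is divided by the face height (an assumed
    normalization) so it is roughly independent of camera distance.
    """
    gap = math.dist(upper_lip, lower_lip)  # Euclidean distance in pixels
    if face_top is None or face_bottom is None:
        return gap
    face_height = math.dist(face_top, face_bottom)
    if face_height == 0:
        raise ValueError("face_top and face_bottom must be distinct points")
    return gap / face_height


# Hypothetical feature points: lips 30 px apart on a face 200 px tall.
raw_gap = lip_distance((100, 200), (100, 230))
relative_gap = lip_distance((100, 200), (100, 230), (100, 100), (100, 300))
```

Here `raw_gap` is a pixel distance and `relative_gap` is a unitless fraction of the face height; either could serve as the control instruction parameter, depending on whether scale invariance is wanted.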

[0111] Specifically, obtaining the rotation angle of the face includes:

[0112] Obtaining a pose estimation matrix of a face relative to a three-dimensional template face, where the three-dimensional template face is a frontal three-...
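As an illustration of how a rotation angle could be read from a pose estimation matrix, the sketch below assumes that the matrix contains a 3x3 rotation block `R` and that the rotation is composed as `R = Rz(roll) * Ry(yaw) * Rx(pitch)`. The axis convention, the function name `euler_angles_from_rotation`, and the choice of Euler angles are assumptions made for the example; the text above does not specify them.

```python
import math


def euler_angles_from_rotation(R):
    """Return (pitch, yaw, roll) in degrees from a 3x3 rotation matrix.

    Assumes R = Rz(roll) * Ry(yaw) * Rx(pitch), given as nested lists or
    tuples indexed R[row][col].
    """
    # cos(yaw) magnitude; near zero means the decomposition is degenerate.
    cos_yaw = math.hypot(R[0][0], R[1][0])
    yaw = math.atan2(-R[2][0], cos_yaw)
    if cos_yaw > 1e-6:
        pitch = math.atan2(R[2][1], R[2][2])
        roll = math.atan2(R[1][0], R[0][0])
    else:
        # Gimbal lock: pitch and roll are coupled, so fix roll at zero.
        pitch = math.atan2(-R[1][2], R[1][1])
        roll = 0.0
    return tuple(math.degrees(a) for a in (pitch, yaw, roll))


# Example: a face turned 30 degrees about the vertical (y) axis.
b = math.radians(30)
R_yaw_30 = [
    [math.cos(b), 0.0, math.sin(b)],
    [0.0, 1.0, 0.0],
    [-math.sin(b), 0.0, math.cos(b)],
]
pitch_deg, yaw_deg, roll_deg = euler_angles_from_rotation(R_yaw_30)
```

For the example matrix, the recovered yaw is approximately 30 degrees while pitch and roll are approximately zero.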

Embodiment 2

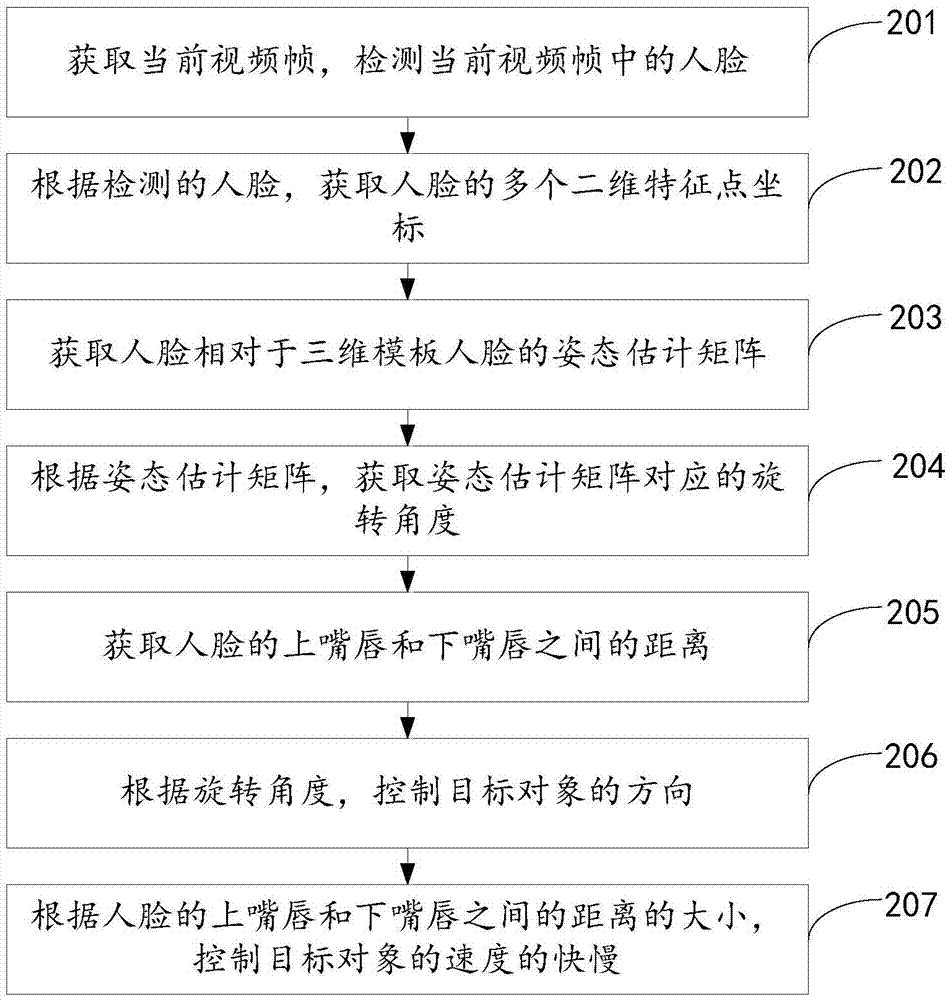

[0135] An embodiment of the present invention provides a method for generating a control instruction based on face recognition. In this embodiment, the control instruction parameters include the rotation angle of the face and the distance between the upper lip and the lower lip of the face, and these two parameters are used to control the direction and speed of the target object. As shown in Figure 2, the method flow includes:

[0136] 201. Acquire a current video frame, and detect a human face in the current video frame.

[0137] Specifically, the current video frame may be acquired through the camera according to a preset video frame acquisition instruction, and the embodiment of the present invention does not limit the specific manner of acquiring the current real-time video frame.

[0138] The method for detecting faces can be through traditional feature-based face detection methods, statistics-based face dete...
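How the two parameters of this embodiment might be turned into a control instruction can be sketched as follows. The sketch assumes that the rotation angle selects the direction and the lip distance sets the speed. The dead zone, the sign conventions (positive yaw means "right", positive pitch means "down"), the limits, and the function name `control_instruction` are all hypothetical values chosen for the example, not taken from the embodiment.

```python
def control_instruction(yaw_deg, pitch_deg, lip_gap,
                        dead_zone_deg=10.0, max_gap=0.2, max_speed=1.0):
    """Map face rotation and lip gap to a direction and a speed.

    yaw_deg, pitch_deg: face rotation angles in degrees.
    lip_gap: lip distance, assumed here to be normalized by face height.
    Angles inside the dead zone produce no movement on that axis.
    """
    if yaw_deg > dead_zone_deg:
        horizontal = "right"
    elif yaw_deg < -dead_zone_deg:
        horizontal = "left"
    else:
        horizontal = "none"

    if pitch_deg > dead_zone_deg:
        vertical = "down"
    elif pitch_deg < -dead_zone_deg:
        vertical = "up"
    else:
        vertical = "none"

    # Speed grows linearly with the lip gap and is clamped to [0, max_speed].
    ratio = min(max(lip_gap / max_gap, 0.0), 1.0)
    return {"horizontal": horizontal, "vertical": vertical,
            "speed": max_speed * ratio}


# Face turned 25 degrees sideways with the mouth half open.
instruction = control_instruction(yaw_deg=25.0, pitch_deg=0.0, lip_gap=0.1)
```

A dead zone is used so that small, unintentional head movements do not move the target object; whether the method described here applies such a threshold is not stated in the visible text.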

Embodiment 3

[0237] An embodiment of the present invention provides a method for generating a control instruction based on face recognition. In this embodiment, the control instruction parameter includes the rotation angle of the face, and the direction of the target object is controlled through the rotation angle of the face. As shown in Figure 5, the method flow includes:

[0238] 501. Acquire a current video frame, and detect a human face in the current video frame.

[0239] Specifically, this step is the same as step 201 in the second embodiment, and will not be repeated here.

[0240] 502. According to the detected human face, obtain a subset of the multiple two-dimensional feature point coordinates of the human face.

[0241] Specifically, the manner of obtaining the coordinates of the feature points in this step is the same as that of step 202 in the second embodiment, and will not be repeated here.

[0242] Preferably, some of the acquired two-dimensional f...
