Distortion-free integrated imaging three-dimensional display method based on Kinect

This integrated imaging three-dimensional display technology, applied in image enhancement, image analysis, and image data processing, addresses problems such as optical distortion, failure to consider the effect of color images on depth image restoration, and the inability to obtain high-quality continuous depth images.


Embodiment Construction

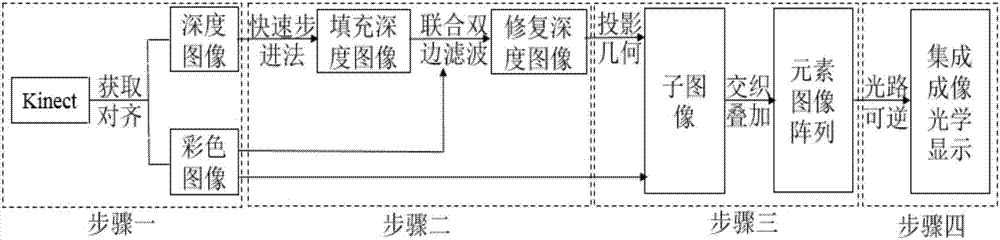

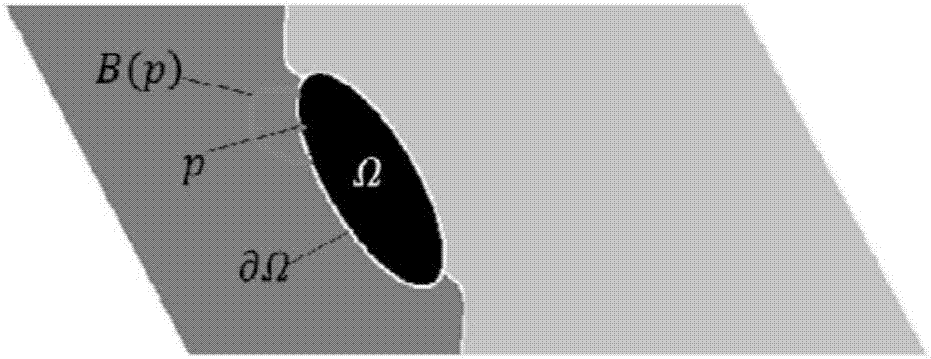

[0075] To make the implementation of the present invention clearer, the four steps of the invention are described below in full detail, with reference to the technical solution and the accompanying drawings.

[0076] As shown in Figure 1, the distortion-free integrated imaging three-dimensional display method based on the Kinect sensor comprises the following steps:

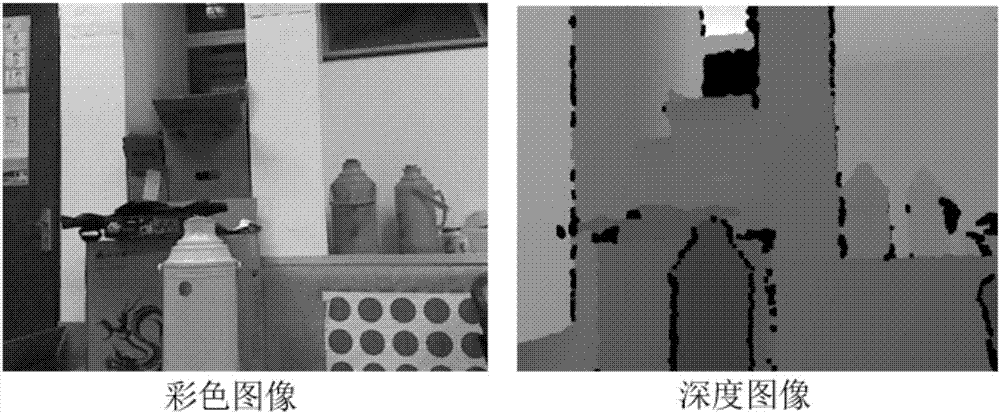

[0077] Step 1: calibrate the depth image and color image acquired by the Kinect sensor. The implementation consists of the following two parts:

[0078] 1. Calibrate the Kinect sensor

[0079] Ordinary cameras are usually calibrated by photographing a checkerboard calibration board and applying Zhang's calibration method or a similar method. In the Kinect depth image, however, the checkerboard pattern on the calibration board is not visible, so it is impossible to directly calibrate the infrared ...
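As background for the checkerboard calibration mentioned in this paragraph, the sketch below illustrates the first stage of Zhang's method: estimating the homography between the planar calibration board and its image. It is not the procedure of this patent. It uses synthetic data only, and the intrinsic matrix `K`, the board pose `R` and `t`, the board size, and all function names are hypothetical values chosen for illustration.

```python
import numpy as np

def checkerboard_points(rows, cols, square):
    """Planar corner coordinates (Z = 0) of a rows x cols corner grid."""
    xs, ys = np.meshgrid(np.arange(cols), np.arange(rows))
    return np.stack([xs.ravel(), ys.ravel()], axis=1).astype(float) * square

def project(K, R, t, pts_xy):
    """Pinhole projection of planar board points into pixel coordinates."""
    pts3d = np.column_stack([pts_xy, np.zeros(len(pts_xy))])
    cam = pts3d @ R.T + t          # board frame -> camera frame
    uvw = cam @ K.T                # camera frame -> homogeneous pixels
    return uvw[:, :2] / uvw[:, 2:3]

def estimate_homography(src, dst):
    """Direct linear transform: find H such that dst ~ H @ [src, 1]."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows))
    H = vt[-1].reshape(3, 3)       # null vector of the stacked system
    return H / H[2, 2]

def apply_homography(H, pts_xy):
    """Map planar points through H and dehomogenize."""
    hom = np.column_stack([pts_xy, np.ones(len(pts_xy))]) @ H.T
    return hom[:, :2] / hom[:, 2:3]

# Assumed (illustrative) intrinsics and board pose, units: pixels and mm.
K = np.array([[580.0, 0.0, 320.0],
              [0.0, 580.0, 240.0],
              [0.0, 0.0, 1.0]])
ax, ay = -0.1, 0.2                 # small tilts about the x and y axes
Rx = np.array([[1, 0, 0],
               [0, np.cos(ax), -np.sin(ax)],
               [0, np.sin(ax), np.cos(ax)]])
Ry = np.array([[np.cos(ay), 0, np.sin(ay)],
               [0, 1, 0],
               [-np.sin(ay), 0, np.cos(ay)]])
R = Ry @ Rx
t = np.array([-60.0, -40.0, 800.0])

board = checkerboard_points(rows=6, cols=9, square=25.0)
pixels = project(K, R, t, board)   # stands in for detected corners
H_est = estimate_homography(board, pixels)
```

In a full calibration, homographies from several board poses would be combined to solve for the intrinsic parameters. Because the checkerboard does not appear in the depth image, detected corners such as `pixels` cannot be obtained directly from it, which is the difficulty the paragraph describes.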
