Chinese text classification method based on super-deep convolution neural network structure model

A text classification technology based on convolutional neural networks, applied in the fields of natural language processing and deep learning. It addresses problems such as insufficient classification accuracy, difficulty in determining the convolution kernel size, and high vector dimensionality.



Embodiment 1

[0033] This embodiment provides a Chinese text classification method based on an ultra-deep convolutional neural network structure model. The method comprises the following steps:

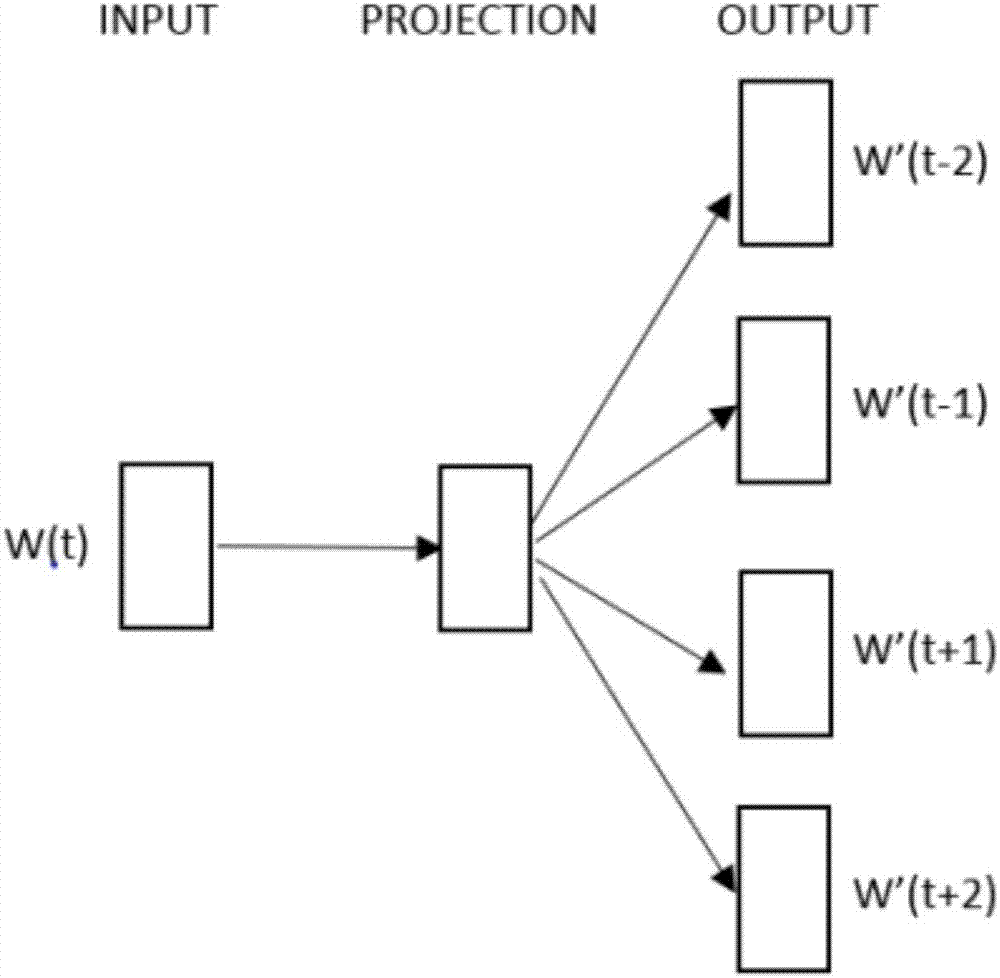

[0034] Step 1: Collect a training corpus for word vectors from the Internet, segment it with the jieba word segmentation tool while removing stop words, and build a dictionary D. Then train the Skip-gram model in the Word2Vec tool to obtain the word vector corresponding to each word in the dictionary. The Skip-gram model (see Figure 1) predicts the words in the context Context(w) of the current word w(t), given that the current word w(t) is known. The Skip-gram model consists of three layers: an input layer, a projection layer, and an output layer.
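The preprocessing in Step 1 can be illustrated with a minimal sketch. It assumes the sentences have already been segmented (the hard-coded token lists stand in for jieba output), uses an illustrative two-word stop-word list, and picks a context window of 1; none of these values come from the patent. The function names `remove_stop_words`, `build_dictionary`, and `skipgram_pairs` are hypothetical. The sketch only builds the dictionary and the (current word, context word) pairs that a Skip-gram model would be trained on; it does not train word vectors.

```python
from collections import Counter

# Hypothetical pre-segmented sentences; in the described pipeline these
# tokens would be produced by the jieba segmenter.
SENTENCES = [
    ["我", "喜欢", "自然", "语言", "处理"],
    ["深度", "学习", "的", "文本", "分类"],
]
STOP_WORDS = {"的", "我"}  # illustrative stop-word list (assumption)


def remove_stop_words(sentences, stop_words):
    """Drop stop words from every segmented sentence."""
    return [[w for w in sent if w not in stop_words] for sent in sentences]


def build_dictionary(sentences):
    """Map each distinct word to an integer id, most frequent first."""
    counts = Counter(w for sent in sentences for w in sent)
    # Sort by descending frequency, then by word, so ids are deterministic.
    ordered = sorted(counts, key=lambda w: (-counts[w], w))
    return {w: i for i, w in enumerate(ordered)}


def skipgram_pairs(sentence, window):
    """Yield (center, context) pairs: each w(t) predicts the words in Context(w)."""
    for t, center in enumerate(sentence):
        lo = max(0, t - window)
        hi = min(len(sentence), t + window + 1)
        for j in range(lo, hi):
            if j != t:
                yield center, sentence[j]


cleaned = remove_stop_words(SENTENCES, STOP_WORDS)
dictionary = build_dictionary(cleaned)  # plays the role of dictionary D
pairs = [p for sent in cleaned for p in skipgram_pairs(sent, window=1)]
```

In a full pipeline, pairs like these (or the cleaned sentences themselves) would be passed to a Word2Vec implementation configured for Skip-gram to obtain one vector per dictionary entry.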

[0035] The input to the input layer (INPUT) is the current word w(t); the projection layer (PROJECTION) is an identity projection of the input layer, retained to correspond to the projection layer in the CBOW model, and ...
