Human-computer interaction method for a mobile device, and the mobile device

A mobile device and a human-computer interaction method for it, in the field of human-computer interaction. The technology addresses problems such as lag, low accuracy, low flexibility, and a failure to deliver a satisfying human-computer interaction experience. It improves operating efficiency and convenience and makes human-computer interaction accurate and reliable, giving a good human-computer interaction experience.


Examples

Embodiment 1

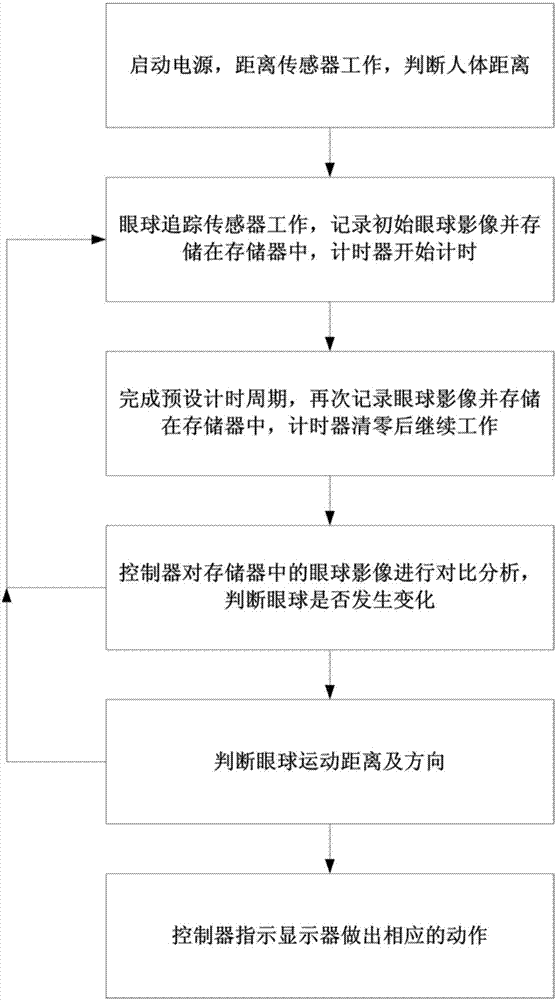

[0026] As shown in Figure 1, a human-computer interaction method for a mobile device includes the following steps:

[0027] a. Power on; the distance sensor starts working and determines the distance to the user's body;

[0028] b. The eye tracking sensor starts working, records an initial eyeball image and stores it in the memory, and the timer starts counting;

[0029] c. When the preset timing cycle has elapsed, the eye tracking sensor records the eyeball image again and stores it in the memory; the timer is cleared and continues counting;

[0030] d. The controller compares and analyzes the eyeball images in the memory to determine whether the eyeballs have moved;

[0031] e. The controller determines the distance and direction of the eye movement;

[0032] f. The controller instructs the display to perform the corresponding action.
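
Steps a to f can be sketched as a polling loop. This is a minimal sketch, not the patented firmware: the sensors, the controller's comparison and the display are passed in as hypothetical callables (`read_distance_cm`, `capture_eye`, `compare`, `act`), the 35 to 45 cm gate and the 0.2 s default cycle are taken from the embodiments, and a two-slot `deque` is an assumed stand-in for the memory.

```python
import time
from collections import deque
from typing import Callable, Deque, List, Optional, Tuple

# (distance, direction) reported by the comparison step; hypothetical shape.
Movement = Tuple[float, str]


def interaction_loop(
    read_distance_cm: Callable[[], float],
    capture_eye: Callable[[], object],
    compare: Callable[[object, object], Optional[Movement]],
    act: Callable[[Movement], None],
    cycles: int,
    cycle_s: float = 0.2,
    sleep: Callable[[float], None] = time.sleep,
) -> List[Movement]:
    """Run steps a-f for a fixed number of timing cycles; return the actions sent."""
    actions: List[Movement] = []
    # Step a: after power-on, the distance sensor judges the user's distance.
    if not 35.0 <= read_distance_cm() <= 45.0:
        return actions  # outside the healthy browsing window: no eye tracking
    # Step b: store the initial eye image; memory keeps at most two images.
    memory: Deque[object] = deque([capture_eye()], maxlen=2)
    for _ in range(cycles):
        # Step c: wait one timing cycle, then store a new image. Restarting
        # the wait each iteration plays the role of clearing the timer.
        sleep(cycle_s)
        memory.append(capture_eye())
        previous, current = memory[0], memory[1]
        # Steps d and e: the controller decides whether the eye moved and,
        # if so, by how much and in which direction.
        movement = compare(previous, current)
        if movement is not None:
            # Step f: the controller instructs the display to act.
            act(movement)
            actions.append(movement)
    return actions
```

Injecting `sleep` lets the loop be exercised without real delays, for example by passing `sleep=lambda s: None` together with canned images.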

[0033] The distance sensor is preset with a healthy browsing distance between the human body and the mobile device of 35 to 45 centimeters, and the actual distance can be adjusted a...
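
The healthy browsing distance check can be sketched as a small configurable window. This is a minimal sketch assuming the sensor already reports centimeters; `DistanceWindow` and its three labels are hypothetical names, and the constructor arguments are only an illustrative way to make the bounds adjustable, not the adjustment mechanism the text refers to.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DistanceWindow:
    """Healthy browsing window in centimeters (defaults from the text: 35-45 cm)."""

    low_cm: float = 35.0
    high_cm: float = 45.0

    def __post_init__(self) -> None:
        # Reject windows that are empty or start at a non-positive distance.
        if not (0 < self.low_cm <= self.high_cm):
            raise ValueError("expected 0 < low_cm <= high_cm")

    def classify(self, distance_cm: float) -> str:
        """Return 'too_close', 'ok', or 'too_far'; both bounds count as 'ok'."""
        if distance_cm < self.low_cm:
            return "too_close"
        if distance_cm > self.high_cm:
            return "too_far"
        return "ok"
```

Only the `"ok"` result would let the eye tracking sensor start in this sketch; what the device does in the other two cases is not described here.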

Embodiment 2

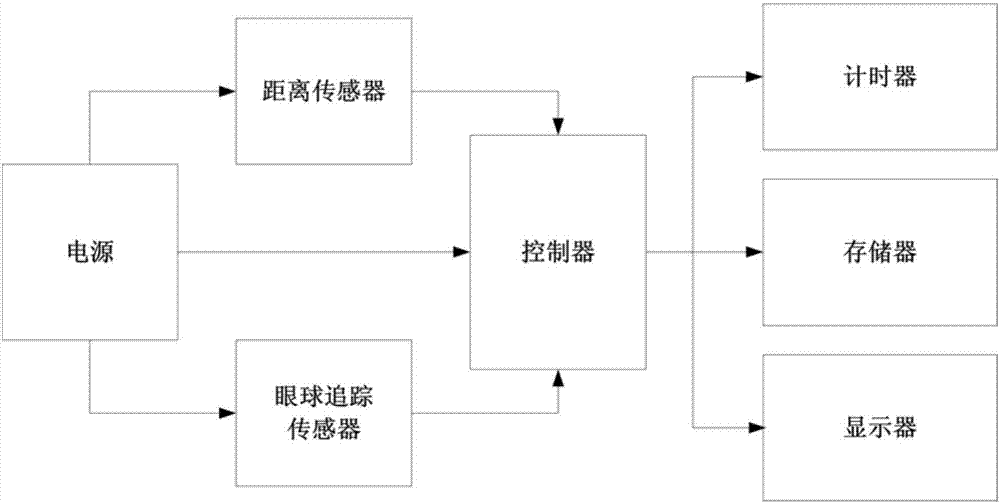

[0041] As shown in Figure 2, a mobile device includes a power supply, a distance sensor, an eye tracking sensor, a controller, a timer, a memory and a display.

[0042] While the mobile device operates normally, the distance sensor determines whether the distance between the user's body and the mobile device is within the healthy browsing distance. When it is within 35 to 45 centimeters, the eye tracking sensor is activated, records an initial eyeball image and stores it in the memory; at the same time, the timer starts counting. After a timing cycle of 0.1 to 1 second (typically set to 0.2 seconds) is completed, the eye tracking sensor records the eyeball image again and stores it in the memory, and the timer is cleared and continues counting. The controller compares and analyzes the two eyeball images stored in the memory and determines whether the eyeball has changed. If there is a change, it determines the distance and direction of the eyeball movement and compares it with the preset judgment threshold for...
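
The comparison of the two stored eyeball images could be approximated as follows. The text does not say how the controller measures eye movement, so this sketch swaps in a simple, assumed technique: it locates the pupil as the centroid of dark pixels in a grayscale frame, takes the displacement between the two frames, treats anything below a threshold as "no change", and reports the dominant axis as the direction. `pupil_centroid`, `eye_movement`, `dark_max` and `threshold_px` are hypothetical names and values; `threshold_px` is only a placeholder for the preset judgment threshold, whose value is not given here.

```python
import math
from typing import List, Optional, Tuple

# Grayscale frame as rows of pixels, 0 = black, 255 = white (assumption).
Image = List[List[int]]


def pupil_centroid(img: Image, dark_max: int = 50) -> Optional[Tuple[float, float]]:
    """Centroid (x, y) of pixels at or below dark_max; None if no pixel is dark."""
    sx = sy = 0.0
    n = 0
    for y, row in enumerate(img):
        for x, value in enumerate(row):
            if value <= dark_max:
                sx += x
                sy += y
                n += 1
    if n == 0:
        return None
    return sx / n, sy / n


def eye_movement(
    prev: Image, curr: Image, threshold_px: float = 1.0
) -> Optional[Tuple[float, str]]:
    """Return (distance_px, direction) if the pupil moved >= threshold_px, else None."""
    a, b = pupil_centroid(prev), pupil_centroid(curr)
    if a is None or b is None:
        return None  # no pupil found in one of the frames
    dx, dy = b[0] - a[0], b[1] - a[1]
    dist = math.hypot(dx, dy)
    if dist < threshold_px:
        return None  # movement too small: treated as "no change"
    # The dominant axis decides the direction; image y grows downward.
    if abs(dx) >= abs(dy):
        direction = "right" if dx > 0 else "left"
    else:
        direction = "down" if dy > 0 else "up"
    return dist, direction
```

Directions here are in image coordinates; whether "right" in the frame matches the user's right depends on how the front camera image is mirrored.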
