Self-supervised pose embedding learning method based on spatiotemporal relations in video

A self-supervised learning method based on spatiotemporal relations, applied in fields such as instruments, character and pattern recognition, and computer components. It addresses the problems of high cost and long time consumption, and achieves reduced cost and improved retrieval efficiency.


Embodiment Construction

[0025] It should be noted that, where no conflict arises, the embodiments in the present application and the features in those embodiments can be combined with each other. The present invention is described in further detail below with reference to the drawings and specific embodiments.

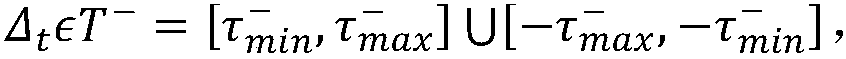

[0026] Figure 1 is a system flowchart of the self-supervised pose embedding learning method based on the spatiotemporal relations of videos in the present invention. It mainly includes: self-supervised pose embedding from temporal order and spatial layout (1); creating a training curriculum (2); mining repetitive poses (3); and the network structure (4).
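To make the temporal-order component (1) more concrete, the following is a minimal sketch of how frame pairs could be labeled from video timing alone, without human annotation. It is an illustration under stated assumptions, not the patent's procedure: the function name `sample_temporal_pairs`, the `near` and `far` frame gaps, and the rule "close in time means similar pose, far apart means dissimilar" are all hypothetical choices made for this example.

```python
import random


def sample_temporal_pairs(num_frames, near=2, far=10, num_pairs=8, seed=0):
    """Return (i, j, label) triples for frames of a single video.

    label 1: frames i and j are at most `near` frames apart (assumed similar pose).
    label 0: frames i and j are at least `far` frames apart (assumed different pose).
    All thresholds are hypothetical; the source text does not specify them.
    """
    if not 1 <= near < far:
        raise ValueError("expected 1 <= near < far")
    # Every frame needs at least one partner that is `far` frames away.
    if num_frames < 2 * far + 1:
        raise ValueError("video too short for the chosen `far` gap")

    rng = random.Random(seed)
    pairs = []
    for k in range(num_pairs):
        i = rng.randrange(num_frames)
        if k % 2 == 0:
            # Positive pair: a close temporal neighbour of frame i.
            candidates = [j for j in range(num_frames) if 0 < abs(i - j) <= near]
            label = 1
        else:
            # Negative pair: a frame far away in time from frame i.
            candidates = [j for j in range(num_frames) if abs(i - j) >= far]
            label = 0
        pairs.append((i, rng.choice(candidates), label))
    return pairs


pairs = sample_temporal_pairs(num_frames=50)
```

The labels produced this way come only from frame indices, which is the sense in which the supervision is "self" generated. An analogous auxiliary task for spatial layout would need image crops and is not sketched here.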

[0027] In supervised training using human annotations, it is preferable to avoid hard examples with ambiguous or even incorrect labels, since this kind of data can inhibit convergence and lead to poor results. On the other hand, skipping too many difficult training examples may lead to overfitting to a small fraction of easy samples, leading to generali...
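The trade-off described in this paragraph can be illustrated with a minimal curriculum sketch: start from the easiest samples and admit harder ones as training progresses, so that neither hard examples early on nor a permanently small easy subset dominates. This is an assumption-laden illustration, not the patent's scheme: the per-sample `difficulty` scores, the linear schedule, and the `start_fraction` parameter are hypothetical.

```python
def curriculum_subset(difficulty, epoch, total_epochs, start_fraction=0.3):
    """Return indices of the samples admitted at `epoch`, easiest first.

    `difficulty` is a hypothetical per-sample score (lower means easier).
    The admitted fraction grows linearly from `start_fraction` to 1.0.
    """
    order = sorted(range(len(difficulty)), key=lambda idx: difficulty[idx])
    progress = min(1.0, epoch / max(1, total_epochs - 1))
    fraction = start_fraction + (1.0 - start_fraction) * progress
    keep = max(1, round(fraction * len(order)))
    return order[:keep]


scores = [0.9, 0.1, 0.5, 0.3]
first = curriculum_subset(scores, epoch=0, total_epochs=3)
middle = curriculum_subset(scores, epoch=1, total_epochs=3)
last = curriculum_subset(scores, epoch=2, total_epochs=3)
```

With these example scores, the first epoch trains only on the easiest sample, and the final epoch uses all four in order of increasing difficulty.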
