A Video Behavior Recognition Method Based on Deep Convolutional Features

A video behavior recognition method based on deep convolutional features, applied in the field of computer vision. It addresses three shortcomings of existing methods: they ignore motion characteristics, they do not consider trajectory characteristics or their temporal order, and their classification accuracy is low. The method extracts features more accurately and improves recognition accuracy.


Embodiment

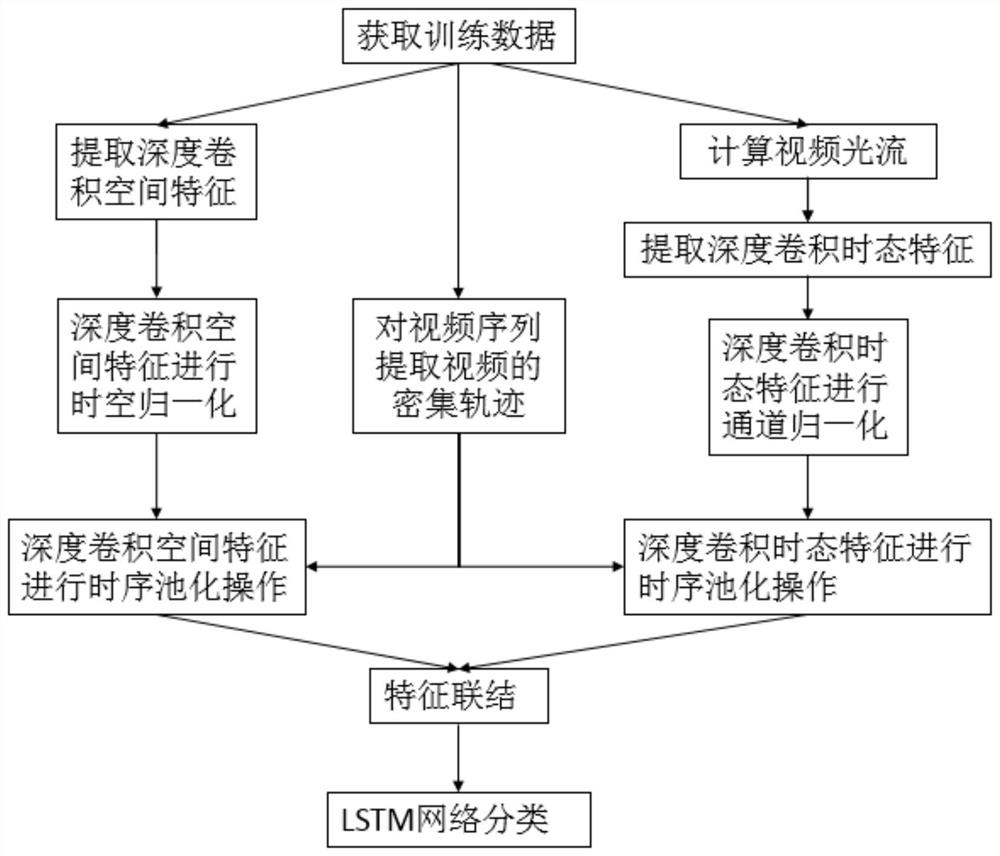

[0022] This embodiment provides a video behavior recognition method based on deep convolutional features. The flow chart of the method is shown in Figure 1. The method includes the following steps:

[0023] S1. Obtain training data: obtain the videos and corresponding labels in the training video dataset, and extract frames at a fixed frame rate to obtain the training samples and their categories, which cover all behavior types that appear in the training videos. Extract the dense trajectories of the video: every 15 frames, densely sample points on a grid, track the sampling points across those 15 frames with the dense trajectory algorithm to obtain the trajectory of each sampling point, and remove static trajectories and trajectories whose displacement changes excessively, yielding the dense trajectories of the video;
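The trajectory part of step S1 (grid sampling every 15 frames, tracking for 15 frames, then pruning) can be sketched as below. This is a minimal illustration, not the patent's implementation: the text does not give the grid spacing, the optical-flow method, or the pruning thresholds, so `step=5`, `min_total=1.0` and `max_ratio=0.7` are assumed values, and the `flow` callable is a hypothetical stand-in for a dense optical-flow field so the sketch runs without any video library.

```python
import math
from typing import Callable, List, Tuple

Point = Tuple[float, float]
# Hypothetical flow interface (assumption): returns the (dx, dy) displacement of
# pixel (x, y) between frame t and frame t + 1. A real system would read this
# from a dense optical-flow field computed on the video.
FlowFn = Callable[[int, float, float], Point]

TRACK_LEN = 15  # trajectory length in frames, as stated in step S1


def grid_sample(width: int, height: int, step: int = 5) -> List[Point]:
    """Densely sample one point at the centre of every step x step grid cell."""
    half = step / 2.0
    return [(x + half, y + half)
            for y in range(0, height - step + 1, step)
            for x in range(0, width - step + 1, step)]


def track(points: List[Point], start: int, flow: FlowFn,
          length: int = TRACK_LEN) -> List[List[Point]]:
    """Follow each sampled point for `length` frames by adding flow displacements."""
    trajectories = []
    for x, y in points:
        traj = [(x, y)]
        for t in range(start, start + length - 1):
            dx, dy = flow(t, x, y)
            x, y = x + dx, y + dy
            traj.append((x, y))
        trajectories.append(traj)
    return trajectories


def _steps(traj: List[Point]) -> List[float]:
    """Length of each frame-to-frame displacement along a trajectory."""
    return [math.hypot(x1 - x0, y1 - y0)
            for (x0, y0), (x1, y1) in zip(traj, traj[1:])]


def is_static(traj: List[Point], min_total: float = 1.0) -> bool:
    """Static trajectory: total path length below an assumed threshold."""
    return sum(_steps(traj)) < min_total


def is_erratic(traj: List[Point], max_ratio: float = 0.7) -> bool:
    """Excessive change: one step dominates the whole path (assumed ratio)."""
    steps = _steps(traj)
    total = sum(steps)
    return total > 0 and max(steps) > max_ratio * total


def prune(trajs: List[List[Point]]) -> List[List[Point]]:
    """Drop static and erratic trajectories, keeping the rest."""
    return [t for t in trajs if not is_static(t) and not is_erratic(t)]


def dense_trajectories(n_frames: int, width: int, height: int,
                       flow: FlowFn, step: int = 5) -> List[List[Point]]:
    """Resample the grid every TRACK_LEN frames, track, then prune."""
    result: List[List[Point]] = []
    for start in range(0, n_frames - TRACK_LEN + 1, TRACK_LEN):
        points = grid_sample(width, height, step)
        result.extend(prune(track(points, start, flow)))
    return result
```

For example, with a uniform rightward flow of one pixel per frame, every trajectory in a 30-frame, 20×10 clip survives pruning, whereas a zero flow field produces only static trajectories and all of them are removed. Resampling the grid at the start of each 15-frame segment keeps the sampling dense even as tracked points drift or leave the frame.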

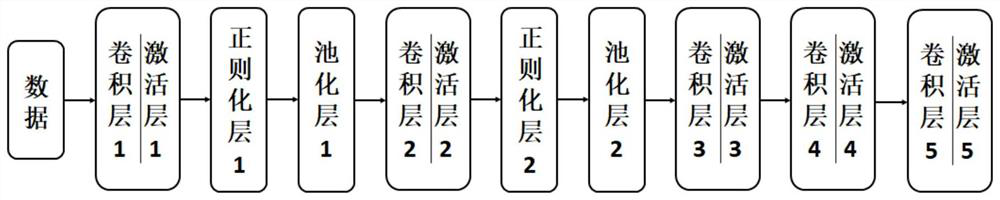

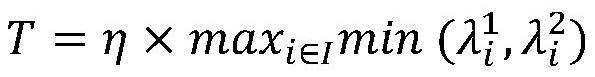

[0024] S2. Extract the deep convolutional spatial features of the video: input the video sequence into the pre-trained spatial neural ne...
