Method for estimating unknown object grabbing positions and posture on basis of mixed information input network models

A mixed-information-input network technique, applied in computing, image data processing, instruments, etc., which addresses problems such as low efficiency, the large amount of computation required for grasp-point search, and the difficulty of grasping unknown objects.


Examples

Specific Embodiment 1

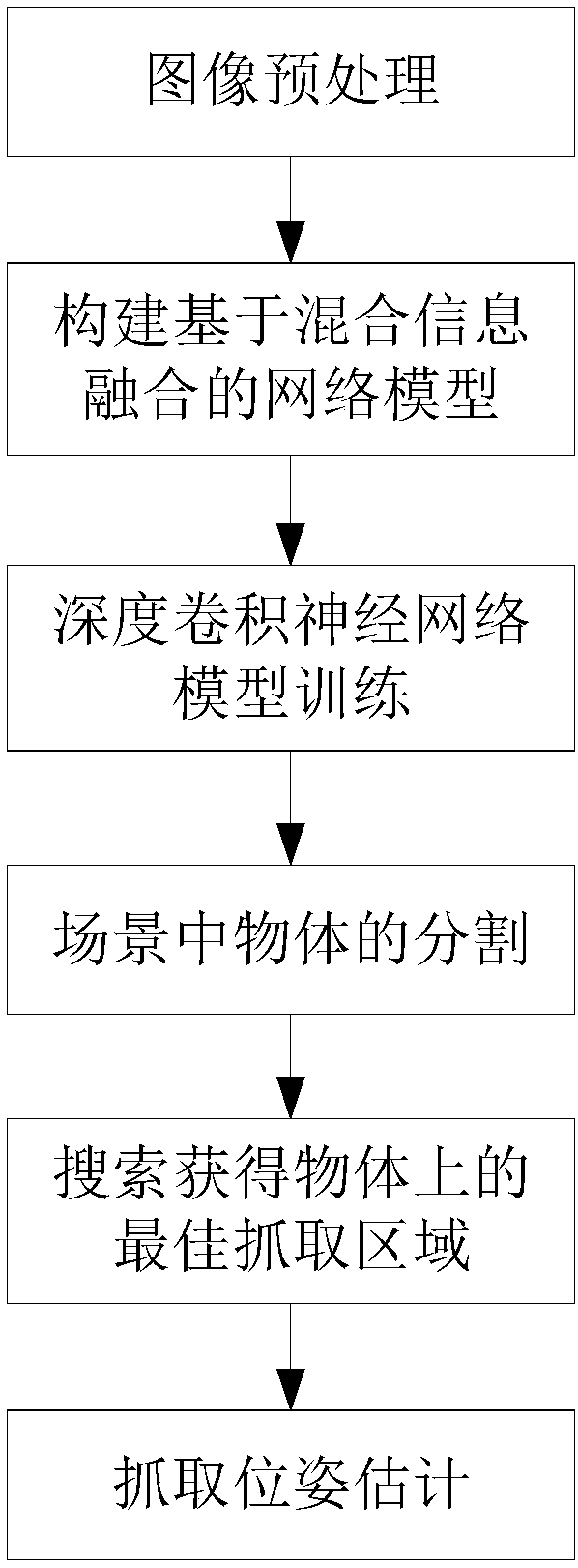

[0070] Specific Embodiment 1: The specific embodiments of the present invention are further described with reference to the accompanying drawings. Figure 1 is a flow chart of the method of the present invention for estimating the grasp pose of an unknown object based on a convolutional neural network model. The method mainly comprises the following steps:

[0071] Step 1: Image Preprocessing

[0072] 1) Depth information preprocessing

[0073] The mixed-information input of this patent comprises the color, depth, and normal-vector channel information of the object image; the data come from Microsoft's Kinect depth sensor. The depth channel usually contains considerable image noise caused by shadows, object reflections, and the like, so the depth values of many pixels in the depth image are missing, typically over large contiguous areas. Therefore, when using the traditional image filtering method to try to ...
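To make the depth and normal-vector channels described above concrete, the following is a minimal sketch, not the patent's own procedure. It assumes that missing depth readings are encoded as 0 (a common convention for Kinect-style sensors) and that normals are estimated from simple finite differences of the depth map. The function names `missing_depth_mask` and `normals_from_depth` are hypothetical.

```python
import numpy as np

def missing_depth_mask(depth):
    """Return True where the depth reading is missing.

    Assumption: the sensor encodes a missing reading as 0.
    """
    return depth == 0

def normals_from_depth(depth):
    """Estimate per-pixel unit normals from depth gradients.

    Assumption: plain finite differences in pixel units; this is an
    illustrative choice, not the method stated in the patent.
    """
    depth = depth.astype(np.float64)
    # np.gradient returns the derivative along rows (y) and then columns (x).
    dz_dy, dz_dx = np.gradient(depth)
    normals = np.dstack((-dz_dx, -dz_dy, np.ones_like(depth)))
    # The z component is 1, so the norm is at least 1 and never zero.
    normals /= np.linalg.norm(normals, axis=2, keepdims=True)
    return normals
```

Normals computed over pixels flagged by the mask are unreliable, which is one reason the missing regions need to be handled before the normal-vector channel is derived.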

Embodiment

[0118] This embodiment is described with reference to Figures 1 to 5. The steps of the unknown-object grasp recognition method based on the convolutional neural network model are as follows:

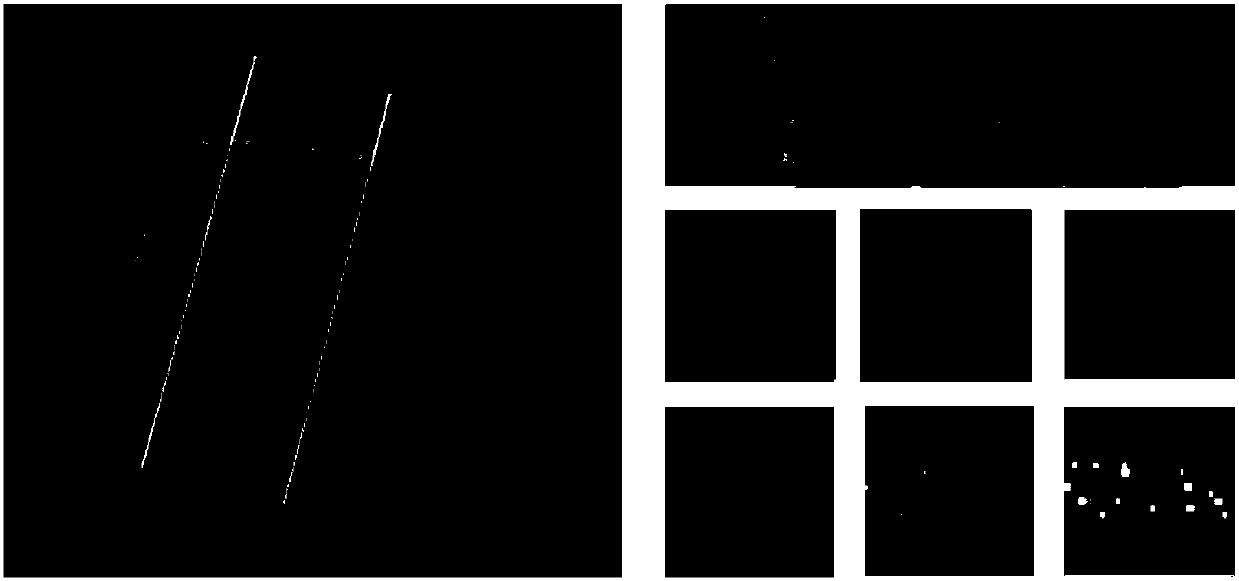

[0119] Step 1: First, preprocess the RGB-D image. The left side of Figure 2 shows the color image of the original object, in which the rectangular frame marks the region whose graspability is to be judged, and the direction of the rectangle's long axis is the closing direction of the robot's grasp. The first row on the right side of the figure shows the rectangular-region image after the image rotation operation, and the second and third rows show the color image and the normal-vector image after image size scaling and whitening.
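The rotation, scaling, and whitening described in Step 1 can be sketched as follows. This is an illustration under stated assumptions rather than the patent's implementation: the rectangle is parameterized by a center, side lengths, and the angle of its long axis; rotation and scaling are done in one nearest-neighbor resampling pass; the output size of 24 pixels is arbitrary; and whitening is taken to mean per-channel standardization. The names `crop_rotated_rect` and `whiten` are hypothetical.

```python
import numpy as np

def crop_rotated_rect(image, center, long_len, short_len, angle, out_size=24):
    """Resample a rotated rectangle into an out_size x out_size patch.

    image: (H, W, C) array; center: (cx, cy) in pixels;
    angle: direction of the long axis in radians, measured from the image x axis.
    The patch's horizontal axis follows the rectangle's long axis.
    Nearest-neighbor sampling; samples outside the image are clamped to the border.
    """
    h, w = image.shape[:2]
    cx, cy = center
    # Patch-local coordinates covering the rectangle, centered on zero.
    u = np.linspace(-long_len / 2, long_len / 2, out_size)
    v = np.linspace(-short_len / 2, short_len / 2, out_size)
    uu, vv = np.meshgrid(u, v)
    # Rotate the patch coordinates into image coordinates.
    xs = cx + uu * np.cos(angle) - vv * np.sin(angle)
    ys = cy + uu * np.sin(angle) + vv * np.cos(angle)
    xi = np.clip(np.rint(xs).astype(int), 0, w - 1)
    yi = np.clip(np.rint(ys).astype(int), 0, h - 1)
    return image[yi, xi]

def whiten(patch, eps=1e-8):
    """Per-channel standardization to zero mean and unit variance (assumed meaning of whitening)."""
    patch = patch.astype(np.float64)
    mean = patch.mean(axis=(0, 1), keepdims=True)
    std = patch.std(axis=(0, 1), keepdims=True)
    return (patch - mean) / (std + eps)
```

The same two functions could be applied to the normal-vector image so that both channels end up with identical patch geometry.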

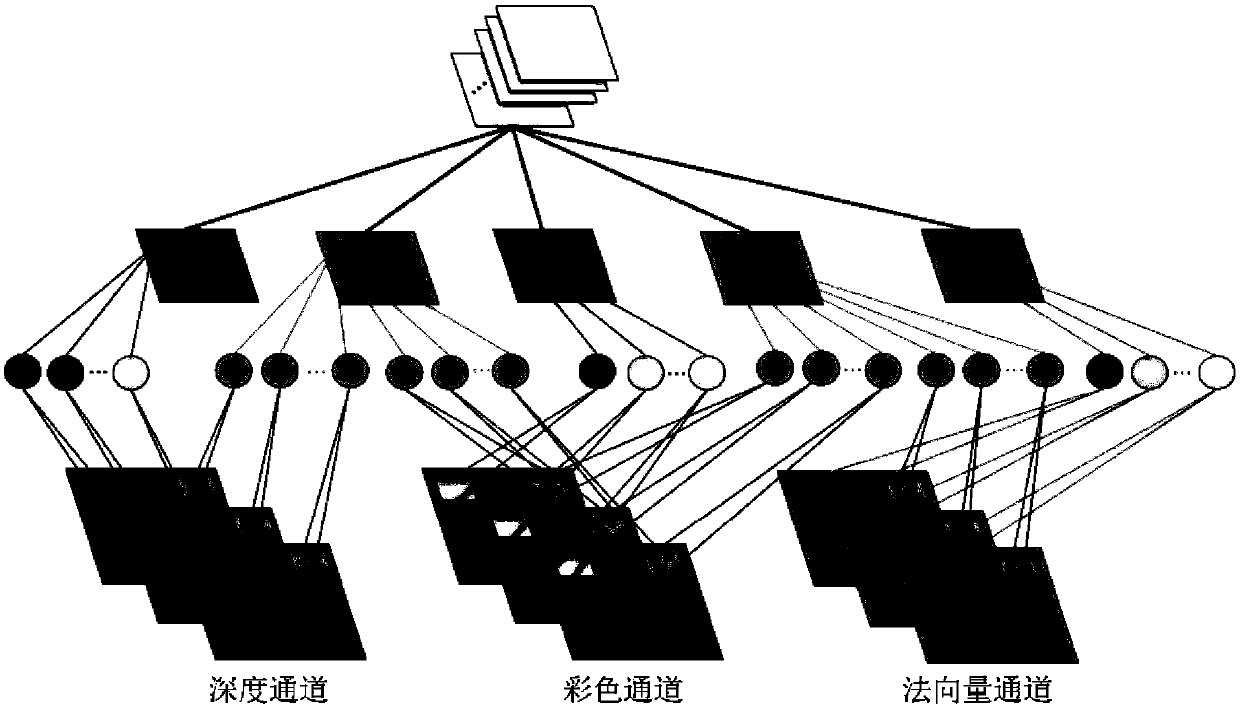

[0120] Step 2: Construct the hybrid information fusion model with the structure shown in Figure 3, and build the deep convolutional neural network model.
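The structure in Figure 3 is not reproduced in this text, so the following is only a generic sketch of one way mixed information could be fed to a convolutional layer: early fusion, in which the color, depth, and normal-vector channels are concatenated into a single 7-channel input. Early fusion, the array shapes, and the helper names `stack_mixed_input` and `conv2d_valid` are all assumptions for illustration and may differ from the patented model.

```python
import numpy as np

def stack_mixed_input(color, depth, normals):
    """Concatenate color (H, W, 3), depth (H, W), and normals (H, W, 3) into (H, W, 7)."""
    return np.concatenate((color, depth[..., None], normals), axis=2)

def conv2d_valid(x, kernels):
    """Naive 'valid' cross-correlation, as used in a convolutional layer.

    x: (H, W, C); kernels: (K, kh, kw, C); returns (H - kh + 1, W - kw + 1, K).
    """
    k, kh, kw, _ = kernels.shape
    h = x.shape[0] - kh + 1
    w = x.shape[1] - kw + 1
    out = np.zeros((h, w, k))
    for i in range(h):
        for j in range(w):
            window = x[i:i + kh, j:j + kw, :]
            # Sum over the kernel's spatial and channel axes for each of the K filters.
            out[i, j] = np.tensordot(kernels, window, axes=([1, 2, 3], [0, 1, 2]))
    return out
```

A practical model would use a deep learning framework rather than this loop; the point here is only that each filter spans all seven input channels at once.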

[0121] Step 3: Input the training data into the ...

