Convolutional neural network-based synthetic aperture focused imaging depth assessment method

This technology combines a convolutional neural network with synthetic aperture focusing and is applied to biological neural network models, neural architectures, instruments, etc. It addresses problems such as excessive time consumption, and it reduces complexity, shortens computing time and improves scalability.



Examples

Embodiment 1

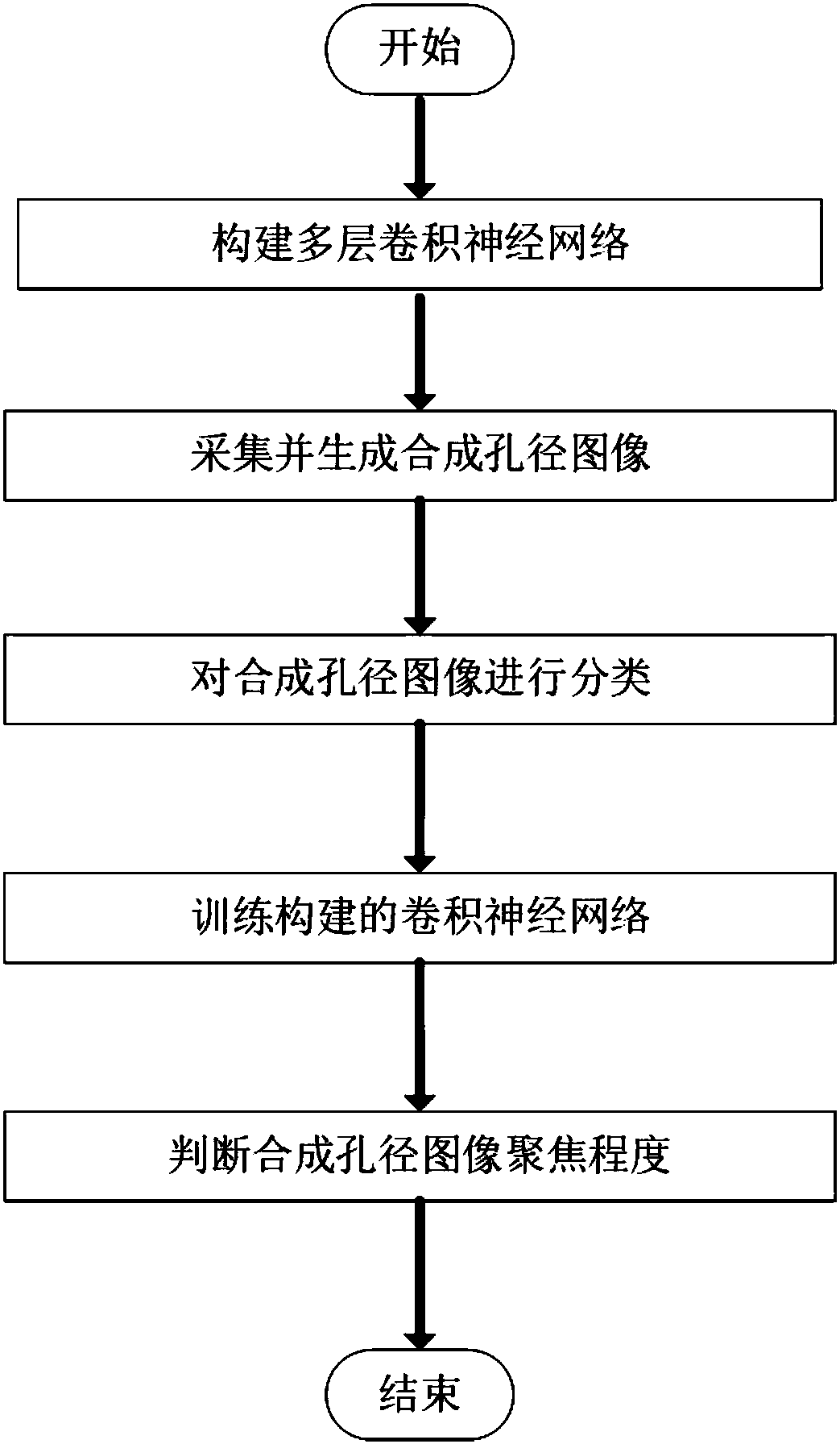

[0058] This example uses 704 images collected from 44 campus scenes, from which 8766 synthetic aperture images are generated. As shown in Figure 1, the convolutional neural network-based synthetic aperture focused imaging depth evaluation method proceeds as follows:

[0059] (1) Build a multi-layer convolutional neural network

[0060] All network input images are resized to a uniform 227×227×3, where 227×227 is the image resolution and 3 is the number of color channels.
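To illustrate the uniform input size, the sketch below resizes an arbitrary H×W RGB image to 227×227×3. The source does not say how images are resampled, so nearest-neighbour sampling, the nested-list image layout and the function name `resize_to_input` are all assumptions made for brevity.

```python
# Minimal sketch, ASSUMING images are nested lists: image[row][col] -> (R, G, B).
# Nearest-neighbour resampling is an assumption; the source names no method.
INPUT_SIZE = 227  # network input resolution: 227 x 227
CHANNELS = 3      # three colour channels

def resize_to_input(image, size=INPUT_SIZE):
    """Resize an H x W x 3 image to size x size x 3 by nearest-neighbour sampling."""
    src_h = len(image)
    src_w = len(image[0])
    resized = []
    for y in range(size):
        # Map the output row back to the nearest source row.
        src_y = min(src_h - 1, y * src_h // size)
        row = []
        for x in range(size):
            src_x = min(src_w - 1, x * src_w // size)
            pixel = image[src_y][src_x]
            if len(pixel) != CHANNELS:
                raise ValueError("expected an RGB pixel")
            row.append(tuple(pixel))
        resized.append(row)
    return resized

# Example: a 480 x 640 grey frame becomes 227 x 227 x 3.
frame = [[(128, 128, 128)] * 640 for _ in range(480)]
net_input = resize_to_input(frame)
print(len(net_input), len(net_input[0]), len(net_input[0][0]))  # 227 227 3
```

Nearest-neighbour sampling keeps the sketch dependency-free; a real pipeline would more likely use bilinear interpolation from an image library.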

[0061] The convolutional neural network consists of 5 convolutional layers, 3 pooling layers and 3 fully connected layers. The specific parameters are as follows:

[0062] conv1: (size: 11, stride: 4, pad: 0, channel: 96)

[0063] pool1: (size: 3, stride: 2, pad: 0, channel: 96)

[0064] conv2: (size: 5, stride: 1, pad: 2, channel: 256)

[0065] pool2: (size: 3, stride: 2, pad: 0, channel: 256)

[0066] conv3: (size: 3, ...
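The size, stride and padding values above fix the spatial resolution after each layer through the usual relation out = ⌊(in + 2·pad − size) / stride⌋ + 1. The sketch below applies it to the layers listed for a 227×227 input. It stops at pool2 because the conv3 parameters are truncated in this text, and the helper names `output_size` and `trace_shapes` are illustrative only.

```python
# Spatial output size of a convolution or pooling layer (floor convention).
def output_size(in_size, size, stride, pad):
    return (in_size + 2 * pad - size) // stride + 1

# (name, kernel size, stride, pad, channels) taken from paragraphs [0062]-[0065].
LAYERS = [
    ("conv1", 11, 4, 0, 96),
    ("pool1", 3, 2, 0, 96),
    ("conv2", 5, 1, 2, 256),
    ("pool2", 3, 2, 0, 256),
]

def trace_shapes(in_size=227):
    """Return (name, height, width, channels) after each listed layer."""
    shapes = []
    current = in_size
    for name, k, s, p, c in LAYERS:
        current = output_size(current, k, s, p)
        shapes.append((name, current, current, c))
    return shapes

for name, h, w, c in trace_shapes():
    print(f"{name}: {h}x{w}x{c}")
# conv1: 55x55x96, pool1: 27x27x96, conv2: 27x27x256, pool2: 13x13x256
```

With these values every division is exact, so floor and ceiling rounding (frameworks differ on pooling) give the same sizes.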

Embodiment 2

[0110] This example uses 704 images collected from 44 campus scenes, from which 8766 synthetic aperture images are generated. The steps of the convolutional neural network-based synthetic aperture focused imaging depth evaluation method are as follows:

[0111] (1) Build a multi-layer convolutional neural network

[0112] The steps of constructing a multi-layer convolutional neural network are the same as those in Embodiment 1.

[0113] (2) Collect and generate synthetic aperture images

[0114] Photograph the target object with a camera array of 8 horizontally arranged cameras, collect the image from each camera at its own viewing angle, and use formula (5) to project each image onto the reference plane $\pi_r$:

[0115] $$W_{ir} = H_i \cdot F_i \qquad (5)$$

[0116] where $F_i$ is the image captured by camera $i$, $W_{ir}$ is the image obtained by projecting $F_i$ onto the reference plane $\pi_r$ through an affine transformation, and $H_i$ is the affine matrix that projects $F_i$ onto $\pi_r$, with $i = 1, 2, \ldots, N$, ...
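Formula (5) can be illustrated by warping a camera image F_i onto the reference plane with an affine matrix H_i. In this minimal sketch, H_i is assumed to be a 2×3 matrix mapping source pixels to reference-plane pixels, and resampling is done by inverse mapping with nearest-neighbour lookup and zero fill outside F_i. None of these details are given in the source, and `invert_affine` and `project_to_reference` are hypothetical names.

```python
import math

# Minimal sketch of W_ir = H_i . F_i, ASSUMING H_i is a 2x3 affine matrix
# [[a, b, tx], [c, d, ty]] that maps pixel (x, y) of F_i to (x', y') on pi_r.
def invert_affine(h):
    """Return the 2x3 inverse of an affine matrix [[a, b, tx], [c, d, ty]]."""
    (a, b, tx), (c, d, ty) = h
    det = a * d - b * c
    if det == 0:
        raise ValueError("affine matrix is singular")
    # Inverse of the 2x2 linear part.
    ia, ib = d / det, -b / det
    ic, id_ = -c / det, a / det
    # Inverse translation: -(A^-1) t.
    itx = -(ia * tx + ib * ty)
    ity = -(ic * tx + id_ * ty)
    return [[ia, ib, itx], [ic, id_, ity]]

def project_to_reference(f_i, h_i, out_h, out_w, fill=(0, 0, 0)):
    """Warp f_i (rows of RGB tuples) onto the reference plane by inverse mapping."""
    inv = invert_affine(h_i)
    src_h, src_w = len(f_i), len(f_i[0])
    w_ir = []
    for yr in range(out_h):
        row = []
        for xr in range(out_w):
            # Source location in F_i that lands on reference pixel (xr, yr).
            xs = inv[0][0] * xr + inv[0][1] * yr + inv[0][2]
            ys = inv[1][0] * xr + inv[1][1] * yr + inv[1][2]
            # Nearest-neighbour sampling, rounding half up.
            xi, yi = math.floor(xs + 0.5), math.floor(ys + 0.5)
            if 0 <= xi < src_w and 0 <= yi < src_h:
                row.append(f_i[yi][xi])
            else:
                row.append(fill)  # reference pixel not seen by this camera
        w_ir.append(row)
    return w_ir

# Hypothetical H_i: shift a 2 x 3 image one pixel to the right.
frame = [[(10, 0, 0), (20, 0, 0), (30, 0, 0)],
         [(40, 0, 0), (50, 0, 0), (60, 0, 0)]]
shift_right = [[1, 0, 1], [0, 1, 0]]
warped = project_to_reference(frame, shift_right, 2, 3)
print(warped[0])  # [(0, 0, 0), (10, 0, 0), (20, 0, 0)]
```

Inverse mapping is used so that every reference-plane pixel receives exactly one value, avoiding the holes that forward mapping leaves.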

Embodiment 3

[0129] This example uses 704 images collected from 44 campus scenes, from which 8766 synthetic aperture images are generated. The steps of the convolutional neural network-based synthetic aperture focused imaging depth evaluation method are as follows:

[0130] (1) Build a multi-layer convolutional neural network

[0131] The steps of constructing a multi-layer convolutional neural network are the same as those in Embodiment 1.

[0132] (2) Collect and generate synthetic aperture images

[0133] Photograph the target object with a camera array of 16 horizontally arranged cameras, collect the image from each camera at its own viewing angle, and use formula (5) to project each image onto the reference plane $\pi_r$:

[0134] $$W_{ir} = H_i \cdot F_i \qquad (5)$$

[0135] where $F_i$ is the image captured by camera $i$, $W_{ir}$ is the image obtained by projecting $F_i$ onto the reference plane $\pi_r$ through an affine transformation, and $H_i$ is the affine matrix that projects $F_i$ onto $\pi_r$, with $i = 1, 2, \ldots$, ...
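The text is cut off before the projected images W_ir are combined. In conventional synthetic aperture imaging, the projected views are averaged pixel by pixel, which keeps points on the reference plane π_r sharp while points off that plane blur. The sketch below assumes that averaging step, which this excerpt does not confirm. The function name `synthetic_aperture_image` and the nested-list image layout are illustrative.

```python
# Minimal sketch, ASSUMING the synthetic aperture image is the pixel-wise mean
# of the N projected views W_ir (the excerpt is truncated before this step).
def synthetic_aperture_image(projected_views):
    """Average N equally sized images (rows of RGB tuples) pixel by pixel."""
    n = len(projected_views)
    if n == 0:
        raise ValueError("need at least one projected view")
    height = len(projected_views[0])
    width = len(projected_views[0][0])
    result = []
    for y in range(height):
        row = []
        for x in range(width):
            # Sum each colour channel across all N views, then divide by N.
            sums = [0, 0, 0]
            for view in projected_views:
                for ch in range(3):
                    sums[ch] += view[y][x][ch]
            row.append(tuple(s / n for s in sums))
        result.append(row)
    return result

# Example with 16 hypothetical 1 x 2 views, one per camera in the array.
views = [[[(k, 0, 255), (2 * k, 0, 0)]] for k in range(16)]
sa = synthetic_aperture_image(views)
print(sa[0][0])  # (7.5, 0.0, 255.0)
```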
