A method for generating natural language descriptions of open-domain videos based on multimodal feature fusion

A feature fusion, natural language technology, applied in the field of video analysis, can solve the problem of only using RGB image features, without much research on other information, without considering other features, etc., to increase robustness and speed, improve accuracy, Highly robust effect

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

Image

Examples

Embodiment Construction

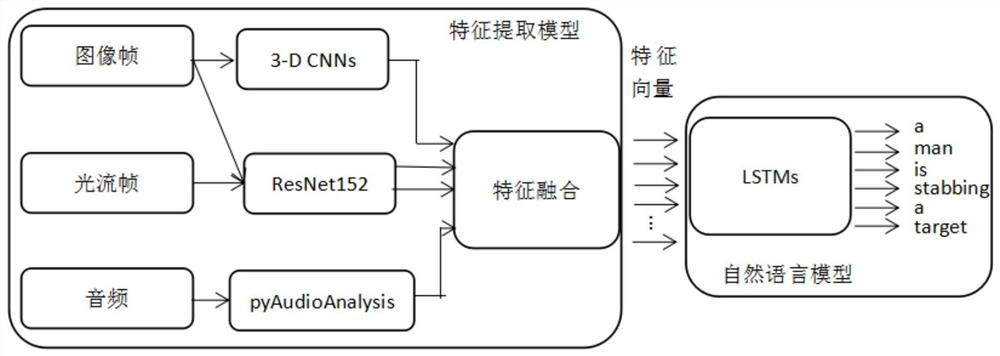

[0033] Such as figure 1 The shown open-domain video natural language description model based on multimodal feature fusion is mainly divided into two major models, one is the feature extraction model, and the other is the natural language model. The present invention mainly studies the feature extraction model, which will be divided into four major models: Partial introduction.

[0034] The first part: ResNet152 extracts RGB image features and optical flow features,

[0035] (1) Extraction of RGB image features,

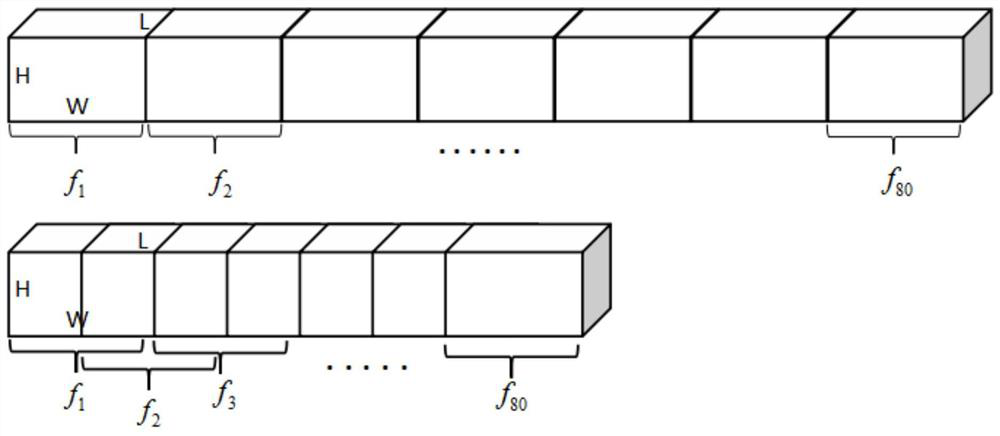

[0036] Use the ImageNet image database to pre-train the ResNet model. ImageNet contains 12,000,000 images divided into 1,000 categories, which can make the model more accurate in identifying objects in open-domain videos. The batch size of the neural network model is set to 50, and the learning rate at the beginning Set to 0.0001, the MSVD (Microsoft Research Video DescriptionCorpus) dataset contains 1970 video clips, with a duration between 8 and 25 seconds, corres...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com