Text abstract generating method and system

A text summary generation method and system, applied in fields such as biological neural network models, special data processing applications, and instruments. It addresses the problems that generated text summaries contain a large amount of irrelevant information, that feature extraction is limited, and that key information in high-level features cannot be identified and extracted. The effect is to enhance key information and improve the quality of the generated summary.


Embodiment Construction

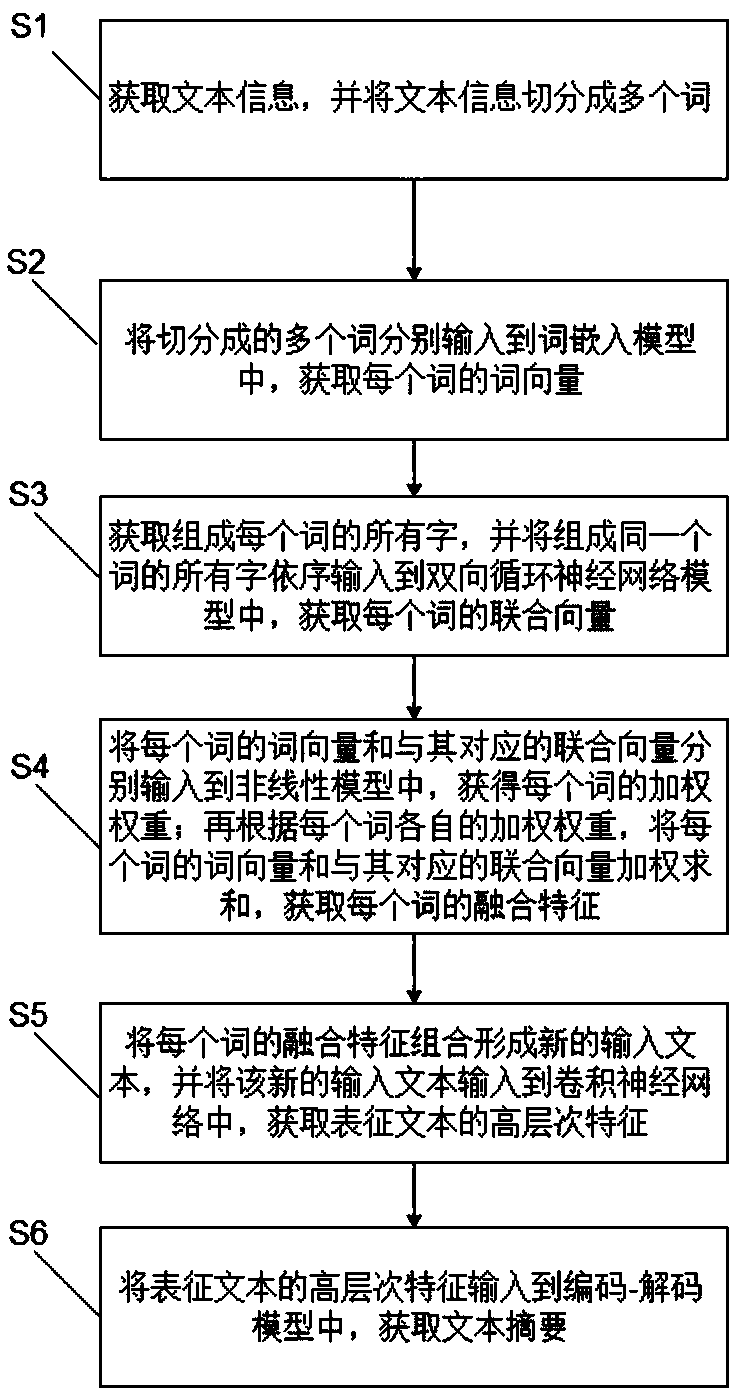

[0029] See Figure 1, which is a flowchart of a method for generating a text summary in an embodiment of the present invention. The method for generating a text summary includes the following steps:

[0030] Step S1: Obtain text information and segment the text information into multiple words.

[0031] In the present invention, the text information can be segmented into multiple words by means of an existing word segmenter or word segmentation tool.
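As a minimal sketch of step S1, the snippet below splits text into words and punctuation marks with a regular expression. The function name `segment` and the regex rule are assumptions made for illustration only; the description does not name a specific segmenter, and text in a language without spaces between words would need a dedicated word segmentation tool in place of this rule.

```python
import re


def segment(text):
    """Split text into word tokens and single punctuation marks.

    Hypothetical stand-in for the "existing word segmenter" in step S1.
    """
    # \w+ matches a run of word characters; [^\w\s] matches one
    # punctuation character that is neither a word character nor whitespace.
    return re.findall(r"\w+|[^\w\s]", text)


words = segment("Obtain text information.")
print(words)
```

The resulting list of words is what step S2 would take as input.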

[0032] Step S2: Input each of the segmented words into the word embedding model to obtain the word vector of each word.

[0033] In the present invention, if the word vector of the i-th word is denoted $x_i$, then the set of word vectors representing the text can be expressed as $x = \{x_1, x_2, \ldots, x_{i-1}, x_i\}$. The size of the word vector in the word embedding model can be set to 200. The vector here, and the other vectors involved later, are a certain word or data expressed in a computer-readable language such a...
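The mapping from words to word vectors in step S2 can be sketched as a lookup table. The class `ToyEmbedding` and the function `embed` below are hypothetical names, and the vectors are random placeholders rather than trained embeddings; only the vector size of 200 comes from the description. A real system would presumably load a trained word embedding model instead.

```python
import random

EMBEDDING_DIM = 200  # word vector size mentioned in the description


class ToyEmbedding:
    """Hypothetical stand-in for a trained word embedding model."""

    def __init__(self, dim=EMBEDDING_DIM, seed=0):
        self.dim = dim
        self._rng = random.Random(seed)
        self._table = {}

    def vector(self, word):
        # Assign a fixed random vector the first time a word is seen,
        # so that repeated words map to the same vector.
        if word not in self._table:
            self._table[word] = [
                self._rng.uniform(-1.0, 1.0) for _ in range(self.dim)
            ]
        return self._table[word]


def embed(words, model):
    """Return the list x = [x_1, ..., x_i] of word vectors for the words."""
    return [model.vector(w) for w in words]


words = ["text", "summary", "text"]
x = embed(words, ToyEmbedding())
print(len(x), len(x[0]))
```

Each entry of `x` corresponds to one word of the input, in order, which matches the set notation used above.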
