Recurrent neural network language model training method and device, equipment and medium

A language model and neural network technology, applied in the field of artificial intelligence, can solve problems such as hindering applications

Examples

Embodiment 1

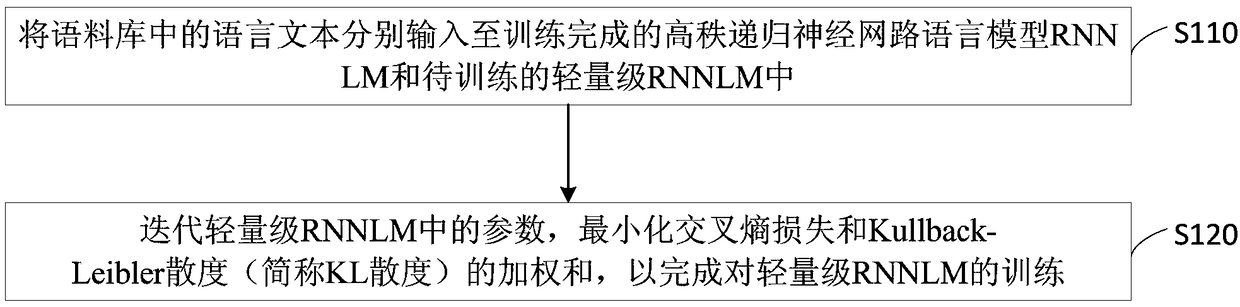

[0026] Figure 1 is a flow chart of a recurrent neural network language model training method provided in Embodiment 1 of the present invention. This embodiment is applicable to training a recurrent neural network language model used for language text recognition. The method can be performed by a recurrent neural network language model training device, and specifically includes the following steps:

[0027] S110. Input the language text in the corpus into the trained high-rank recurrent neural network language model (RNNLM) and into the lightweight RNNLM to be trained, respectively.

[0028] In this embodiment, the corpus includes the Penn Treebank (PTB) corpus and/or the Wall Street Journal (WSJ) corpus. The PTB corpus contains a total of 24 parts, the vocabulary size is limited to 10,000, and the label `<unk>` indicates out-of-set words. Select part or all of the PTB corpus as the training set, and input the language text in the training set i...
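The vocabulary limit described above can be illustrated with a minimal sketch. It assumes the out-of-set label is the `<unk>` token conventionally used with PTB; the helper names `build_vocab` and `encode`, and the toy sentence, are hypothetical and not taken from the patent.

```python
from collections import Counter

UNK = "<unk>"  # assumed out-of-set label, as conventionally used with PTB


def build_vocab(tokens, max_size):
    """Keep the most frequent words, reserving index 0 for the UNK label."""
    counts = Counter(t for t in tokens if t != UNK)
    kept = [word for word, _ in counts.most_common(max_size - 1)]
    return {word: index for index, word in enumerate([UNK] + kept)}


def encode(tokens, vocab):
    """Map each token to its index; words outside the vocabulary become UNK."""
    return [vocab.get(t, vocab[UNK]) for t in tokens]


# Toy example with a vocabulary of 3 entries instead of 10,000.
tokens = "the cat sat on the mat the cat ran".split()
vocab = build_vocab(tokens, max_size=3)
ids = encode(tokens, vocab)
```

With a real corpus, `max_size` would be set to 10,000 to match the limit stated in this embodiment.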

Embodiment 2

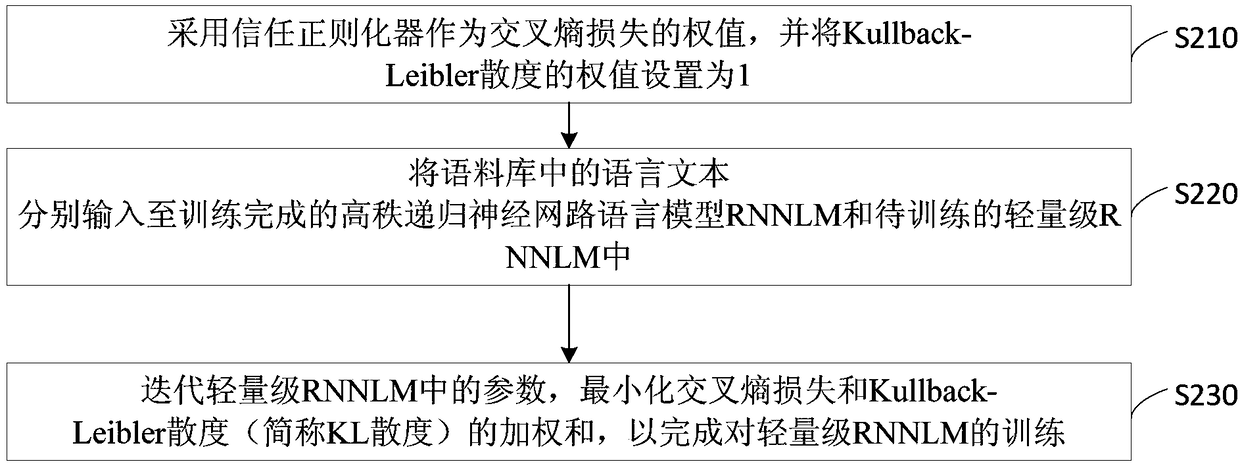

[0042] During model training, the training process of the student model was found to still have the following two defects. First, in the language model, each training data label vector represents a degenerate data distribution, which gives the likelihood of the corresponding language text on a single category. Compared with the probability distribution obtained by the teacher model over all training data, that is, the probability that the corresponding language text falls on each of the labels, the degenerate data distribution is noisier and more localized. Second, unlike previous experimental results of knowledge distillation in acoustic modeling and image recognition, the language text recognition experiments in this embodiment show that, when the cross-entropy loss and the KL divergence have fixed weights, minimizing the weighted sum of the cross-entropy loss and the KL divergence yields a student model that is inferior to that obtained by minimizing the KL ...
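The first defect can be made concrete with a small sketch that contrasts a degenerate (one-hot) label distribution with a teacher output distribution. The four-word vocabulary and the teacher probabilities are hypothetical values chosen only for illustration; they are not results from the patent.

```python
import math


def one_hot(index, size):
    """Degenerate distribution: all probability mass on a single category."""
    return [1.0 if i == index else 0.0 for i in range(size)]


def entropy(probs):
    """Shannon entropy in nats; zero-probability entries contribute nothing."""
    return -sum(p * math.log(p) for p in probs if p > 0)


label = one_hot(2, 4)                # training data label vector
teacher = [0.05, 0.15, 0.70, 0.10]   # hypothetical teacher output over 4 words

label_entropy = entropy(label)       # zero: no information about other words
teacher_entropy = entropy(teacher)   # positive: mass spread over all labels
```

The label vector says nothing about how plausible the other words are, whereas the teacher distribution assigns each of them a probability that the student can learn from.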

Embodiment 3

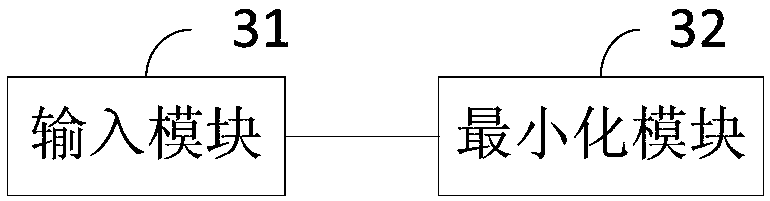

[0098] Figure 3 is a schematic structural diagram of a recurrent neural network language model training device provided in Embodiment 3 of the present invention. As shown in Figure 3, the device includes an input module 31 and a minimization module 32.

[0099] The input module 31 is used to input the language text in the corpus into the high-rank recurrent neural network language model (RNNLM) and into the lightweight RNNLM to be trained, respectively;

[0100] The minimization module 32 is used to iterate the parameters of the lightweight RNNLM so as to minimize the weighted sum of the cross-entropy loss and the Kullback-Leibler divergence, thereby completing the training of the lightweight RNNLM;

[0101] Here, the cross-entropy loss is the cross-entropy loss of the output vector of the lightweight RNNLM relative to the training data label vector of the language text, and the Kullback-Leibler divergence is the Kullback-Leibler divergence of the output vector of the lightweight RNNLM relative to the output vector of t...
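The objective handled by the minimization module can be sketched for a single prediction step as follows. This is an illustration under stated assumptions, not the patent's implementation: the weighting form `ce_weight` and `1 - ce_weight`, the direction KL(teacher || student), and all function names are assumptions, and the sketch only evaluates the loss rather than updating any parameters.

```python
import math


def softmax(logits):
    """Convert raw scores into a probability distribution (numerically stable)."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(student_probs, label_index):
    """Cross-entropy of the student output against a one-hot label vector."""
    return -math.log(student_probs[label_index])


def kl_divergence(teacher_probs, student_probs):
    """KL(teacher || student); the direction is an assumption of this sketch."""
    return sum(
        p * math.log(p / q)
        for p, q in zip(teacher_probs, student_probs)
        if p > 0
    )


def distillation_loss(student_logits, teacher_logits, label_index, ce_weight=0.5):
    """Weighted sum of cross-entropy loss and KL divergence for one step."""
    student = softmax(student_logits)
    teacher = softmax(teacher_logits)
    ce = cross_entropy(student, label_index)
    kl = kl_divergence(teacher, student)
    return ce_weight * ce + (1.0 - ce_weight) * kl


# Hypothetical logits over a 3-word vocabulary; the correct word has index 2.
loss = distillation_loss([0.2, 0.1, 1.5], [0.0, 0.3, 2.0], label_index=2)
```

In training, this value would be averaged over all positions in a batch and minimized with respect to the lightweight RNNLM's parameters while the high-rank RNNLM stays fixed.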
