Baby crying sound translation method based on sound feature recognition

The method applies sound feature recognition to the translation of baby cries. It addresses problems such as low efficiency, the lack of a reference standard, and a decline in nursing quality, and it aims to improve nursing quality and efficiency while reducing misjudgment and delayed judgment.

Embodiment Construction

[0021] The present invention is further described below with reference to specific embodiments. The exemplary embodiments and their descriptions serve to explain the present invention and do not limit it.

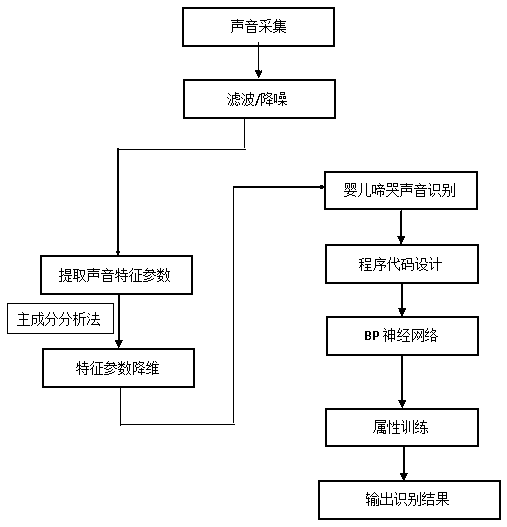

[0022] As shown in Figure 1, the baby cry translation method based on sound feature recognition of this embodiment comprises the following specific steps:

[0023] A hand-held precision sound level pickup can be placed 10 cm above the baby's mouth to collect 1 s sound clips of the baby crying. All collected clips are then pre-processed: a MINI DSP audio processor, a DSP voice noise reduction algorithm, and an LD-2L filtering anti-interference device (which suppresses current noise) are used to apply voice noise reduction and filter noise reduction to every clip.
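The clip collection and noise reduction described in this step can be illustrated in software. The following is only a minimal sketch and not the patented implementation: the embodiment relies on dedicated hardware (the MINI DSP processor and the LD-2L device), whereas here a simple moving-average filter stands in for the noise reduction. The function names `split_into_clips` and `moving_average`, the 8000 Hz sample rate, the window size, and the synthetic test tone are all assumptions made for illustration.

```python
import math


def split_into_clips(samples, sample_rate, clip_seconds=1.0):
    """Split a mono signal into consecutive fixed-length clips.

    Any trailing samples that do not fill a whole clip are dropped.
    """
    clip_len = int(sample_rate * clip_seconds)
    if clip_len <= 0:
        raise ValueError("clip length must be positive")
    last_start = len(samples) - clip_len
    return [samples[i:i + clip_len] for i in range(0, last_start + 1, clip_len)]


def moving_average(samples, window=5):
    """Smooth a signal with a causal moving average.

    This is a crude low-pass filter used here only as a stand-in
    for the hardware noise reduction named in the text.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    out = []
    running = 0.0
    for i, x in enumerate(samples):
        running += x
        if i >= window:
            running -= samples[i - window]  # drop the sample leaving the window
        out.append(running / min(i + 1, window))
    return out


# Assumed sample rate and a synthetic 2.5 s tone in place of a real recording.
sample_rate = 8000
signal = [math.sin(2 * math.pi * 440 * n / sample_rate)
          for n in range(int(2.5 * sample_rate))]

# 1 s clips, each smoothed independently.
clips = [moving_average(clip) for clip in split_into_clips(signal, sample_rate)]
```

With a 2.5 s input, the sketch yields two complete 1 s clips and discards the remaining half second; a real pipeline might instead pad or overlap clips, which the source does not specify.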

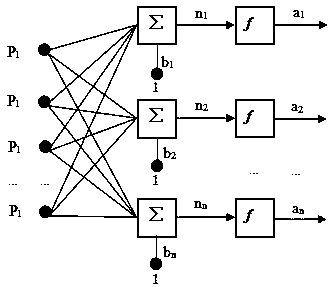

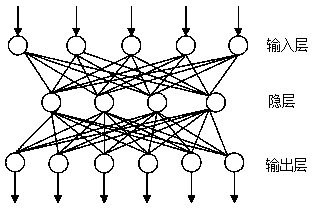

[0024] These sound signals need to be analyzed and processed before they are input into the BP ne...
