Method and system for optimizing the human-computer interaction interface of an intelligent cockpit based on three-way decisions

A human-computer interaction interface and cockpit technology, applied in the fields of user/computer interaction input/output, computer components, and mechanical mode conversion. It addresses the problems of low gesture recognition accuracy and slow recognition speed. Its effects are reduced interaction time, a comfortable interactive experience, and accurate gesture recognition.

Examples

Embodiment 1

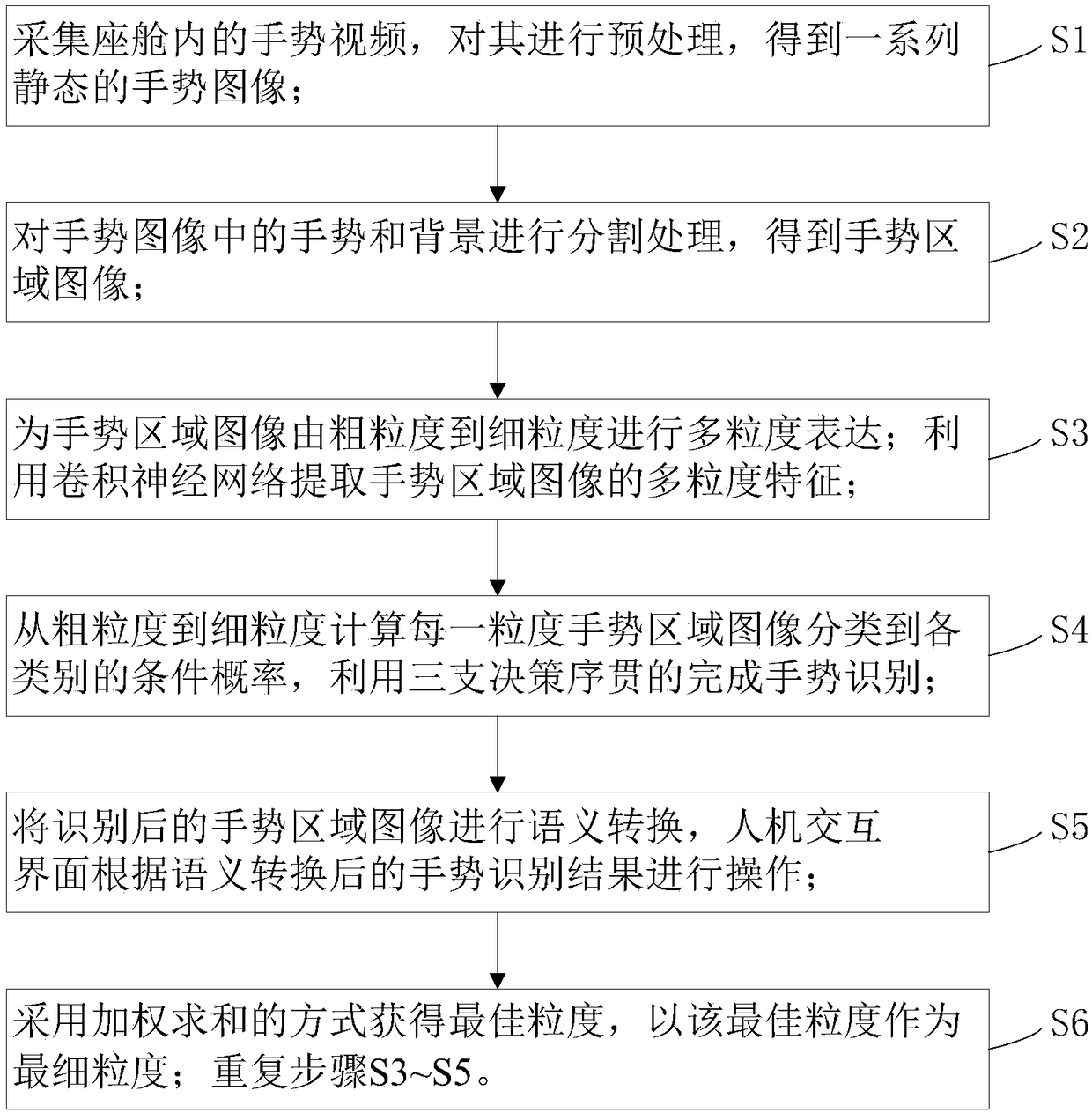

[0044] The present invention comprises the following steps:

[0045] S1. Collect gesture video in the cockpit, preprocess it, and obtain a static gesture image;

[0046] S2. Segment the gesture from the background in the gesture image to obtain a gesture area image;

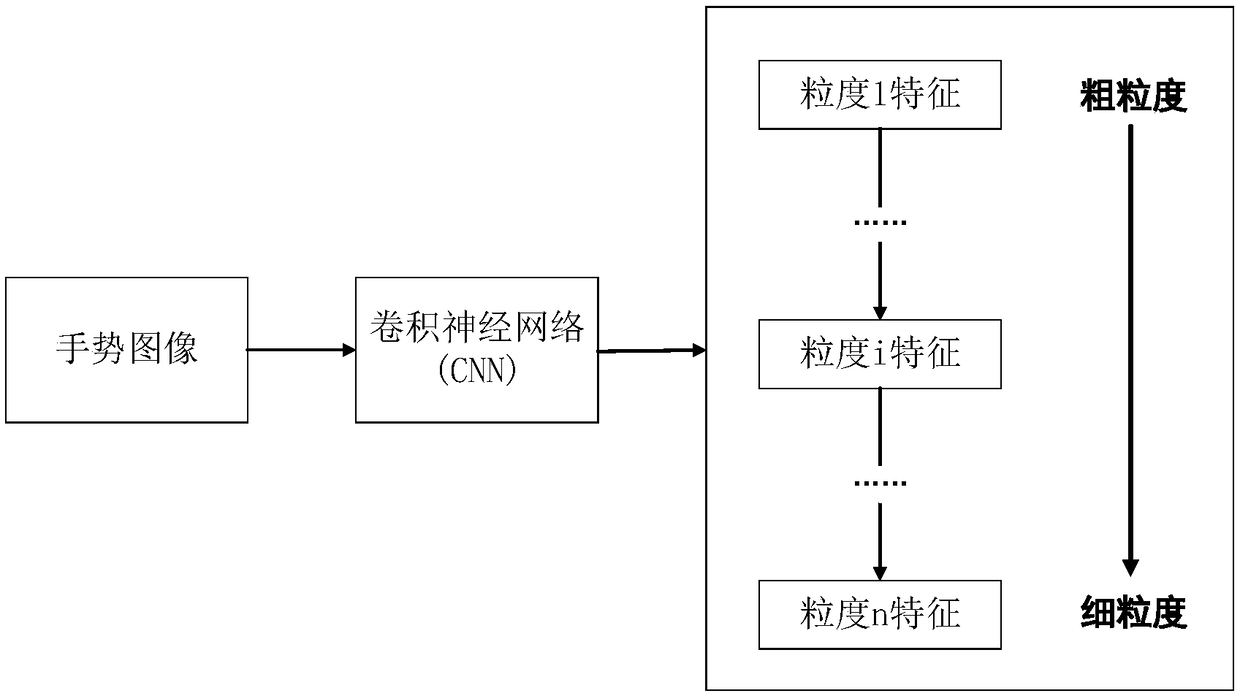

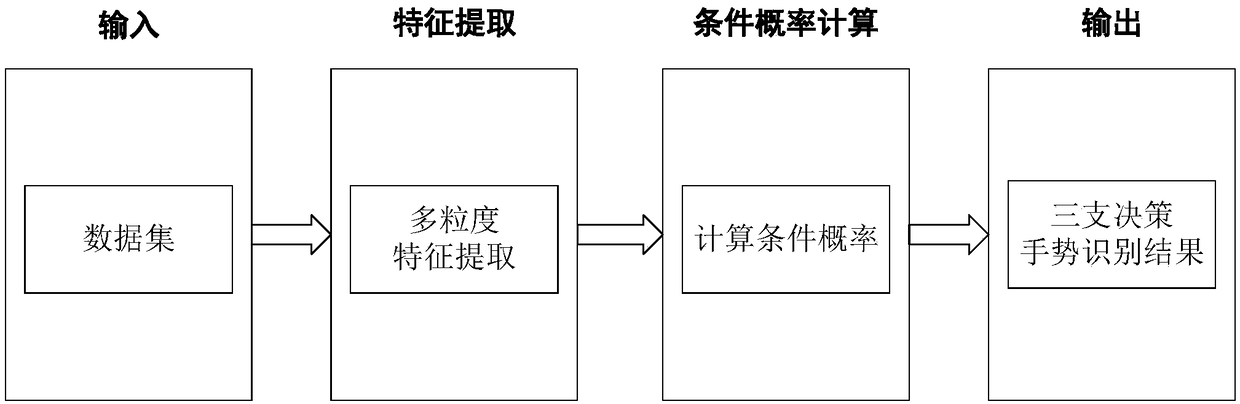

[0047] S3. Represent the gesture area image at multiple granularities, from coarse-grained to fine-grained; use a convolutional neural network to extract multi-granularity features of the gesture area image;

[0048] S4. Proceeding from coarse-grained to fine-grained, calculate the conditional probability of classifying the gesture area image at each granularity into each category, and apply three-way decisions sequentially to complete gesture recognition;

[0049] S5. Perform semantic conversion on the recognized gesture area image, and operate the human-computer interaction interface according to the gesture recognition result after semantic conversion;

[0050] The gesture region image is expressed in multip...
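The sequential decision in step S4 can be illustrated with a minimal sketch. The excerpt does not give the decision thresholds or the rule used at the finest granularity, so everything specific here is an assumption for illustration: the names `three_way_decision` and `sequential_recognition`, the thresholds `alpha` and `beta` (borrowed from the usual formulation of three-way, or "three-branch", decisions), and the fallback of taking the most probable class at the finest level. The conditional probabilities are assumed to come from the classifier at each granularity.

```python
from typing import List, Optional, Sequence, Tuple


def three_way_decision(probs: Sequence[float], alpha: float, beta: float) -> Tuple[str, int]:
    """Classify one granularity level into accept / reject / defer.

    alpha and beta are hypothetical thresholds (beta < alpha); the patent
    excerpt does not state their values.
    """
    best = max(range(len(probs)), key=lambda k: probs[k])
    p = probs[best]
    if p >= alpha:
        return "accept", best   # confident enough: recognize the gesture now
    if p <= beta:
        return "reject", best   # confident that no known gesture is present
    return "defer", best        # boundary region: look at a finer granularity


def sequential_recognition(
    probs_by_granularity: List[Sequence[float]],
    alpha: float = 0.8,
    beta: float = 0.2,
) -> Tuple[Optional[int], int]:
    """Walk from coarse to fine and stop at the first definite decision.

    Returns (class index or None if rejected, granularity level used).
    """
    if not probs_by_granularity:
        raise ValueError("at least one granularity level is required")
    if not beta < alpha:
        raise ValueError("beta must be smaller than alpha")

    best = 0
    for level, probs in enumerate(probs_by_granularity):
        region, best = three_way_decision(probs, alpha, beta)
        if region == "accept":
            return best, level
        if region == "reject":
            return None, level
    # Assumption: at the finest granularity, fall back to the most probable class.
    return best, len(probs_by_granularity) - 1
```

Stopping at the first level that yields a definite decision is what lets easy gestures be recognized from coarse features alone, while only ambiguous ones pay the cost of finer granularities.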

Embodiment 2

[0077] In addition to steps S1-S5, this embodiment adds step S6, which uses weighted summation to obtain the optimal granularity, takes the optimal granularity as the finest granularity, and repeats steps S3-S5.

[0078] The HMI optimization design method is shown in Figure 4. The final human-computer interaction interface optimization result for each granularity is obtained by weighted summation, which determines the optimal granularity of the gesture area image. The optimal granularity is then used as the finest granularity; for new gestures, the convolutional neural network extracts multi-granularity features and three-way decisions are made sequentially:

[0079] $\mathrm{Result} = w \times \mathrm{Acc} + (1 - w) \times \mathrm{Time}$

[0080] $\mathrm{Time} = T_1 + T_2$

[0081] where Result is the optimization result used to determine the optimal granularity of the gesture area image, Acc is the gesture recognition accuracy, Time is the time spent in the gesture recognition process, w indicates the ...
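The weighted summation of step S6 can be sketched as follows. The excerpt is truncated before it defines the weight, the two time components, or how the Time term is scaled, so several things are assumptions for illustration: the names `GranularityStats`, `weighted_result` and `select_granularity`, the restriction of the weight to the range 0 to 1, and the `higher_is_better` flag, which exists only because the excerpt does not say whether the largest or smallest Result is preferred.

```python
from dataclasses import dataclass
from typing import Dict, Hashable, Tuple


@dataclass
class GranularityStats:
    acc: float  # gesture recognition accuracy at this granularity
    t1: float   # first time component (meaning not given in the excerpt)
    t2: float   # second time component (meaning not given in the excerpt)


def weighted_result(stats: GranularityStats, w: float) -> float:
    """Result = w * Acc + (1 - w) * Time, with Time = T1 + T2."""
    if not 0.0 <= w <= 1.0:
        raise ValueError("w is assumed to lie in [0, 1]")
    time = stats.t1 + stats.t2
    return w * stats.acc + (1.0 - w) * time


def select_granularity(
    stats_by_level: Dict[Hashable, GranularityStats],
    w: float,
    higher_is_better: bool = True,  # assumption: selection direction is not stated
) -> Tuple[Hashable, Dict[Hashable, float]]:
    """Score every granularity and return the selected one with all scores."""
    if not stats_by_level:
        raise ValueError("no granularities to compare")
    scores = {level: weighted_result(s, w) for level, s in stats_by_level.items()}
    pick = max if higher_is_better else min
    return pick(scores, key=scores.get), scores
```

If Time is measured in raw seconds, it would likely need to be normalized or inverted before being combined with accuracy; the excerpt does not say how this is done, so the sketch leaves the inputs as given.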
