A semi-supervised network representation learning algorithm based on a deep compression autoencoder

An autoencoder-based network representation technique, applied in the field of network representation learning, that addresses limitations and related problems and improves generalization ability.



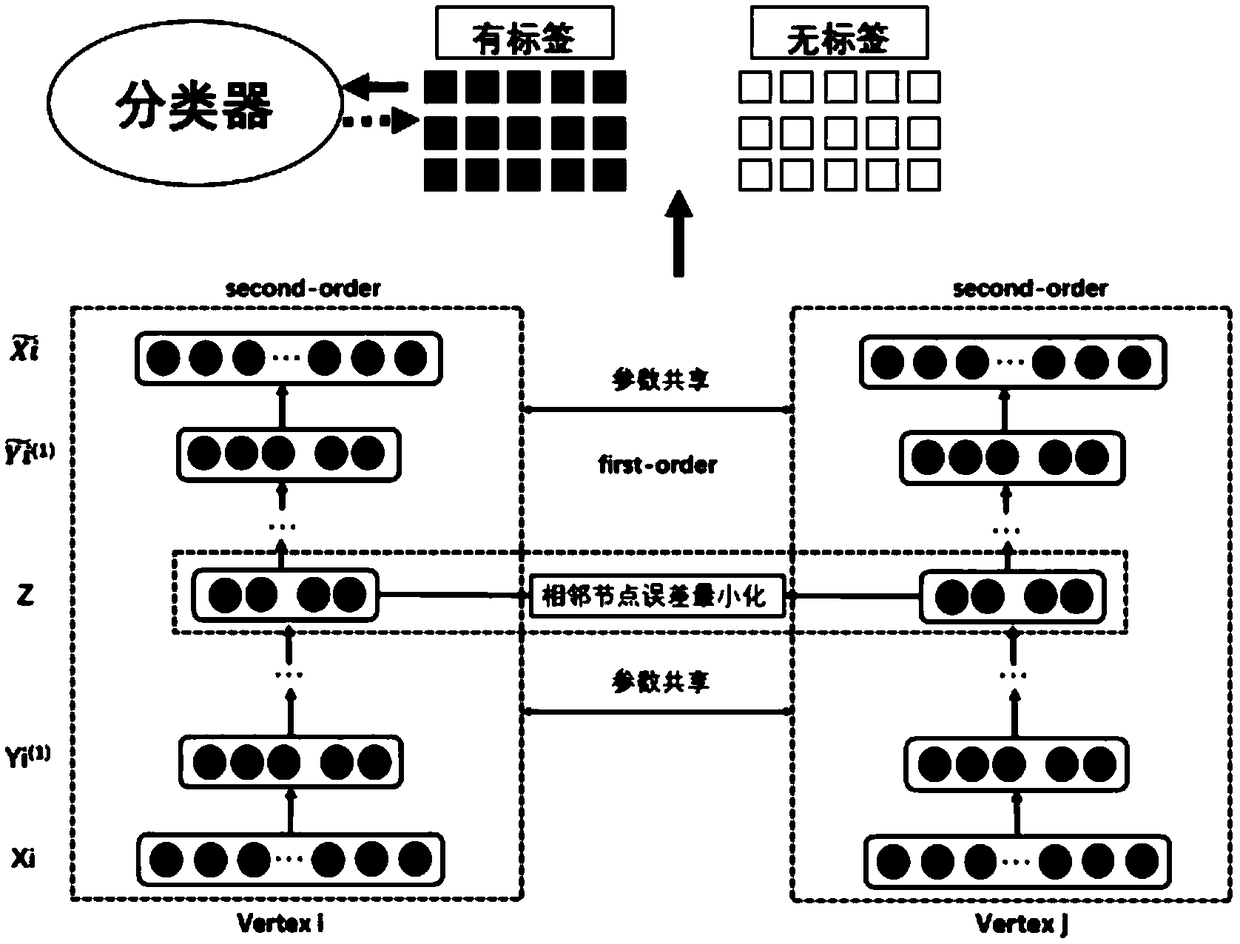

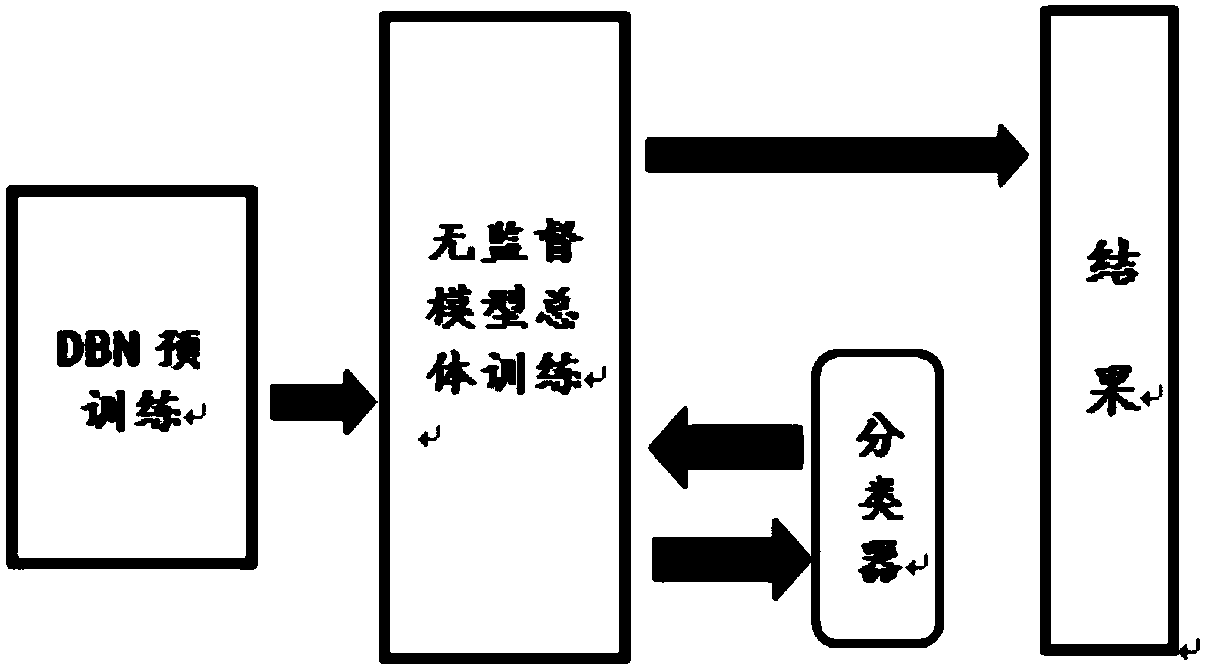


Examples

Embodiment 1

[0053] This embodiment runs on 64-bit Windows 10 with an E3 1231V3 CPU, 32 GB of memory, and an NVIDIA GTX970 GPU with 3.5 GB of memory. All algorithms are written in Python. The basic configuration is shown in Table 2:

[0054] Table 2 Experimental environment configuration

[0055]

[0056] The data set used is shown in Table 3:

[0057] Table 3 Data set

[0058]

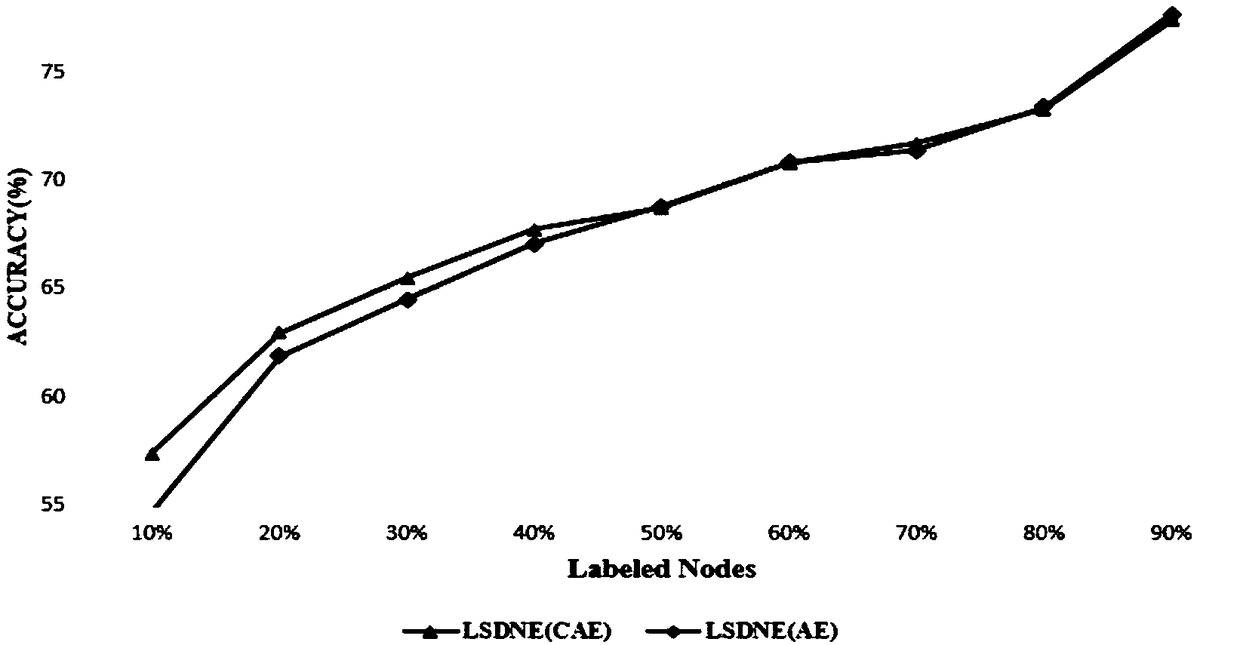

[0059] In this embodiment, the test set occupies 10% to 90% of the total data set. Once the node representation vectors of the network are obtained, they are used as input to a multi-class logistic regression classifier that labels the nodes. The evaluation metric is the micro-averaged F1 score (Micro-F1), and each reported result is the average of 10 classification runs. Baseline values are the best results reported in the respective papers. The baseline algorithms used for comparison are as follows:

[0060] ●De...
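The evaluation protocol described in [0059] can be sketched roughly as follows. This is an illustrative sketch, not the embodiment's actual code. The synthetic two-dimensional "embeddings", the plain gradient-descent softmax classifier, and all function names (`micro_f1`, `train_softmax`, `evaluate`) are assumptions introduced here. It also assumes each node carries a single label, in which case Micro-F1 coincides with accuracy.

```python
import math
import random


def micro_f1(y_true, y_pred):
    """Micro-averaged F1: pool TP, FP and FN over all classes."""
    tp = fp = fn = 0
    for c in set(y_true) | set(y_pred):
        for t, p in zip(y_true, y_pred):
            if p == c and t == c:
                tp += 1
            elif p == c:
                fp += 1
            elif t == c:
                fn += 1
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def softmax_probs(W, x):
    """Class probabilities for one sample; the last entry of each row is the bias."""
    scores = [sum(w * v for w, v in zip(row, x)) + row[-1] for row in W]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def train_softmax(X, y, n_classes, lr=0.5, epochs=100):
    """Multi-class logistic regression fitted by batch gradient descent."""
    dim = len(X[0])
    W = [[0.0] * (dim + 1) for _ in range(n_classes)]
    for _ in range(epochs):
        grad = [[0.0] * (dim + 1) for _ in range(n_classes)]
        for x, label in zip(X, y):
            probs = softmax_probs(W, x)
            for c in range(n_classes):
                err = probs[c] - (1.0 if c == label else 0.0)
                for j in range(dim):
                    grad[c][j] += err * x[j]
                grad[c][dim] += err
        for c in range(n_classes):
            for j in range(dim + 1):
                W[c][j] -= lr * grad[c][j] / len(X)
    return W


def predict(W, X):
    """Return the most probable class index for each sample."""
    preds = []
    for x in X:
        probs = softmax_probs(W, x)
        preds.append(probs.index(max(probs)))
    return preds


def evaluate(X, y, n_classes, test_ratio, n_runs=10, seed=0):
    """Average Micro-F1 over n_runs random train/test splits."""
    rng = random.Random(seed)
    scores = []
    for _ in range(n_runs):
        idx = list(range(len(X)))
        rng.shuffle(idx)
        n_test = int(round(test_ratio * len(X)))
        test, train = idx[:n_test], idx[n_test:]
        W = train_softmax([X[i] for i in train], [y[i] for i in train], n_classes)
        y_pred = predict(W, [X[i] for i in test])
        scores.append(micro_f1([y[i] for i in test], y_pred))
    return sum(scores) / len(scores)


# Hypothetical stand-in for learned node vectors: three well-separated clusters.
data_rng = random.Random(42)
X, y = [], []
for label in range(3):
    angle = 2 * math.pi * label / 3
    for _ in range(20):
        X.append([2 * math.cos(angle) + data_rng.gauss(0, 0.3),
                  2 * math.sin(angle) + data_rng.gauss(0, 0.3)])
        y.append(label)

# Sweep the test-set share from 10% to 90%, averaging 10 runs per setting.
for k in range(1, 10):
    ratio = k / 10
    print(f"test ratio {ratio:.1f}: Micro-F1 = {evaluate(X, y, 3, ratio):.3f}")
```

In practice the toy vectors would be replaced by the node representation vectors produced by the representation learning step, and a library classifier would likely be preferable to the hand-written one shown here.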
