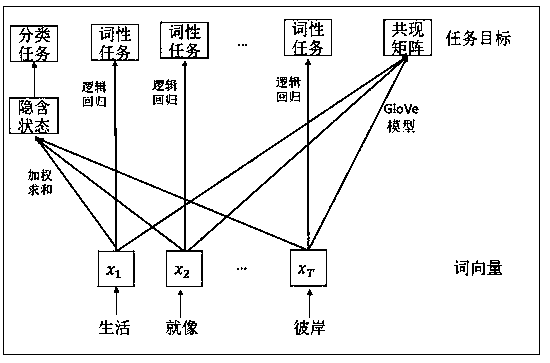

A method of generating a word vector from a multi-task model

This multi-task-model word vector technology, applied in neural learning methods, biological neural network models, special data processing applications, and related fields, addresses the problem that word vectors carry limited information. It achieves strong generalization ability, maintains high efficiency, and improves the quality and versatility of the resulting word vectors.

AI Technical Summary

Problems solved by technology

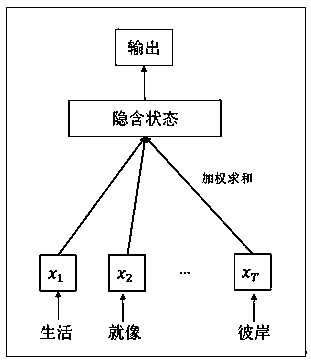

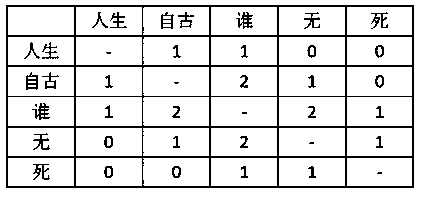

Embodiment Construction

[0026] The accompanying drawings are for illustrative purposes only and should not be construed as limiting this patent. To better illustrate this embodiment, certain components in the drawings may be omitted, enlarged, or reduced, and they do not represent the size of the actual product. Those skilled in the art will understand that some well-known structures and their descriptions may be omitted from the drawings. The positional relationships shown in the drawings are likewise for illustrative purposes only and should not be construed as limiting this patent.

[0027] In step S1, the present invention requires a large text corpus as training data. It mainly uses open-source Chinese Wikipedia data. The unsupervised tasks can be trained on this data directly, without labels, and the part-of-speech tagging annotations can be obtained by running existing open-source tools on the Wikipedia data, and...
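The data preparation in step S1 can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: the source does not say which unsupervised objective is used, so context prediction within a fixed window is assumed here; `toy_tagger` is a hypothetical stand-in for an existing open-source part-of-speech tagger; the names `context_pairs`, `pos_examples`, and `build_examples` are invented for this example; and the input is assumed to be already segmented into tokens.

```python
from typing import Callable, Dict, List, Tuple

Token = str
Tagger = Callable[[List[Token]], List[str]]


def context_pairs(tokens: List[Token], window: int = 2) -> List[Tuple[Token, Token]]:
    """Build (center, context) pairs; this task needs no labels.

    Assumption: a window-based context-prediction objective stands in for
    the unsupervised task, which the source does not specify.
    """
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


def pos_examples(tokens: List[Token], tagger: Tagger) -> List[Tuple[Token, str]]:
    """Label each token with the tag predicted by an existing tagger."""
    tags = tagger(tokens)
    if len(tags) != len(tokens):
        raise ValueError("tagger must return exactly one tag per token")
    return list(zip(tokens, tags))


def toy_tagger(tokens: List[Token]) -> List[str]:
    """Hypothetical placeholder for an open-source part-of-speech tagger."""
    lexicon = {"我": "r", "喜欢": "v", "自然": "n", "语言": "n"}
    return [lexicon.get(token, "x") for token in tokens]


def build_examples(
    sentences: List[List[Token]], tagger: Tagger, window: int = 2
) -> Dict[str, list]:
    """Derive training examples for both tasks from the same corpus."""
    unsupervised, supervised = [], []
    for tokens in sentences:
        unsupervised.extend(context_pairs(tokens, window))
        supervised.extend(pos_examples(tokens, tagger))
    return {"context": unsupervised, "pos": supervised}


if __name__ == "__main__":
    corpus = [["我", "喜欢", "自然", "语言"]]
    data = build_examples(corpus, toy_tagger, window=1)
    print(len(data["context"]), "context pairs")
    print(data["pos"])
```

Both example sets come from the same sentences, so a single corpus can feed the label-free task and the tagging task. In practice the placeholder tagger would be replaced by a real tool run over the Wikipedia text.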
