A natural language emotion analysis method based on a deep network

A natural language sentiment analysis technique based on a deep network. It addresses the problem that existing approaches do not consider the semantic connection between the object word and its context.

Examples

Embodiment 1

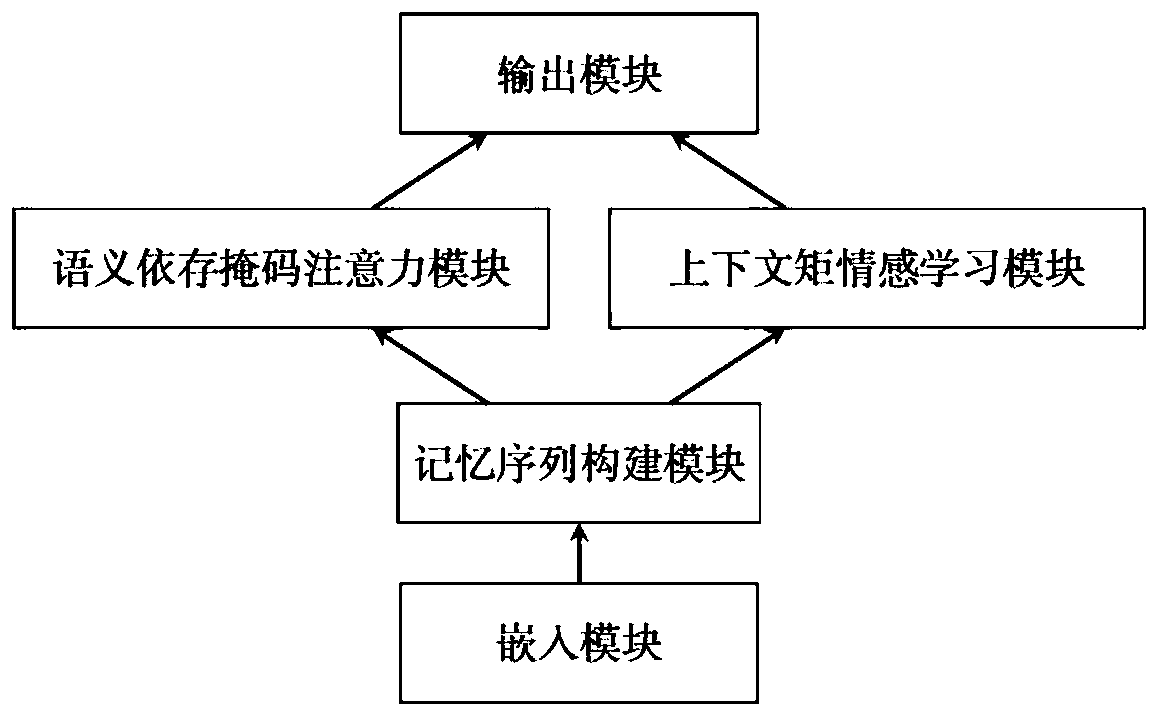

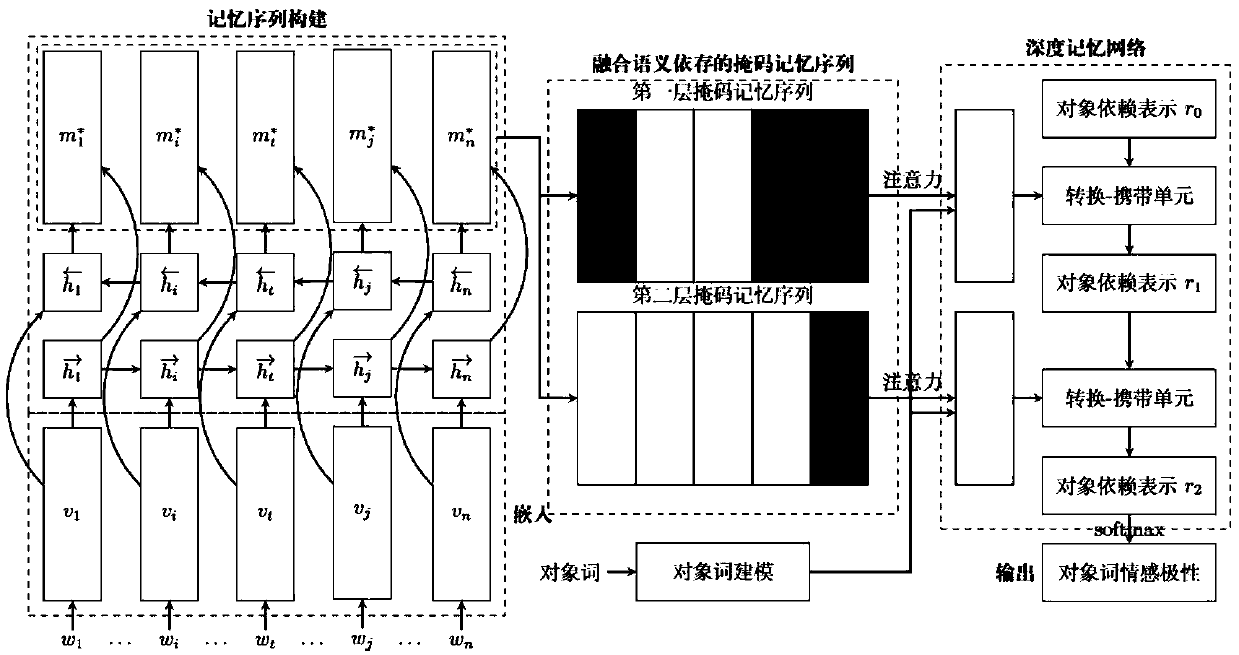

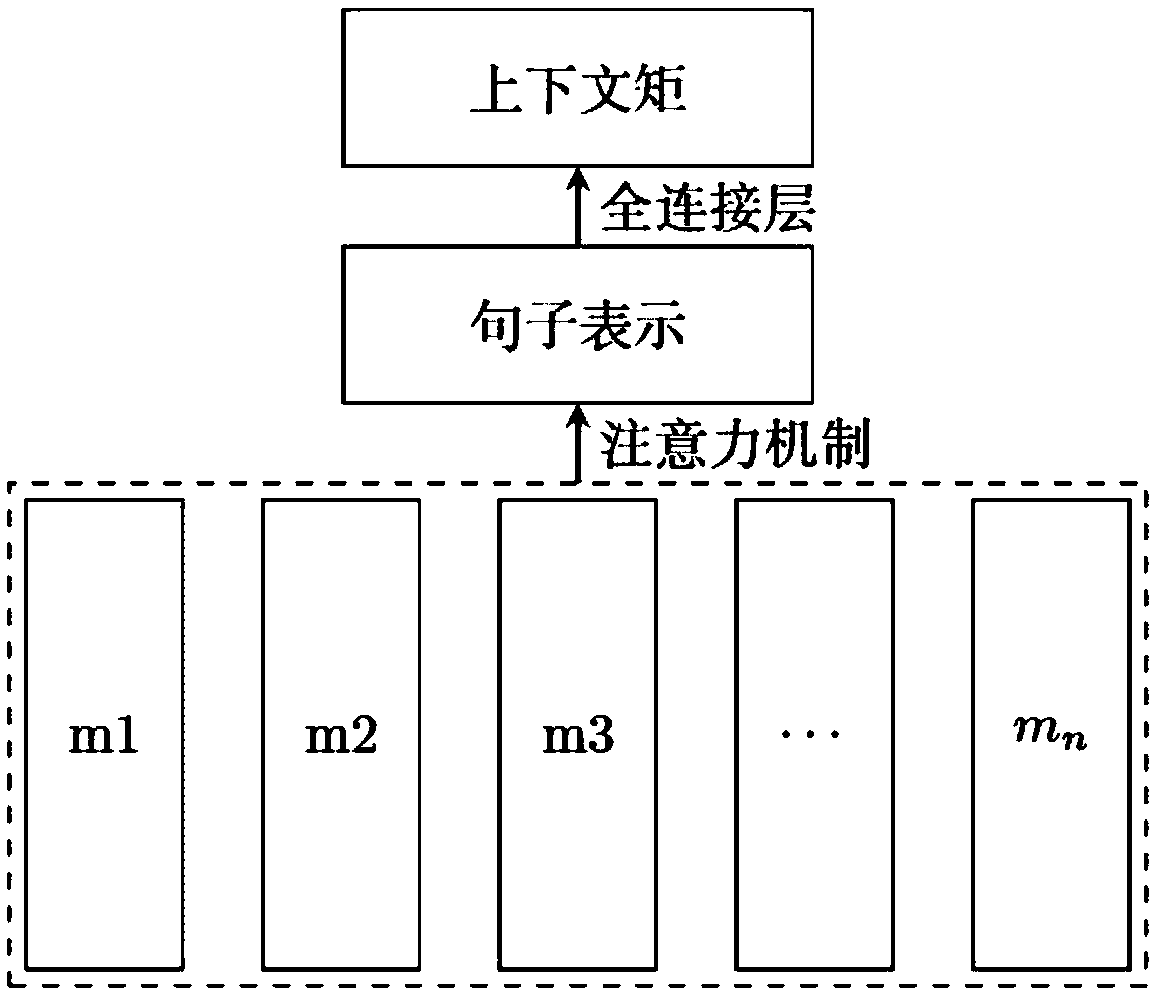

[0056] As shown in Figures 1 to 3, a deep network-based natural language sentiment analysis method includes an embedding module, a memory sequence construction module, a semantic dependency mask attention module, a context moment emotion learning module, and an output module;

[0057] The embedding module uses an embedding lookup table pre-trained by an unsupervised method to convert the words in the corpus into their corresponding word vectors; out-of-vocabulary words that do not exist in the lookup table are randomly initialized from a Gaussian distribution as low-dimensional word embeddings;
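The lookup behaviour of the embedding module can be illustrated with a minimal sketch. The function name `embed`, the standard deviation `std`, and the choice to cache the vector drawn for an out-of-vocabulary word (so that repeated occurrences share one embedding) are assumptions made for illustration; the description does not specify them.

```python
import random


def embed(tokens, lookup, dim, rng, std=0.1):
    """Map tokens to word vectors using a pre-trained lookup table.

    Words missing from `lookup` get a vector sampled from a Gaussian
    distribution. Caching that vector in `lookup` is an assumption of
    this sketch, not something stated in the description.
    """
    vectors = []
    for tok in tokens:
        if tok not in lookup:
            # Out-of-vocabulary word: random Gaussian initialization.
            lookup[tok] = [rng.gauss(0.0, std) for _ in range(dim)]
        vectors.append(lookup[tok])
    return vectors


# Hypothetical 3-dimensional "pre-trained" table for demonstration only.
pretrained = {"food": [0.2, -0.1, 0.4], "service": [0.0, 0.3, -0.2]}
sequence = embed(["food", "dreadful", "service", "dreadful"],
                 pretrained, dim=3, rng=random.Random(0))
```

In practice the pre-trained table would come from an unsupervised embedding method, and `dim` would match the dimensionality of that table.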

[0058] The memory sequence construction module converts the embedding sequence obtained by the embedding module into a memory sequence through a bidirectional long short-term memory (BiLSTM) unit; the converted memory sequence contains one memory vector per position, n in total, where n is the sequence length;
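As a rough sketch of how a bidirectional LSTM turns an embedding sequence into a memory sequence of the same length, the following pure-Python code runs one LSTM left to right and a second one right to left, then concatenates the two hidden states at each position. All names (`init_lstm`, `lstm_step`, `bilstm_memory`), the weight initialization, and the dimensions are assumptions for illustration; this is the standard LSTM formulation, not necessarily the exact unit used in the patent.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def init_lstm(input_dim, hidden_dim, rng):
    """Random weights for the input (i), forget (f), candidate (g), output (o) gates."""
    def mat():
        return [[rng.gauss(0.0, 0.1) for _ in range(input_dim + hidden_dim)]
                for _ in range(hidden_dim)]
    return {g: (mat(), [0.0] * hidden_dim) for g in "ifgo"}


def lstm_step(params, x, h, c):
    z = x + h  # concatenate the input with the previous hidden state

    def gate(name, act):
        W, b = params[name]
        return [act(a + bi) for a, bi in zip(matvec(W, z), b)]

    i, f = gate("i", sigmoid), gate("f", sigmoid)
    g, o = gate("g", math.tanh), gate("o", sigmoid)
    c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
    h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
    return h_new, c_new


def run_lstm(params, seq, hidden_dim):
    h, c = [0.0] * hidden_dim, [0.0] * hidden_dim
    states = []
    for x in seq:
        h, c = lstm_step(params, x, h, c)
        states.append(h)
    return states


def bilstm_memory(seq, fwd, bwd, hidden_dim):
    """Return n memory vectors, each the forward and backward states concatenated."""
    forward = run_lstm(fwd, seq, hidden_dim)
    backward = run_lstm(bwd, seq[::-1], hidden_dim)[::-1]
    return [hf + hb for hf, hb in zip(forward, backward)]


rng = random.Random(0)
embeddings = [[rng.gauss(0.0, 1.0) for _ in range(4)] for _ in range(5)]  # n = 5
memory = bilstm_memory(embeddings, init_lstm(4, 3, rng), init_lstm(4, 3, rng), 3)
```

Each entry of `memory` sees context from both directions, which is why the memory sequence keeps the length n of the input while doubling the hidden dimension.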

[0059] The semantic dependence mask attention module extracts semantic dependence information ...

Embodiment 2

[0094] In this example,

[0095] The input is the sentence "Great food but the service was dreadful!" with the object words "food" and "service".

[0096] Results: The RAM model judged both object words in this sentence as having positive sentiment, while the DMMN-SDCM model correctly identified the respective emotional polarities of the two object words.

[0097] Analysis: Since the DMMN-SDCM model replaces the traditional text distance information with semantic dependency information, the model can judge that the context word "dreadful" has a deeper impact on the object word "service" than the context word "Great" does, which is more helpful for judging the emotional polarity of the object word. In addition, due to the introduction of the context moment learning task, the model can learn the relationship between the object words "food" and "service" (that is, the contrastive relationship) at the same time as it constructs the context memory sequence, so as to construct a more scientific memor...
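To make the contrast between text distance and semantic dependency information concrete, the sketch below compares the linear token distance with the path length in a dependency tree for the example sentence. The edge list `EDGES` is hand-written for illustration and is not the output of any parser, and treating "semantic dependency information" as tree distance is an assumption; the text above does not specify how the DMMN-SDCM model computes it.

```python
from collections import deque

TOKENS = ["Great", "food", "but", "the", "service", "was", "dreadful"]

# Hypothetical, hand-written dependency edges as (head index, dependent index).
EDGES = [(1, 0),  # food -> Great
         (1, 2),  # food -> but
         (2, 6),  # but -> dreadful
         (6, 4),  # dreadful -> service
         (4, 3),  # service -> the
         (6, 5)]  # dreadful -> was


def tree_distances(n, edges, source):
    """Breadth-first search over the undirected dependency tree."""
    adj = [[] for _ in range(n)]
    for a, b in edges:
        adj[a].append(b)
        adj[b].append(a)
    dist = [None] * n
    dist[source] = 0
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if dist[v] is None:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def linear_distances(n, source):
    return [abs(i - source) for i in range(n)]


service = TOKENS.index("service")
great, dreadful = TOKENS.index("Great"), TOKENS.index("dreadful")
dep = tree_distances(len(TOKENS), EDGES, service)
lin = linear_distances(len(TOKENS), service)
print("tree:  ", dep[great], dep[dreadful])
print("linear:", lin[great], lin[dreadful])
```

Under these assumed edges, "dreadful" is directly attached to "service" in the tree while "Great" is several hops away, so a dependency-based weighting separates the two context words more sharply than position in the sentence does.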
