Real-time action recognition method, device, and electronic equipment

A real-time action recognition technology, applied in the field of biometric identification, that addresses problems such as slow recognition speed.

Examples

Embodiment 1

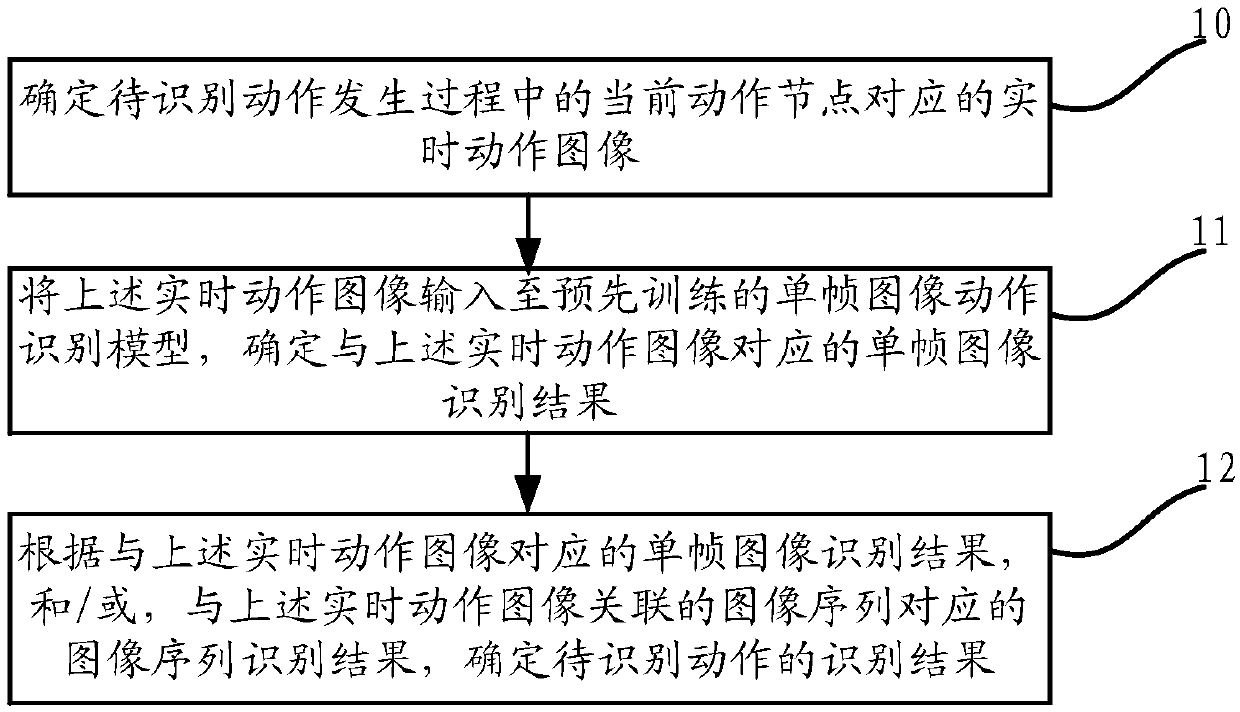

[0072] This embodiment provides a real-time action recognition method. As shown in Figure 1, the method includes Steps 10 to 12.

[0073] Step 10, determine the real-time action image corresponding to the current action node during the action to be recognized.

[0074] A human action consists of a series of sequential process actions, and each process action can be regarded as an action node of the action. For example, when a "falling action" occurs, process actions such as "body leaning", "hand raising", and "falling to the ground" occur in sequence, and each of these process actions is regarded as an action node of the "falling action". Actions differ in complexity, so the number of action nodes constituting an action also differs. For example, for an action of "raise hand", the action nodes constituting the action may only include an act...
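
The notion of action nodes can be made concrete with a small data structure. The following Python sketch is illustrative only: the `Action` class and its `node_index` helper are hypothetical names that do not appear in the source, and only the node names of the falling example come from the text.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Action:
    """An action described as an ordered sequence of action nodes (hypothetical type)."""
    name: str
    nodes: Tuple[str, ...]  # process actions, in order of occurrence

    def node_index(self, node: str) -> int:
        """Return the position of a process action within the action."""
        return self.nodes.index(node)


# Node names taken from the falling example in the text.
falling = Action("falling", ("body leaning", "hand raising", "falling to the ground"))

print(len(falling.nodes))
print(falling.node_index("hand raising"))
```

A more complex action would simply carry a longer `nodes` tuple, which mirrors the point that the number of action nodes varies with the complexity of the action.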

Embodiment 2

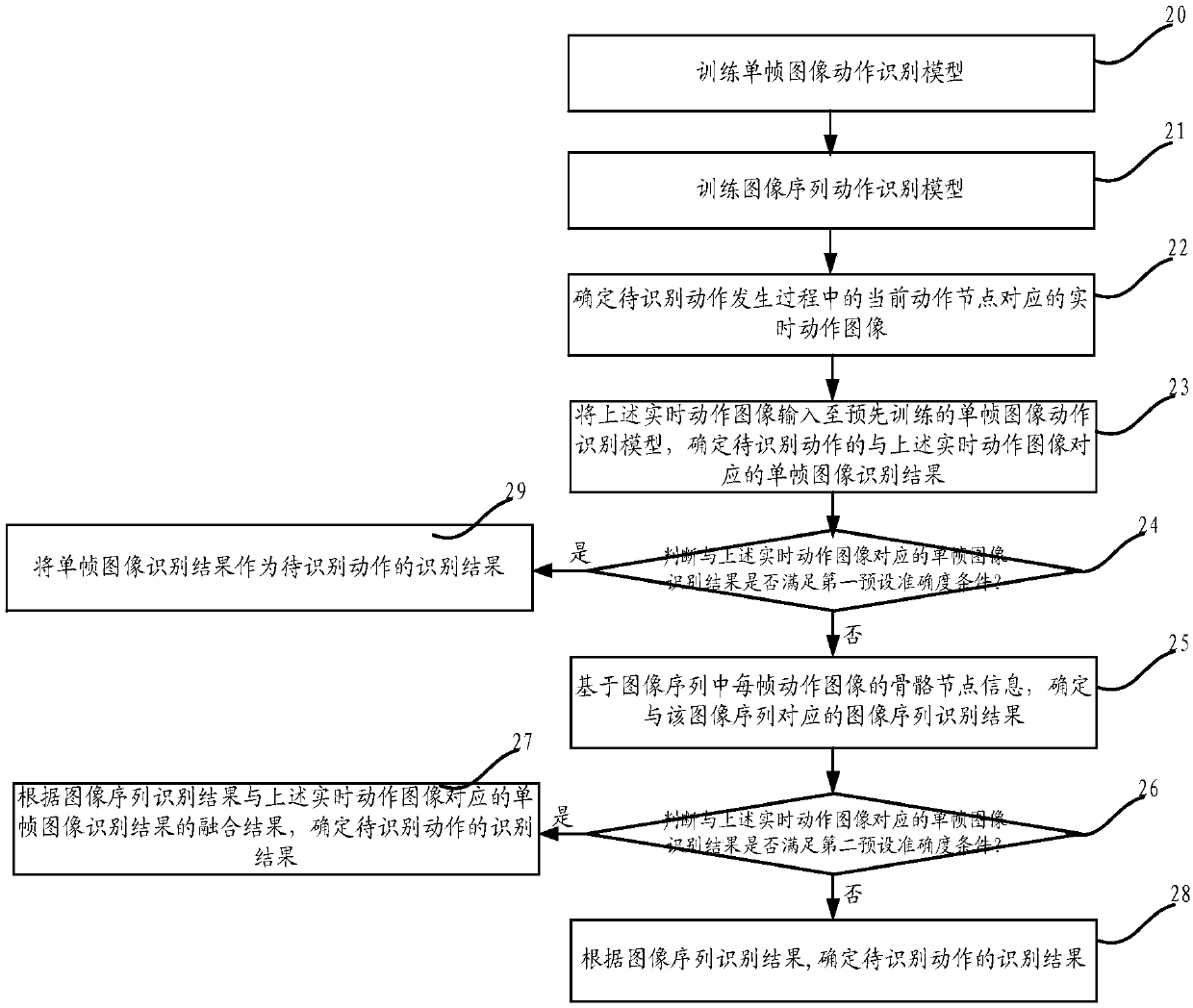

[0088] This embodiment provides a real-time action recognition method. As shown in Figure 2, the method includes Steps 20 to 29.

[0089] Step 20, training a single-frame image action recognition model.

[0090] In some embodiments of the present application, before the step of inputting the real-time action image into the pre-trained single-frame image action recognition model and determining the single-frame image recognition result corresponding to the real-time action image of the action to be recognized, the method further includes: training a single-frame image action recognition model.

[0091] In a specific implementation, training the single-frame image action recognition model includes: obtaining a sample image set composed of several action images corresponding to at least one iconic action node in the occurrence of each preset action; and performing network training on the sample image set to obtain the single-frame image action recognition model. The preset action in the embodiment of...
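
As a rough illustration of the sample image set described above, the sketch below collects images per iconic action node and flattens them into labelled training pairs. The function name `build_sample_set`, the nested dictionary layout, the placeholder file names, and the choice to label each image with its action name are all assumptions; the source does not specify the labelling scheme or the network used for training.

```python
from typing import Dict, List, Tuple

# Hypothetical layout: action -> iconic action node -> image file paths.
NodeImages = Dict[str, Dict[str, List[str]]]


def build_sample_set(images: NodeImages) -> List[Tuple[str, str]]:
    """Flatten per-node images into (image_path, action_label) pairs.

    Raises ValueError if a preset action has no image for any iconic node,
    since the text requires at least one iconic action node per action.
    """
    samples: List[Tuple[str, str]] = []
    for action, nodes in images.items():
        paths = [p for node_paths in nodes.values() for p in node_paths]
        if not paths:
            raise ValueError(f"no sample images for action {action!r}")
        # Assumed labelling: every node image is labelled with its action.
        samples.extend((p, action) for p in paths)
    return samples


# Placeholder file names, for illustration only.
sample_set = build_sample_set({
    "falling": {
        "body leaning": ["fall_lean_01.png", "fall_lean_02.png"],
        "falling to the ground": ["fall_ground_01.png"],
    },
})
```

The resulting pairs could then be fed to whichever image classifier is used for the single-frame model; that training step is left out here because the source text is truncated before describing it.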

Embodiment 3

[0147] Correspondingly, as shown in Figure 4, the present application also discloses a real-time action recognition device, the device comprising:

[0148] The real-time action image determination module 41 is used to determine the real-time action image corresponding to the current action node during the action to be recognized;

[0149] A single-frame image recognition module 42, configured to input the real-time action image into a pre-trained single-frame image action recognition model, and determine a single-frame image recognition result corresponding to the real-time action image;

[0150] A to-be-recognized action recognition result determination module 43, configured to determine the recognition result of the action to be recognized;

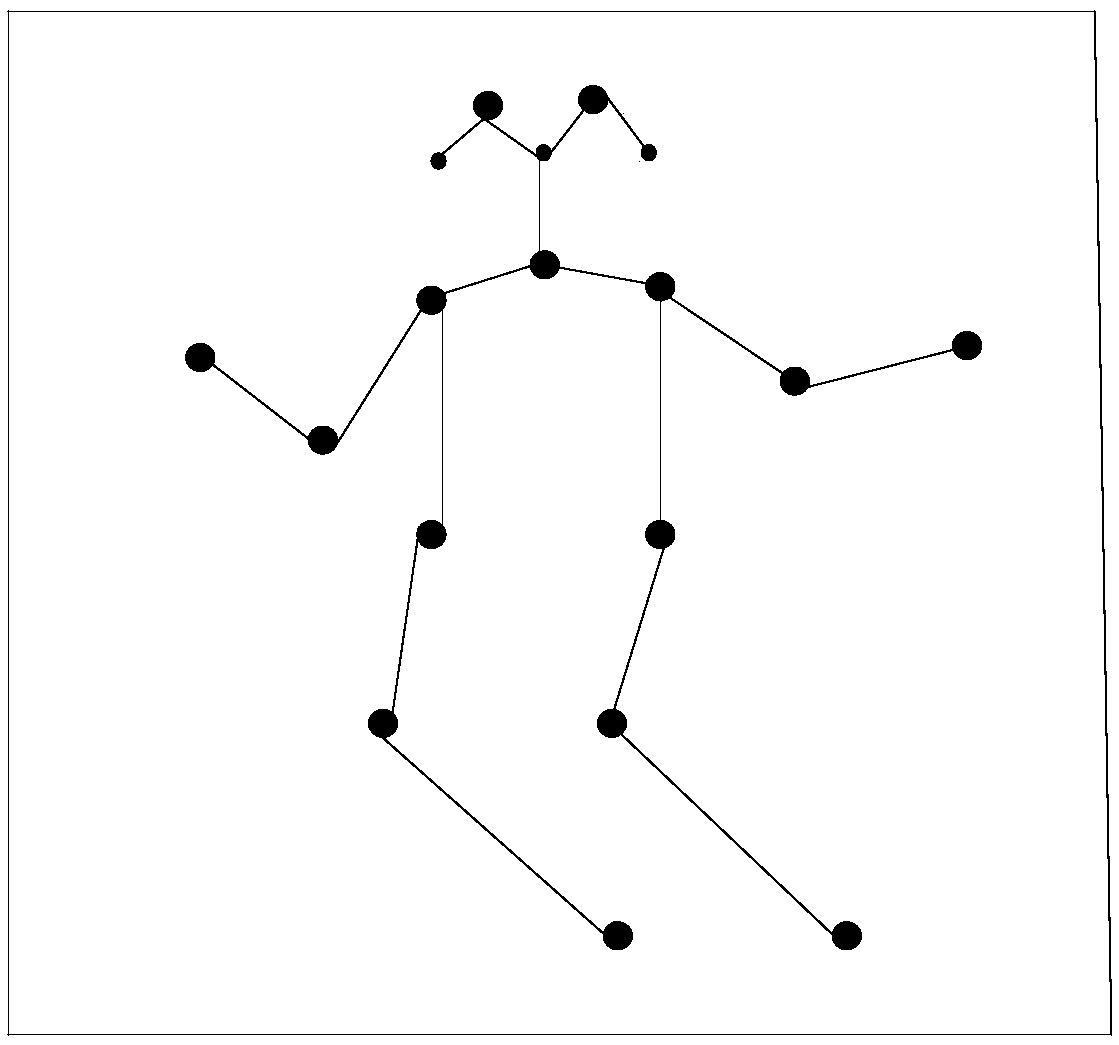

[0151] Wherein, the image sequence associated with the real-time action image consists of the real-time action images corresponding to the preset number of action nodes before the current action node corresponding ...
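
The interplay of the three modules can be sketched as a small pipeline. This is a minimal illustration under stated assumptions: the class name `RealTimeRecognizer`, the stand-in classifier, the inclusion of the current frame in the sequence, and the majority-vote rule for combining single-frame results are not taken from the source, which does not show how module 43 aggregates the sequence.

```python
from collections import Counter, deque
from typing import Callable, Deque, Optional


class RealTimeRecognizer:
    """Hypothetical sketch of modules 41-43 working together."""

    def __init__(self, classify_frame: Callable[[object], str], window: int = 3):
        # Stands in for the pre-trained single-frame model (module 42).
        self.classify_frame = classify_frame
        # Results for the preset number of preceding action nodes plus the
        # current one (including the current node is an assumption).
        self.results: Deque[str] = deque(maxlen=window + 1)

    def step(self, frame: object) -> Optional[str]:
        """Process the real-time image of the current action node (module 41 supplies it)."""
        self.results.append(self.classify_frame(frame))
        if len(self.results) < self.results.maxlen:
            return None  # not enough preceding action nodes yet
        # Module 43 (assumed rule): majority vote over the sequence.
        label, _ = Counter(self.results).most_common(1)[0]
        return label


# Stand-in classifier: frames are already label strings here.
recognizer = RealTimeRecognizer(classify_frame=lambda f: str(f), window=2)
outputs = [recognizer.step(f) for f in ["fall", "stand", "fall", "fall"]]
```

Using a bounded `deque` keeps only the most recent results, so each new frame costs one single-frame classification plus a vote over a short window.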
