A compression method of a deep neural network

A deep neural network compression technique, applicable to neural learning methods, biological neural network models, neural architectures, and related fields. It addresses the failure of existing methods to consider weight correlation, which leads to low accuracy after compression and poor compression results, and it reduces computation and memory usage while achieving a high compression ratio.


Embodiment Construction

[0048] To make the objectives, technical solution, and advantages of the present invention clearer, the invention is described in further detail below with reference to the accompanying drawings.

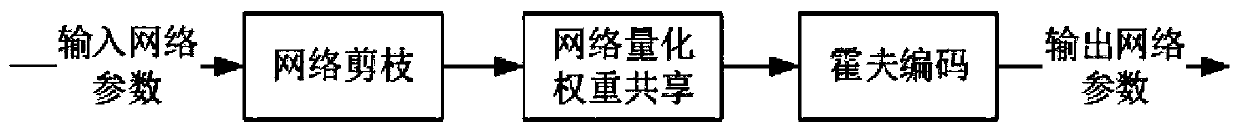

[0049] In this embodiment, as shown in Figure 1, the present invention provides a compression method for a deep neural network, comprising the following steps:

[0050] S100, network parameter pruning: prune the network by deleting redundant connections and retaining the connections that carry the most information;

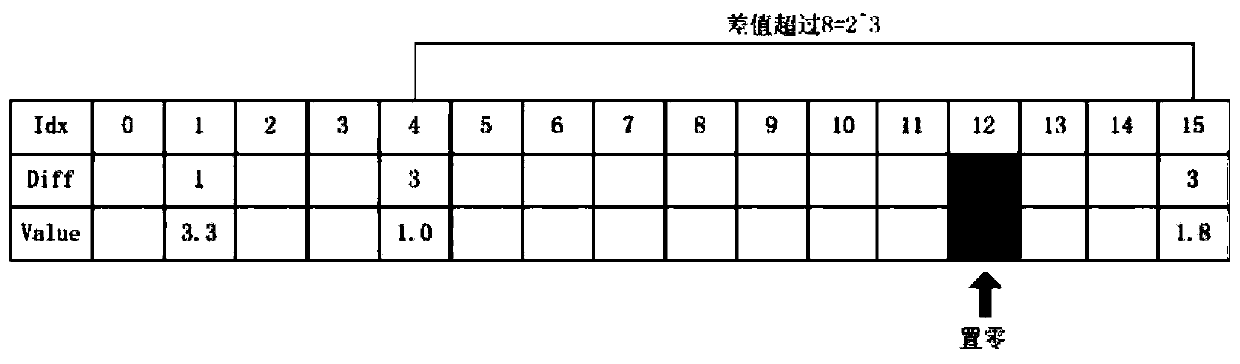

[0051] S200, trained quantization and weight sharing: quantize the weights so that multiple connections share the same weight value, and store the effective weights and their indices;

[0052] S300, exploiting the biased (non-uniform) distribution of the effective weights, apply Huffman coding to obtain the compressed network.
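Step S200 can be illustrated with a minimal sketch, assuming the weights are shared by 1-D k-means clustering with linearly spaced initial centroids, a common choice in pruning, quantization, and Huffman-coding pipelines; the excerpt does not specify the clustering method. The names `share_weights` and `_nearest` and the parameters `n_clusters` and `n_iter` are hypothetical. Zeros are treated as pruned connections. Only the small codebook and the per-weight cluster indices need to be stored. The retraining of shared centroids implied by "trained quantization" is not shown.

```python
from typing import List, Tuple


def _nearest(w: float, codebook: List[float]) -> int:
    """Index of the centroid closest to w (ties go to the lower index)."""
    return min(range(len(codebook)), key=lambda k: abs(w - codebook[k]))


def share_weights(weights: List[float], n_clusters: int = 4,
                  n_iter: int = 20) -> Tuple[List[float], List[int]]:
    """Cluster the nonzero (surviving) weights with 1-D k-means.

    Returns (codebook, indices): codebook[k] is the value shared by cluster k,
    and indices[i] is the cluster of the i-th nonzero weight, in input order.
    Zeros are treated as pruned connections and are not clustered.
    """
    if n_clusters < 1:
        raise ValueError("n_clusters must be >= 1")
    nonzero = [w for w in weights if w != 0.0]
    if not nonzero:
        return [], []
    lo, hi = min(nonzero), max(nonzero)
    # Assumption: linear initialization, centroids evenly spaced over [lo, hi].
    step = (hi - lo) / (n_clusters - 1) if n_clusters > 1 else 0.0
    codebook = [lo + k * step for k in range(n_clusters)]
    for _ in range(n_iter):
        # Assignment step: each weight joins its nearest centroid.
        indices = [_nearest(w, codebook) for w in nonzero]
        # Update step: each centroid moves to the mean of its members.
        for k in range(n_clusters):
            members = [w for w, c in zip(nonzero, indices) if c == k]
            if members:
                codebook[k] = sum(members) / len(members)
    # Final assignment against the converged codebook.
    indices = [_nearest(w, codebook) for w in nonzero]
    return codebook, indices


weights = [0.0, 1.0, 1.1, 0.9, -2.0, -2.1, 0.0, 3.0]
codebook, indices = share_weights(weights, n_clusters=3)
```

With three clusters the six surviving weights settle into centroids near -2.05, 1.0, and 3.0, with indices `[1, 1, 1, 0, 0, 2]`, so each weight can be stored as a 2-bit index into a three-entry codebook.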

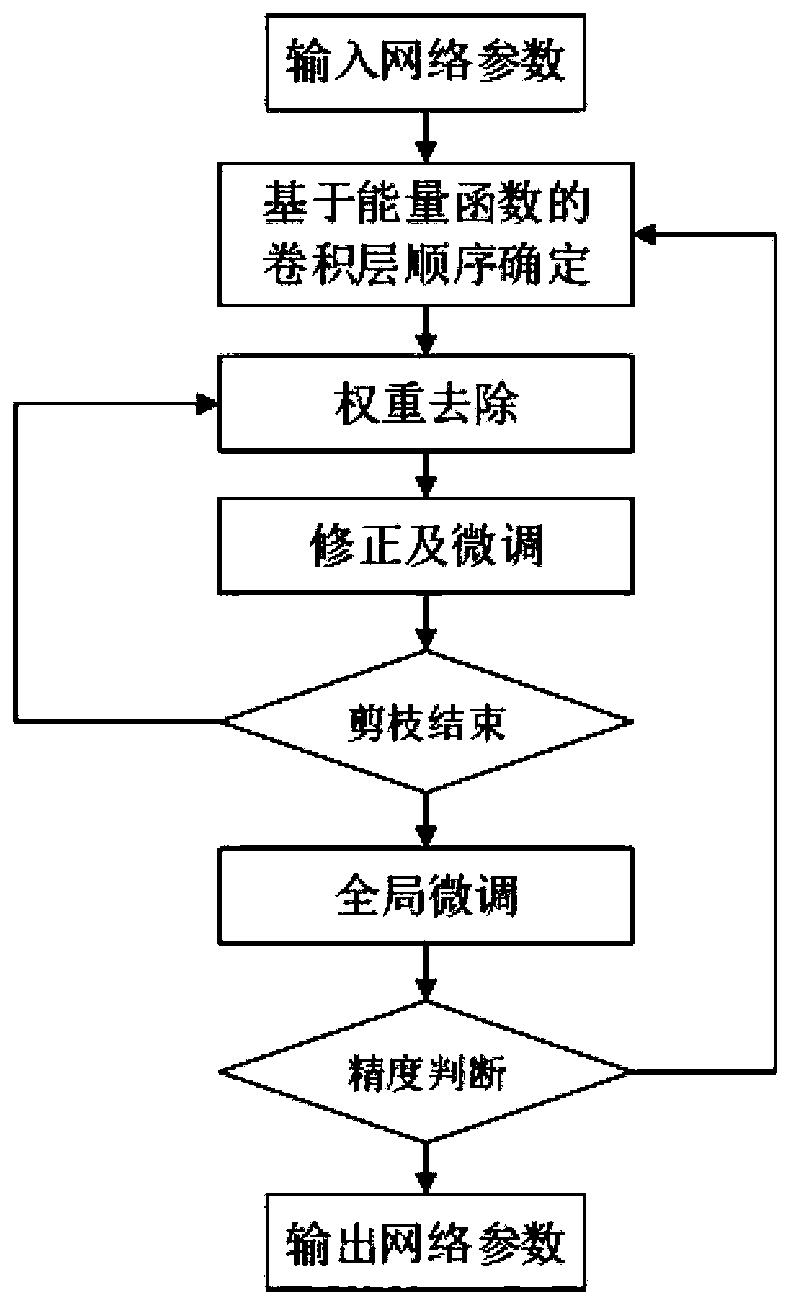
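For step S300, the following sketch builds a Huffman code over the cluster indices. It assumes those indices are the symbols being coded; the excerpt does not state exactly which stream is encoded. Because the index distribution is skewed, frequent indices receive shorter code words. The functions `huffman_code` and `encode` are hypothetical names.

```python
import heapq
from collections import Counter
from itertools import count
from typing import Dict, List


def huffman_code(symbols: List[int]) -> Dict[int, str]:
    """Build a prefix-free Huffman code (symbol -> bit string) for a stream."""
    # Assumption: the symbols are cluster indices produced by weight sharing.
    freq = Counter(symbols)
    if not freq:
        return {}
    if len(freq) == 1:
        # A single distinct symbol still needs a 1-bit code word.
        return {next(iter(freq)): "0"}
    tiebreak = count()  # keeps heap entries comparable when counts tie
    heap = [(f, next(tiebreak), {s: ""}) for s, f in freq.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        # Merge the two least frequent subtrees, prefixing 0 and 1.
        f1, _, left = heapq.heappop(heap)
        f2, _, right = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (f1 + f2, next(tiebreak), merged))
    return heap[0][2]


def encode(symbols: List[int], code: Dict[int, str]) -> str:
    """Concatenate the code words of a symbol stream into one bit string."""
    return "".join(code[s] for s in symbols)


# Skewed cluster indices: index 0 dominates.
indices = [0] * 6 + [1] * 2 + [2, 3]
code = huffman_code(indices)
bits = encode(indices, code)
```

Here the ten indices encode to 16 bits, compared with 20 bits for a fixed 2-bit encoding of four clusters. The more biased the index distribution, the larger this saving becomes.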

[0053] As an optimization of the above embodiment, in step S100 the network parameters are pruned as shown in Figure 2, comprising the following steps:

...
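The detailed pruning sub-steps of paragraph [0053] are elided in this excerpt, so the sketch below does not reproduce them. It only illustrates step S100 in general terms, using magnitude-based pruning as a swapped-in technique. This assumes a connection's "amount of information" can be approximated by its absolute weight. The function `magnitude_prune` and its `sparsity` parameter are hypothetical. In practice the retained connections are usually retrained with the mask held fixed; that step is not shown.

```python
from typing import List, Tuple


def magnitude_prune(weights: List[float],
                    sparsity: float) -> Tuple[List[float], List[bool]]:
    """Zero out the `sparsity` fraction of weights with the smallest |w|.

    Returns (pruned_weights, mask), where mask[i] is True if connection i
    is retained.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = int(len(weights) * sparsity)
    # Assumption: |w| stands in for a connection's information content.
    # Positions ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    dropped = set(order[:n_prune])
    mask = [i not in dropped for i in range(len(weights))]
    pruned = [w if keep else 0.0 for w, keep in zip(weights, mask)]
    return pruned, mask


layer = [0.5, -0.05, 0.01, -1.2, 0.3, 0.02]
pruned, mask = magnitude_prune(layer, sparsity=0.5)
```

At 50% sparsity the three smallest-magnitude connections (0.01, 0.02, and -0.05) are removed, leaving `[0.5, 0.0, 0.0, -1.2, 0.3, 0.0]`; the zeros are what the weight-sharing step then skips.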
