Two-dimensional image processing and three-dimensional positioning method based on human body acupuncture points

This technology combines two-dimensional image processing with three-dimensional positioning and is applied in the field of physiotherapy robots. It addresses the poor accuracy and low efficiency of existing methods, which struggle to meet high-precision requirements for recognizing and positioning human acupoints, and it improves the accuracy of three-dimensional positioning.


Embodiment Construction

[0042] To illustrate the specific steps of the present invention more clearly, they are described further below with reference to the accompanying drawings.

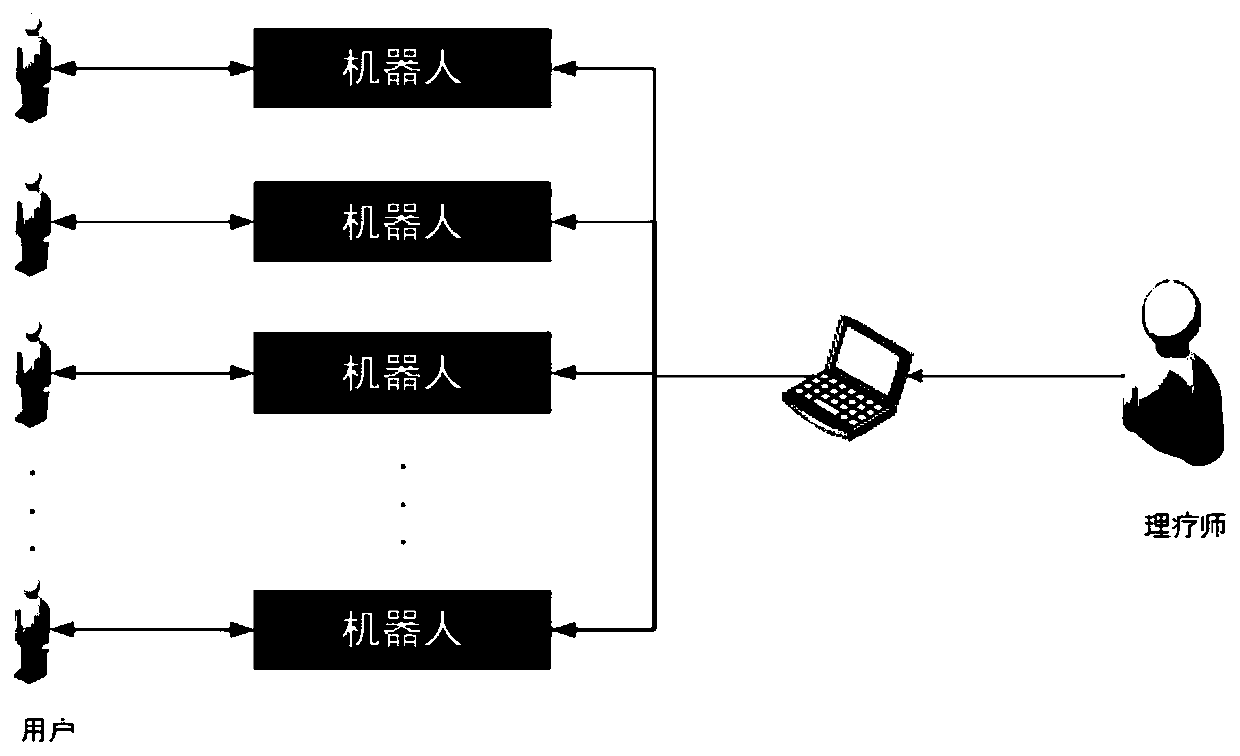

[0043] Referring to Figure 1, the physical therapist can remotely monitor and intervene in the user's physical therapy session while also providing the user with comprehensive health care and physical therapy advice. A massage robot based on traditional Chinese medicine lets users access health services in a variety of ways and establishes a personal health management center.

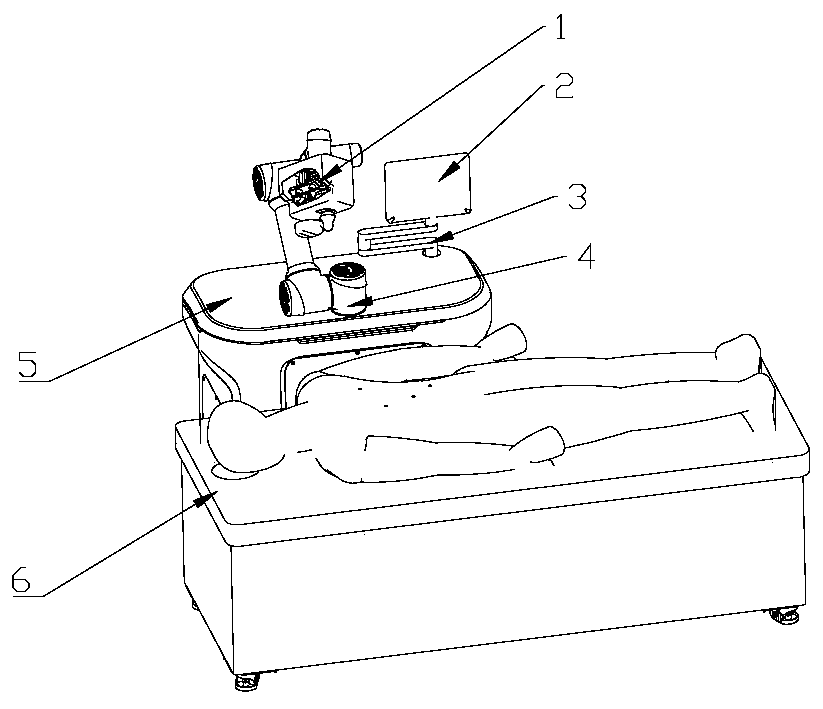

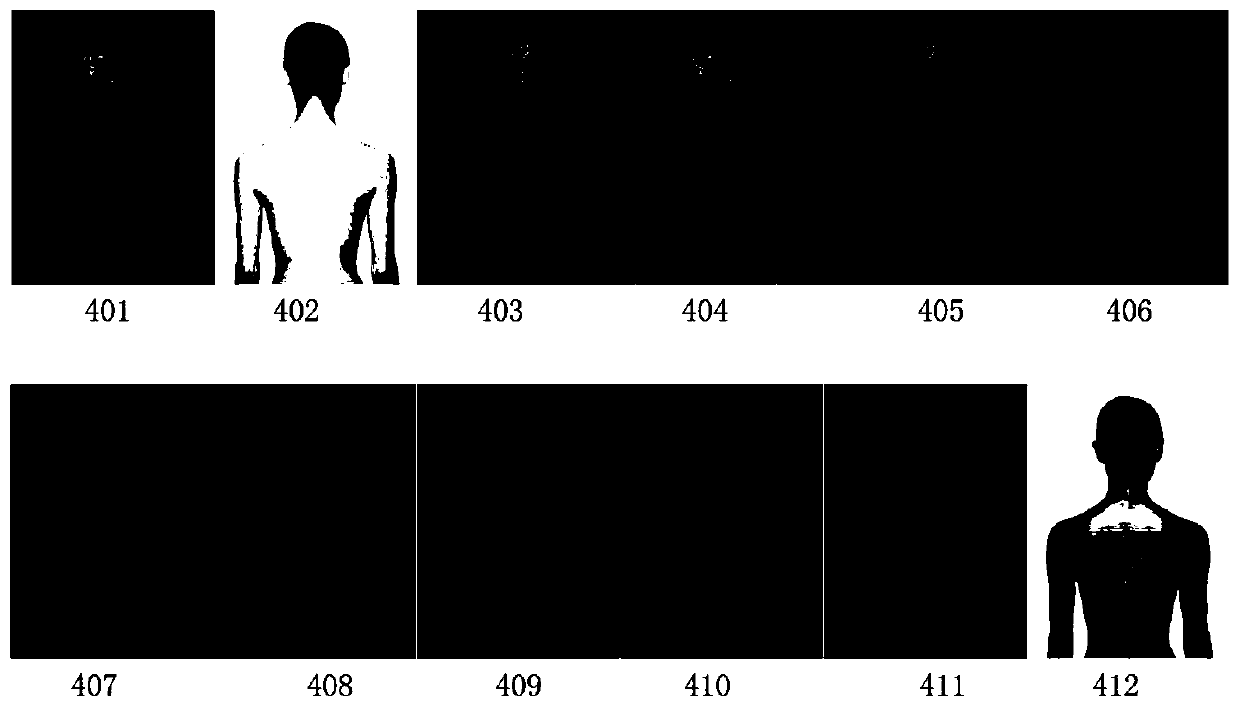

[0044] Referring to Figure 2, the two-dimensional image processing and three-dimensional stereotaxic positioning method based on human acupoints according to the present invention comprises five parts: image acquisition, camera calibration, 2D contour extraction, 3D point cloud acquisition, and positioning. By preprocessing the collected images, the left camera as the origin of the coordinates is calculated ac...
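Taking the left camera as the coordinate origin suggests a standard stereo-vision setup. The sketch below illustrates how a 2D point (for example, an acupoint found on an extracted contour) could be lifted to 3D by classic triangulation on a rectified image pair. It is not the procedure disclosed in the patent, whose details are truncated here. It assumes a calibrated, rectified stereo rig with equal focal lengths in both cameras, and the names `StereoParams` and `triangulate` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class StereoParams:
    """Hypothetical rectified stereo-rig parameters (assumed to come from camera calibration)."""
    focal_px: float  # focal length in pixels, assumed identical for both rectified cameras
    cx: float        # principal point x of the left camera, in pixels
    cy: float        # principal point y of the left camera, in pixels
    baseline: float  # distance between the two camera centres (e.g. millimetres)


def triangulate(params: StereoParams, u_left: float, v_left: float, u_right: float):
    """Return (X, Y, Z) of a matched point in the left-camera frame (left camera = origin).

    Assumes rectified images: a matched point lies on the same row in both
    views, so the disparity is simply d = u_left - u_right.
    """
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the rig")
    z = params.focal_px * params.baseline / disparity  # depth from similar triangles
    x = (u_left - params.cx) * z / params.focal_px      # back-project through the pinhole model
    y = (v_left - params.cy) * z / params.focal_px
    return x, y, z


if __name__ == "__main__":
    rig = StereoParams(focal_px=800.0, cx=320.0, cy=240.0, baseline=60.0)
    # A point at pixel (400, 300) in the left image and column 360 in the right image.
    print(triangulate(rig, 400.0, 300.0, 360.0))  # (120.0, 90.0, 1200.0)
```

Applying `triangulate` to every matched contour point would yield a point cloud in the left-camera frame. The result is in the baseline's units, so a baseline given in millimetres gives coordinates in millimetres.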
