A feature image recognition method based on a multi-attention space pyramid

Space pyramid and feature image technology, applied to improved deep convolutional network structures, can solve the problems of difficult fine-grained feature extraction and low image recognition accuracy, achieving enhanced feature extraction capability and improved recognition accuracy.

Embodiment Construction

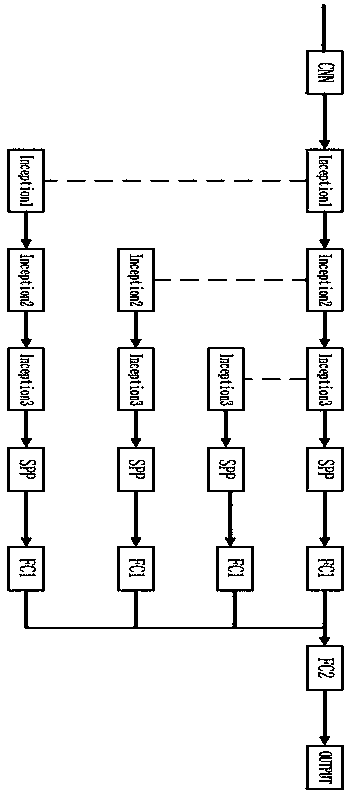

[0014] As shown in Figure 1, the model adds spatial pyramid pooling, which converts feature maps of any size into feature vectors of fixed size. The image input layer therefore places no requirement on the size of the input image, and images of any size can be input. Feature extraction stage:

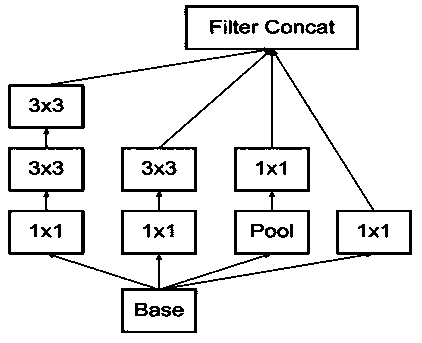

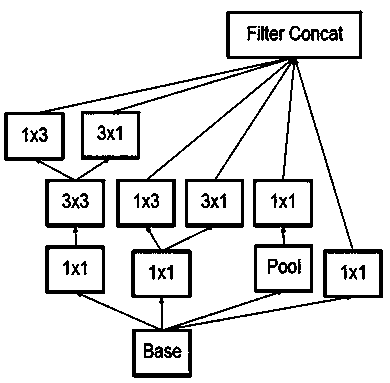
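The fixed-size conversion described in paragraph [0014] can be illustrated with a minimal pure-Python sketch of spatial pyramid pooling. The pyramid levels `(1, 2, 4)`, the use of max pooling inside each bin, and the function names `adaptive_max_pool` and `spatial_pyramid_pool` are assumptions made for illustration; the source does not state which levels or pooling type the method uses.

```python
import math

def adaptive_max_pool(fmap, n):
    """Max-pool one 2-D feature map (a list of rows) into an n x n grid.

    Bin edges follow the usual adaptive-pooling rule: floor for the start,
    ceil for the end, so every bin is non-empty for any input size.
    """
    h, w = len(fmap), len(fmap[0])
    out = []
    for i in range(n):
        r0, r1 = (i * h) // n, math.ceil((i + 1) * h / n)
        for j in range(n):
            c0, c1 = (j * w) // n, math.ceil((j + 1) * w / n)
            out.append(max(fmap[r][c]
                           for r in range(r0, r1)
                           for c in range(c0, c1)))
    return out

def spatial_pyramid_pool(channels, levels=(1, 2, 4)):
    """Concatenate pooled grids from every level into one flat vector.

    `levels` is a hypothetical choice; the output length is
    len(channels) * sum(n * n for n in levels), independent of H and W.
    """
    vec = []
    for n in levels:
        for fmap in channels:
            vec.extend(adaptive_max_pool(fmap, n))
    return vec

# Two single-channel feature maps of different spatial sizes.
small = [[[r * 7 + c for c in range(7)] for r in range(5)]]    # 5 x 7
large = [[[r * 9 + c for c in range(9)] for r in range(13)]]   # 13 x 9

vec_small = spatial_pyramid_pool(small)
vec_large = spatial_pyramid_pool(large)
print(len(vec_small), len(vec_large))
```

Both inputs produce a vector of the same length, which is why a fully connected layer placed after this step does not constrain the input image size.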

[0015] The feature extraction network based on the multi-attention space pyramid is constructed as follows. A feature extraction network is proposed on the basis of the Inceptionv3 network. It has a main network and three branch networks, and each branch network shares the convolutional layers of the CNN model. Each branch has the same inception module as the main network, as shown in Figure 1. The CNN structure contains five convolutional layers and two average pooling layers, and a ReLU activation function and a BN (batch normalization) operation are added after each convolution, specifically:

[0016] The convolution kernel size is 3×3, the depth is 32, the ...
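The pattern in paragraph [0015], where each convolution is followed by BN and ReLU, can be sketched on a single-channel map in pure Python. This is a simplified illustration under several assumptions not stated in the source: the order convolution, then BN, then ReLU; stride 1 with no padding; normalization over a single map without learned scale and shift; and the helper names `conv2d_valid`, `batch_norm`, `relu`, and `conv_bn_relu`, which are hypothetical.

```python
import math

def conv2d_valid(image, kernel):
    """Slide the kernel over the image with stride 1 and no padding."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(ow)]
            for r in range(oh)]

def batch_norm(fmap, eps=1e-5):
    """Normalize one map to zero mean and unit variance.

    Simplification: statistics come from this single map, and the learned
    scale and shift parameters of a real BN layer are omitted.
    """
    values = [v for row in fmap for v in row]
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    scale = 1.0 / math.sqrt(var + eps)
    return [[(v - mean) * scale for v in row] for row in fmap]

def relu(fmap):
    """Clamp negative activations to zero."""
    return [[max(0.0, v) for v in row] for row in fmap]

def conv_bn_relu(image, kernel):
    """Assumed ordering: convolution, then BN, then ReLU."""
    return relu(batch_norm(conv2d_valid(image, kernel)))

# A 5 x 5 input and a 3 x 3 averaging kernel (3 x 3 matches paragraph [0016]).
image = [[float(r * 5 + c) for c in range(5)] for r in range(5)]
kernel = [[1.0 / 9.0] * 3 for _ in range(3)]

out = conv_bn_relu(image, kernel)
print(len(out), len(out[0]))
```

A real layer with depth 32 would apply 32 such kernels and stack the resulting maps; the sketch keeps one kernel so the three steps stay easy to follow.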
