Panoramic vision SLAM method based on multi-camera cooperation

A panoramic vision SLAM technology based on multiple cameras, applied in the fields of computer components, image data processing, and 3D modeling. It addresses problems such as a limited perceptual field of view, poor robustness to lighting changes and occlusion, and poor localization accuracy in weakly textured environments, enabling efficient and robust operation and improved operating efficiency.

Examples

Embodiment 1

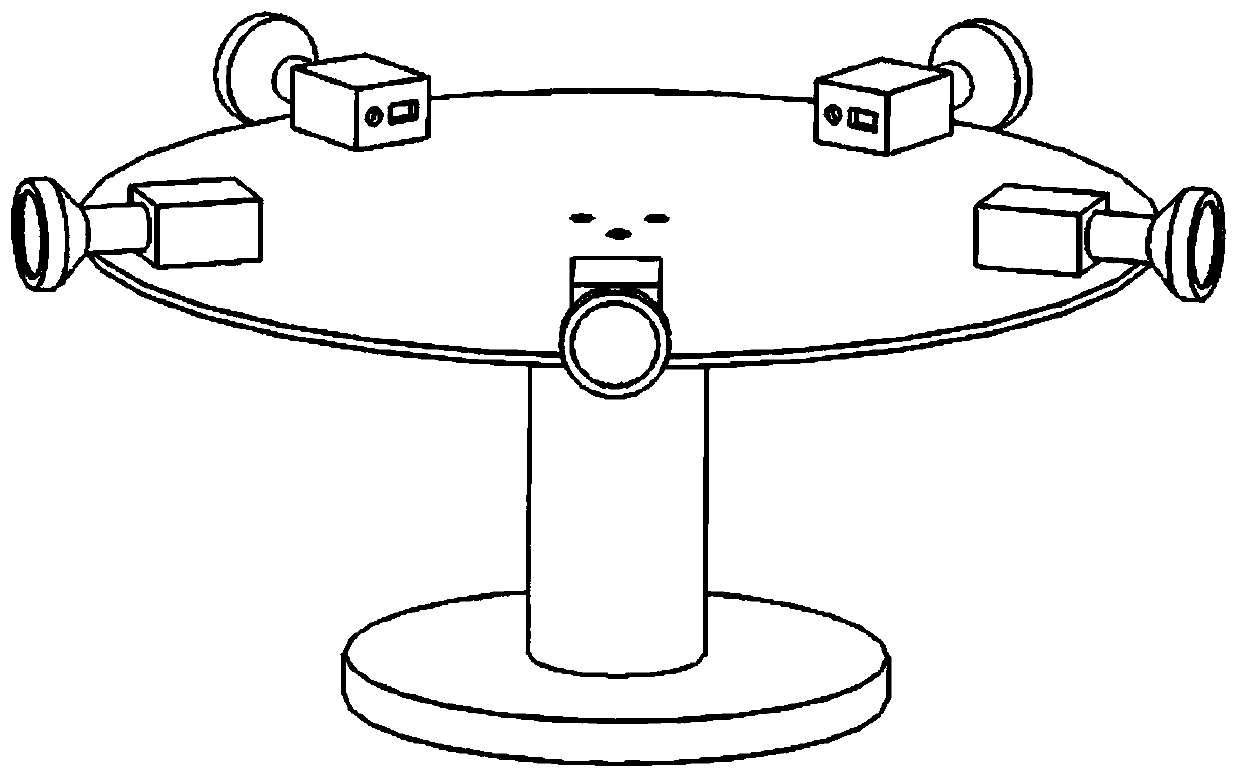

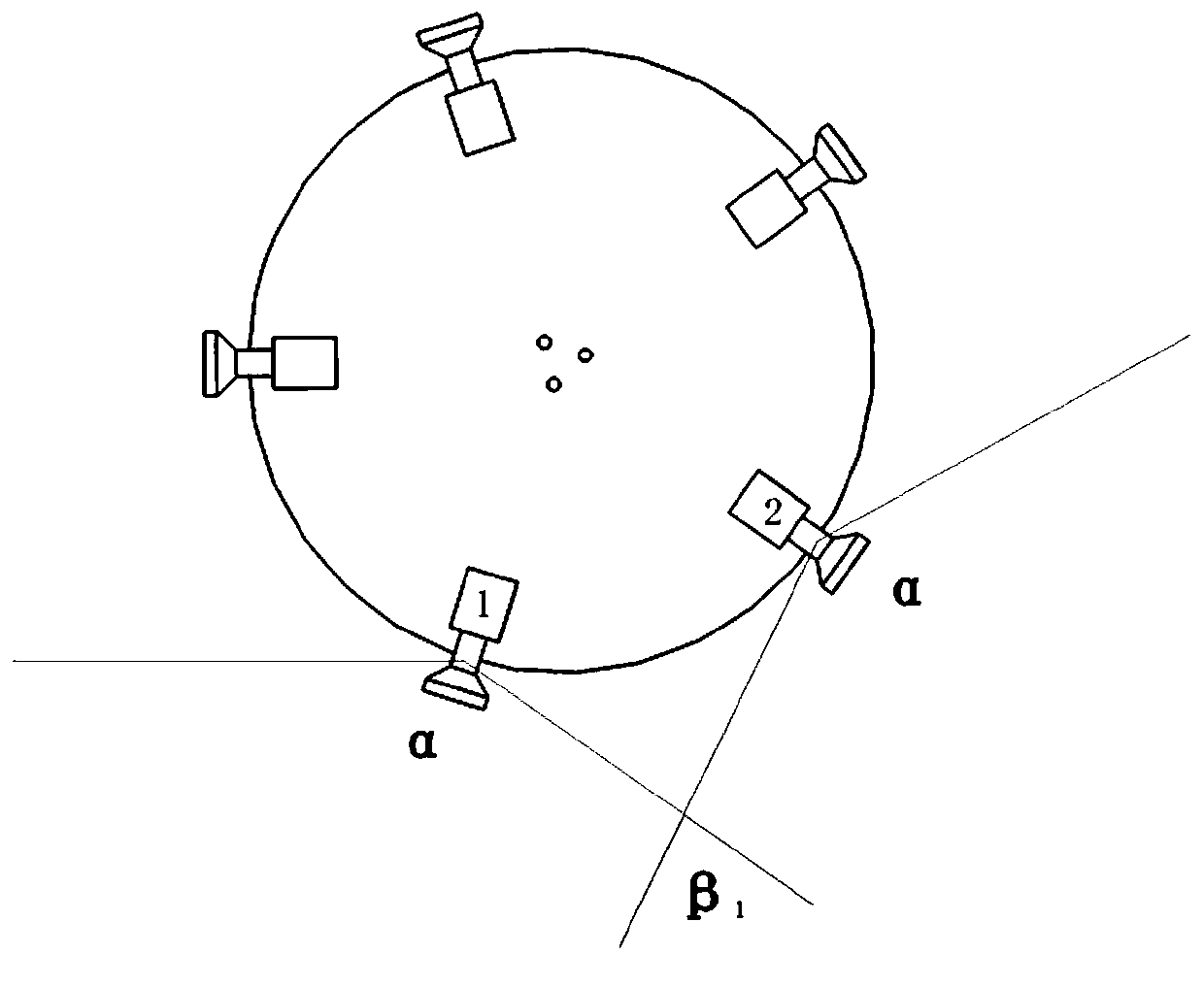

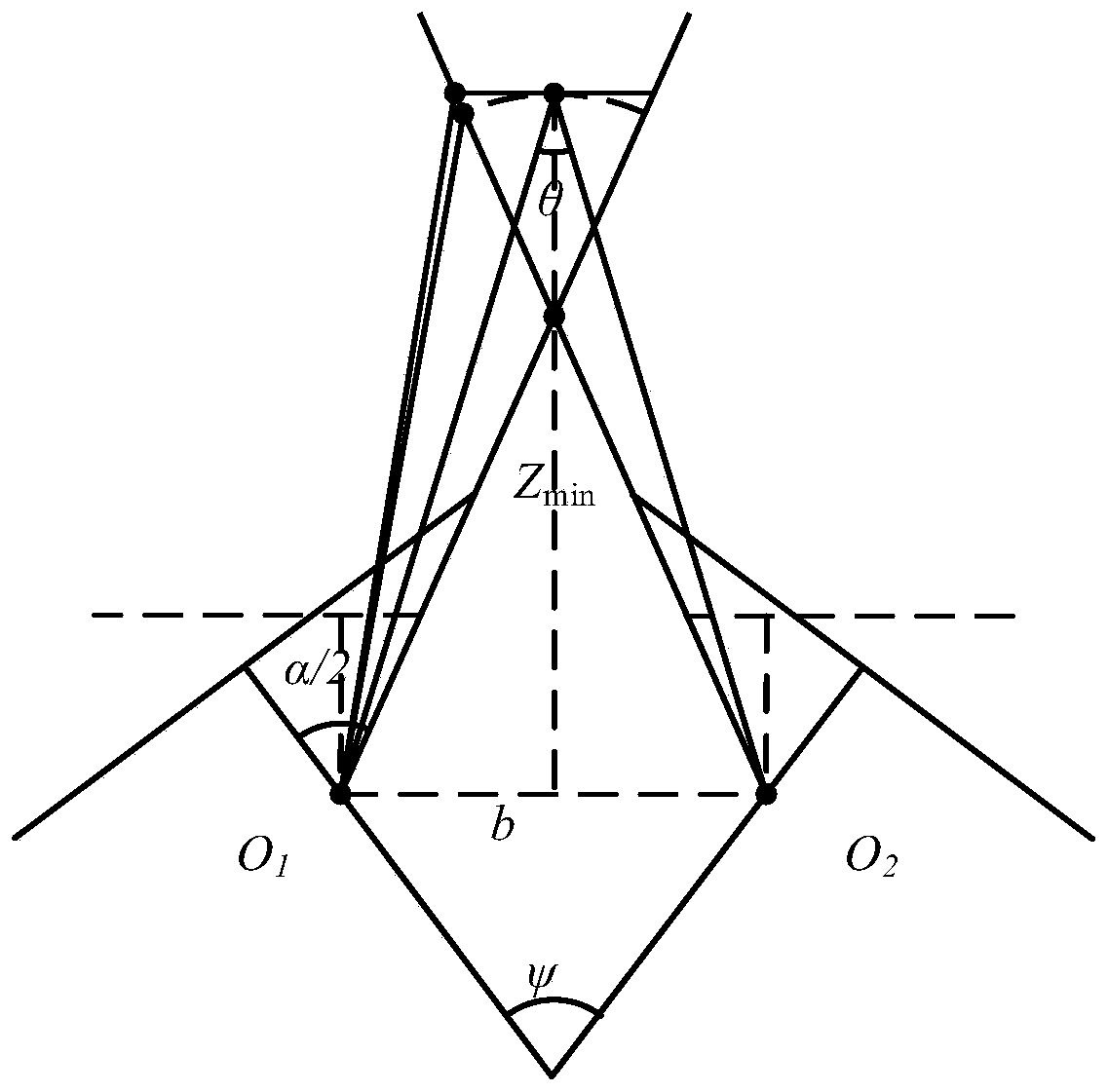

[0074] This embodiment provides a panoramic vision SLAM method based on multi-camera cooperation. Figure 1 shows the structure of the SLAM system with five cameras, and Figure 2 is a schematic diagram of the camera distribution in the multi-camera system. The horizontal field of view of each camera is 120 degrees and β = 90. According to the formula for the number of cameras given in the present invention, N ≥ 4.5, so N is set to 5 and the included angle between adjacent cameras is 360°/5 = 72°. Figure 3 is a schematic diagram of the parameter relationships and the derived formula for the non-parallel camera structure; when the target perception range is 5 to 10 m, the baseline length can be set to 30 cm. Laying out the cameras with these parameters lets the system perceive the environment more effectively and accurately, and thus obtain more accurate localization and mapping results from the SLAM system.
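
The camera-layout arithmetic in [0074] can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented method: the patent's formula for the lower bound on N is not reproduced here, so the bound 4.5 is passed in directly. The ring radius additionally assumes (hypothetically) that the 30 cm baseline is the distance between the optical centers of adjacent cameras placed evenly on a ring with outward-facing optical axes. The function name `camera_layout` is illustrative only.

```python
import math


def camera_layout(n_min: float, hfov_deg: float, baseline_m: float):
    """Sketch of an evenly spaced ring of N cameras.

    n_min:      lower bound on the camera count from the patent's formula
                (the formula itself is not reproduced; 4.5 in Embodiment 1).
    hfov_deg:   horizontal field of view of each camera, in degrees.
    baseline_m: ASSUMED distance between optical centers of adjacent cameras.
    """
    n = math.ceil(n_min)               # smallest integer N satisfying N >= n_min
    step_deg = 360.0 / n               # included angle between adjacent optical axes
    overlap_deg = hfov_deg - step_deg  # angular overlap of adjacent fields of view
    # Ring radius whose chord between neighbours equals the baseline (assumption).
    radius_m = baseline_m / (2.0 * math.sin(math.pi / n))
    poses = []
    for i in range(n):
        yaw_deg = i * step_deg
        yaw = math.radians(yaw_deg)
        # Camera center on the ring; optical axis points radially outward at yaw_deg.
        poses.append((radius_m * math.cos(yaw), radius_m * math.sin(yaw), yaw_deg))
    return n, step_deg, overlap_deg, radius_m, poses


if __name__ == "__main__":
    n, step, overlap, r, poses = camera_layout(4.5, 120.0, 0.30)
    print(n, step, overlap, round(r, 3))
    for x, y, yaw in poses:
        print(f"x={x:+.3f} m  y={y:+.3f} m  yaw={yaw:.0f} deg")
```

With the Embodiment 1 values, `camera_layout(4.5, 120.0, 0.30)` gives N = 5, a 72° step between adjacent cameras, a 48° overlap between adjacent 120° fields of view, and a ring radius of about 0.255 m under the stated baseline assumption.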

[0075] As shown in Figure 4...