A Sign Language Action Recognition Method Based on a Two-way 3DCNN Model

An action recognition and modeling technology, applied in the field of computer vision, intended to improve quality of life and well-being.

Embodiment Construction

[0029] Specific embodiments of the present invention are described in further detail below with reference to the accompanying drawings and examples. The following examples illustrate the present invention and are not intended to limit its scope.

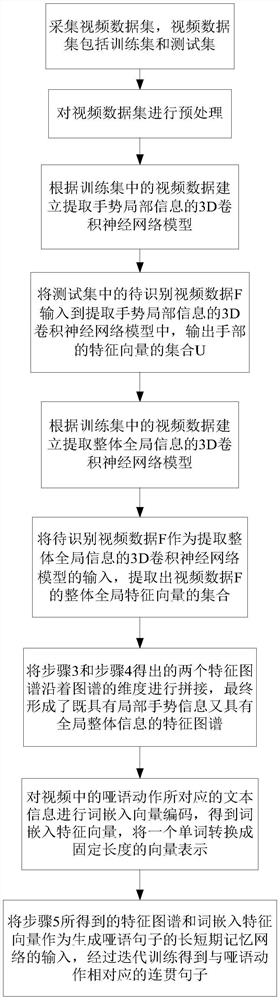

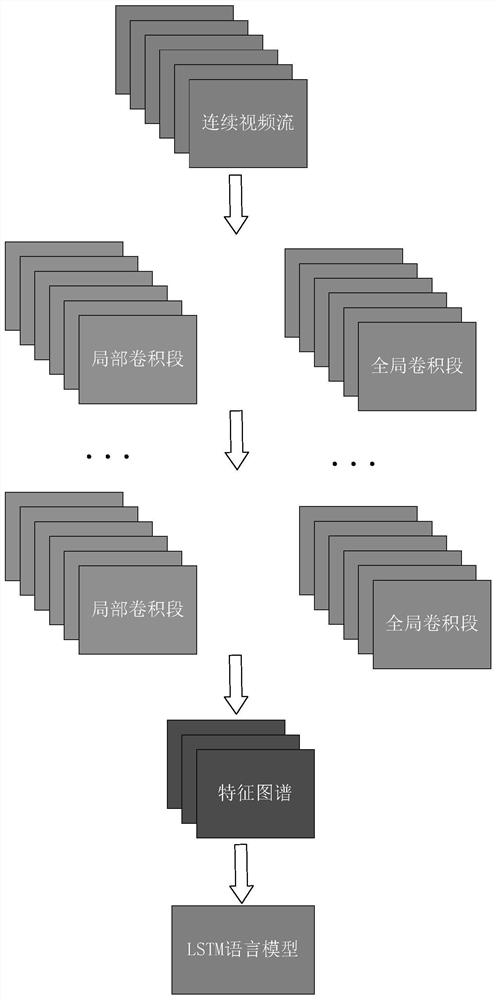

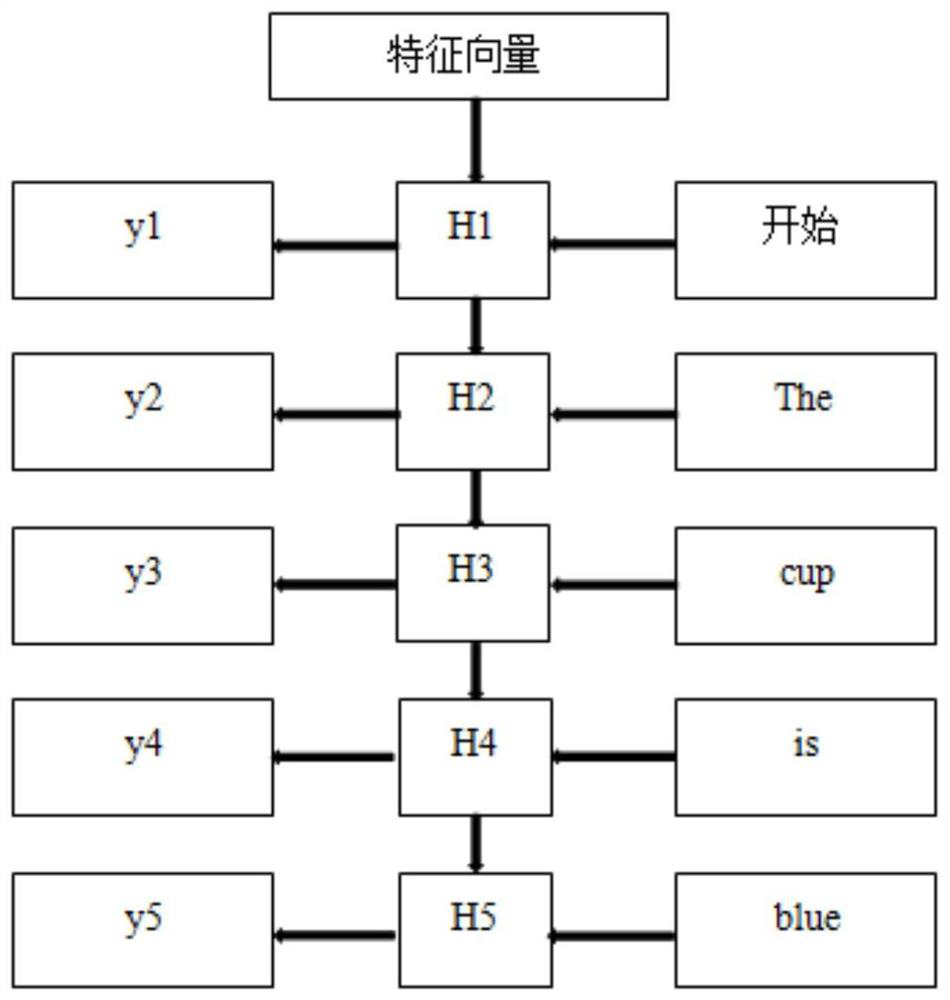

[0030] A traditional convolutional neural network captures only the spatial features of its input, but for sign language videos the features in the time dimension are also very important. This method therefore uses the 3DCNN model framework to extract spatial and temporal features from sign language video streams simultaneously. "3D" here does not mean three-dimensional space; it refers to the three-dimensional data formed by adding a time dimension to two-dimensional images, that is, data composed of a series of video frames. Sign language movements also differ from general gestures. In addition to the most important hand information, sign language ...
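
The idea of convolving over a stack of video frames can be illustrated with a minimal sketch. It is not the network described in this patent; it only shows how a single 3D kernel that spans two consecutive frames responds to motion, which a purely spatial 2D kernel applied frame by frame cannot see. The names `conv3d_valid` and `make_frame`, the toy 4x4 frames, and the temporal-difference kernel are all assumptions made for illustration.

```python
def conv3d_valid(video, kernel):
    """Naive 'valid' 3D convolution over (time, height, width).

    As in most deep learning frameworks, this is technically a
    cross-correlation: the kernel is not flipped.
    """
    T, H, W = len(video), len(video[0]), len(video[0][0])
    kt, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    out = []
    for t in range(T - kt + 1):
        plane = []
        for y in range(H - kh + 1):
            row = []
            for x in range(W - kw + 1):
                acc = 0.0
                # Sum over a small block that spans several frames at once.
                for dt in range(kt):
                    for dy in range(kh):
                        for dx in range(kw):
                            acc += kernel[dt][dy][dx] * video[t + dt][y + dy][x + dx]
                row.append(acc)
            plane.append(row)
        out.append(plane)
    return out


def make_frame(size, col):
    """Hypothetical toy frame: size x size, one bright vertical bar at column `col`."""
    return [[1.0 if x == col else 0.0 for x in range(size)] for _ in range(size)]


# Illustrative temporal-difference kernel (2 frames x 2 x 2):
# subtracts a 2x2 patch of the earlier frame from the same patch of the later frame.
kernel = [[[-1.0, -1.0], [-1.0, -1.0]],
          [[1.0, 1.0], [1.0, 1.0]]]

static_video = [make_frame(4, 1) for _ in range(3)]   # bar stays at column 1
moving_video = [make_frame(4, c) for c in range(3)]   # bar moves one column per frame

static_out = conv3d_valid(static_video, kernel)
moving_out = conv3d_valid(moving_video, kernel)

print("static response:", static_out[0][0])
print("moving response:", moving_out[0][0], moving_out[1][0])
```

For the static clip every output value is zero, while the moving clip produces non-zero responses where the bar enters or leaves a patch. In practice a 3DCNN learns many such kernels from data rather than using a hand-written one, and a framework layer would replace the nested loops.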
