Multi-scene target tracking method based on adaptive depth feature filter

A target tracking technology based on deep features, applied in the field of computer vision, that addresses three problems: fixed deep feature filter weights, inability to adapt to a variety of complex scenes, and inability to integrate deep features well.

Embodiment Construction

[0064] The invention proposes a multi-scene target tracking method based on an adaptive depth feature filter, and implements the target tracking system in MATLAB. The system reads a video in which the target area is marked in the first frame, then automatically marks the predicted target area in every subsequent frame.
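The input/output behaviour described in [0064] amounts to a simple predict-then-retrain loop. The sketch below is a minimal Python illustration under stated assumptions, not the patent's MATLAB code: `track_video`, `train`, and `detect` are hypothetical names, and how a newly trained model is blended with the previous one is left entirely to `train`, because this excerpt does not specify it.

```python
from typing import Any, Callable, List, Optional, Sequence

# Hypothetical interfaces (assumptions, not the patent's API):
#   train(frame, box, prev_model) -> model   (prev_model is None on frame 0)
#   detect(model, frame, prev_box) -> box
def track_video(frames: Sequence[Any], init_box: Any,
                train: Callable[[Any, Any, Optional[Any]], Any],
                detect: Callable[[Any, Any, Any], Any]) -> List[Any]:
    """Return one box per frame: frame 0 keeps the manually marked box,
    every later frame gets the box predicted by the tracker."""
    boxes = [init_box]
    # First frame: the manually marked target area is the training sample.
    model = train(frames[0], init_box, None)
    for frame in frames[1:]:
        # Predict the target area in the new frame, searching near the last box.
        box = detect(model, frame, boxes[-1])
        boxes.append(box)
        # Subsequent frames: the predicted area becomes the new training sample.
        model = train(frame, box, model)
    return boxes
```

For example, with toy 1-D "frames" in which the target is the value 9, `track_video([[0, 9, 0, 0], [0, 0, 9, 0], [0, 0, 0, 9]], 1, lambda f, b, p: f[b], lambda m, f, pb: f.index(m))` follows the target to positions `[1, 2, 3]`. Passing `train` and `detect` in as functions keeps the loop independent of whichever feature extractor and filter are plugged in.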

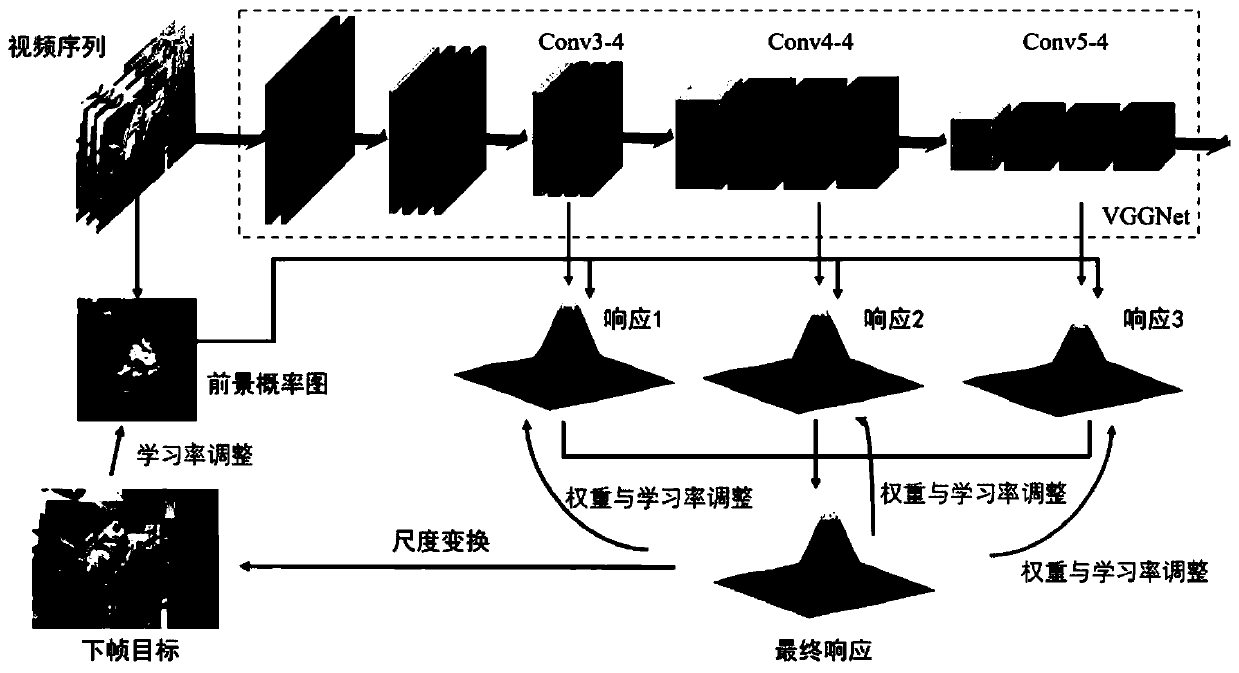

[0065] Figure 1 shows the video object tracking process of the embodiment of the present invention. The specific implementation steps are as follows:

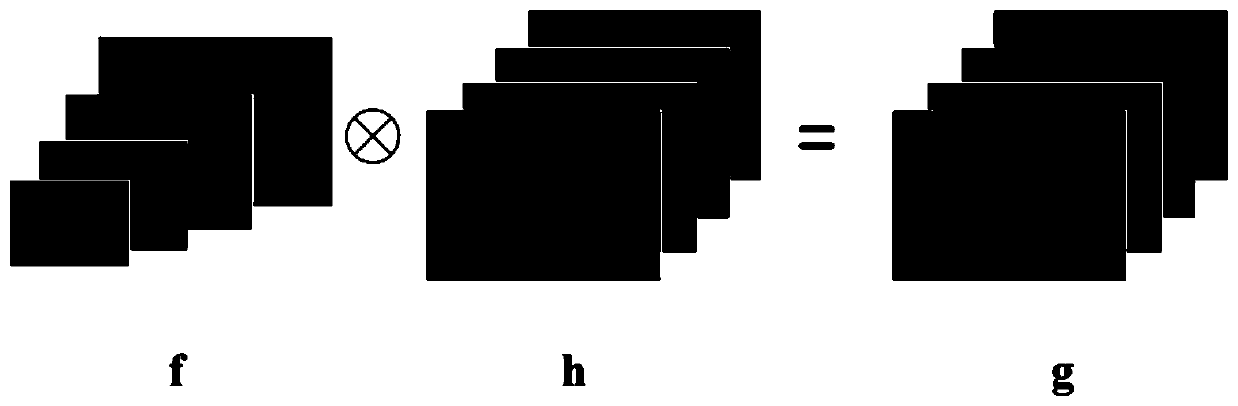

[0066] 1. Generate training samples. In the first frame, the training sample is the manually marked tracking target area; in each subsequent frame, it is the predicted target area. A circulant matrix built from each training sample generates the positive and negative samples used to train the deep feature filter.
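To show how a circulant matrix turns one training sample into a full set of positive and negative samples, here is a minimal sketch of the standard linear correlation filter (MOSSE/KCF-style closed form). It is a swapped-in, simplified technique, not the patent's adaptive deep feature filter: it uses a 1-D, single-channel signal instead of multi-channel deep feature maps, and the function names are hypothetical. Each row of `cyclic_shifts(x)` is one sample; the unshifted row gets label 1 (positive) and larger shifts get labels decaying towards 0 (negative). Ridge regression over all rows, min over w of sum_k (w·s_k - y_k)^2 + lam·||w||^2, decouples per frequency into H = conj(X)·Y / (|X|^2 + lam). A naive DFT keeps the example dependency-free; a real implementation would use an FFT (for instance MATLAB's `fft2` on 2-D feature maps).

```python
import cmath
import math

def dft(a):
    """Naive O(n^2) forward DFT (unnormalized)."""
    n = len(a)
    return [sum(a[t] * cmath.exp(-2j * math.pi * f * t / n) for t in range(n))
            for f in range(n)]

def idft(a):
    """Inverse of dft(), including the 1/n normalization."""
    n = len(a)
    return [sum(a[f] * cmath.exp(2j * math.pi * f * t / n) for f in range(n)) / n
            for t in range(n)]

def cyclic_shifts(x):
    """Rows of the circulant data matrix: row k is x cyclically shifted by k."""
    n = len(x)
    return [[x[(i + k) % n] for i in range(n)] for k in range(n)]

def gaussian_labels(n, sigma):
    """Label per shift: 1 at shift 0 (positive), decaying for larger
    wrap-around shifts (negatives)."""
    return [math.exp(-min(k, n - k) ** 2 / (2 * sigma ** 2)) for k in range(n)]

def train_filter(x, y, lam):
    """Closed-form ridge regression over all cyclic shifts of x,
    solved independently at each frequency."""
    X, Y = dft(x), dft(y)
    return [Xf.conjugate() * Yf / (abs(Xf) ** 2 + lam) for Xf, Yf in zip(X, Y)]

def respond(H, z):
    """Filter response to every cyclic shift of patch z at once."""
    Z = dft(z)
    return [v.real for v in idft([h * zf for h, zf in zip(H, Z)])]

def spatial_filter(H):
    """Spatial-domain filter w; response k equals dot(w, cyclic_shifts(z)[k])."""
    return [v.real for v in idft([h.conjugate() for h in H])]
```

Usage: with `x = [1.0, 3.0, 2.0, 5.0, 4.0, 0.5, 2.5, 1.5]`, `H = train_filter(x, gaussian_labels(8, 1.0), 1e-4)` gives a response `respond(H, x)` that closely reproduces the labels and peaks at shift 0. The point of the circulant structure is that all n shifted samples are trained in one element-wise division instead of solving an n×n linear system.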

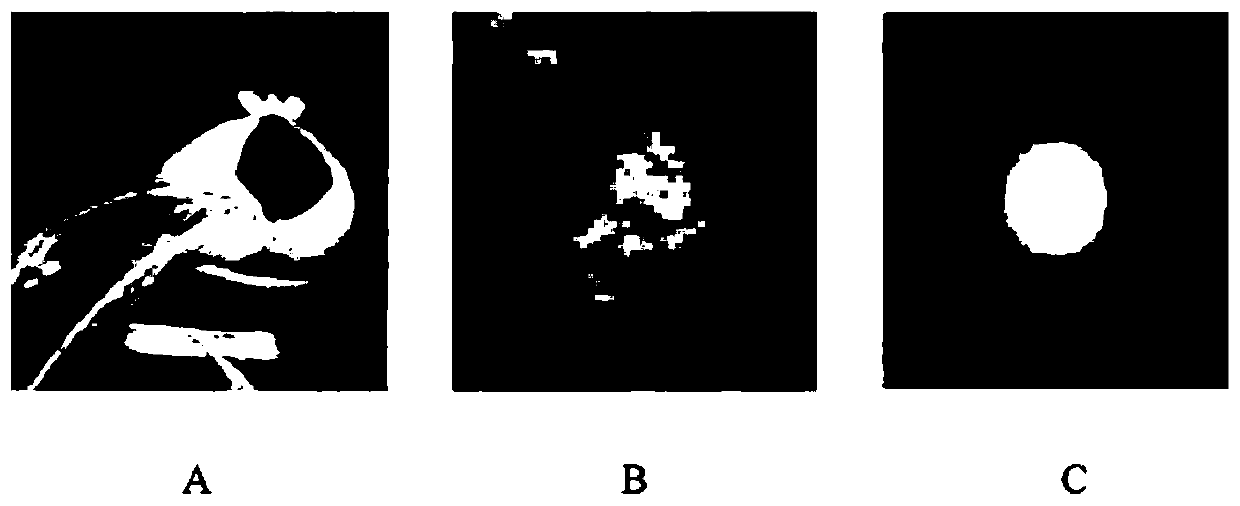

[0067] 2. Adaptively extract foreground objects. The target area contains considerable background noise, and the Hamming window cannot alleviat...
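For contrast with step 2, the conventional Hamming window weights a patch purely by pixel position, w[k] = 0.54 - 0.46·cos(2πk/(N-1)), applied separably along rows and columns. The minimal Python sketch below (helper names are hypothetical) shows that the gain at each pixel is identical whatever that pixel contains, so background pixels near the centre of the target box are barely attenuated; this is plausibly why a fixed window cannot suppress background noise inside the box. The adaptive foreground-extraction procedure the patent uses instead is cut off in this excerpt and is not reproduced here.

```python
import math

def hamming(n):
    """Symmetric Hamming window: w[k] = 0.54 - 0.46*cos(2*pi*k/(n-1))."""
    if n == 1:
        return [1.0]
    return [0.54 - 0.46 * math.cos(2 * math.pi * k / (n - 1)) for k in range(n)]

def apply_hamming_2d(patch):
    """Weight a 2-D patch (list of rows) by the separable Hamming window.
    The weight depends only on (row, col), never on the pixel value."""
    rows, cols = len(patch), len(patch[0])
    wr, wc = hamming(rows), hamming(cols)
    return [[patch[r][c] * wr[r] * wc[c] for c in range(cols)]
            for r in range(rows)]
```

Because the weights are fixed by position, any pixel-level distinction between target and background has to come from a content-dependent mask rather than from the window itself.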
