Video style transfer method based on a temporal consistency constraint

A video style transfer technology applied in image data processing, instrumentation, computing, and related fields. It addresses the problems that existing methods do not consider temporal correlation, that stylized video lacks long-term consistency, and that stylized video has poor coherence. The stated effects are faster training, real-time performance, and improved visual quality.


Embodiment Construction

[0030] The embodiments and effects of the present invention are further described below with reference to the accompanying drawings.

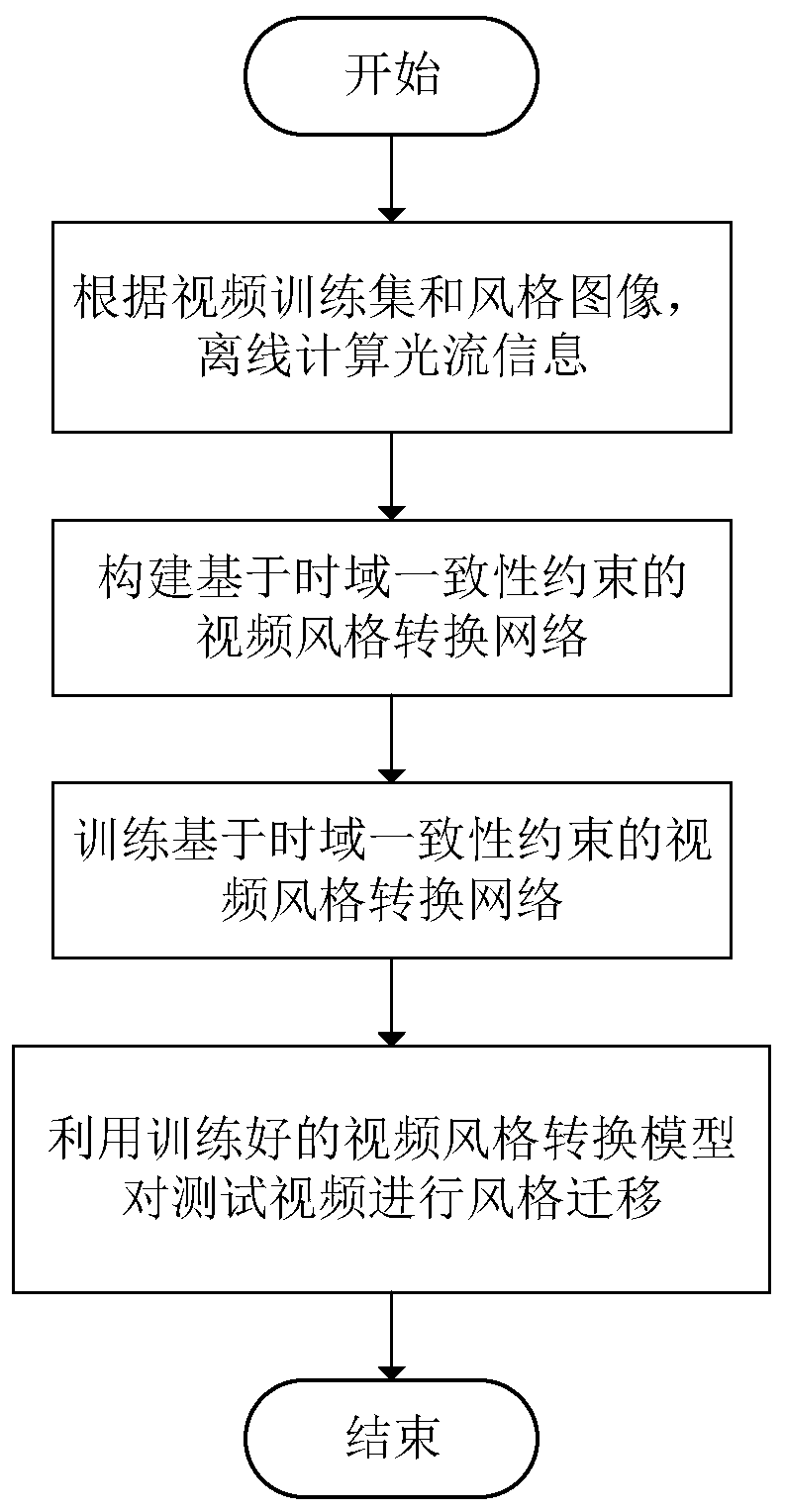

[0031] Referring to Figure 1, the implementation steps of the present invention are as follows:

[0032] Step 1. Given the video training set $V$ and the style image $a$, calculate the optical flow information offline.

[0033] (1a) Obtain the video training set $V$ and the style image $a$, where $V$ contains $N$ groups of video sequences and each group $I_n$, with $n = \{1, 2, \ldots, N\}$, includes four images taken from one video: the first frame $I_1$, the fourth frame $I_4$, the sixth frame $I_6$, and the seventh frame $I_7$;

[0034] (1b) Use an existing variational optical flow method to calculate the optical flow information between the different frame images, together with the optical flow confidence information $C_n = \{c_{(1,7)}, c_{(4,7)}, c_{(6,7)}\}$, where the optical flow term for the pair $(i, 7)$ indicates the optical flow from frame $i$ to frame 7, and $c_{(i,7)}$ denotes the optical flow confidence matrix between f...
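The bookkeeping implied by steps (1a) and (1b) can be sketched as follows. This is a minimal illustration only, not the patented implementation: the names `SequenceGroup`, `make_group`, `flow_pairs`, and `missing_pairs` are hypothetical, the frame paths are placeholders, and the flow and confidence values are left as opaque objects because the text above does not specify how they are stored. The sketch only shows which frames form a group and which frame pairs need offline results.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Frame numbers in each training group, as listed in step (1a).
FRAME_NUMBERS: Tuple[int, ...] = (1, 4, 6, 7)
# Every pair in step (1b) ends at frame 7.
TARGET_FRAME = 7


def flow_pairs() -> List[Tuple[int, int]]:
    """Return the (i, 7) pairs that need optical flow and confidence."""
    return [(i, TARGET_FRAME) for i in FRAME_NUMBERS if i != TARGET_FRAME]


@dataclass
class SequenceGroup:
    """Hypothetical container for one group I_n and its offline results."""
    frames: Dict[int, str]  # frame number -> image path (placeholder)
    flow: Dict[Tuple[int, int], object] = field(default_factory=dict)
    confidence: Dict[Tuple[int, int], object] = field(default_factory=dict)

    def missing_pairs(self) -> List[Tuple[int, int]]:
        """Pairs whose flow or confidence has not been stored yet."""
        return [p for p in flow_pairs()
                if p not in self.flow or p not in self.confidence]


def make_group(video_frames: List[str]) -> SequenceGroup:
    """Pick frames 1, 4, 6 and 7 (1-based) from a list of frame paths."""
    if len(video_frames) < max(FRAME_NUMBERS):
        raise ValueError("need at least 7 frames")
    return SequenceGroup(
        frames={k: video_frames[k - 1] for k in FRAME_NUMBERS}
    )
```

For example, `make_group([f"frame_{k:02d}.png" for k in range(1, 11)])` keeps frames 1, 4, 6 and 7, and calling `missing_pairs()` on the result lists the pairs (1, 7), (4, 7) and (6, 7) until an offline optical flow pass has filled them in.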
