Scene depth and camera position and posture solving method based on deep learning

A deep-learning technique for estimating scene depth, applied in the field of camera motion parameter estimation. It addresses problems such as the absence of time-series modeling over image sequences, the lack of temporal learning across image sequences, and the resulting limited accuracy of camera position and posture estimation.


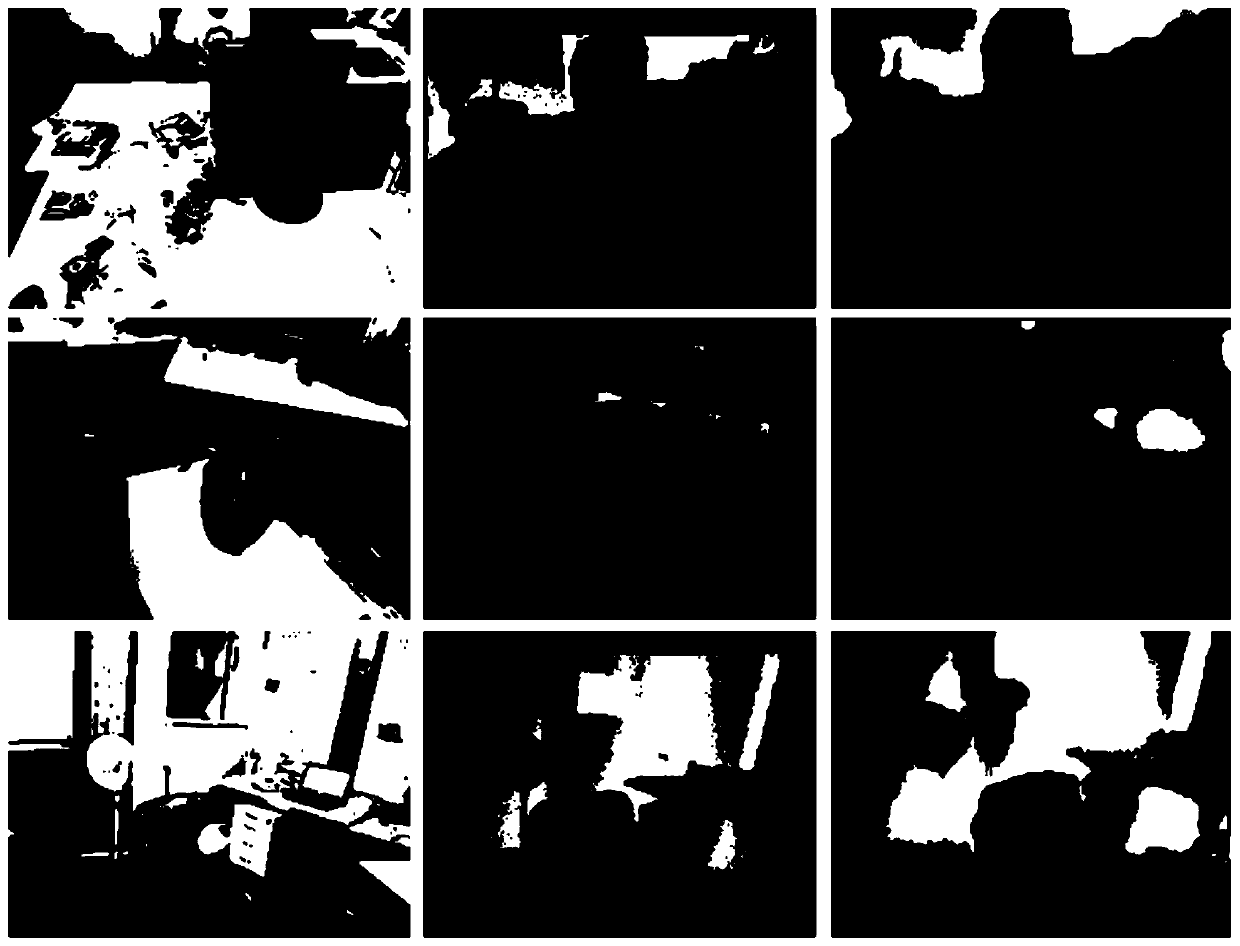

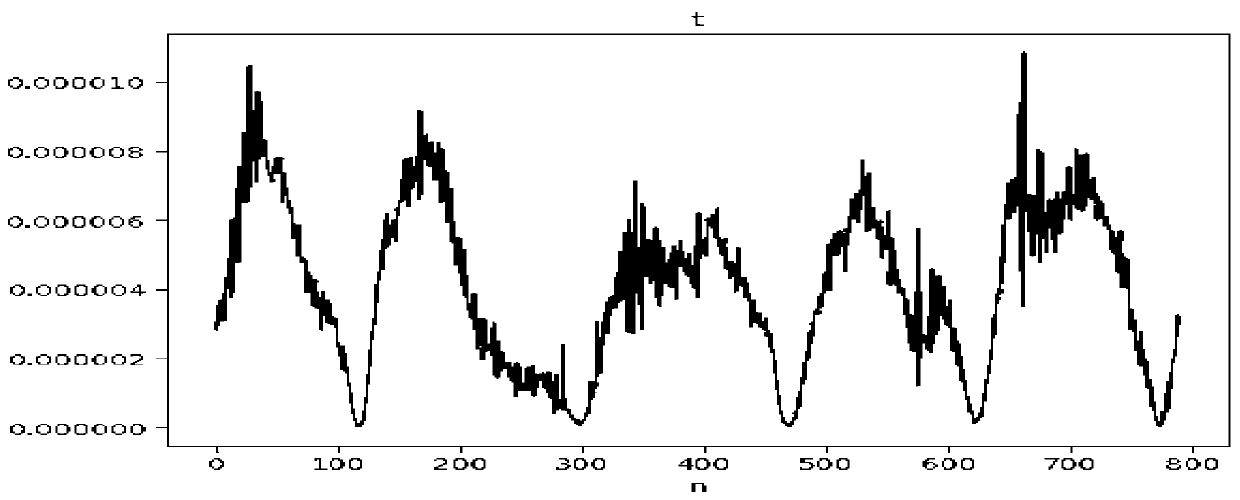

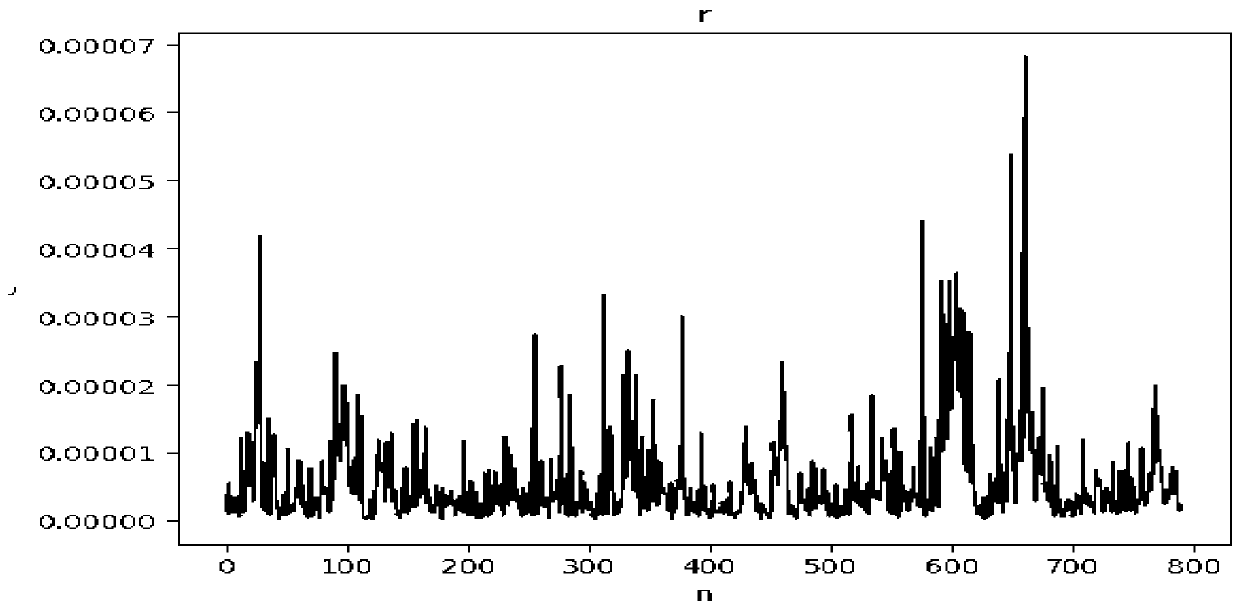


Embodiment

[0042] The present invention is further described below with reference to the accompanying drawings. This embodiment is implemented on a PC running 64-bit Windows 10, with an Intel i7-6700K CPU, 16 GB of memory, and an NVIDIA GeForce GTX 1070 GPU with 8 GB of memory. The deep learning framework is Keras 2.1.0 with TensorFlow 1.4.0 as the backend, and the program is written in Python.

[0043] The deep-learning-based method for solving scene depth and camera position and posture takes as input an RGB image sequence with a resolution of N×N, where N = 224, and comprises the following steps:
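The excerpt fixes the input size at N = 224 but does not say how source frames (640×480 in the TUM RGB-D dataset) are brought to N×N. The sketch below is illustrative only and assumes plain nearest-neighbour resizing followed by scaling channel values to [0, 1]; both choices, and the helper names `resize_nearest`, `to_unit_range` and `preprocess_sequence`, are our own, not the patent's. A center crop or bilinear interpolation would be equally plausible.

```python
from typing import List, Tuple

N = 224  # input resolution N x N stated in [0043]

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]  # rows of (R, G, B) values in 0..255


def resize_nearest(img: Image, out_h: int = N, out_w: int = N) -> Image:
    """Nearest-neighbour resize (assumed method): output pixel (y, x) copies
    source row floor(y * in_h / out_h) and column floor(x * in_w / out_w)."""
    in_h, in_w = len(img), len(img[0])
    return [
        [img[(y * in_h) // out_h][(x * in_w) // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def to_unit_range(img: Image) -> List[List[Tuple[float, ...]]]:
    """Scale 8-bit channel values to floats in [0, 1] (assumed normalisation)."""
    return [[tuple(c / 255.0 for c in px) for px in row] for row in img]


def preprocess_sequence(frames: List[Image]) -> list:
    """Convert an ordered RGB frame sequence into N x N x 3 float inputs,
    preserving frame order so temporal structure is kept."""
    return [to_unit_range(resize_nearest(f)) for f in frames]


if __name__ == "__main__":
    # Synthetic 640x480 frame whose pixel encodes its own coordinates.
    frame = [[(x % 256, y % 256, 0) for x in range(640)] for y in range(480)]
    seq = preprocess_sequence([frame])
    print(len(seq[0]), len(seq[0][0]))  # 224 224
```

Pure Python is used only to keep the sketch self-contained; in a Keras pipeline such as the one in [0042], the same step would normally be done with an image library on arrays. Note that resizing 640×480 directly to 224×224 changes the aspect ratio, which is one reason a crop-then-resize variant may be preferred.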

[0044] Step 1: Dataset Construction

[0045] Select B image sequences of the same resolution from the RGB-D SLAM Dataset at https://vision.in.tum.de/data/datasets/rgbd-dataset, where B = 48. Each image sequence contains C frames, 700 ≤ C ≤ 5000, and each image sample contains RGB three-channel image data, a depth map, the camera position and posture, and...
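The excerpt does not describe how an RGB frame, its depth map and its camera pose are assembled into one sample. In the TUM RGB-D dataset these come from separate files (`rgb.txt`, `depth.txt`, `groundtruth.txt`) whose lines start with a timestamp, and `groundtruth.txt` lines have the form `timestamp tx ty tz qx qy qz qw`. The sketch below assumes samples are formed by matching each RGB timestamp to the nearest depth and pose timestamp within a 0.02 s tolerance, a simplified variant of the dataset's own association tool, and then keeps at most B = 48 sequences with 700 ≤ C ≤ 5000 frames. All function names and the matching rule are hypothetical.

```python
import bisect
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

MIN_FRAMES, MAX_FRAMES = 700, 5000  # range of C from [0045]
NUM_SEQUENCES = 48                  # B from [0045]


@dataclass
class Sample:
    rgb_path: str
    depth_path: str
    position: Tuple[float, ...]     # tx, ty, tz
    orientation: Tuple[float, ...]  # qx, qy, qz, qw (unit quaternion)


def parse_list(text: str) -> Dict[float, List[str]]:
    """Parse a TUM-style list: 'timestamp field...' per line, '#' starts a comment."""
    entries: Dict[float, List[str]] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split()
        entries[float(fields[0])] = fields[1:]
    return entries


def nearest(stamps: List[float], t: float, max_diff: float) -> Optional[float]:
    """Closest timestamp to t in sorted `stamps`, or None if farther than max_diff."""
    i = bisect.bisect_left(stamps, t)
    candidates = stamps[max(i - 1, 0): i + 1]
    if not candidates:
        return None
    best = min(candidates, key=lambda s: abs(s - t))
    return best if abs(best - t) <= max_diff else None


def build_samples(rgb_txt: str, depth_txt: str, gt_txt: str,
                  max_diff: float = 0.02) -> List[Sample]:
    """Pair every RGB frame with its nearest depth map and ground-truth pose."""
    rgb, depth, gt = parse_list(rgb_txt), parse_list(depth_txt), parse_list(gt_txt)
    depth_stamps, gt_stamps = sorted(depth), sorted(gt)
    samples = []
    for t in sorted(rgb):
        td = nearest(depth_stamps, t, max_diff)
        tg = nearest(gt_stamps, t, max_diff)
        if td is None or tg is None:
            continue  # drop frames lacking a matching depth map or pose
        pose = [float(v) for v in gt[tg]]
        samples.append(Sample(rgb[t][0], depth[td][0],
                              tuple(pose[:3]), tuple(pose[3:7])))
    return samples


def keep_sequence(samples: List[Sample]) -> bool:
    """A sequence qualifies if its frame count C satisfies 700 <= C <= 5000."""
    return MIN_FRAMES <= len(samples) <= MAX_FRAMES


def select_sequences(sequences: Dict[str, List[Sample]],
                     b: int = NUM_SEQUENCES) -> List[str]:
    """Names of at most b qualifying sequences, in sorted name order."""
    chosen = [name for name, s in sorted(sequences.items()) if keep_sequence(s)]
    return chosen[:b]
```

For example, with RGB frames at 1.00, 1.10 and 1.20 s and depth maps only at 1.01 and 1.11 s, the 1.20 s frame has no depth map within 0.02 s and is dropped, so two samples remain. Dropping unmatched frames (rather than interpolating poses) is a deliberate simplification in this sketch.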
