Behavior recognition method, device, equipment and medium based on an LRCN network

A behavior recognition technology, applied in character and pattern recognition, biological neural network models, instruments, etc., that addresses the problem of high computational overhead and reduces the amount of calculation.


Embodiment Construction

[0023] To make the objects, technical solutions, and advantages of the present invention clearer, the invention is described in further detail below with reference to the embodiments and drawings. The schematic embodiments described here serve to explain the invention and are not intended to limit it.

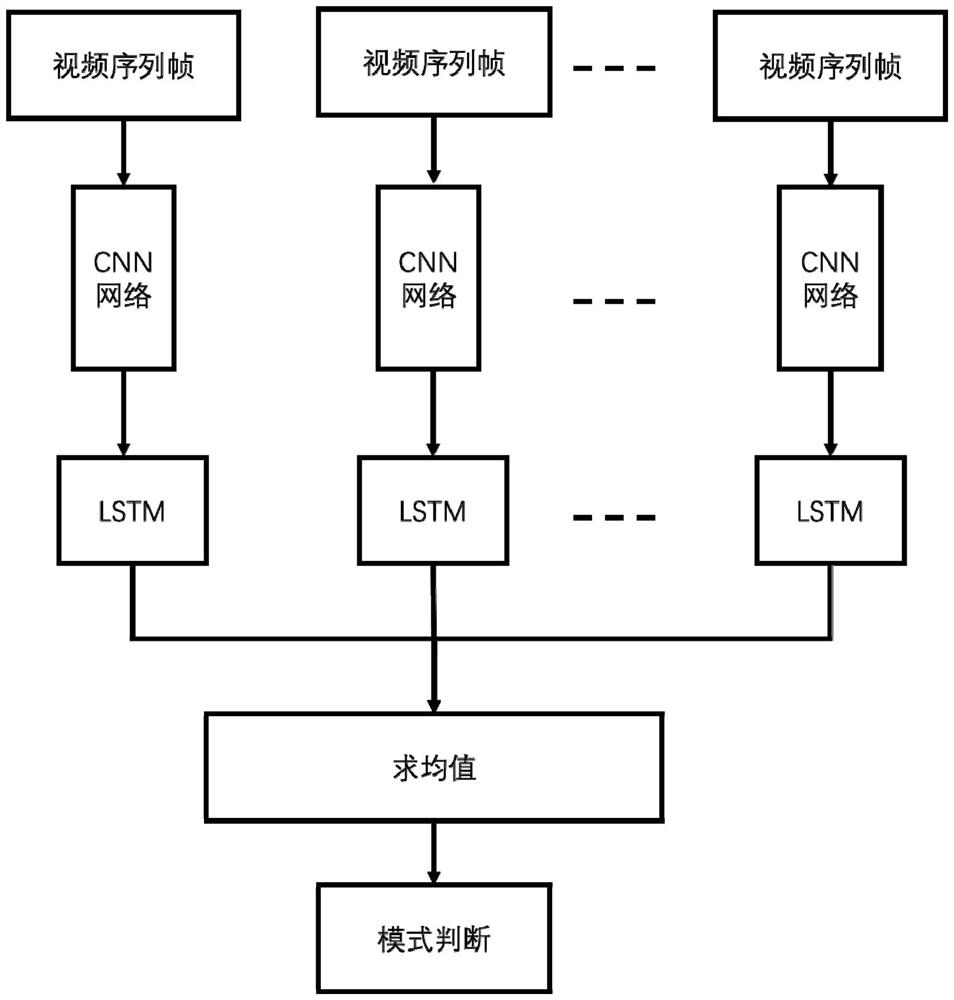

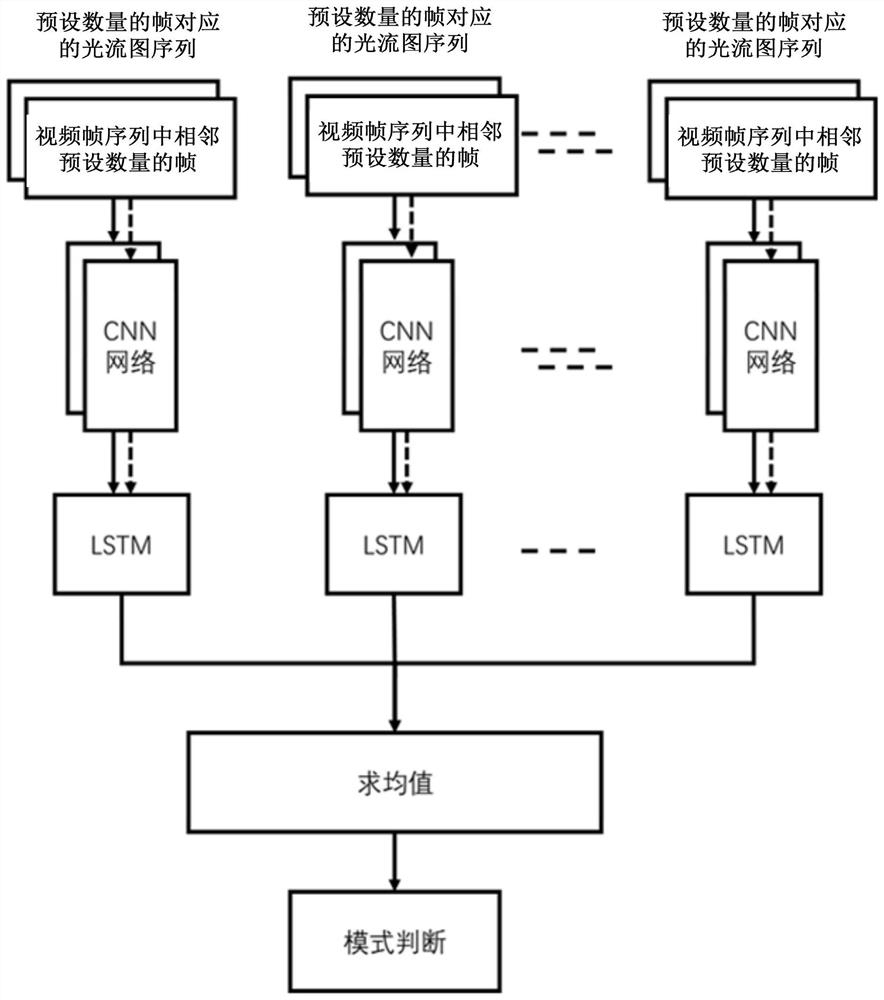

[0024] From the LRCN network structure shown in Figure 1, the inventors found that convolution accounts for a very large share of the overall computation. When recognizing the behavior in each video sequence, every frame at the input of the LRCN network is fed into its own convolutional neural network, so the sequence passes through 20 separate convolutional neural networks, and the weights of the convolutional neural network differ at each time step. In fact, however, the image information in adjacent frames is highly redundant, and computing directly on the original image...
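The two observations in [0024] (convolution cost grows with every input frame, and adjacent frames are largely redundant) can be made concrete with a small back-of-the-envelope sketch. This is not the patented method, which is truncated above; it only illustrates the cost argument. The layer shapes, the `ConvLayer` class, the pixel threshold, and the function names are hypothetical, chosen for illustration; the frame count of 20 follows the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConvLayer:
    """Hypothetical dense 2-D convolution layer described by its shapes."""
    in_channels: int
    out_channels: int
    kernel: int
    out_h: int
    out_w: int

    def macs(self) -> int:
        # Multiply-accumulate operations for one forward pass (bias ignored).
        return (self.out_h * self.out_w * self.out_channels
                * self.in_channels * self.kernel * self.kernel)


def per_frame_macs(layers):
    """Convolution cost of running one frame through the CNN."""
    return sum(layer.macs() for layer in layers)


def lrcn_conv_macs(layers, num_frames=20):
    """Every frame gets its own CNN pass, so cost scales linearly with frames."""
    return num_frames * per_frame_macs(layers)


def adjacent_frame_redundancy(frames, threshold=0):
    """Fraction of pixels changing by at most `threshold` between consecutive
    grayscale frames (each frame is a list of rows of ints)."""
    if len(frames) < 2:
        raise ValueError("need at least two frames")
    unchanged = total = 0
    for prev, curr in zip(frames, frames[1:]):
        for row_prev, row_curr in zip(prev, curr):
            for a, b in zip(row_prev, row_curr):
                total += 1
                if abs(a - b) <= threshold:
                    unchanged += 1
    return unchanged / total


if __name__ == "__main__":
    # Hypothetical two-layer CNN front end; shapes are illustrative only.
    layers = [ConvLayer(3, 64, 3, 112, 112), ConvLayer(64, 128, 3, 56, 56)]
    print(per_frame_macs(layers))       # 252887040
    print(lrcn_conv_macs(layers, 20))   # 5057740800

    frames = [[[0, 0], [0, 0]], [[0, 0], [0, 5]], [[0, 0], [0, 5]]]
    print(adjacent_frame_redundancy(frames))  # 0.875
```

With these assumed shapes, one frame costs about 0.25 GMACs of convolution and a 20-frame sequence about 5 GMACs. A high redundancy ratio between consecutive frames suggests that much of that per-frame work repeats information the network has already seen.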
