Method and device for determining the pose of an object to be grasped, and electronic device

A technique for determining the pose of an object to be grasped, applied in the field of devices and electronic equipment. It addresses poor robustness, high labor and time costs, and the resulting loss of accuracy and success rate in object grasping, and thereby improves grasping accuracy and success rate while saving labor and time.

Embodiment approach

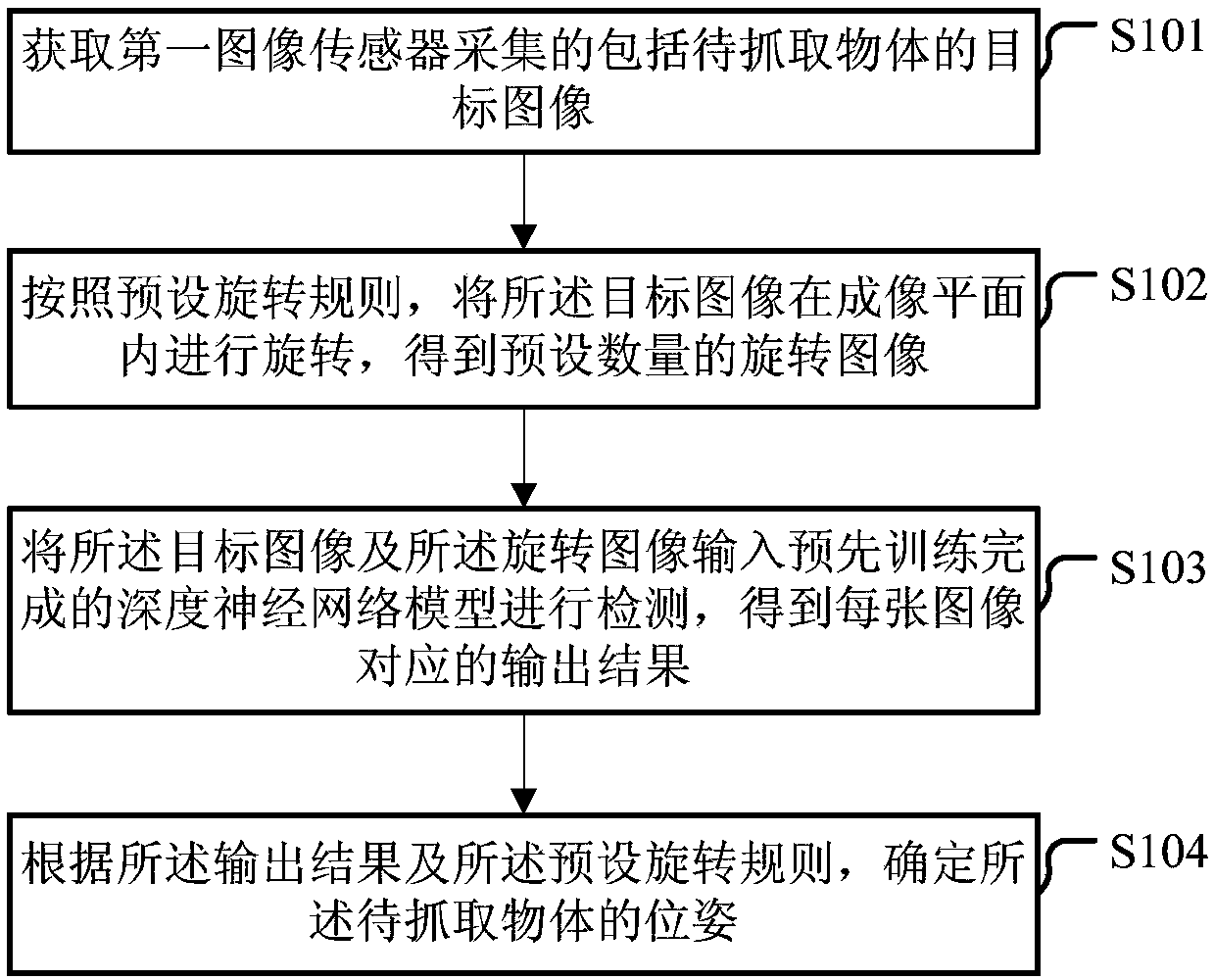

[0154] As an implementation of the embodiment of the present invention, the above method may further include:

[0155] Controlling the robotic arm to grasp the object to be grasped according to the pose of the object to be grasped.

[0156] Because the pose of the object to be grasped relative to the coordinate system of the robotic arm can be determined, the electronic device can, according to that pose, control the robotic arm to move to the position of the object to be grasped and then grasp it.

[0157] Thus, in this embodiment, once the pose of the object to be grasped has been determined, the electronic device can control the robotic arm to grasp the object according to that pose, with high grasping accuracy.
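The control step described in [0155] and [0156] can be illustrated with a minimal sketch. It is not the method claimed in the patent: the `Pose` type, the `plan_grasp` function, the command names, the 0.10 m standoff, and the choice to approach along the object's z-axis are all hypothetical assumptions made for illustration. The sketch only shows how a pose already expressed in the robotic arm's coordinate system could be turned into an ordered motion sequence.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Pose:
    """Hypothetical pose of the object in the robotic arm's coordinate system."""
    position: Vec3                      # x, y, z in metres
    rotation: Tuple[Vec3, Vec3, Vec3]   # 3x3 rotation matrix, row-major


def approach_axis(pose: Pose) -> Vec3:
    """Return the object's z-axis expressed in the arm frame.

    Assumption: the gripper approaches along this axis. A real system
    would pick the approach direction from its own grasp planner.
    """
    return (pose.rotation[0][2], pose.rotation[1][2], pose.rotation[2][2])


def plan_grasp(pose: Pose, standoff: float = 0.10) -> List[Tuple[str, Optional[Vec3]]]:
    """Build an ordered list of (command, target) steps for one grasp.

    The pre-grasp point sits `standoff` metres from the object along the
    approach axis, so the arm makes the final move in a straight line.
    """
    axis = approach_axis(pose)
    pre_grasp = (
        pose.position[0] + standoff * axis[0],
        pose.position[1] + standoff * axis[1],
        pose.position[2] + standoff * axis[2],
    )
    return [
        ("open_gripper", None),
        ("move_to", pre_grasp),       # hover near the object
        ("move_to", pose.position),   # move to the object's position
        ("close_gripper", None),      # grasp
        ("move_to", pre_grasp),       # retreat with the object
    ]


if __name__ == "__main__":
    identity = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
    for command, target in plan_grasp(Pose((0.4, 0.0, 0.05), identity)):
        print(command, target)
```

In practice each step would be passed to the arm controller's own motion interface, which this sketch deliberately leaves out because the source does not describe it.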

[0158] As an implementation of the embodiment of the present invention, as shown in Figu...
