Text summary generation method based on a sentence association attention mechanism

A text summarization technique based on sentence association attention, applied in biological neural network models, special data processing applications, instruments, etc. It addresses problems such as poor sentence coherence, slow progress in generating summaries, and high information redundancy.

Embodiment 1

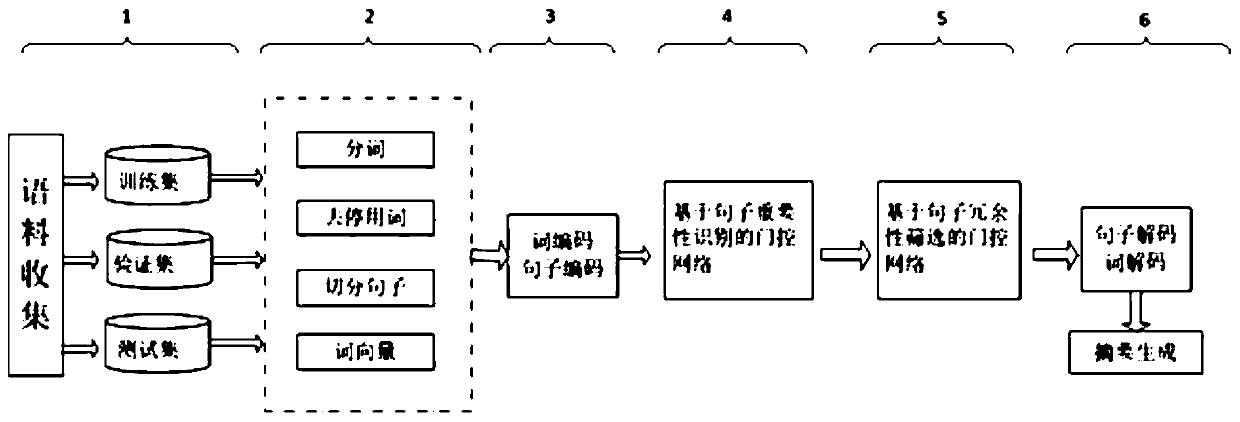

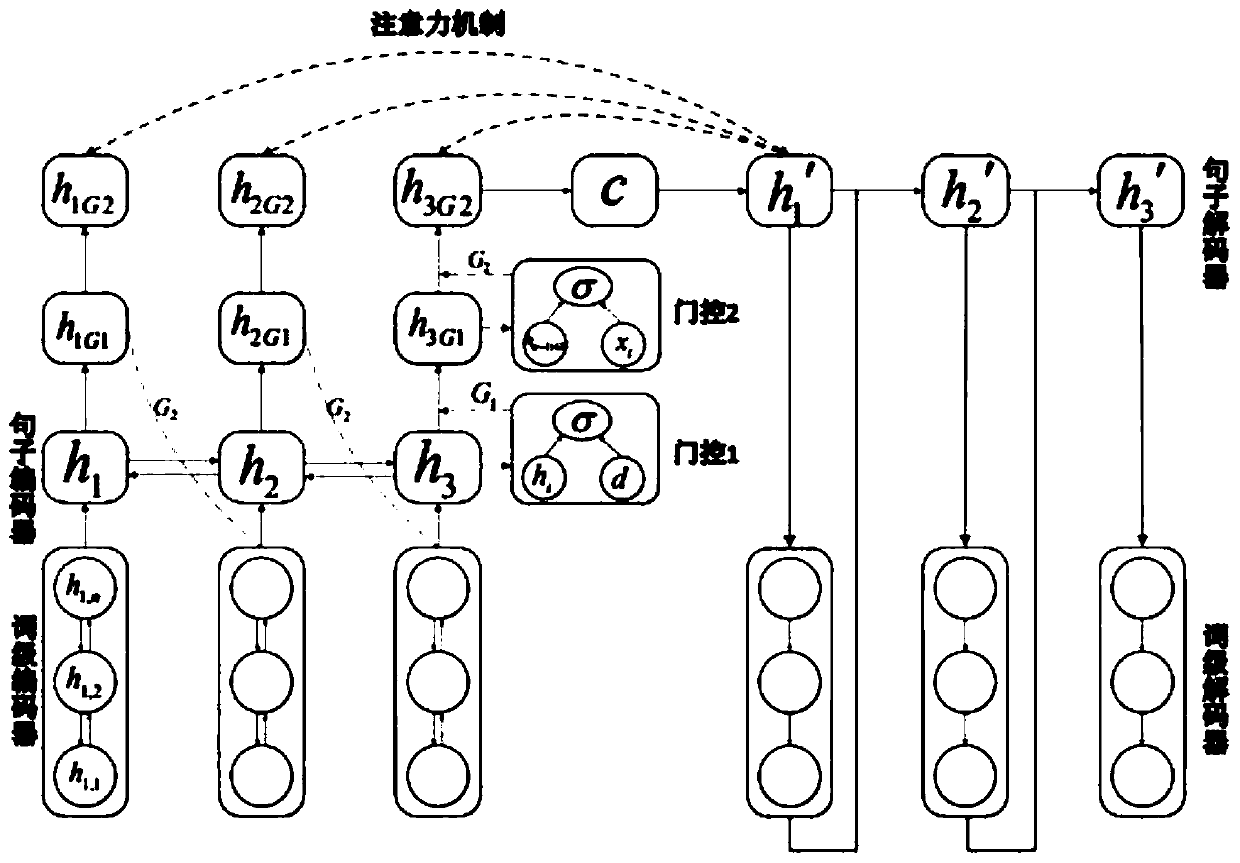

[0050] Embodiment 1: As shown in Figures 1-2, the text summary generation method based on the sentence association attention mechanism proceeds through the following specific steps:

[0051] Step 1. More than 220,000 news documents were collected and organized as experimental data. The data is divided into three parts: a training set, a validation set, and a test set. The training set contains more than 200,000 Chinese news documents; the validation set and the test set each contain more than 10,000 documents, covering news events from recent years.
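The three-way split described in Step 1 can be sketched as follows. This is only an illustration under stated assumptions: the source does not say whether the split is random, so the seeded shuffle, the function name `split_corpus`, and the toy corpus (scaled down 1:1000 from the stated sizes) are all hypothetical.

```python
import random


def split_corpus(docs, n_val, n_test, seed=0):
    """Shuffle docs and split them into (train, val, test).

    Assumption: a seeded random split. The source only states the
    approximate sizes of the three parts, not how they were chosen.
    """
    if n_val + n_test >= len(docs):
        raise ValueError("validation and test sizes leave no training data")
    shuffled = list(docs)                 # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)
    val = shuffled[:n_val]
    test = shuffled[n_val:n_val + n_test]
    train = shuffled[n_val + n_test:]
    return train, val, test


# Toy stand-in for the news corpus: 220 items instead of 220,000+.
docs = [f"doc-{i}" for i in range(220)]
train, val, test = split_corpus(docs, n_val=10, n_test=10)
print(len(train), len(val), len(test))  # 200 10 10
```

Fixing the seed keeps the three parts reproducible across runs, which matters when validation and test results are compared between experiments.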

[0052] Step 2. Before performing the summarization task, the documents are preprocessed, including steps such as segmentation, word segmentation, and stop-word removal. The preprocessing parameters are set as follows: 100-dimensional word vectors pre-trained with word2vec are used to initialize the embeddings and are allowed to be updated during training, and the hidden state dimensions of the encoder and decod...
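A minimal sketch of the preprocessing in Step 2, using only the Python standard library. Several pieces are assumptions rather than details from the source: "segmentation" is interpreted here as splitting on sentence-final punctuation, the default tokenizer is a whitespace split standing in for a real Chinese word segmenter (for example `jieba.lcut` could be passed as `tokenize`), and the function names, the stop-word set, and the random range for out-of-vocabulary words are hypothetical. Only the 100-dimensional embedding size and the use of pre-trained vectors as initialization come from the text.

```python
import random
import re

EMBED_DIM = 100  # embedding size stated in the source


def preprocess(text, stopwords, tokenize=str.split):
    """Split text into sentences, tokenize each, and drop stop words.

    Assumption: sentences end at Chinese or ASCII terminal punctuation.
    `tokenize` is a placeholder for a real word segmenter.
    """
    sentences = [s for s in re.split(r"[。！？!?]+", text) if s.strip()]
    result = []
    for sent in sentences:
        tokens = [t for t in tokenize(sent) if t not in stopwords]
        if tokens:
            result.append(tokens)
    return result


def init_embeddings(vocab, pretrained, dim=EMBED_DIM, seed=0):
    """Build an initial embedding table, one row per vocabulary word.

    Words found in `pretrained` (e.g. word2vec vectors) copy that vector;
    other words get small random values (an assumption). In a training
    framework this table would be a trainable parameter, since the source
    says the embeddings are updated during training.
    """
    rng = random.Random(seed)
    table = []
    for word in vocab:
        vec = pretrained.get(word)
        if vec is None:
            vec = [rng.uniform(-0.1, 0.1) for _ in range(dim)]
        table.append(list(vec))
    return table


# Pre-spaced toy text so the whitespace tokenizer is sufficient.
text = "记者 在 现场 报道 了 事故 。 救援 工作 仍 在 进行 ！"
stopwords = {"在", "了", "仍"}
sents = preprocess(text, stopwords)
print(sents)  # [['记者', '现场', '报道', '事故'], ['救援', '工作', '进行']]

vocab = sorted({t for s in sents for t in s})
pretrained = {"记者": [0.5] * EMBED_DIM}  # hypothetical pre-trained vector
table = init_embeddings(vocab, pretrained)
print(len(table), len(table[0]))  # 7 100
```

Keeping the output as a list of token lists per sentence preserves sentence boundaries, which a sentence-level attention mechanism would need downstream.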
