A Method of Address Information Feature Extraction Based on Deep Neural Network Model

A method for extracting address information features based on a deep neural network model, in the technical fields of deep neural networks and address information. It addresses three problems of existing approaches: they cannot capture the deeper meaning of text features, they generalize weakly, and they leave address data as information islands. It achieves a complete model structure and training framework, efficient feature extraction, accurate fitting, and efficient computation.



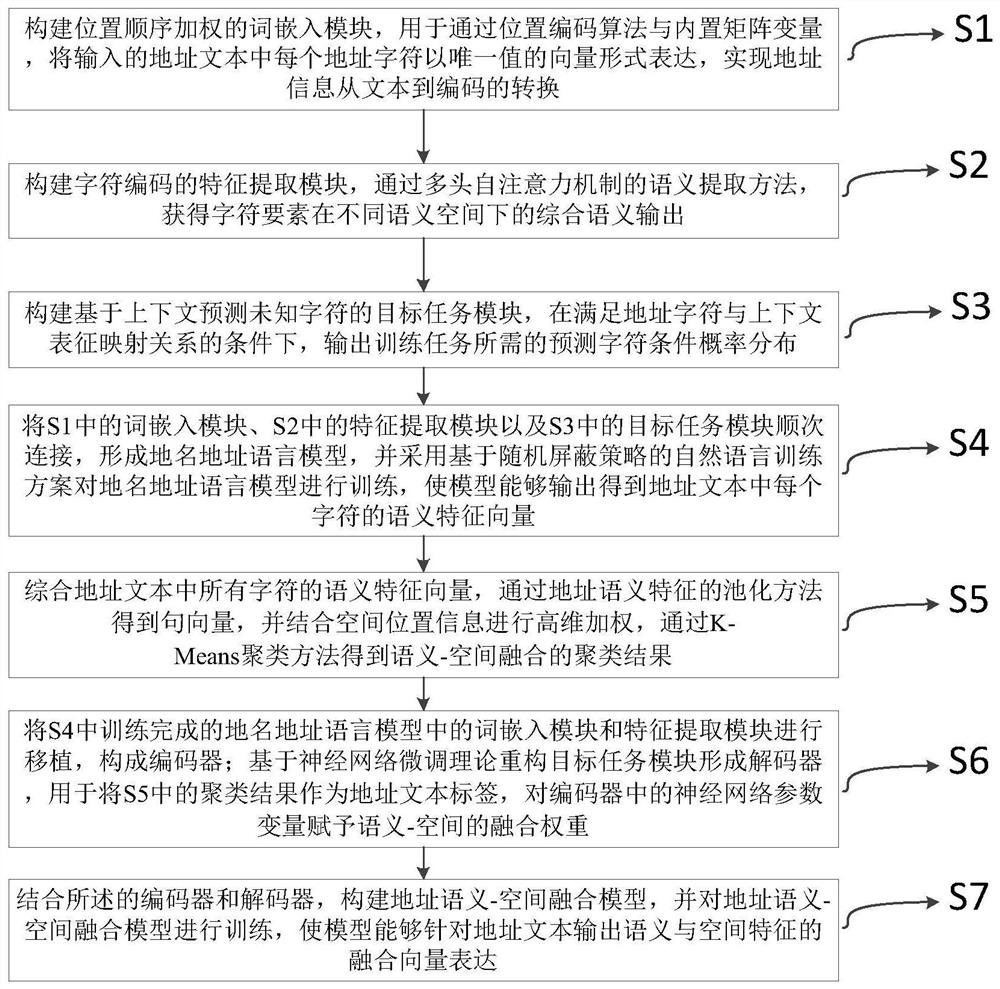


Examples

Embodiment 1

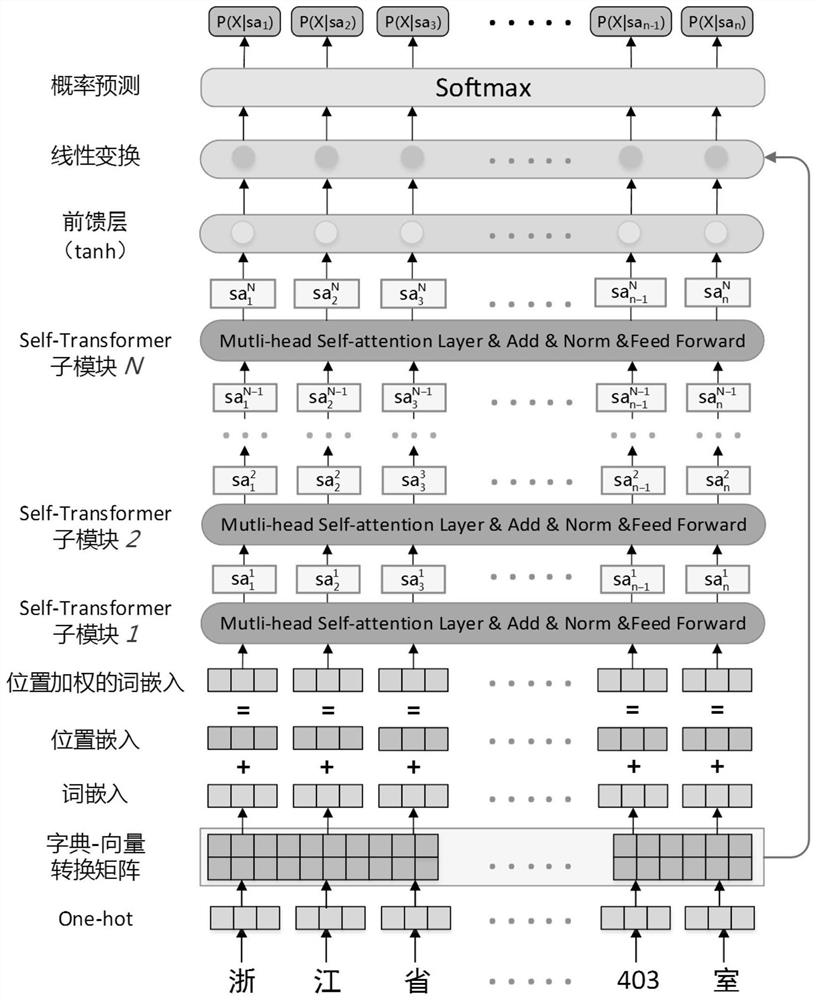

[0250] In this embodiment, an address text data set is constructed from 2 million place-name and address records in Shangcheng District, Hangzhou City, and feature vectors are extracted from it. The basic steps follow S1-S7 described above and are not repeated here; the following mainly demonstrates specific implementation details of each step and their effects.

[0251] 1. Following the method described in steps S1-S7, ALM and GSAM are built with the TensorFlow deep learning framework. A model checkpoint is configured at the same time so that all neural network parameter variables except those of the target task module are saved, which makes them easy to transfer to the subsequent fine-tuning task. The model hyperparameters are set through the hype-para.config configuration file and mainly fall into the following categories:

[0252] 1) Training batch size batch_size: 64; 2) Initial learning rate η: 0.00005; 3) Number of tra...
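The patent names the hype-para.config file but does not publish its format. The following is a minimal sketch that assumes one `key: value` pair per line with `#` comments; the key names `batch_size` and `learning_rate`, the `HyperParams` class, and the helper functions are hypothetical stand-ins for the two values listed in [0252]. It also works out the number of optimizer steps per pass over the data, assuming all 2 million records from [0250] are used for training.

```python
import math
from dataclasses import dataclass

# Hypothetical config text: the real layout of hype-para.config is not given.
SAMPLE_CONFIG = """
# hyperparameters (assumed key names)
batch_size: 64
learning_rate: 0.00005
"""


@dataclass
class HyperParams:
    batch_size: int
    learning_rate: float  # initial learning rate (eta)


def parse_config(text: str) -> HyperParams:
    """Parse 'key: value' lines, skipping blanks and '#' comments; unknown keys are ignored."""
    values = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        key, _, value = line.partition(":")
        values[key.strip()] = value.strip()
    return HyperParams(batch_size=int(values["batch_size"]),
                       learning_rate=float(values["learning_rate"]))


def steps_per_epoch(num_samples: int, batch_size: int) -> int:
    """Number of batches needed to see every sample once (last batch may be partial)."""
    return math.ceil(num_samples / batch_size)


hp = parse_config(SAMPLE_CONFIG)
print(steps_per_epoch(2_000_000, hp.batch_size))  # → 31250
```

With batch_size 64, 2,000,000 / 64 = 31,250 exactly, so under this assumption one epoch is 31,250 parameter updates at the initial learning rate of 0.00005.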

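The checkpoint rule in [0251] (save every parameter except those of the target task module, then reuse them for fine-tuning) can be sketched without a framework. This is a minimal sketch under stated assumptions: parameters are keyed by scope-prefixed names, and the prefix `target_task/`, the names `alm/embedding` and `gsam/attention`, and the JSON string standing in for a checkpoint file are all hypothetical. In TensorFlow 1.x-style code, the same filtering would typically be expressed by passing the filtered variable list as `var_list` to `tf.compat.v1.train.Saver`.

```python
import json
from typing import Dict, List

# Hypothetical prefix marking the target-task module's variables;
# the patent does not publish its variable names.
TARGET_TASK_PREFIX = "target_task/"

Params = Dict[str, List[float]]


def transferable_params(params: Params, exclude_prefix: str = TARGET_TASK_PREFIX) -> Params:
    """Keep every parameter except those belonging to the target-task module."""
    return {name: value for name, value in params.items()
            if not name.startswith(exclude_prefix)}


def to_checkpoint_json(params: Params) -> str:
    """Serialize only the transferable parameters (stand-in for a real checkpoint file)."""
    return json.dumps(transferable_params(params))


def restore_for_finetuning(new_params: Params, checkpoint: str) -> Params:
    """Copy saved values into a new model, leaving its freshly initialized task head untouched."""
    saved = json.loads(checkpoint)
    restored = dict(new_params)
    for name, value in saved.items():
        if name in restored:  # skip variables the new model does not define
            restored[name] = value
    return restored


# Hypothetical variable names for the pre-trained and the fine-tuning model.
pretrained = {"alm/embedding": [0.1, 0.2], "gsam/attention": [0.3],
              "target_task/dense": [0.9]}
fresh = {"alm/embedding": [0.0, 0.0], "gsam/attention": [0.0],
         "target_task/dense": [0.5]}
print(restore_for_finetuning(fresh, to_checkpoint_json(pretrained)))
# → {'alm/embedding': [0.1, 0.2], 'gsam/attention': [0.3], 'target_task/dense': [0.5]}
```

Excluding the task head at save time, rather than at load time, keeps the checkpoint independent of whichever downstream task is fine-tuned next; the new task head simply keeps its own initialization.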