Method for constructing JND model of screen image

A method for constructing a just-noticeable-difference (JND) model of screen images, applied in the field of screen image visual redundancy estimation. It addresses the problem that the visual redundancy information of screen images cannot be estimated well.

Embodiment Construction

[0038] The present invention will be further described below through specific embodiments.

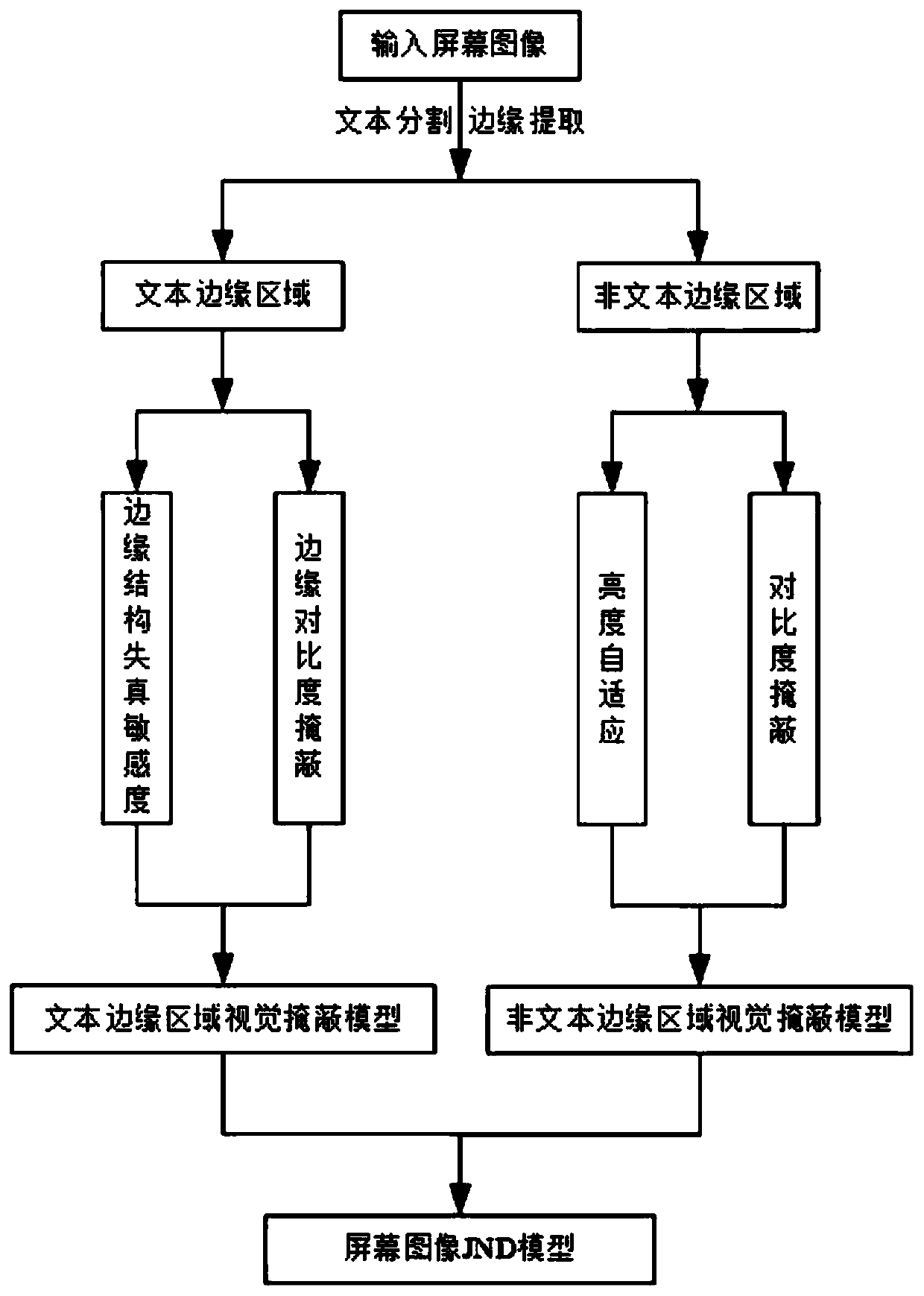

[0039] To accurately estimate the visually redundant information in a screen image, the present invention provides a method for constructing a screen image JND model. As shown in Figure 1, the specific implementation steps are as follows:

[0040] 1) Input the screen image.

[0041] 2) Use a text segmentation technique to obtain the text area of the screen image.

[0042] 3) Use a Gabor filter to extract the edges of the text area, so that the screen image is divided into a text edge area and a non-text edge area.

[0043] 4) Use the edge width and the edge contrast of the text edge pixels to calculate the edge structure distortion sensitivity and the edge contrast masking, respectively, and obtain the visual masking model of the text edge area.

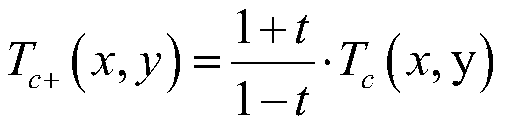

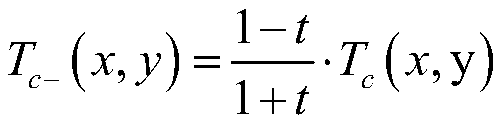

[0044] Specifically, the strong edge contrast $T_{c+}(x,y)$ and the weak edge contrast $T_{c-}(x,y)$ are calculated as follows:

[0045]

[0046] ...
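Steps 2) and 3) of the procedure above can be illustrated with a minimal NumPy sketch. It is not the patented implementation: it assumes the text mask from step 2) is already available as a boolean array, and the function names (`gabor_kernel`, `filter2d`, `split_text_edges`), the kernel size, wavelength, number of orientations and relative threshold are all hypothetical choices made for illustration.

```python
import numpy as np


def gabor_kernel(ksize=9, sigma=2.0, theta=0.0, lambd=4.0, gamma=0.5):
    """Odd-symmetric (sine-phase) Gabor kernel; parameter values are assumptions."""
    half = ksize // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    # Rotate coordinates so the carrier wave runs along direction theta.
    xr = x * np.cos(theta) + y * np.sin(theta)
    yr = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(xr ** 2 + (gamma * yr) ** 2) / (2.0 * sigma ** 2))
    return envelope * np.sin(2.0 * np.pi * xr / lambd)


def filter2d(image, kernel):
    """Same-size 2-D correlation with edge-replicated borders."""
    half = kernel.shape[0] // 2
    padded = np.pad(image, half, mode="edge")
    h, w = image.shape
    out = np.zeros((h, w), dtype=float)
    for dy in range(kernel.shape[0]):
        for dx in range(kernel.shape[1]):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def split_text_edges(gray, text_mask, n_orient=4, rel_thresh=0.3, eps=1e-6):
    """Return a boolean mask of text edge pixels; its complement is the
    non-text-edge area. The thresholding rule is an illustrative assumption."""
    gray = np.asarray(gray, dtype=float)
    response = np.zeros(gray.shape, dtype=float)
    # Keep the strongest absolute response over a small bank of orientations.
    for k in range(n_orient):
        kernel = gabor_kernel(theta=k * np.pi / n_orient)
        response = np.maximum(response, np.abs(filter2d(gray, kernel)))
    peak = response.max()
    if peak < eps:  # flat image: no edges at all
        return np.zeros(gray.shape, dtype=bool)
    edges = response >= rel_thresh * peak
    # Only edges inside the segmented text area count as text edges.
    return edges & np.asarray(text_mask, dtype=bool)
```

Calling `split_text_edges(gray, text_mask)` on a grayscale array and a boolean text mask of the same shape yields the text edge mask, and `~mask` gives the non-text-edge area. The sine-phase kernel is used here because it sums to zero, so flat regions produce no response. A production version would more likely rely on an optimized filtering routine than on the explicit loops shown.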
