Human-machine-object interaction robotic arm teaching system based on RGB-D images

An RGB-D and RGB image technology applied in the field of human-machine-object interaction robotic arm teaching systems. It addresses the high dimensionality of joint action data, the long training time of learning models, and the resulting curse of dimensionality. Effect: the curse of dimensionality is avoided.
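To make the dimensionality argument concrete, the sketch below counts the scalars in one flattened joint-space demonstration and in one high-level action. The arm size, sampling rate, duration, and the `HighLevelAction` fields are hypothetical illustration values, not parameters or formats taken from this patent.

```python
from dataclasses import dataclass
from typing import Tuple


def joint_trajectory_dim(num_joints: int, rate_hz: int, duration_s: int) -> int:
    """Scalars in one joint-space demonstration flattened into a single vector."""
    steps = rate_hz * duration_s
    return num_joints * steps


@dataclass
class HighLevelAction:
    """Hypothetical high-level action encoding (assumption, not the patent's format)."""
    primitive: str                          # e.g. "pick" or "place"
    object_id: int                          # object selected in the RGB image
    target_xyz: Tuple[float, float, float]  # target position in the robot base frame

    def dim(self) -> int:
        # one discrete primitive + one discrete object id + three coordinates
        return 1 + 1 + len(self.target_xyz)


# Hypothetical 6-joint arm sampled at 100 Hz over a 10 s demonstration
joint_dim = joint_trajectory_dim(num_joints=6, rate_hz=100, duration_s=10)
action = HighLevelAction("pick", object_id=3, target_xyz=(0.4, -0.1, 0.05))
print(joint_dim, action.dim())  # 6000 5
```

Under these assumed numbers, a learner that consumes high-level actions works with a handful of values per demonstration instead of thousands, which is the intuition behind avoiding the curse of dimensionality.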

Detailed Description of Embodiments

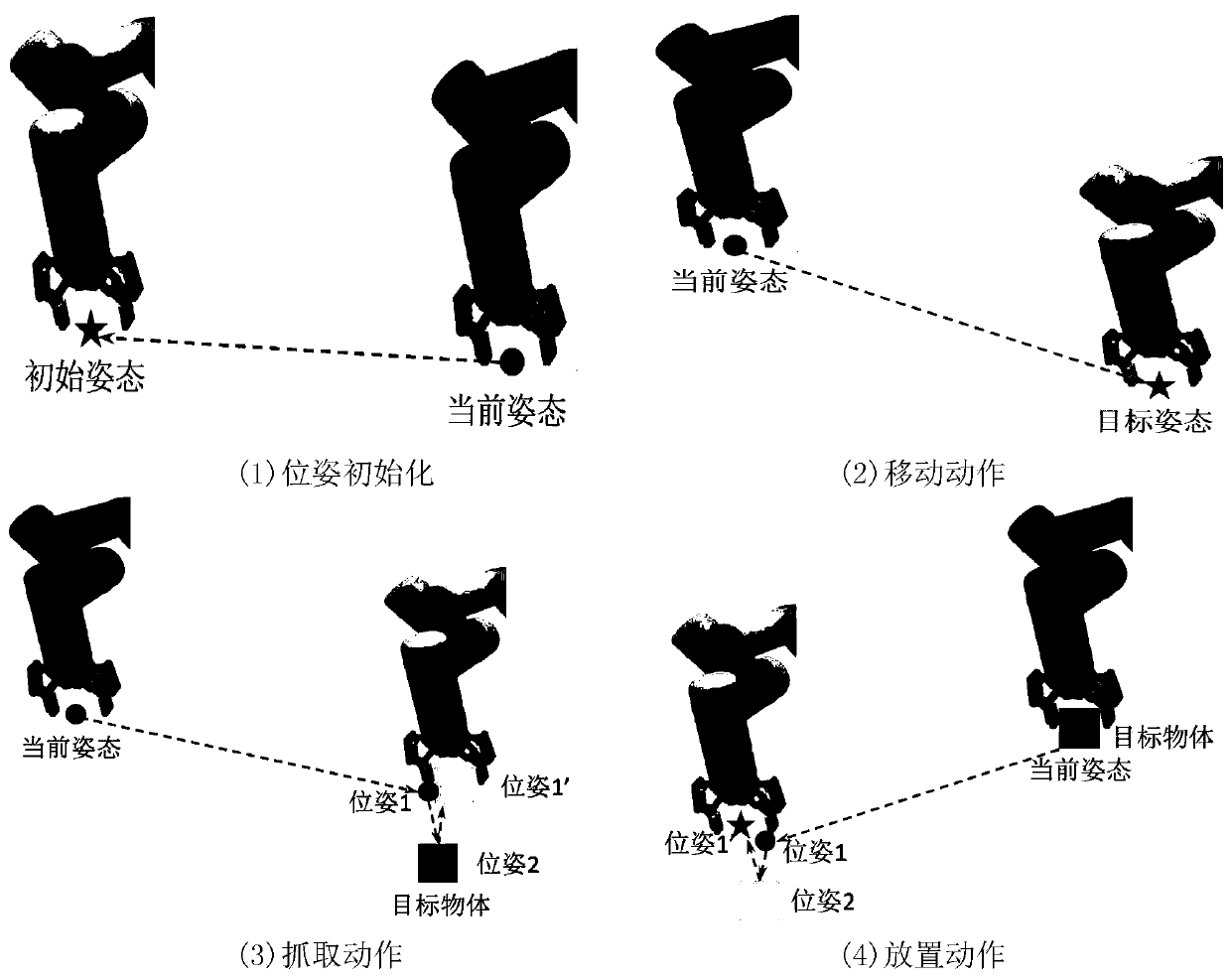

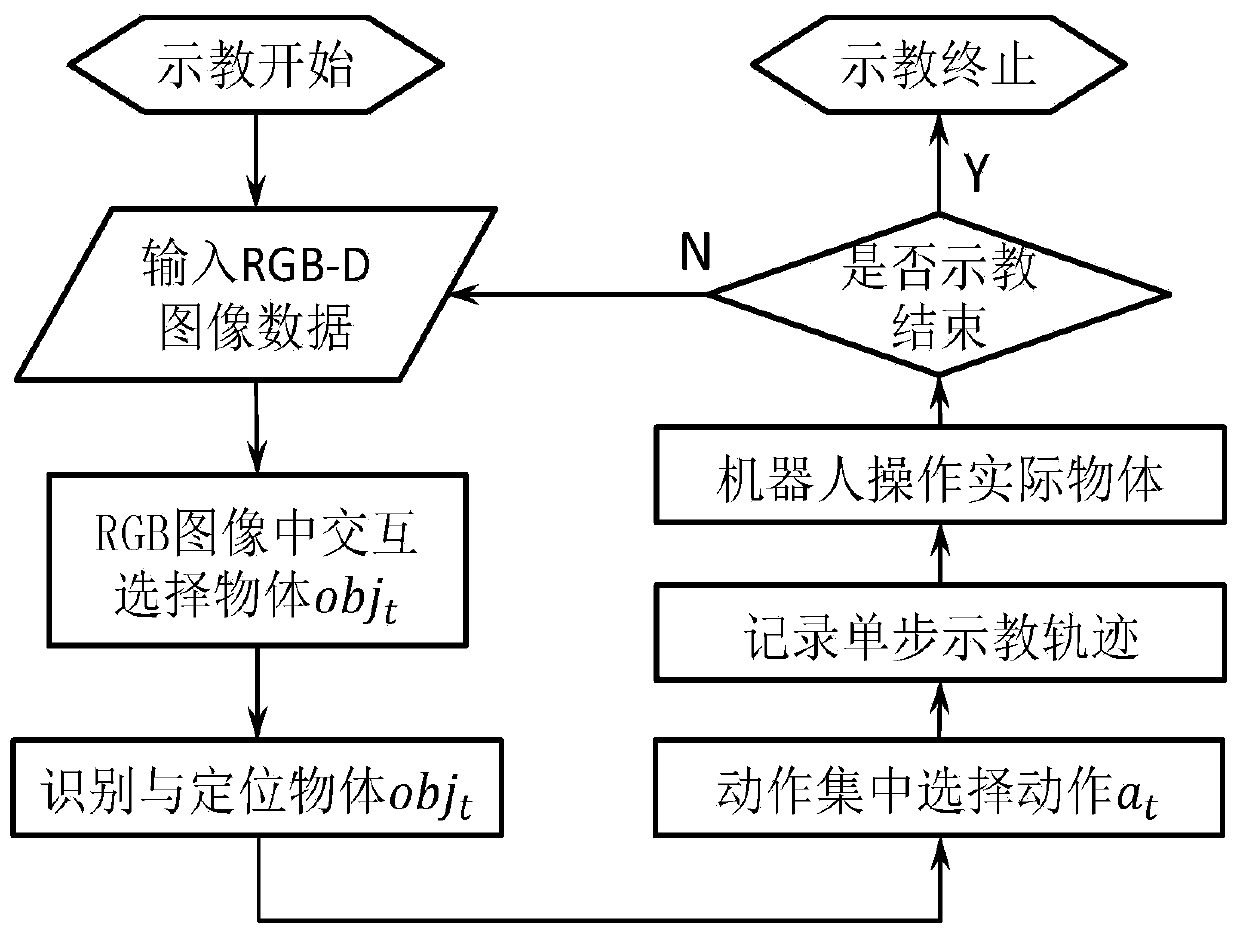

[0041] Compared with traditional teaching methods, the RGB-D-image-based human-machine-object interaction manipulator teaching system and method proposed here is a task-oriented, high-level, and simple way of teaching. Instead of teaching each joint individually, it directly outputs abstract high-level actions: the user interacts with objects in the RGB image space and selects actions, and the manipulator is then controlled to operate on those objects in real space. Teaching can also be carried out in a simulation environment, which is efficient, safe, and low-cost, and makes data sharing possible.
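The interaction described above (selecting an object in the RGB image and having the manipulator act on it in real space) can be sketched as lifting a clicked pixel to a 3-D point and wrapping it in a high-level command. This is a minimal sketch assuming a depth image already registered to the 960×540 RGB image, a pinhole camera model, and a known camera-to-robot-base transform `T_base_cam`; the intrinsic values, `make_action`, and the action dictionary layout are all hypothetical and are not taken from the patent.

```python
import numpy as np
from dataclasses import dataclass


@dataclass
class CameraIntrinsics:
    fx: float  # focal length in pixels (x)
    fy: float  # focal length in pixels (y)
    cx: float  # principal point (x)
    cy: float  # principal point (y)


def backproject(u: int, v: int, depth_m: np.ndarray, K: CameraIntrinsics) -> np.ndarray:
    """Lift pixel (u, v) of a depth image registered to the RGB image into the camera frame."""
    z = float(depth_m[v, u])  # row index is v, column index is u
    if not np.isfinite(z) or z <= 0.0:
        raise ValueError(f"no valid depth at pixel ({u}, {v})")
    x = (u - K.cx) * z / K.fx
    y = (v - K.cy) * z / K.fy
    return np.array([x, y, z])


def camera_to_base(p_cam: np.ndarray, T_base_cam: np.ndarray) -> np.ndarray:
    """Apply a 4x4 homogeneous transform mapping camera-frame points into the robot base frame."""
    return (T_base_cam @ np.append(p_cam, 1.0))[:3]


def make_action(primitive: str, u: int, v: int, depth_m: np.ndarray,
                K: CameraIntrinsics, T_base_cam: np.ndarray) -> dict:
    """Hypothetical: turn a click in the RGB image plus a chosen primitive into a high-level command."""
    target = camera_to_base(backproject(u, v, depth_m, K), T_base_cam)
    return {"primitive": primitive, "target_xyz": target.tolist()}


# Usage with made-up values: flat scene 1 m away, assumed intrinsics, identity extrinsics
depth = np.full((540, 960), 1.0)
K = CameraIntrinsics(fx=540.0, fy=540.0, cx=480.0, cy=270.0)
print(make_action("pick", 750, 270, depth, K, np.eye(4)))
# {'primitive': 'pick', 'target_xyz': [0.5, 0.0, 1.0]}
```

Keeping the command at the level of "primitive plus target" is what lets the same selection be replayed on a simulated or a real arm; the joint-level motion is left to the robot's own planner.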

[0042] In this embodiment, the following implementation is adopted:

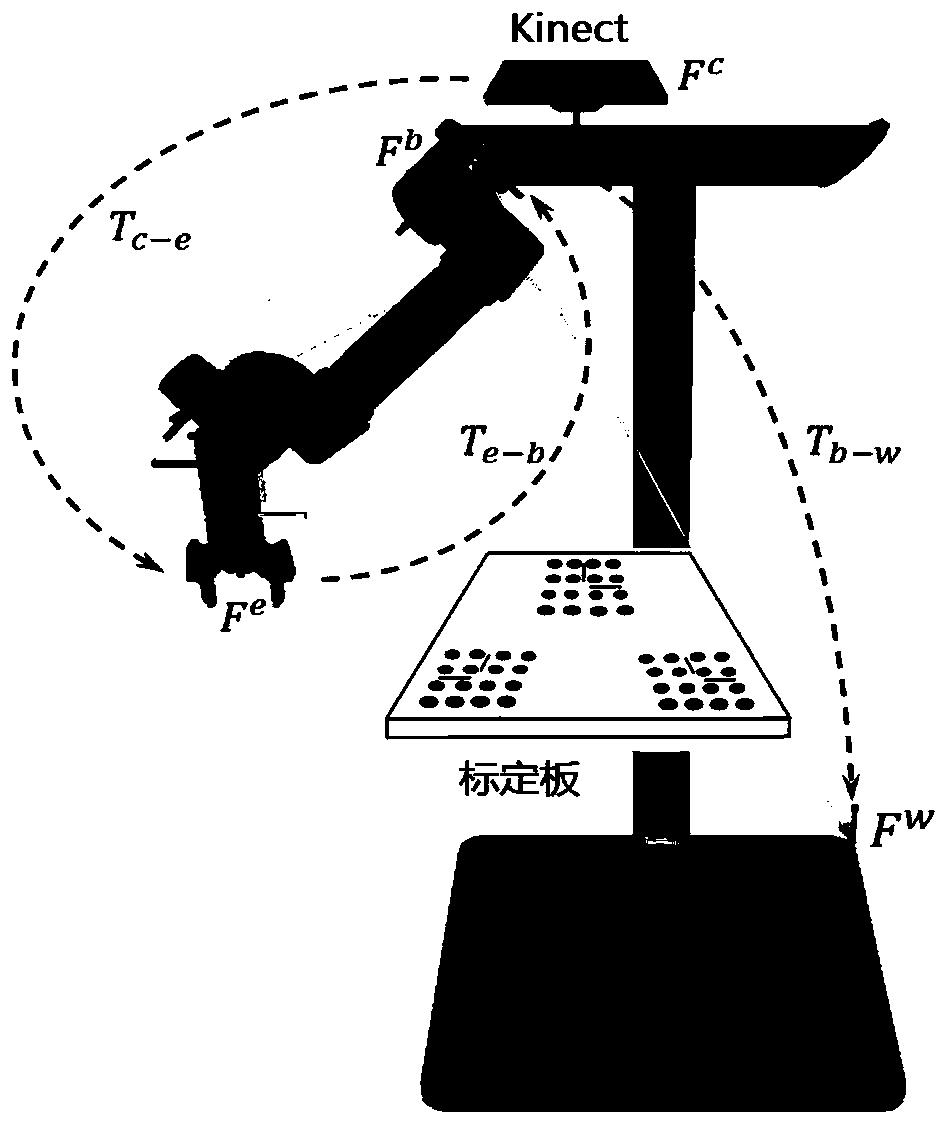

[0043] The construction steps of the robot teaching platform are as follows:

[0044] Step (1): Call the libfreenect2 driver to obtain camera image data, subscribe to the RGB image and the point cloud, both at a resolution of 960×540 pixels, and use them to calibrate the RGB camera, the depth camera, and the relative pose between the two cameras, and e...
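The description of Step (1) is truncated, so the patent's calibration procedure itself is not reproduced here. As a hedged illustration of what the calibrated relative pose is used for, the sketch below maps points from the depth-camera frame into RGB pixel coordinates. It assumes a distortion-free pinhole model, a relative pose written as p_rgb = R·p_depth + t, and an RGB intrinsic matrix `K_rgb`; the function name and all numeric values are hypothetical.

```python
import numpy as np


def register_depth_to_rgb(points_depth: np.ndarray, R: np.ndarray, t: np.ndarray,
                          K_rgb: np.ndarray, width: int = 960, height: int = 540):
    """Project (N, 3) depth-frame points into the RGB image.

    Assumes p_rgb = R @ p_depth + t and a pinhole RGB camera without lens distortion.
    Returns (uv, valid): (N, 2) integer pixel coordinates and a mask of points that
    lie in front of the RGB camera and inside the width x height image.
    """
    p_rgb = points_depth @ R.T + t          # rigid transform into the RGB camera frame
    z = p_rgb[:, 2]
    in_front = z > 0
    z_safe = np.where(in_front, z, 1.0)     # avoid dividing by zero or negative depth
    uvw = p_rgb @ K_rgb.T                   # homogeneous image coordinates K @ p
    u = np.round(uvw[:, 0] / z_safe).astype(int)
    v = np.round(uvw[:, 1] / z_safe).astype(int)
    valid = in_front & (u >= 0) & (u < width) & (v >= 0) & (v < height)
    return np.stack([u, v], axis=1), valid


# Usage with made-up calibration: identity rotation, 5 cm offset along x
K_rgb = np.array([[540.0, 0.0, 480.0], [0.0, 540.0, 270.0], [0.0, 0.0, 1.0]])
pts = np.array([[0.0, 0.0, 1.0], [0.0, 0.0, -1.0], [10.0, 0.0, 1.0]])
uv, valid = register_depth_to_rgb(pts, np.eye(3), np.array([0.05, 0.0, 0.0]), K_rgb)
print(uv[0].tolist(), valid.tolist())  # [507, 270] [True, False, False]
```

The mask matters in practice: points behind the RGB camera or outside its field of view have no color to attach, so they are flagged rather than silently clipped.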
