Inter-frame difference-based violent behavior detection method, system and device, and medium

The technology combines inter-frame difference with a detection method and is classified under neural learning methods, instruments, and biological neural network models. It addresses problems such as features that are difficult to extract, and mitigates the effects of fatigue and negligence.

Examples

Embodiment 1

[0035] Embodiment 1 provides a violent behavior detection method based on inter-frame difference.

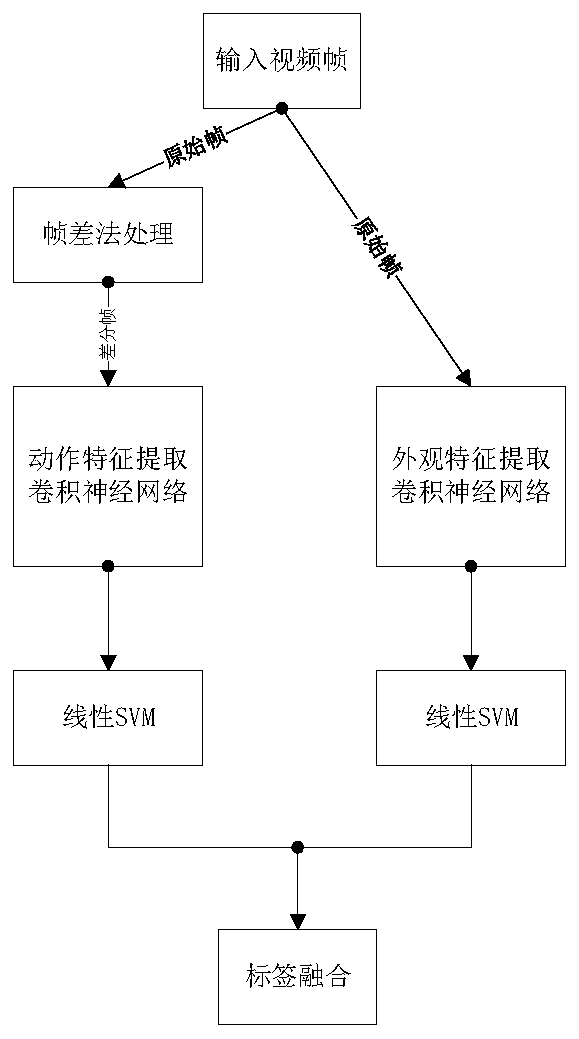

[0036] As shown in Figure 1, the violent behavior detection method based on inter-frame difference includes:

[0037] All frame images of the video to be detected are input into the pre-trained first convolutional neural network, and the appearance features of each frame image are output;

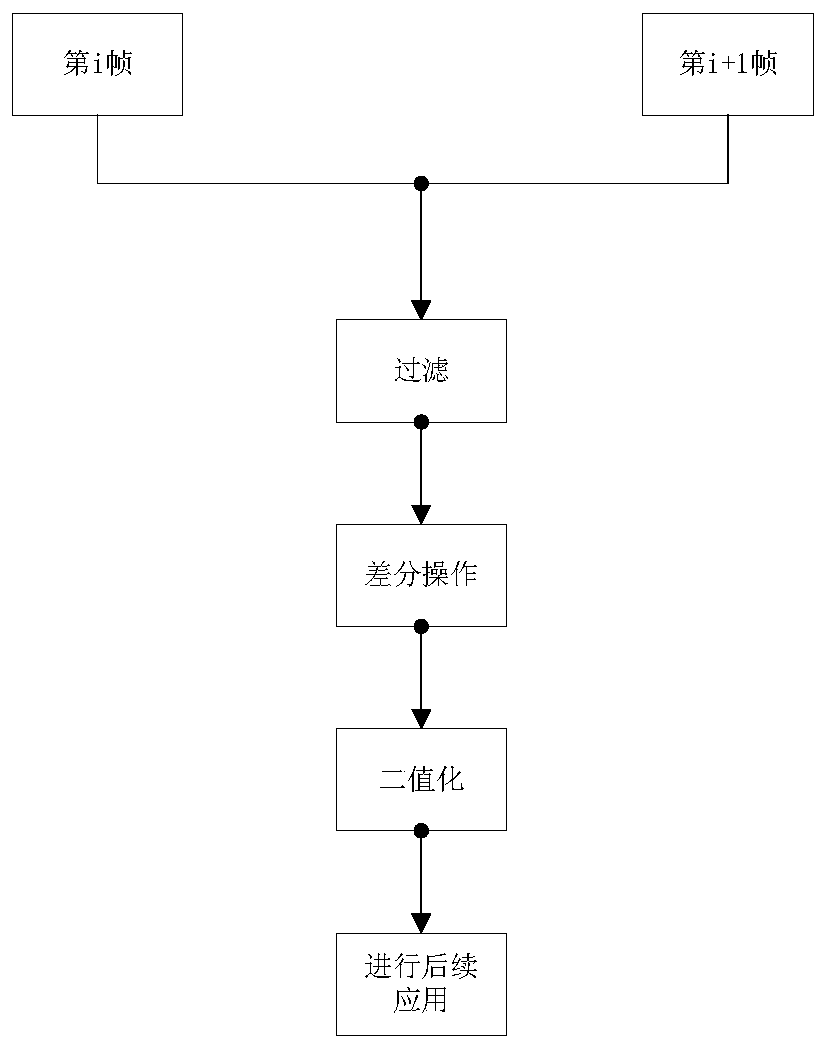

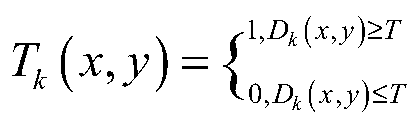

[0038] Process the video to be detected with the inter-frame difference method to extract several differential frame images; input each differential frame image into the pre-trained second convolutional neural network, and output the action features of each differential frame image;

[0039] Input the appearance features of each frame image into the pre-trained first classifier, and output the first classification label of the current frame image;

[0040] Input the action feature of each differential frame image into the pre-trained second classifier, and output the s...
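The inter-frame difference step in [0038] can be illustrated with a minimal sketch. The text shown here does not say which differencing variant is used, so this sketch assumes the common form: the absolute per-pixel difference between each grayscale frame and its predecessor. The names `frame_difference` and `differential_frames` are hypothetical, and plain nested lists stand in for image arrays.

```python
from typing import List

# Assumption: a frame is a grayscale image stored as rows of 0-255 pixel values.
Frame = List[List[int]]


def frame_difference(prev: Frame, curr: Frame) -> Frame:
    """Return the absolute per-pixel difference between two same-sized frames."""
    same_rows = len(prev) == len(curr)
    same_cols = all(len(p) == len(c) for p, c in zip(prev, curr))
    if not (same_rows and same_cols):
        raise ValueError("frames must have the same shape")
    return [
        [abs(c - p) for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]


def differential_frames(video: List[Frame]) -> List[Frame]:
    """Difference each frame with its predecessor; N frames give N-1 results."""
    return [frame_difference(a, b) for a, b in zip(video, video[1:])]


# Three tiny 2x2 frames: one pixel brightens, then a different pixel brightens.
video = [
    [[10, 10], [10, 10]],
    [[10, 60], [10, 10]],
    [[10, 10], [90, 10]],
]
diffs = differential_frames(video)
```

Static pixels difference to zero, so each differential frame keeps only the regions that changed between frames. In this sketch, those frames are what would be passed to the second convolutional neural network to obtain action features.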

Embodiment 2

[0087] Embodiment 2 provides a violent behavior detection system based on inter-frame difference.

[0088] The violent behavior detection system based on inter-frame difference includes:

[0089] The appearance feature extraction module is configured to: input all frame images of the video to be detected into the pre-trained first convolutional neural network, and output the appearance feature of each frame image;

[0090] The action feature extraction module is configured to: process the video to be detected with the inter-frame difference method to extract several differential frame images; input each differential frame image into the pre-trained second convolutional neural network, and output the action features of each differential frame image;

[0091] The first classification module is configured to: input the appearance feature of each frame image into a pre-trained first classifier, and output the first classification label of the current frame image;

[0092] The ...
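As a rough illustration of how the modules in [0089] to [0091] could fit together, the sketch below wires them into one object. It covers only those three modules, since the remaining ones are truncated in this text. Everything here is an assumption made for illustration: the class `DetectionSystem`, its field names, and the stand-in functions `mean_intensity` and `threshold_label`, which take the place of the pre-trained networks and classifier so the example can run on its own.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

Frame = List[List[int]]   # assumption: grayscale frame as rows of pixel values
Feature = List[float]     # assumption: a feature is a flat list of floats


def _abs_diff(prev: Frame, curr: Frame) -> Frame:
    """Absolute per-pixel difference of two same-sized frames."""
    return [[abs(c - p) for p, c in zip(pr, cr)] for pr, cr in zip(prev, curr)]


@dataclass
class DetectionSystem:
    appearance_net: Callable[[Frame], Feature]  # stands in for the first CNN
    motion_net: Callable[[Frame], Feature]      # stands in for the second CNN
    first_classifier: Callable[[Feature], int]  # stands in for the first classifier

    def appearance_features(self, video: Sequence[Frame]) -> List[Feature]:
        """Appearance feature extraction module: one feature per frame."""
        return [self.appearance_net(frame) for frame in video]

    def action_features(self, video: Sequence[Frame]) -> List[Feature]:
        """Action feature extraction module: one feature per differential frame."""
        diffs = [_abs_diff(a, b) for a, b in zip(video, video[1:])]
        return [self.motion_net(d) for d in diffs]

    def first_labels(self, video: Sequence[Frame]) -> List[int]:
        """First classification module: one label per frame."""
        return [self.first_classifier(f) for f in self.appearance_features(video)]


def mean_intensity(frame: Frame) -> Feature:
    """Toy feature extractor (not a CNN): mean pixel value of the frame."""
    total = sum(sum(row) for row in frame)
    return [total / (len(frame) * len(frame[0]))]


def threshold_label(feature: Feature) -> int:
    """Toy classifier: label 1 if the feature exceeds 50, else 0."""
    return 1 if feature[0] > 50 else 0


system = DetectionSystem(mean_intensity, mean_intensity, threshold_label)
video = [[[0, 0], [0, 0]], [[100, 100], [100, 100]]]
appearance = system.appearance_features(video)
action = system.action_features(video)
labels = system.first_labels(video)
```

Passing the networks and classifier in as callables keeps the module wiring separate from any particular model, so trained models could be substituted for the toy functions without changing the structure.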
