Disparity map DBSCAN clustering-based region of interest extraction method

A region-of-interest extraction method based on DBSCAN clustering of a disparity map. The method addresses problems such as sample acquisition and labeling costs, difficulty of application, and a large impact on accuracy.

Embodiment Construction

[0027] To further illustrate the technical content of the present invention, it is described in detail below with reference to the accompanying drawings and embodiments.

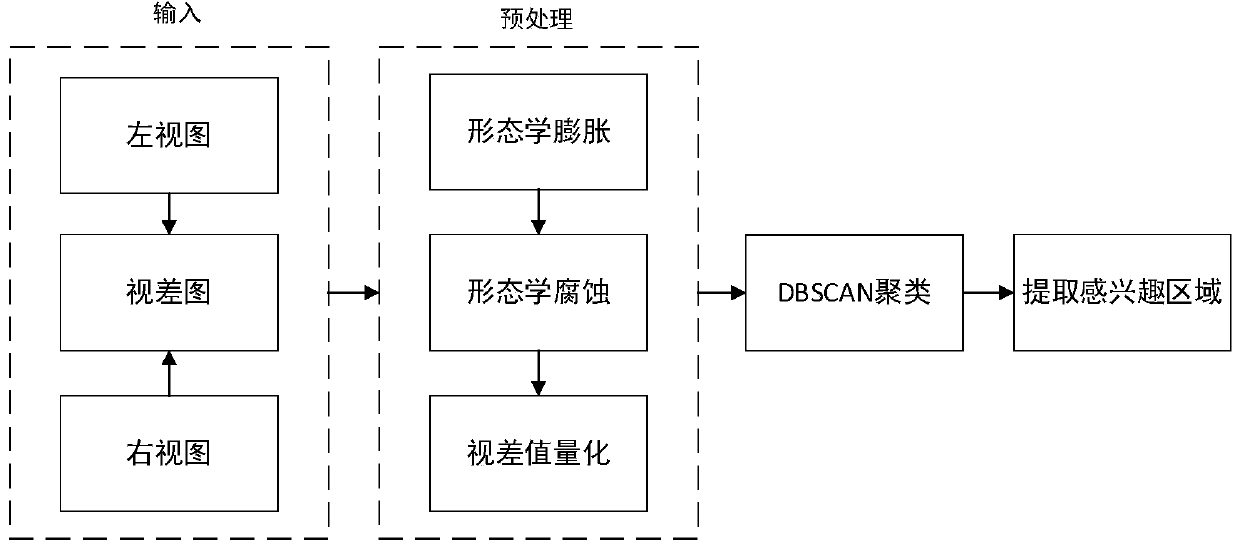

[0028] As shown in Figure 1, a method for extracting regions of interest based on DBSCAN clustering of a disparity map includes the following steps:

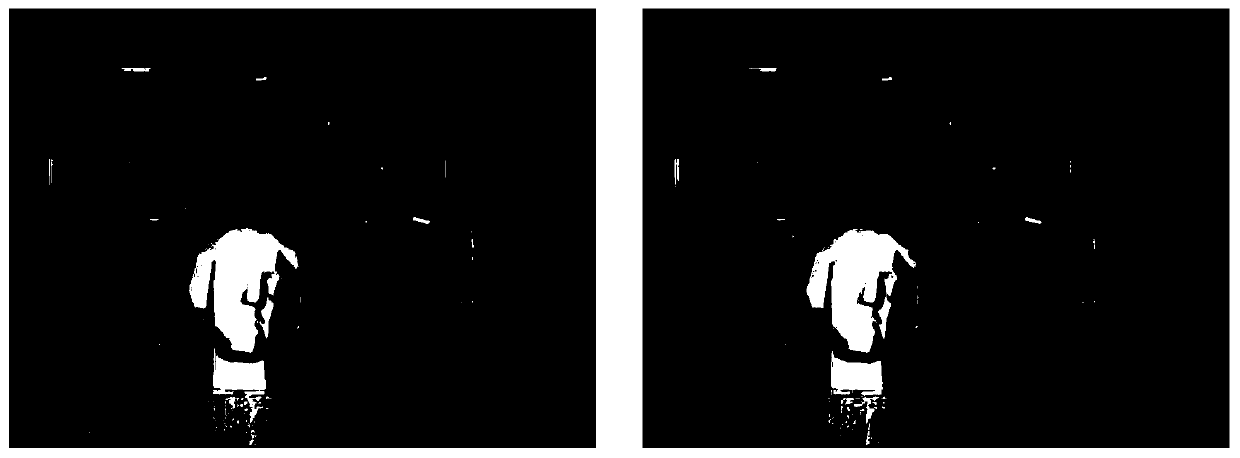

[0029] Step 1: Perform stereo matching on the binocular views to obtain a disparity image. The disparity image may be a sparse disparity map computed by SAD (sum of absolute differences) matching, or a dense disparity map computed by SGM (semi-global matching), graph cut, or deep learning methods. The disparity map should be quantized to the pixel value range 0 to 255.
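
The quantization at the end of Step 1 can be sketched as follows. The text only states that the disparity map is quantized to 0 to 255; the linear min-max rescaling used here, the function name `quantize_disparity`, and the choice to map a constant image to 0 are all assumptions made for illustration, not details taken from the patent.

```python
def quantize_disparity(disparity):
    """Linearly rescale a 2-D disparity map (a list of rows) to integers in 0..255.

    Assumption: min-max scaling. The source only requires a 0..255 value range.
    """
    flat = [d for row in disparity for d in row]
    d_min, d_max = min(flat), max(flat)
    if d_max == d_min:
        # Constant map: there is no range to stretch, so map every pixel to 0.
        return [[0 for _ in row] for row in disparity]
    scale = 255.0 / (d_max - d_min)
    return [[int(round((d - d_min) * scale)) for d in row] for row in disparity]


# Hypothetical raw disparities (e.g. in pixels) for a 2 x 3 image.
raw = [[0.0, 16.0, 32.0],
       [48.0, 64.0, 64.0]]
quantized = quantize_disparity(raw)
```

The smallest raw disparity maps to 0 and the largest to 255, so the full 8-bit range is used regardless of the matcher that produced the map.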

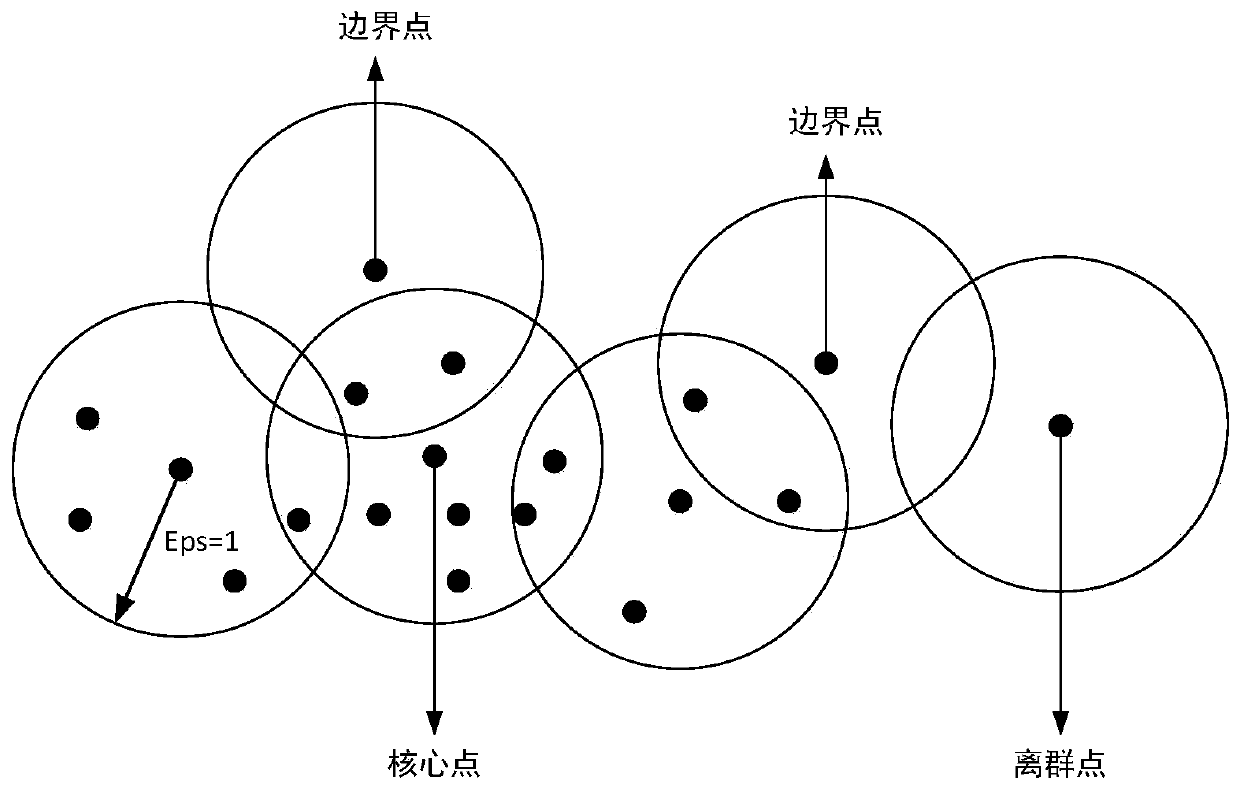

[0030] Step 2: Preprocess the disparity image obtained in step 1, transforming it into an image matrix with 1 row and N columns, where N is the product of the image width W and height H, and the background in the disparity image is in the image matrix ...
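
The reshaping in Step 2 can be illustrated with a minimal sketch. Row-major ordering, the function names `flatten_to_row` and `index_to_xy`, and the sample values are assumptions; the source does not state the pixel order, and its background handling is truncated, so it is not reproduced here.

```python
def flatten_to_row(image):
    """Flatten an H x W image (a list of rows) into a 1 x N matrix, N = W * H.

    Assumption: pixels are laid out in row-major order.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    if any(len(row) != width for row in image):
        raise ValueError("all rows must have the same width")
    row_vector = [[pixel for row in image for pixel in row]]
    return row_vector, width, height


def index_to_xy(i, width):
    """Recover the (x, y) pixel position of column i in the 1 x N matrix."""
    return i % width, i // width


# Hypothetical quantized disparity image with W = 3 and H = 2.
image = [[0, 64, 128],
         [191, 255, 255]]
matrix, W, H = flatten_to_row(image)
x, y = index_to_xy(4, W)
```

Keeping W alongside the flattened matrix lets any later per-pixel result be mapped back to image coordinates.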
