Binocular stereo matching method based on convolutional neural network

This technology applies convolutional neural networks to binocular stereo matching. It addresses two problems of existing approaches: their heavy memory and computing-power requirements, and their inability to accurately find the matching points of pixels.

Detailed Description of Embodiments

[0048] The purpose of the present invention is to provide a binocular stereo matching method based on a convolutional neural network whose network can be trained end-to-end without any post-processing. The method addresses a shortcoming of existing CNN-based stereo matching methods, which cannot accurately find the matching points of pixels in ill-posed regions. It also significantly reduces memory usage and running time during training and inference.

[0049] The present invention will be described in detail below in conjunction with the accompanying drawings. It should be noted that the described embodiments are only intended to facilitate the understanding of the present invention, rather than limiting it in any way.

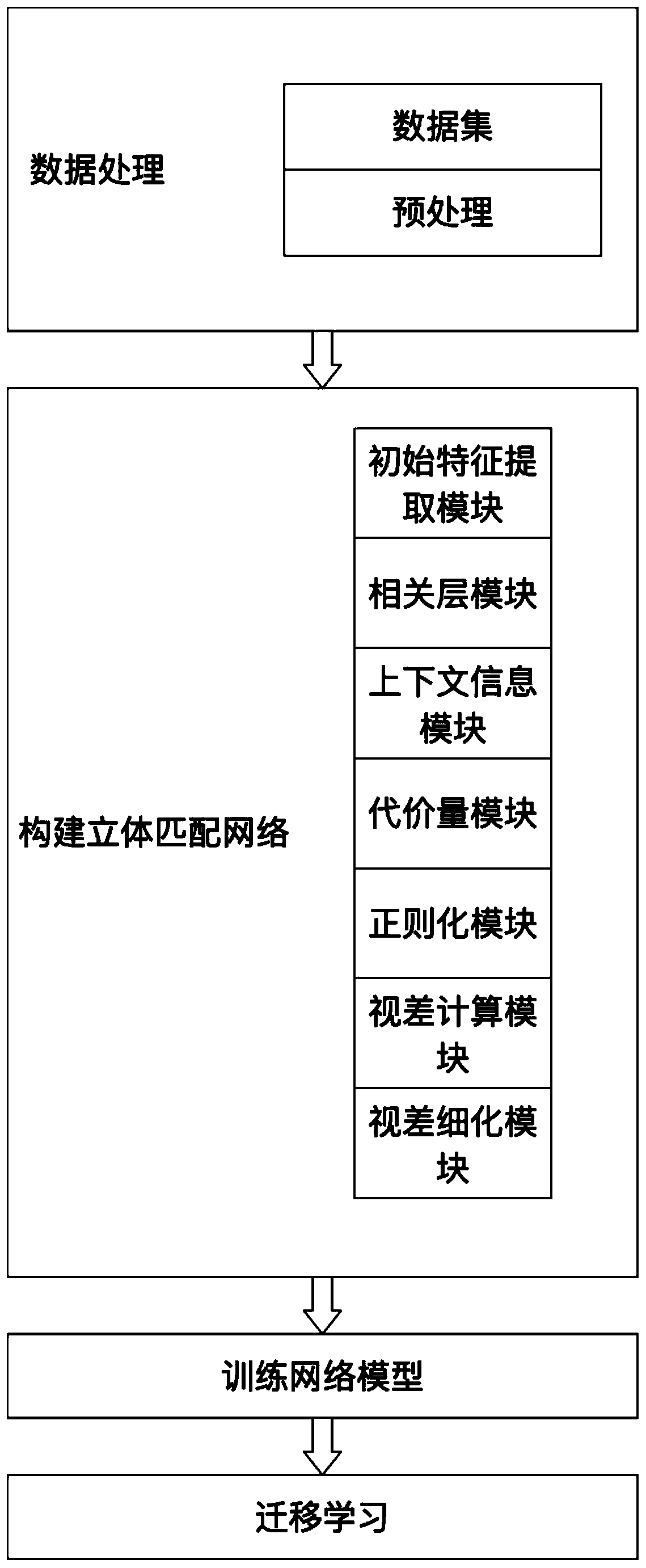

[0050] Figure 1 is the network flowchart of the binocular stereo matching method based on a convolutional neural network provided by the present invention.

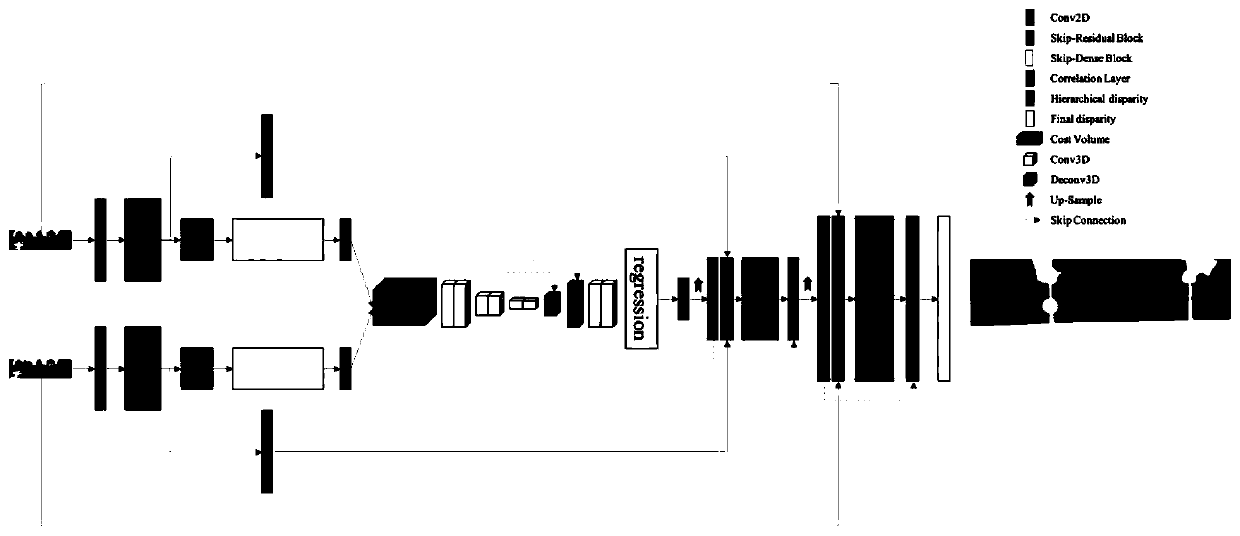

[0051] Figure 2 is the network structure diagram of ...
