Behavior recognition method based on spatiotemporal volume of local joint point trajectory in skeleton sequence

A behavior recognition method based on joint point trajectories, applied in character and pattern recognition, computer parts, instruments, etc. It addresses the high cost of depth detectors, the differing feature dimensions that result from joint point trajectories of different lengths, and the difficulty of handling temporal information, with the effect of reducing algorithm complexity and time complexity.

Embodiment 1

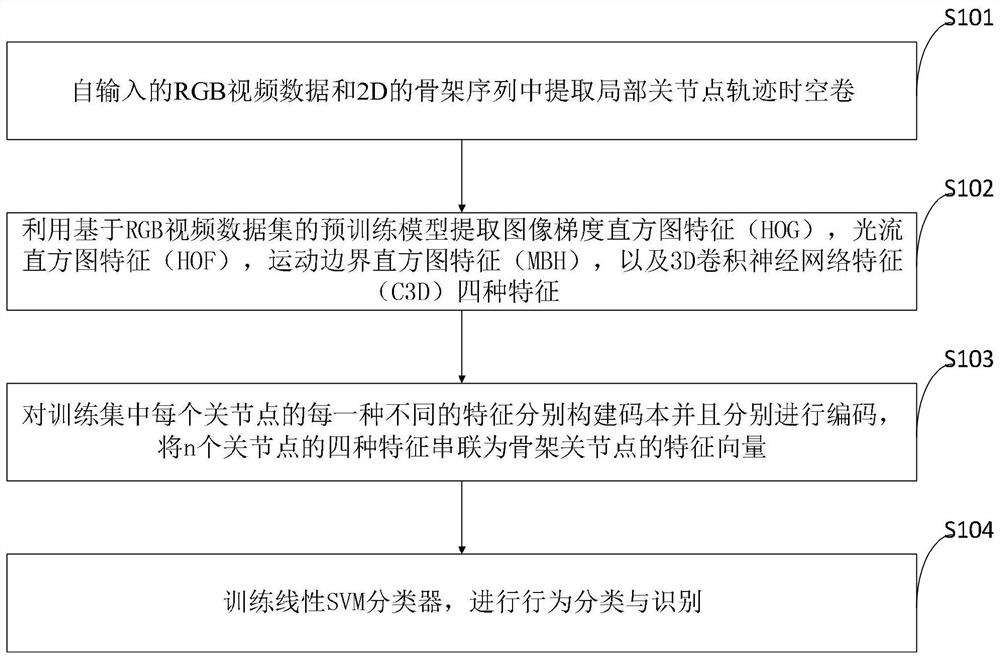

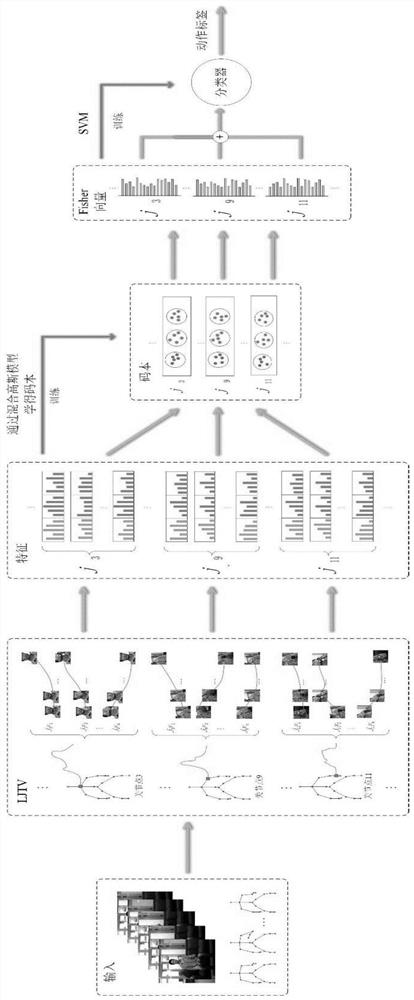

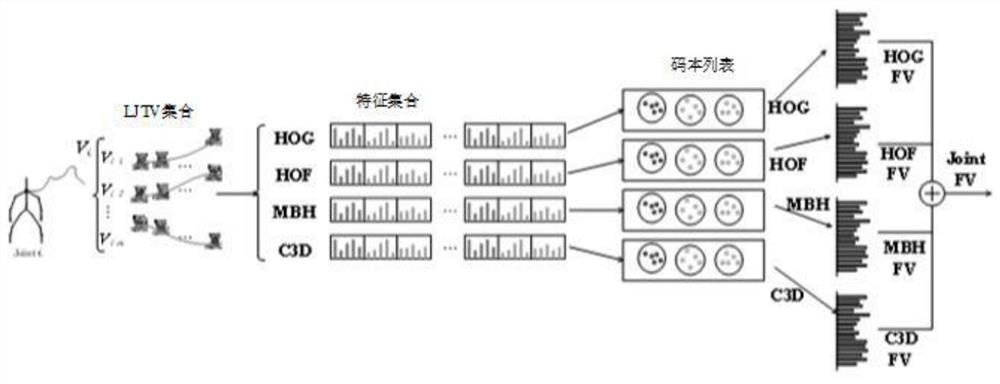

[0117] The present invention uses RGB video data and 2D human skeleton data for behavior recognition. The method follows the classic behavior recognition process based on local features: detection of spatiotemporal interest points, feature extraction, construction of a bag-of-words model, and classification. Specifically, it is divided into four steps: extraction of the local joint point trajectory spatiotemporal volume (LJTV), feature extraction, feature encoding, and behavior classification. A schematic is shown in figure 2; each step is described in detail below:
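Before the individual steps, the feature-encoding stage of a bag-of-words pipeline like the one outlined above can be illustrated with a minimal sketch. It assumes a codebook of visual words has already been learned (for example by k-means clustering), and it uses hard assignment with L1 normalization; the text here does not specify these choices, so they are assumptions. The function name `encode_bag_of_words` and the toy data are hypothetical.

```python
from math import dist


def encode_bag_of_words(descriptors, codebook):
    """Hard-assign each local descriptor to its nearest codeword and
    return an L1-normalized histogram of codeword counts.

    Assumption: the codebook is given; the source does not say how it is built.
    """
    counts = [0] * len(codebook)
    for d in descriptors:
        # Index of the codeword with the smallest Euclidean distance to d.
        nearest = min(range(len(codebook)), key=lambda k: dist(d, codebook[k]))
        counts[nearest] += 1
    total = sum(counts)
    if total == 0:
        return [0.0] * len(codebook)
    return [c / total for c in counts]


# Toy example: three 2-D codewords and four local descriptors.
codebook = [(0.0, 0.0), (1.0, 1.0), (5.0, 5.0)]
descriptors = [(0.1, -0.1), (0.9, 1.2), (1.1, 0.8), (4.7, 5.2)]
histogram = encode_bag_of_words(descriptors, codebook)
print(histogram)
```

The resulting histogram has a fixed length equal to the codebook size regardless of how many local descriptors a video produces, which is what lets a standard classifier consume videos of different lengths.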

[0118] Step 1, extract the spatiotemporal volume of the local joint point trajectory:

[0119] The human skeleton contains 15-25 joint points, and different datasets have different numbers of joint points, but the algorithm of the present invention is not constrained by the number of joint points.
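One way to see why the joint count need not be fixed is a sketch that builds one volume per joint by cropping a small patch around the joint's 2D position in every frame and stacking the patches over time. This is only an illustrative reading of a local joint trajectory volume, not the patent's stated procedure: the square patch shape, the `half_size` parameter, the border clamping, and the function name `extract_joint_volumes` are all assumptions. Frames are plain nested lists so the example needs no third-party library.

```python
def extract_joint_volumes(frames, skeletons, half_size=1):
    """Return one volume per joint: a list (over time) of square patches
    cropped around that joint's 2D position in each frame.

    frames:    list of T images, each a list of rows of pixel values
    skeletons: list of T skeletons, each a list of (x, y) joint positions
    Assumption: patch size and border handling are illustrative choices.
    """
    num_joints = len(skeletons[0])  # read from the data, never hard-coded
    height, width = len(frames[0]), len(frames[0][0])
    volumes = [[] for _ in range(num_joints)]
    for frame, joints in zip(frames, skeletons):
        for j, (x, y) in enumerate(joints):
            # Clamp the patch centre so the crop stays inside the frame.
            cx = min(max(int(round(x)), half_size), width - 1 - half_size)
            cy = min(max(int(round(y)), half_size), height - 1 - half_size)
            patch = [row[cx - half_size:cx + half_size + 1]
                     for row in frame[cy - half_size:cy + half_size + 1]]
            volumes[j].append(patch)
    return volumes


# Toy data: two 5x5 frames whose pixel value encodes its position (10*row + col),
# and a skeleton with two joints per frame.
frame = [[10 * r + c for c in range(5)] for r in range(5)]
frames = [frame, frame]
skeletons = [[(2, 2), (0, 4)], [(3, 1), (4, 0)]]
volumes = extract_joint_volumes(frames, skeletons)
print(len(volumes), len(volumes[0]))
```

Because the number of volumes is taken from the skeleton data itself, the same function works unchanged for a 15-joint or a 25-joint skeleton.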

[0120] The present invention takes a human skeleton with 20 joint points a...
