30 results about How to "Accurate recognition rate" patented technology

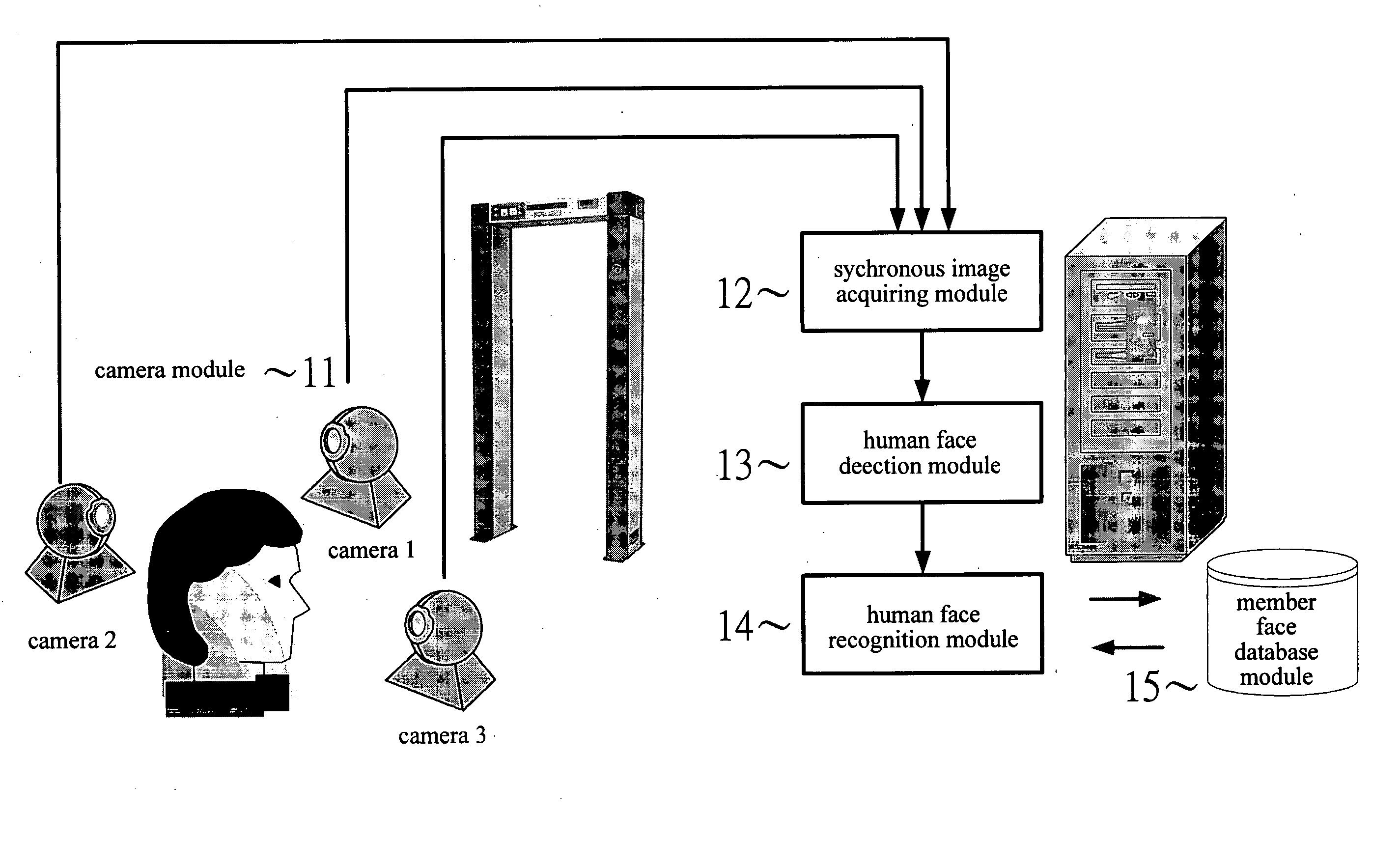

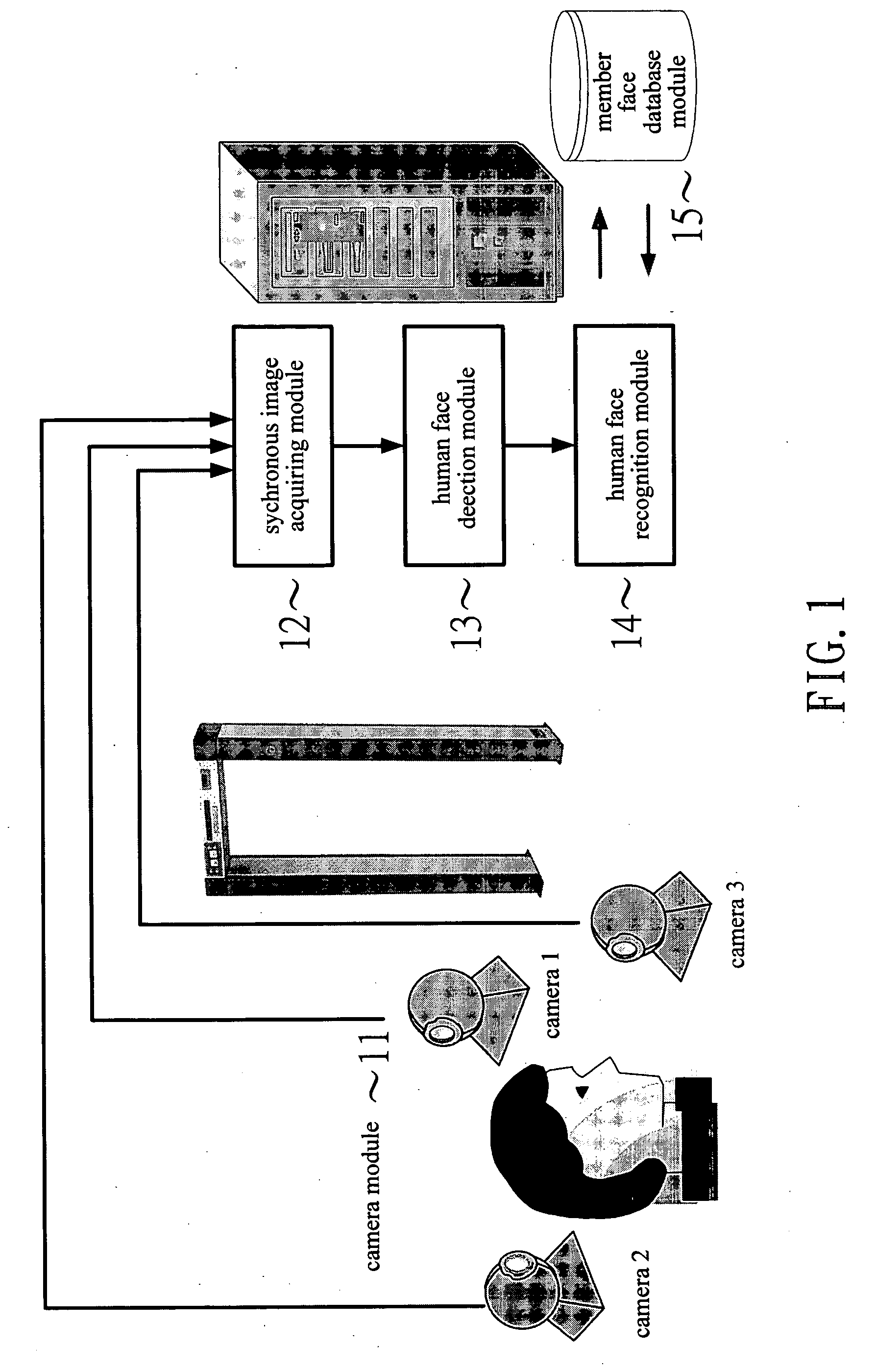

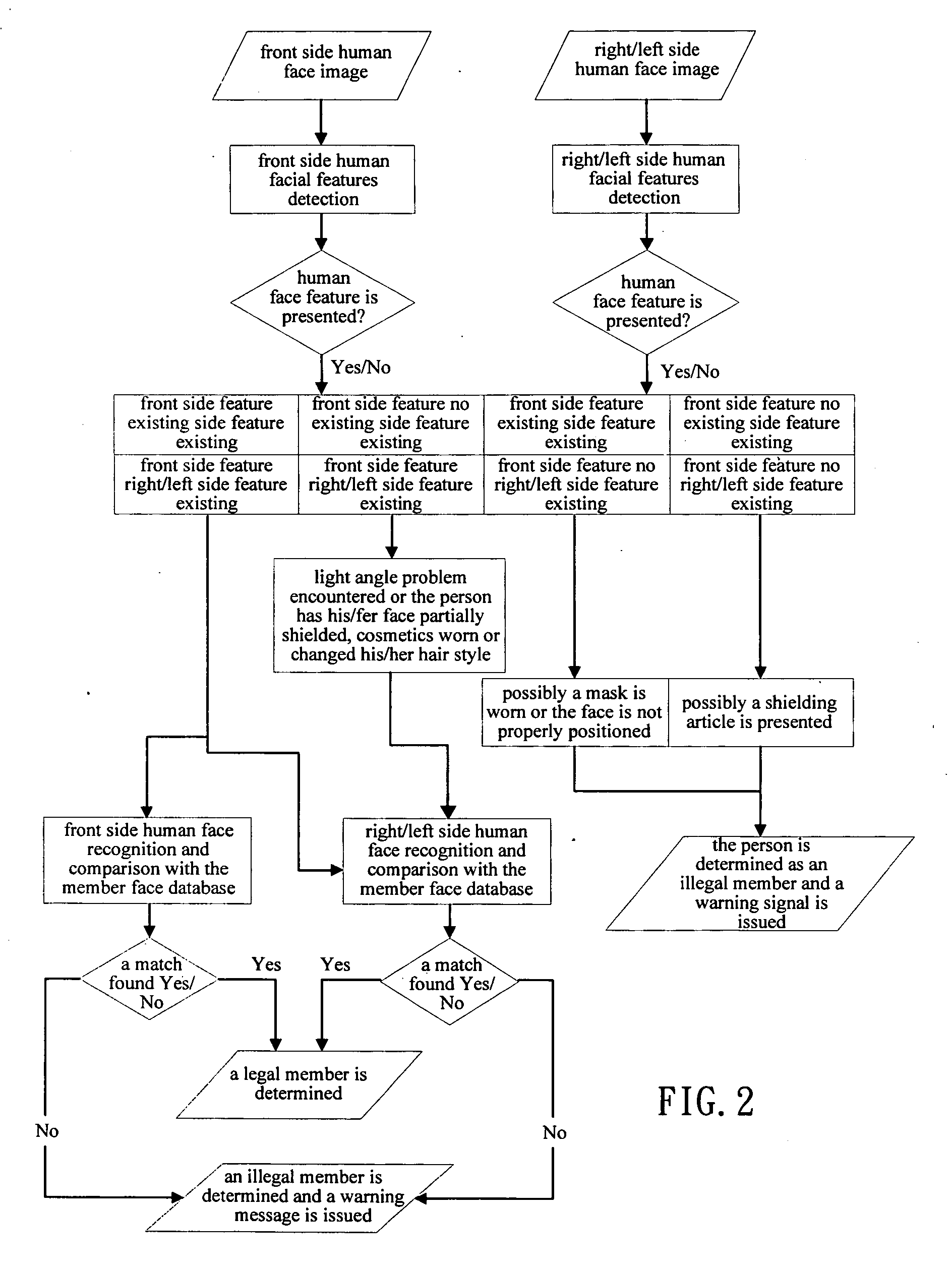

Method and device for human face detection and recognition used in a preset environment

Disclosed are a method and a device for human face detection and recognition used in a preset environment. The device comprises a camera module, a synchronous image acquiring module, a human face detection module, a human face recognition module and a member face database module. The synchronous image acquiring module synchronously acquires the images photographed by the camera module. The human face detection module detects whether any human facial feature is present in an image. If a facial feature is confirmed, the image is transferred to the human face recognition module, which extracts the facial feature from the image. The extracted facial feature is then compared with the member face data stored in the member face database module to generate a recognition result indicating whether the person in the image is a legal or an illegal member.
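The final comparison step can be illustrated with a minimal sketch. The abstract does not say how the extracted feature is compared with the stored member data, so the cosine similarity measure, the 0.8 threshold, and the names `classify_member` and `member_db` are all assumptions made for illustration.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def classify_member(feature, member_db, threshold=0.8):
    """Compare an extracted feature with every stored member feature.

    Returns (member_id, "legal") for the best match at or above the
    threshold (an assumed value), otherwise (None, "illegal").
    """
    best_id, best_score = None, -1.0
    for member_id, stored in member_db.items():
        score = cosine_similarity(feature, stored)
        if score > best_score:
            best_id, best_score = member_id, score
    if best_score >= threshold:
        return best_id, "legal"
    return None, "illegal"

# Hypothetical member face database: id -> stored feature vector.
member_db = {"alice": [0.9, 0.1, 0.3], "bob": [0.1, 0.8, 0.5]}
print(classify_member([0.88, 0.12, 0.31], member_db))
print(classify_member([-0.5, 0.2, -0.7], member_db))
```

A real system would obtain the feature vectors from the recognition module; here they are hand-written placeholders.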

Owner:CHUNGHWA TELECOM CO LTD

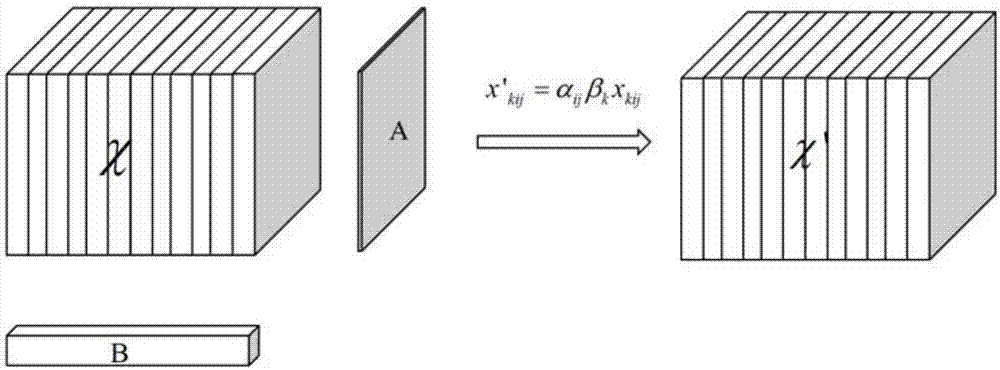

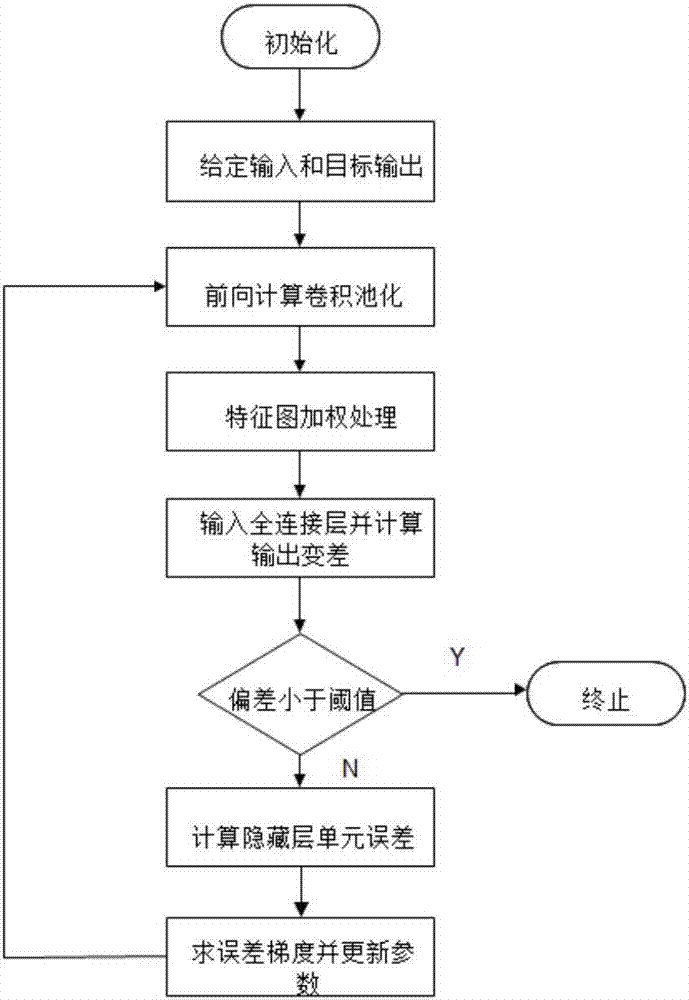

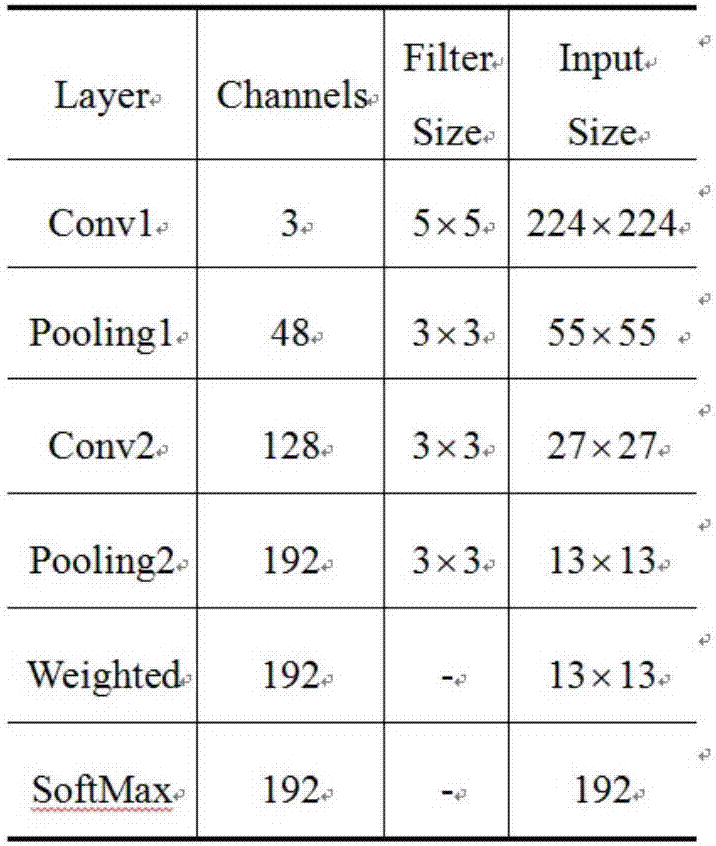

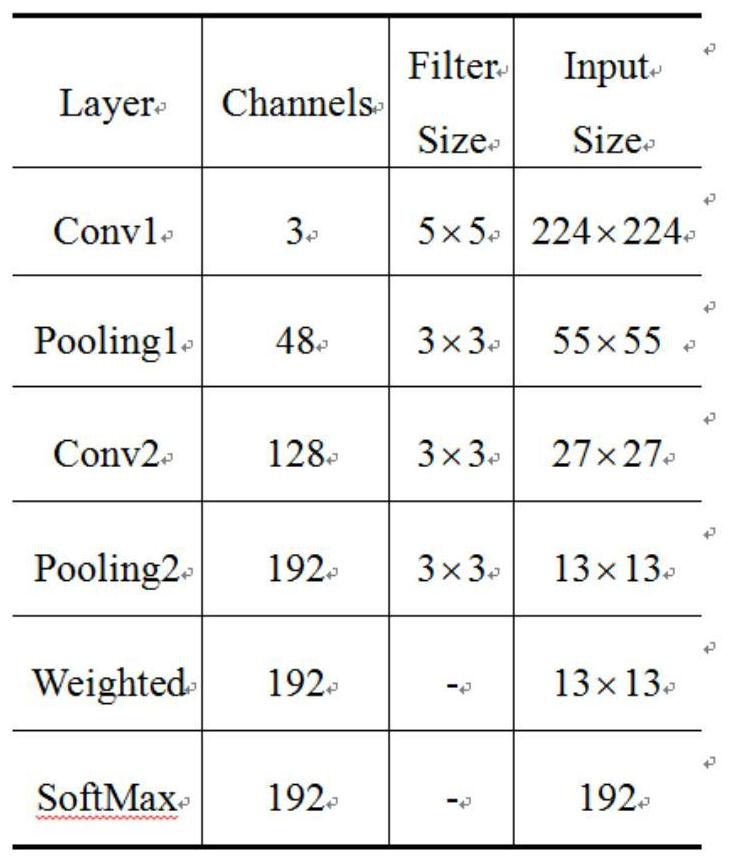

Underwater target feature extraction method based on convolutional neural network (CNN)

Active | CN107194404A | Make up for lost defects | Accurate recognition rate | Character and pattern recognition | Neural architectures | Sample sequence | Labeled data

The invention provides an underwater target feature extraction method based on a convolutional neural network (CNN). Step 1: the sampling sequence of the original radiation noise signal is divided into 25 consecutive parts, each with 25 sampling points. Step 2: the samples of the j-th segment of the data signal are normalized and centered. Step 3: a short-time Fourier transform is applied to obtain a LoFAR graph. Step 4: a vector is assigned to an existing 3-dimensional tensor. Step 5: the resulting feature vector is input to a fully-connected layer for classification, the error against the label data is calculated, and the loss error is tested against an error threshold; if the loss error is below the threshold, network training stops, otherwise the method proceeds to step 6. Step 6: a gradient descent method adjusts the network parameters layer by layer from back to front, and the method returns to step 2. Compared with traditional convolutional neural network algorithms, the method applies a multi-dimensional weighting operation on spatial information at the feature-map layer to compensate for the loss of spatial information caused by the one-dimensional vectorization of the fully-connected layer.
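The first three steps (segmentation, normalization and centering, and the transform that yields the LoFAR graph) can be sketched as follows. This is an illustration under stated assumptions, not the patented procedure: peak-amplitude scaling is an assumed normalization, and a plain per-segment DFT without windowing or overlap stands in for the short-time Fourier transform. All function names are hypothetical.

```python
import cmath
import math

def split_segments(signal, n_segments=25, segment_len=25):
    """Split the sample sequence into consecutive fixed-length segments."""
    needed = n_segments * segment_len
    if len(signal) < needed:
        raise ValueError(f"need at least {needed} samples, got {len(signal)}")
    return [signal[i * segment_len:(i + 1) * segment_len]
            for i in range(n_segments)]

def normalize_and_center(segment):
    """Scale by the peak absolute value (assumed), then subtract the mean."""
    peak = max(abs(x) for x in segment) or 1.0
    scaled = [x / peak for x in segment]
    mean = sum(scaled) / len(scaled)
    return [x - mean for x in scaled]

def magnitude_spectrum(segment):
    """Naive DFT magnitudes for the non-negative frequency bins."""
    n = len(segment)
    spectrum = []
    for k in range(n // 2 + 1):
        total = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(segment))
        spectrum.append(abs(total))
    return spectrum

def lofar_gram(signal):
    """One spectrum row per segment: a time-by-frequency grid."""
    return [magnitude_spectrum(normalize_and_center(s))
            for s in split_segments(signal)]

# Synthetic test tone with 5 cycles per 25-sample segment.
signal = [math.sin(2 * math.pi * 5 * t / 25) for t in range(625)]
gram = lofar_gram(signal)
print(len(gram), len(gram[0]))
```

For the synthetic tone, each row of `gram` peaks at frequency bin 5, which is the kind of stable spectral line a LoFAR display is meant to expose.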

Owner:HARBIN ENG UNIV

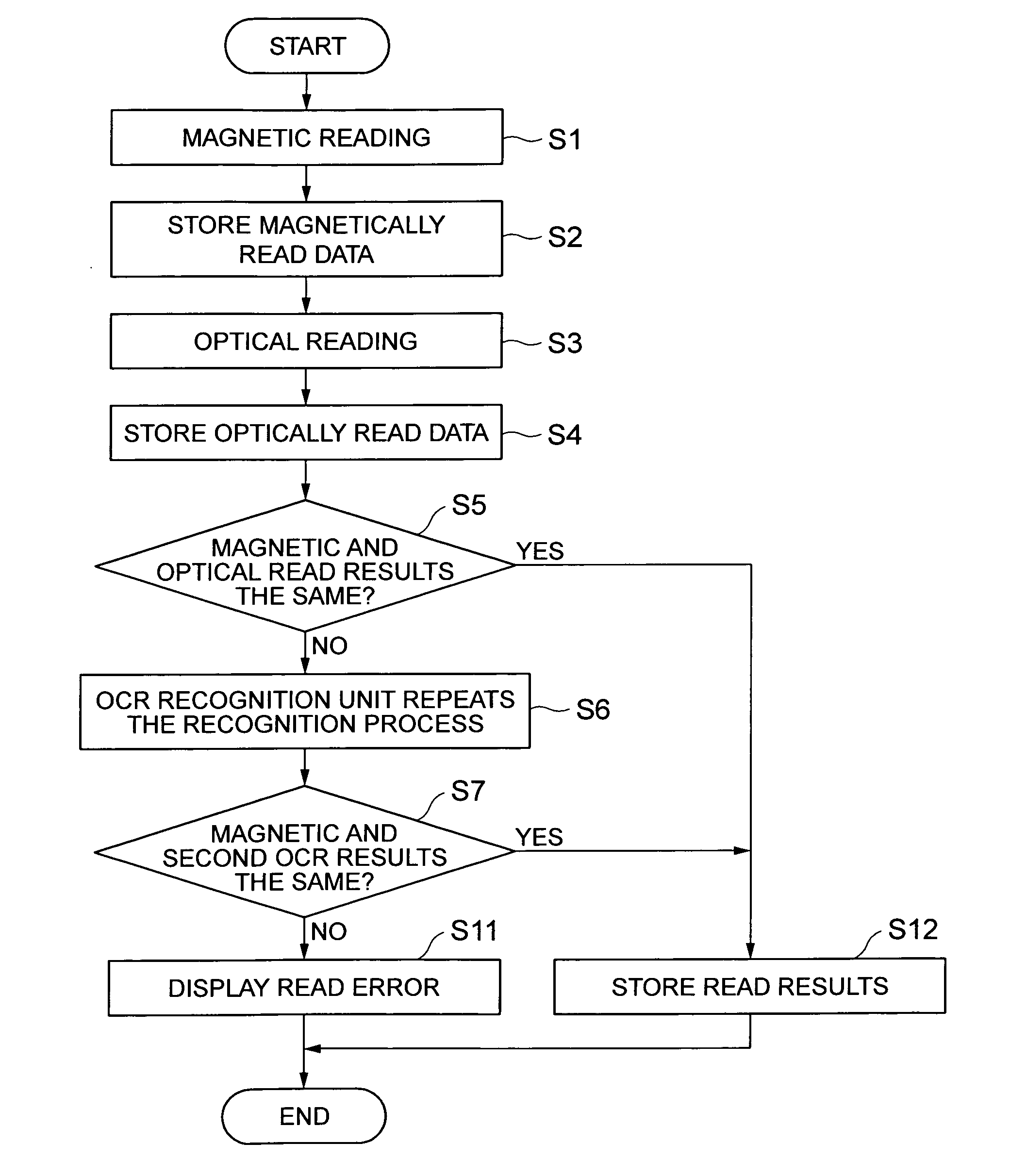

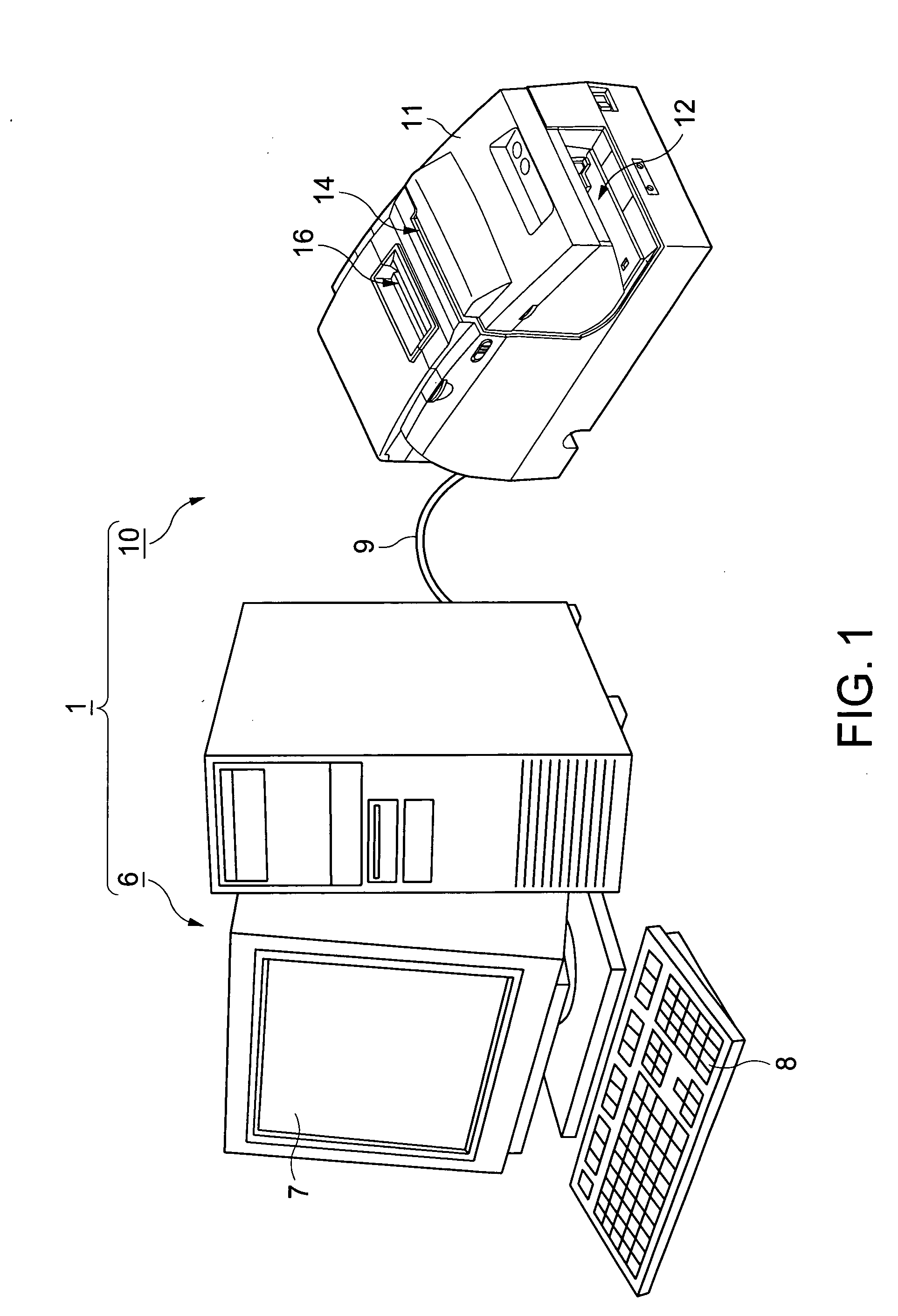

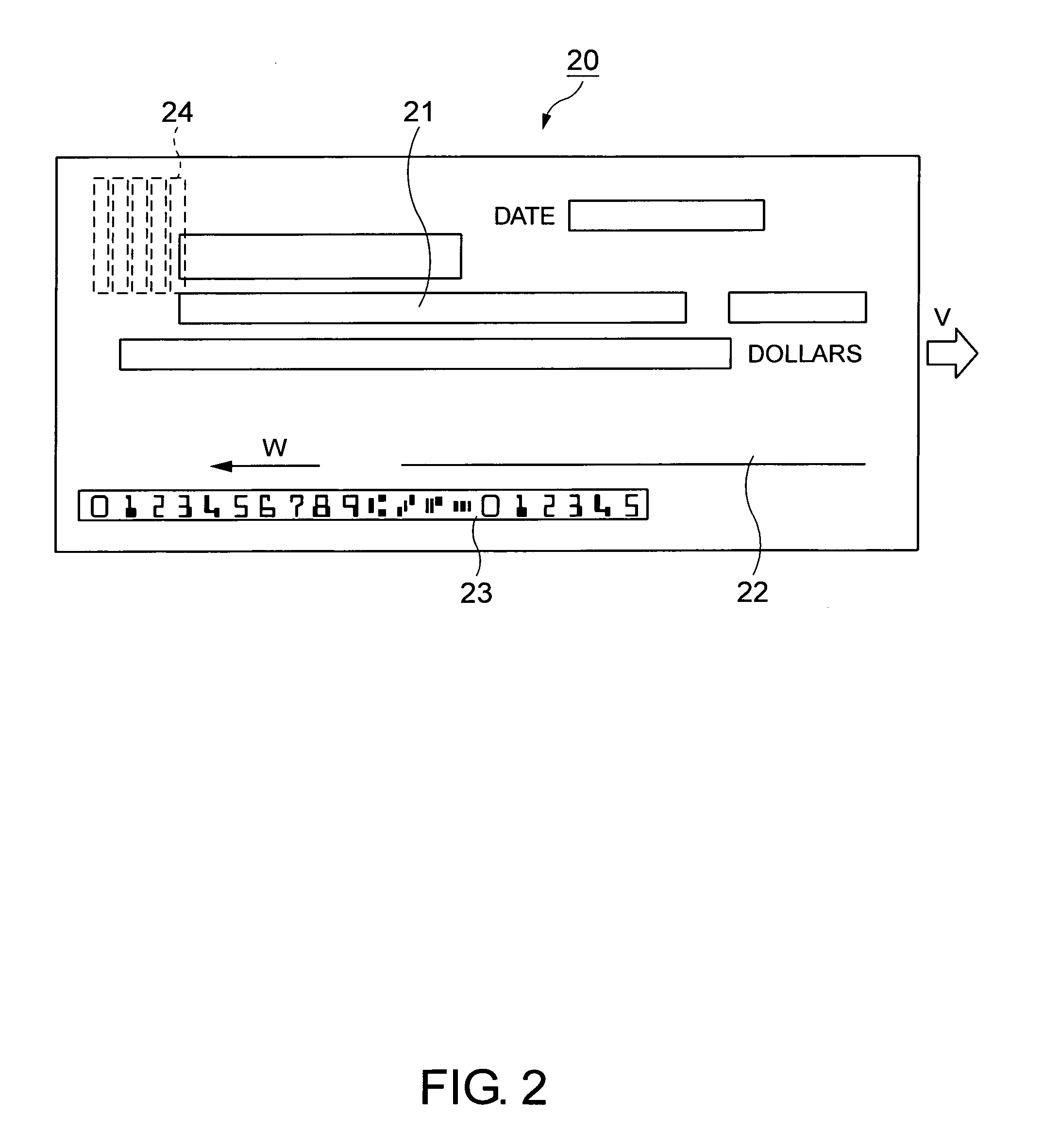

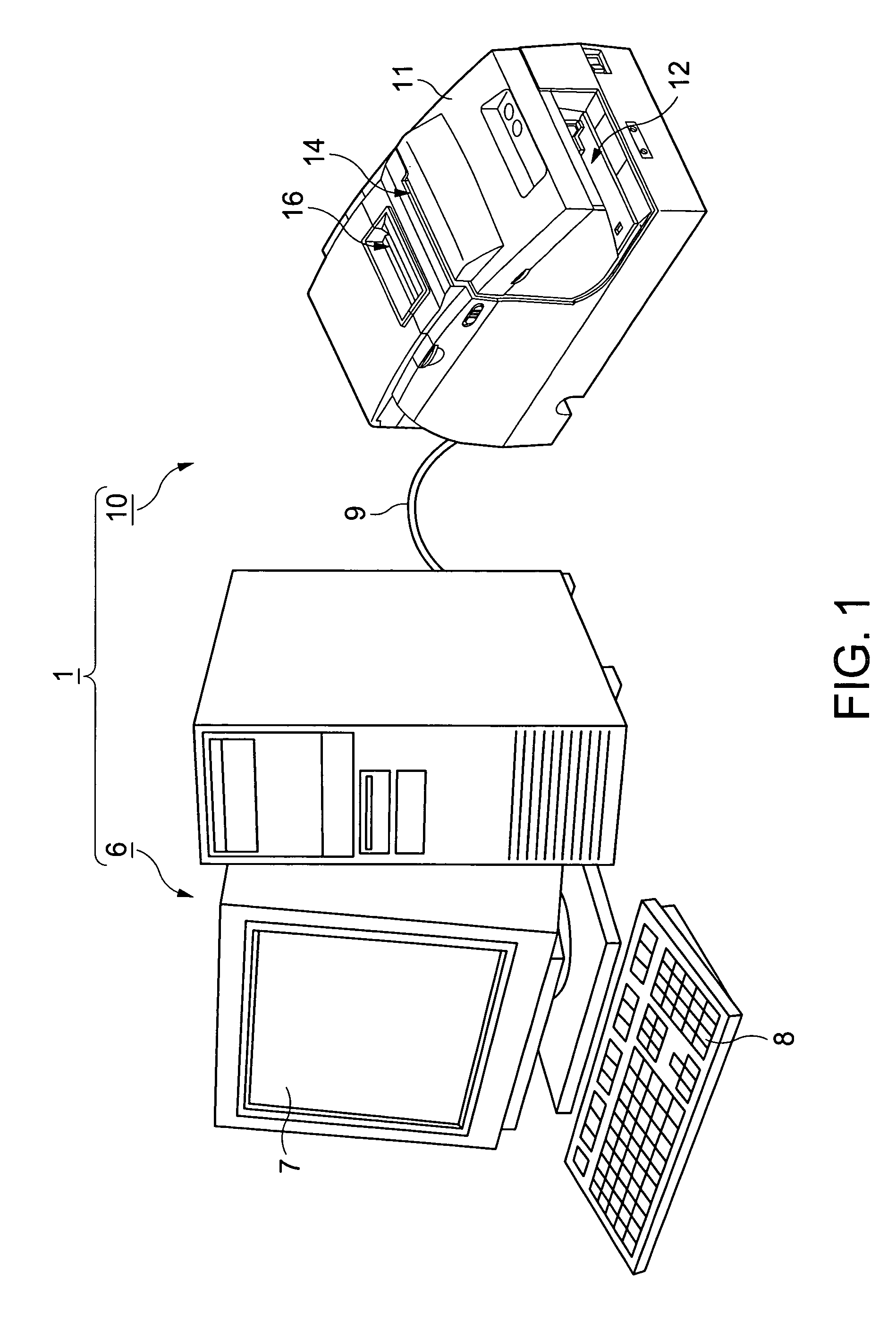

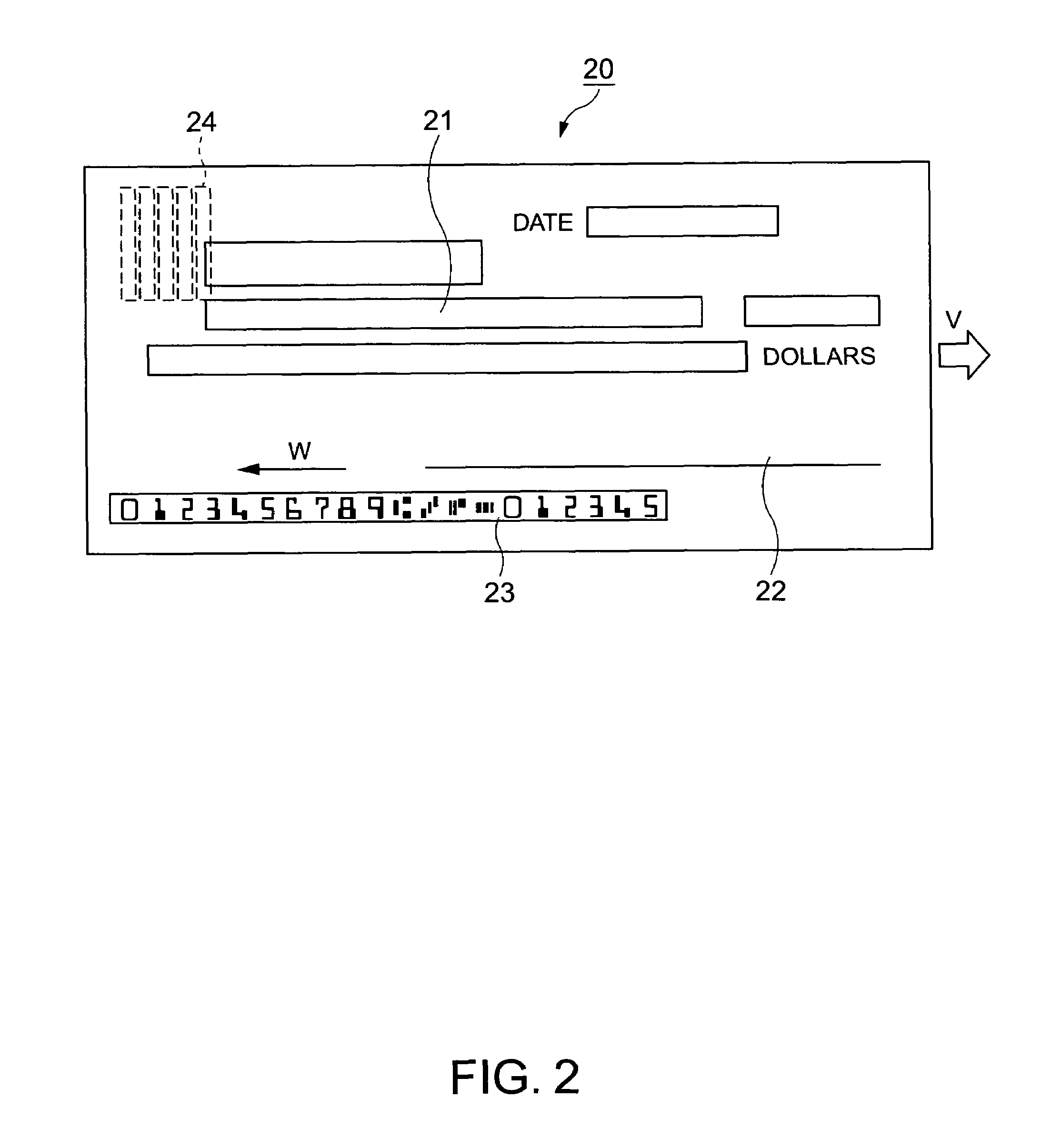

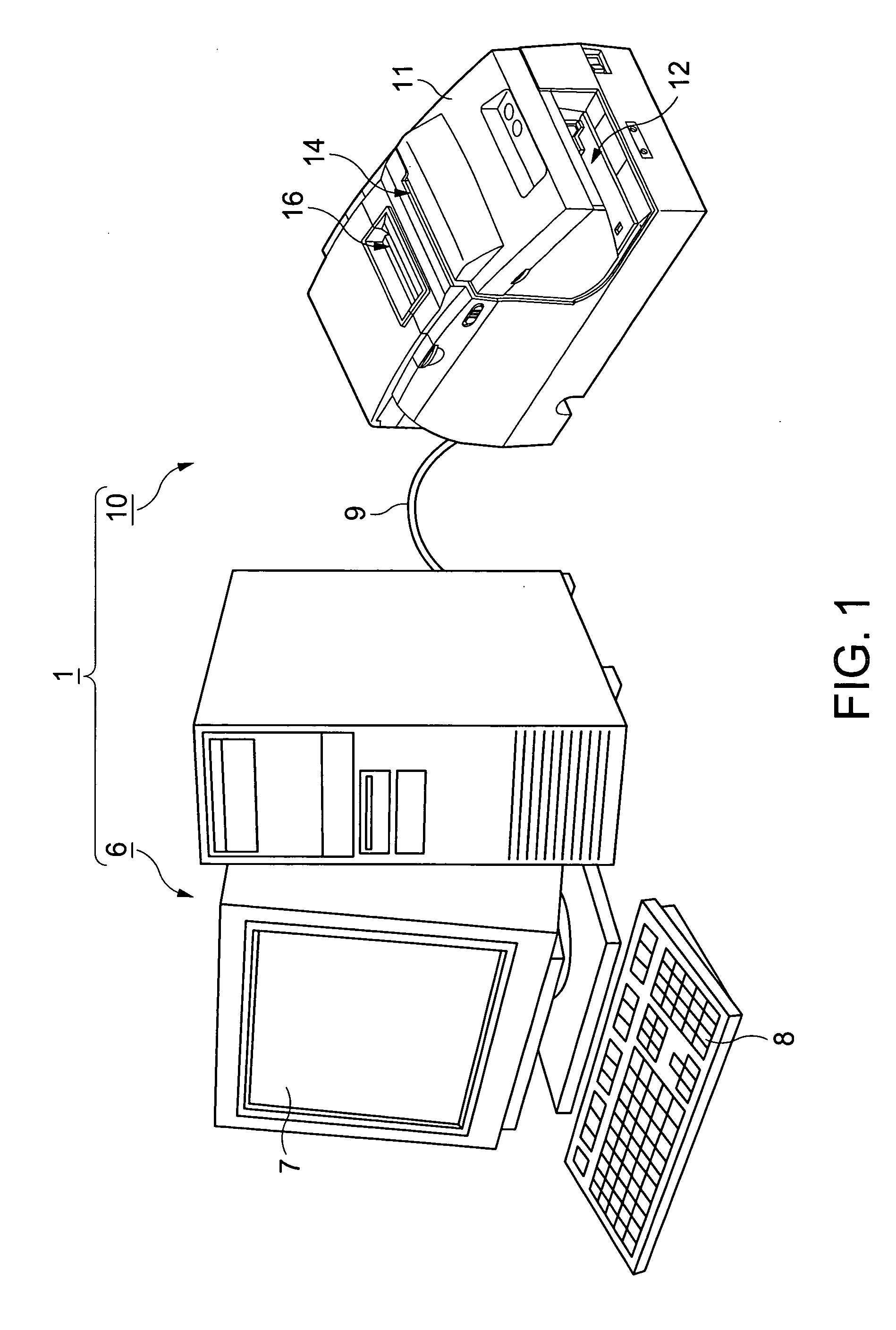

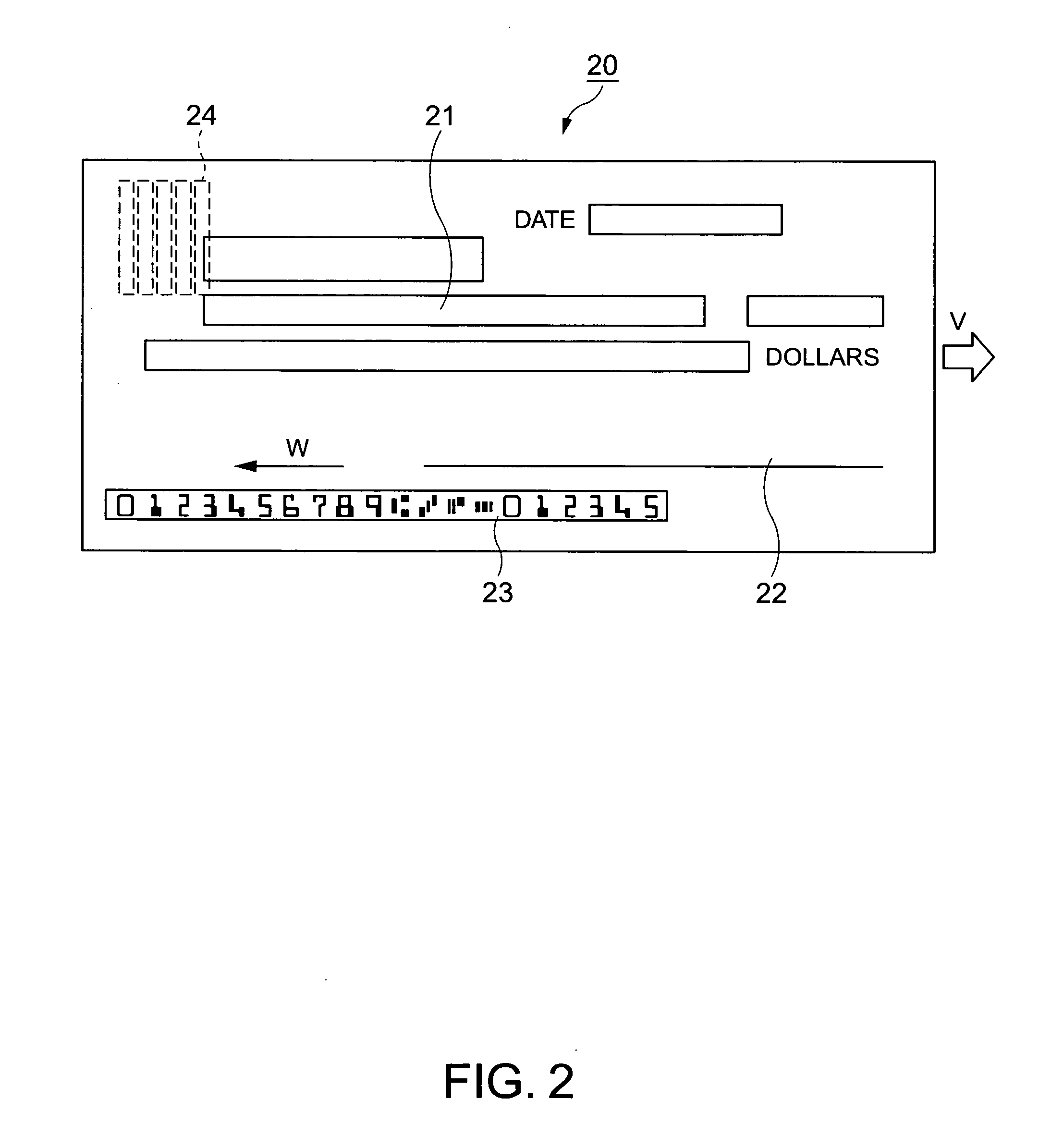

Optical reading apparatus, character recognition processing apparatus, character reading method and program, magnetic ink character reading apparatus, and POS terminal apparatus

Inactive | US20050286752A1 | Improving mismatch rate | Accurate recognition rate | Character recognition | Engineering | Optical character recognition

An optical reading apparatus and optical character recognition processing apparatus operating in conjunction with a magnetic ink character reading apparatus reduce the time in reading a string of characters formed in a line on a processed medium. This is done in the optical reading and recognition operations by selectively using a broad recognition area that allows for variation in character positions and a narrower recognition area where the probability of the desired character string being present is high depending on past results. An extracted image containing the character string is acquired from scanned image data and the recognition process is run. If recognition succeeds, the next matching area is set to a relatively narrow predicted range and the recognition process is applied to the predicted range. If character recognition succeeds within a specified distance, the next matching area is set to a relatively narrow predicted range. If character recognition does not succeed within the specified distance, the next matching area is set to the full width of the extracted image.
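The choice between the narrow predicted range and the full-width search can be sketched as a small function. The margin of 20 pixels and the name `next_matching_area` are assumptions; the abstract only states that the area is narrow after a success and falls back to the full width of the extracted image after a failure.

```python
def next_matching_area(found_at, image_width, margin=20):
    """Choose the horizontal range (start, end) to search next.

    found_at: x position where the character string was last recognized,
              or None if recognition did not succeed.
    margin:   half-width of the narrow predicted range (assumed value).
    """
    if found_at is None:
        # Recognition failed: fall back to the full extracted image width.
        return (0, image_width)
    # Recognition succeeded: search a narrow range around the last position.
    start = max(0, found_at - margin)
    end = min(image_width, found_at + margin)
    return (start, end)

print(next_matching_area(300, 800))   # narrow range around x = 300
print(next_matching_area(None, 800))  # full width after a failure
```

Searching the narrow range first is what saves time when consecutive documents place the character string in roughly the same position.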

Owner:SEIKO EPSON CORP

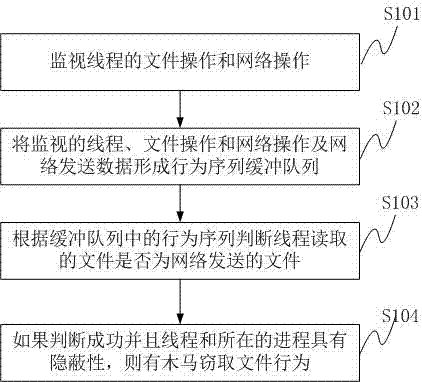

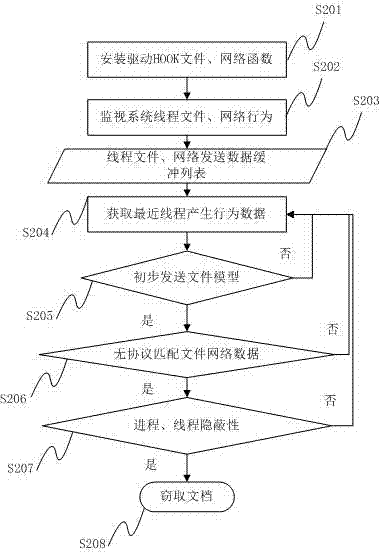

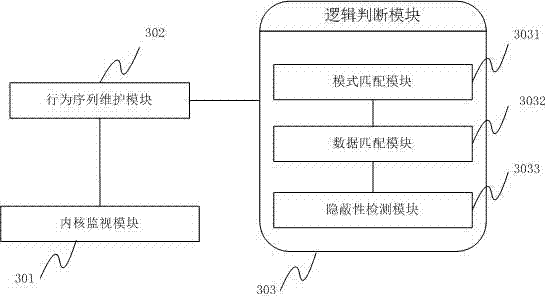

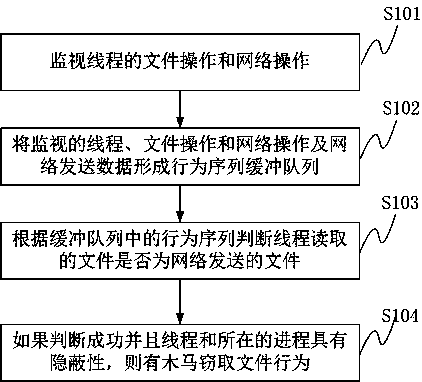

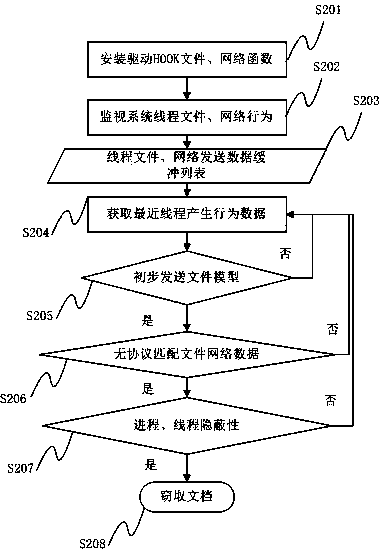

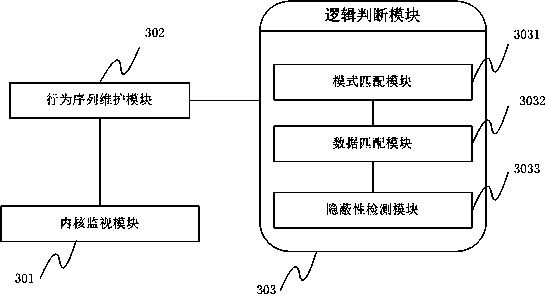

Method and system for detecting file stealing Trojan based on thread behavior

Active | CN102394859A | Accurate recognition rate | No false positives | Platform integrity maintenance | Transmission | File transmission | Operating system

The invention provides a method for detecting a file-stealing Trojan based on thread behavior, comprising the following steps: monitoring the file operations and network operations of a thread; forming a behavior sequence buffer queue from the monitored thread, the process it belongs to, the intercepted file operations, the data read from files, the network operations and the data transmitted over the network; judging, according to the behavior sequence in the buffer queue, whether a file read by the thread is a file transmitted over the network; if so, checking whether the thread and its process exhibit secrecy; and if so, determining that a Trojan file-stealing behavior exists. The invention also provides a system for detecting a file-stealing Trojan based on thread behavior. The method and system reduce false reports on normal file transmission and improve the detection of Trojan file-stealing behavior.
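The decision logic over the behavior sequence buffer queue can be sketched as below. The event format, the substring check used to decide that transmitted data came from a file, and the `hidden_threads` input standing in for the secrecy check are all assumptions; the abstract does not describe how any of these are implemented.

```python
from collections import deque

def detect_file_stealing(events, hidden_threads, queue_size=100):
    """Return the set of thread ids flagged as file-stealing.

    events:         iterable of (thread_id, op, data) tuples, where op is
                    "file_read" or "net_send" and data is bytes.
    hidden_threads: thread ids judged to be concealed (hypothetical input
                    standing in for the secrecy check).
    """
    queue = deque(maxlen=queue_size)  # behavior sequence buffer queue
    flagged = set()
    for thread_id, op, data in events:
        queue.append((thread_id, op, data))
        if op != "net_send":
            continue
        # Did this thread earlier read file data that now appears in the
        # transmitted payload?
        sent_file_data = any(
            t == thread_id and o == "file_read" and d and d in data
            for t, o, d in queue
        )
        # Only a concealed thread sending file data counts as stealing.
        if sent_file_data and thread_id in hidden_threads:
            flagged.add(thread_id)
    return flagged

events = [
    (1, "file_read", b"secret"),
    (1, "net_send", b"hdr" + b"secret"),
    (2, "file_read", b"report"),
    (2, "net_send", b"report"),
]
print(detect_file_stealing(events, hidden_threads={1}))
```

Thread 2 also sends a file it read, but it is not concealed, so it is treated as normal file transmission; this second condition is what the abstract credits with reducing false reports.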

Owner:HARBIN ANTIY TECH

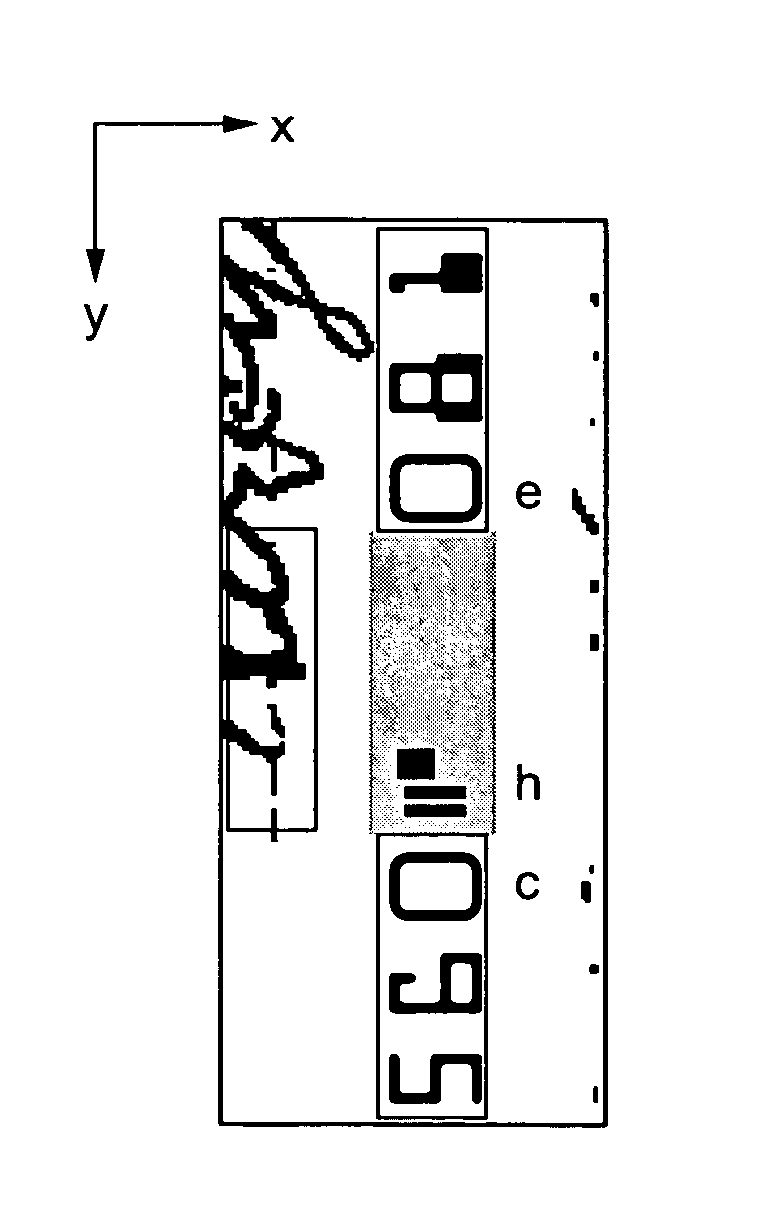

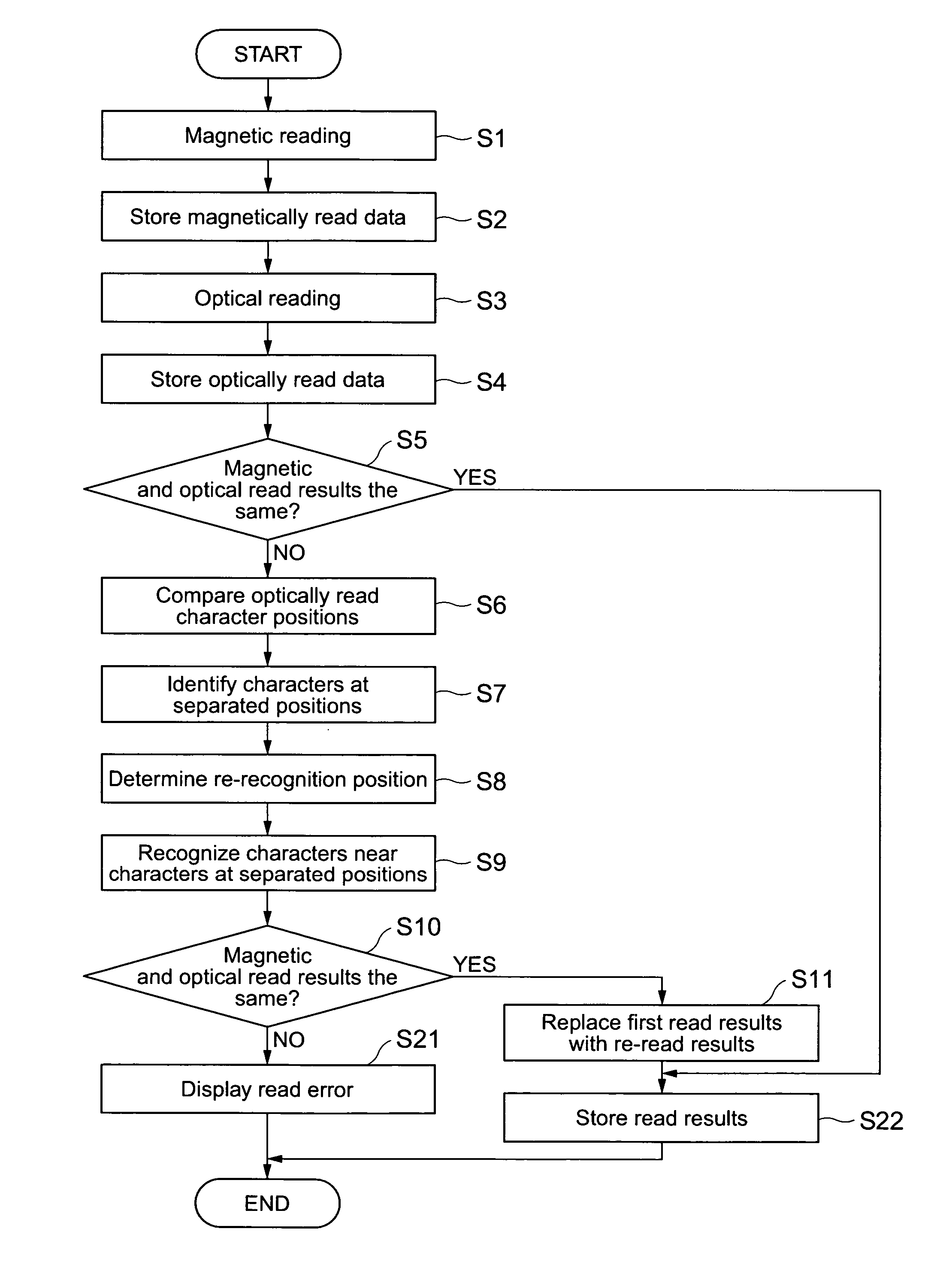

Magnetic ink character reading method and program

Inactive | US7606408B2 | Suppress misidentification | Improve read rate | Complete banking machines | Voting apparatus | Text string | Identification error

A magnetic ink character reading apparatus, magnetic ink character reading method and program, and a POS terminal apparatus reduce recognition errors and thereby improve the read rate. The magnetic ink character reading apparatus reads a text string of magnetic ink characters using both a magnetic reading mechanism and optical reading mechanism to obtain magnetic ink character recognition (MICR) results and optical character recognition (OCR) results, which are compared. The OCR process is repeated if the results differ. The positions of the read character blocks are compared to find character blocks that are offset perpendicularly to the base line of the magnetic ink characters, and the OCR process is repeated. The area to which the OCR process is applied again is near the position of the offset character block corrected in the direction perpendicular to the line of magnetic ink characters to be in line with the character blocks for which the MICR result and OCR result were the same.
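The comparison of MICR and OCR results, followed by the search for vertically offset character blocks, can be sketched as follows. Using the median position of the agreeing blocks as the reference line, the tolerance of 2 units, and the name `plan_ocr_retries` are assumptions made for illustration.

```python
from statistics import median

def plan_ocr_retries(micr, ocr, block_y, tolerance=2):
    """Return [(index, corrected_y)] for blocks where OCR should be re-run.

    micr, ocr: per-character recognition results of equal length.
    block_y:   vertical position of each character block.
    tolerance: allowed offset from the reference line (assumed value).
    """
    # Blocks where both readers agree define the reference line.
    agreed_y = [y for m, o, y in zip(micr, ocr, block_y) if m == o]
    if not agreed_y:
        return []
    baseline = median(agreed_y)
    retries = []
    for i, (m, o, y) in enumerate(zip(micr, ocr, block_y)):
        # Re-run OCR only where the results differ and the block is offset.
        if m != o and abs(y - baseline) > tolerance:
            retries.append((i, baseline))
    return retries

print(plan_ocr_retries("12345", "12845", [100, 100, 109, 101, 100]))
```

In the example, the third character is read differently by the two mechanisms and its block sits 9 units off the line, so OCR is scheduled again at the corrected position.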

Owner:SEIKO EPSON CORP

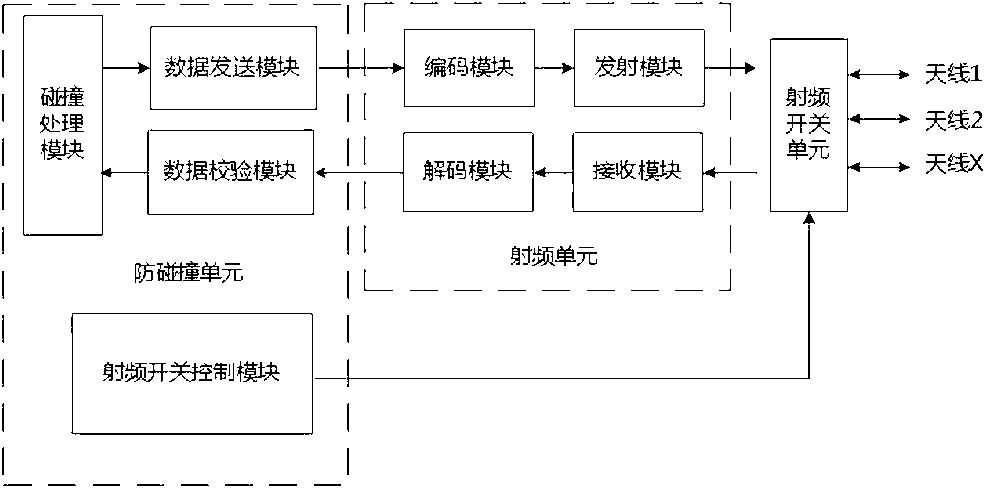

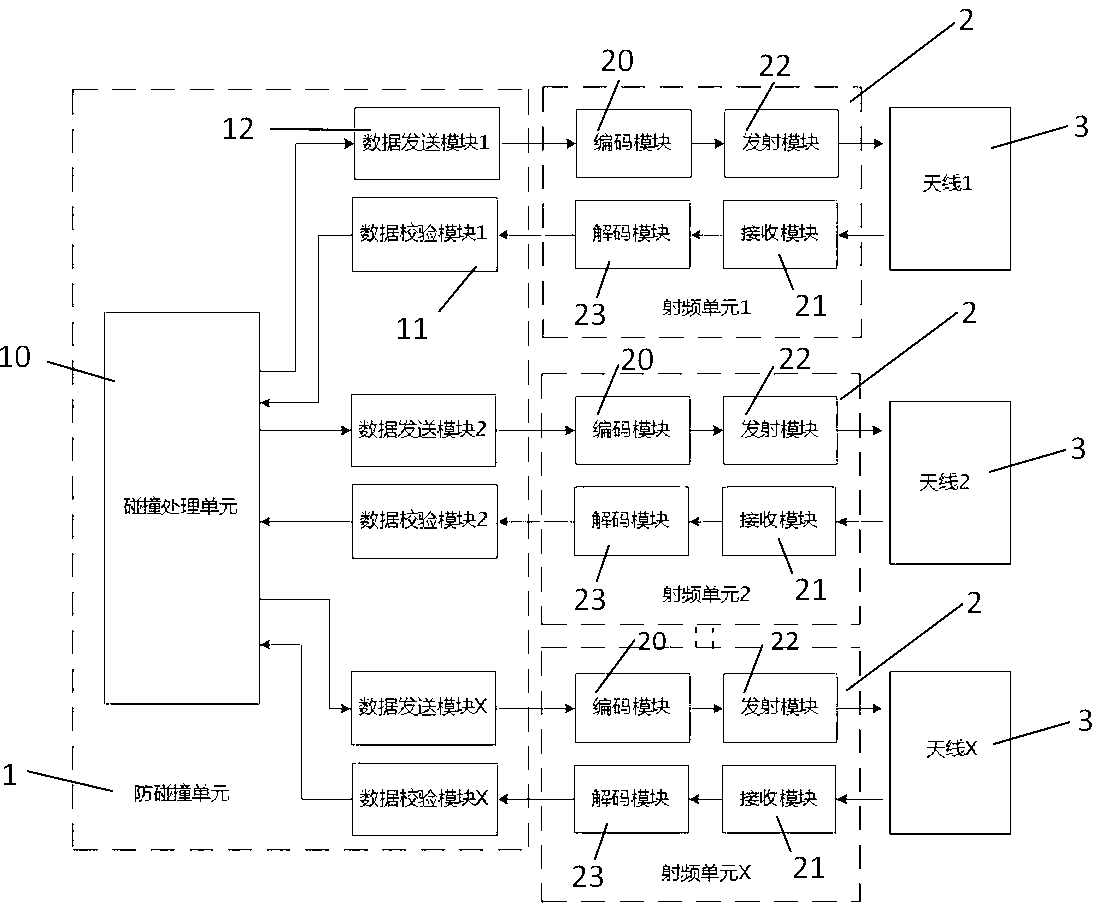

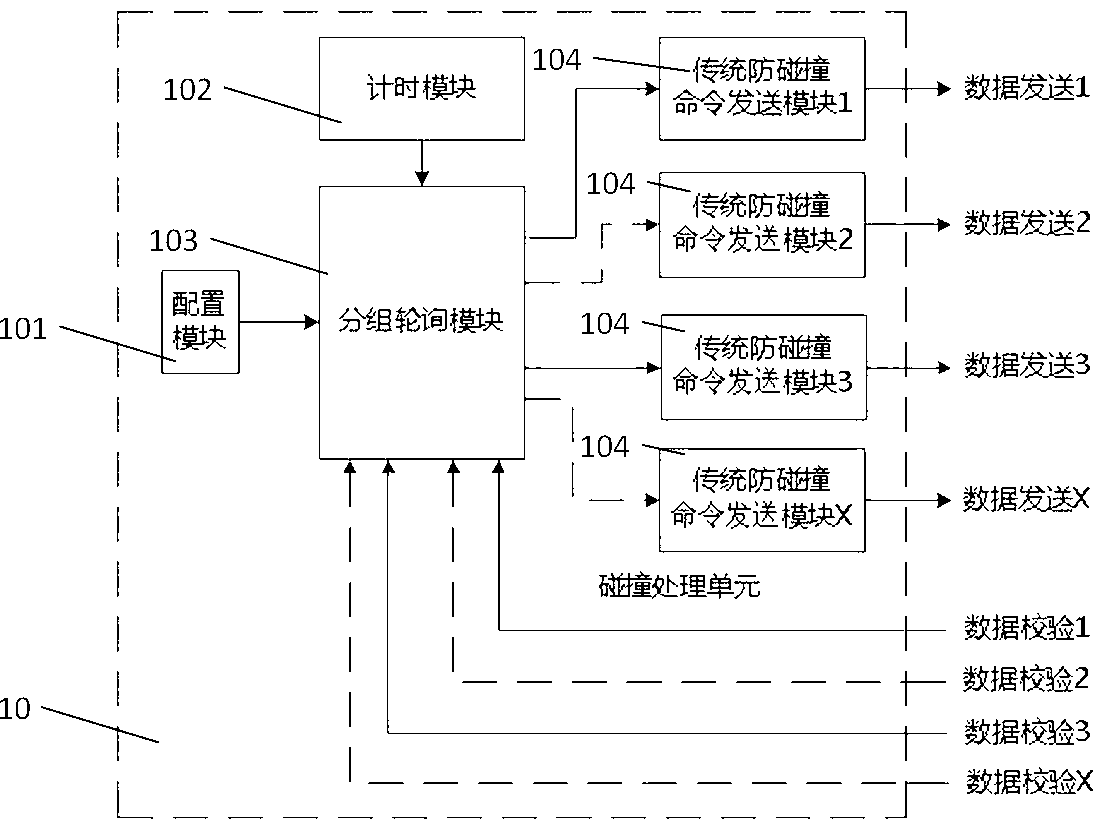

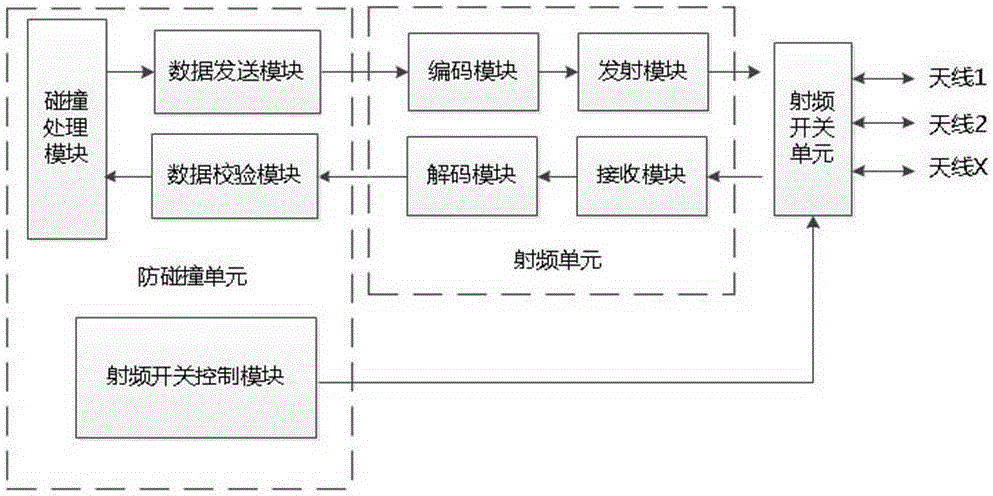

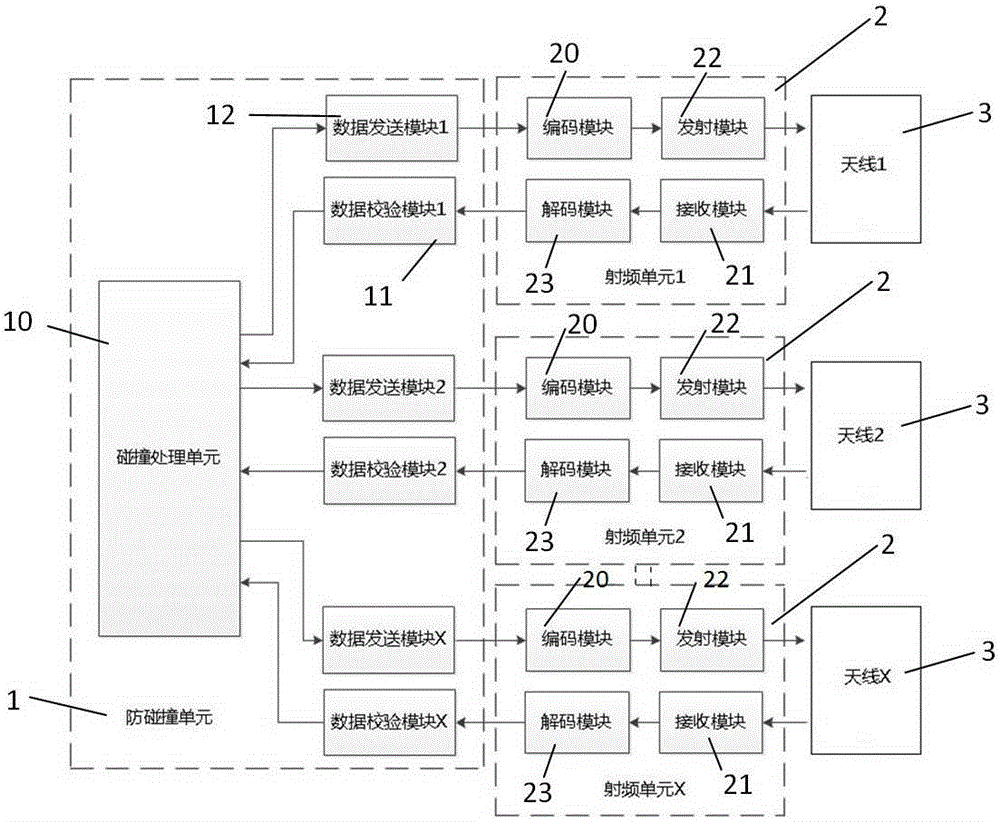

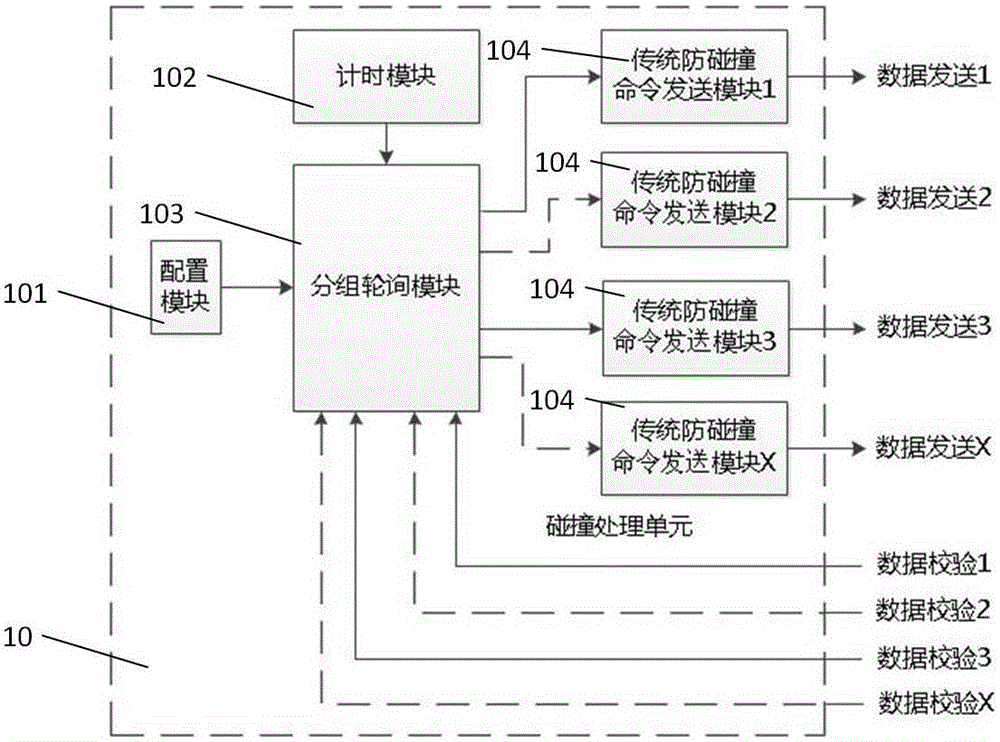

RFID (Radio Frequency Identification) reader with multiple antennae working simultaneously, and radio frequency data signal identification method of same

Active | CN103324969A | Improve recognition rate | Accurate recognition rate | Co-operative working arrangements | Data signal | Engineering

The invention provides an RFID (Radio Frequency Identification) reader with multiple antennae working simultaneously, and a radio frequency data signal identification method for the same. The RFID reader comprises an anti-collision unit, more than two radio frequency units and more than two antennae, wherein the radio frequency units are each connected with the anti-collision unit, and each antenna is connected with one radio frequency unit. The RFID reader enables multiple RFID antennae to work simultaneously and solves the problem that existing multi-antenna RFID systems are prone to missed identifications because only one antenna can work at a time.

Owner:XIAMEN XINDECO IOT TECH

Magnetic ink character reading method and program

Inactive | US20050281449A1 | Suppress misidentification | Improve read rate | Character and pattern recognition | Payment architecture | Terminal equipment | Text string

A magnetic ink character reading apparatus, magnetic ink character reading method and program, and a POS terminal apparatus reduce recognition errors and thereby improve the read rate. The magnetic ink character reading apparatus reads a text string of magnetic ink characters using both a magnetic reading mechanism and optical reading mechanism to obtain magnetic ink character recognition (MICR) results and optical character recognition (OCR) results, which are compared. The OCR process is repeated if the results differ. The positions of the read character blocks are compared to find character blocks that are offset perpendicularly to the base line of the magnetic ink characters, and the OCR process is repeated. The area to which the OCR process is applied again is near the position of the offset character block corrected in the direction perpendicular to the line of magnetic ink characters to be in line with the character blocks for which the MICR result and OCR result were the same.

Owner:SEIKO EPSON CORP

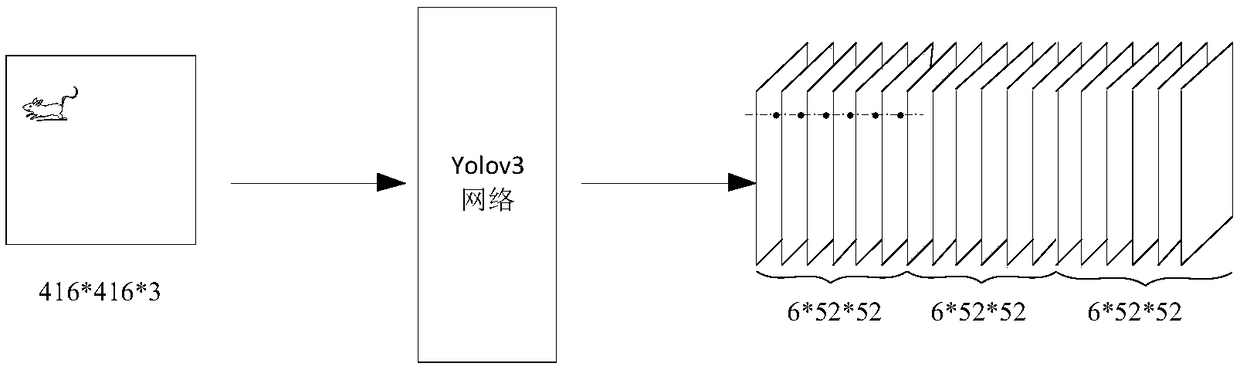

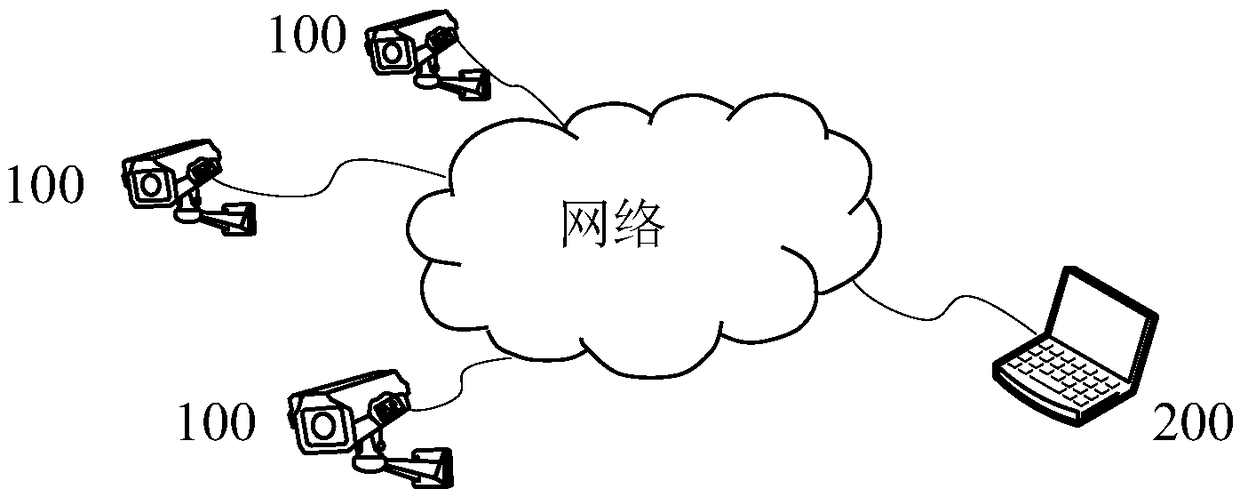

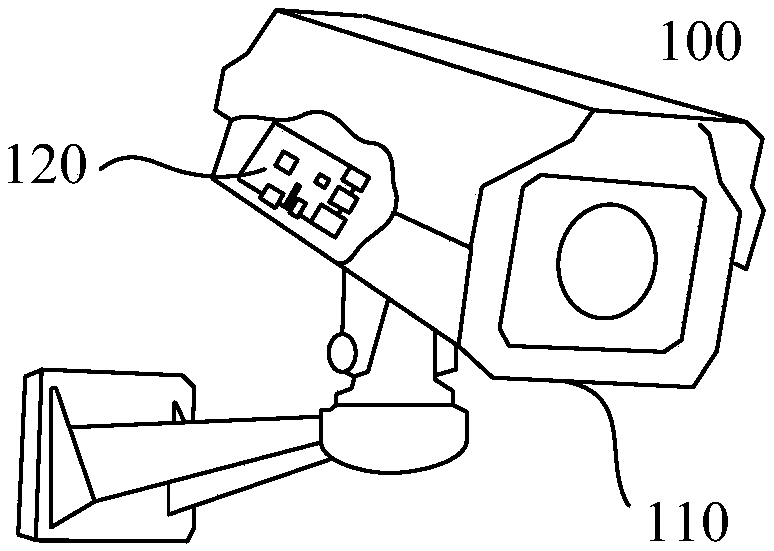

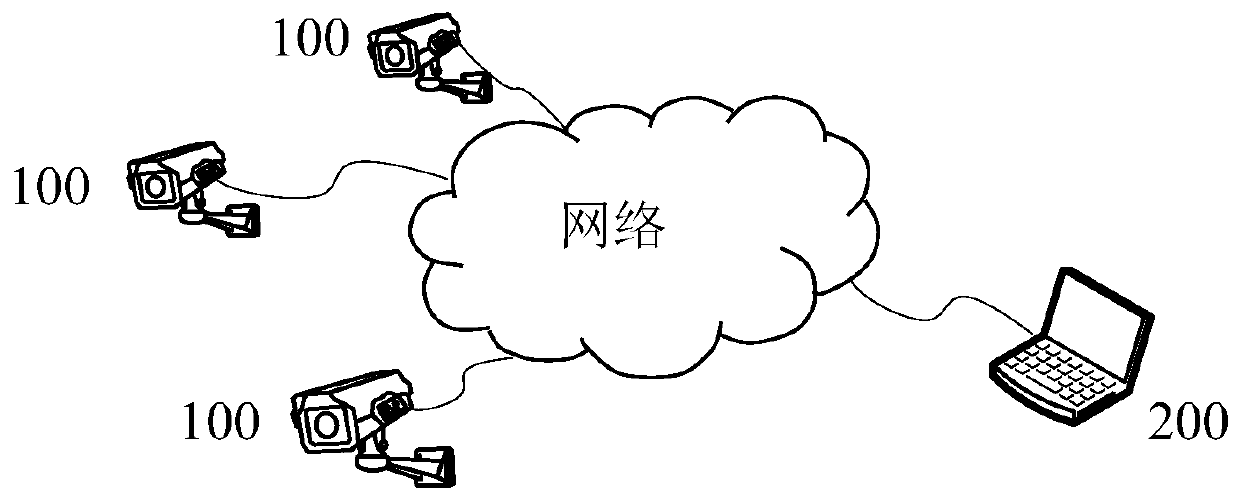

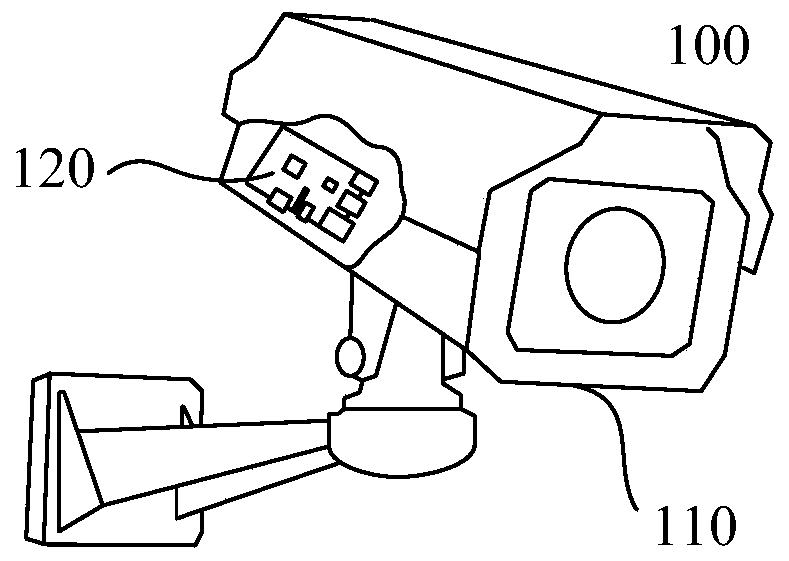

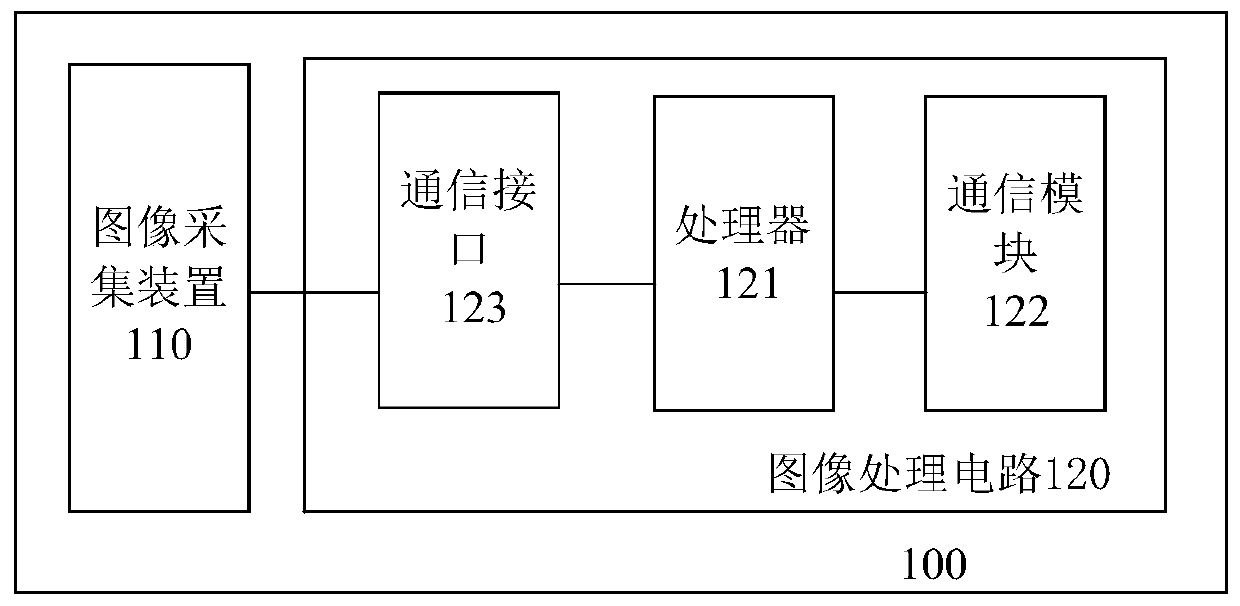

Method, device and image acquisition device for statistics of rat condition

Active | CN109299703A | Accurate recognition rate | Fast detection calculation | Character and pattern recognition | Machine vision | Computational model

The present application discloses a method, an apparatus and an image acquisition apparatus for counting rat conditions. The image acquisition apparatus comprises an image acquisition device (110) for acquiring images, and a processor (121) communicatively connected to the image acquisition device (110). The processor (121) is configured to obtain video from the image acquisition device (110) for a predetermined period of time, and to analyze the video using a computational model based on a convolutional neural network to generate first statistical information related to rat conditions in the monitoring area monitored by the image acquisition device. The invention thus solves the technical problems of existing machine vision monitoring methods: detection efficiency is low, the data volume is too large to obtain detection pictures quickly, and adding surveillance cameras increases the computational burden on the server.

Owner:思百达物联网科技(北京)有限公司

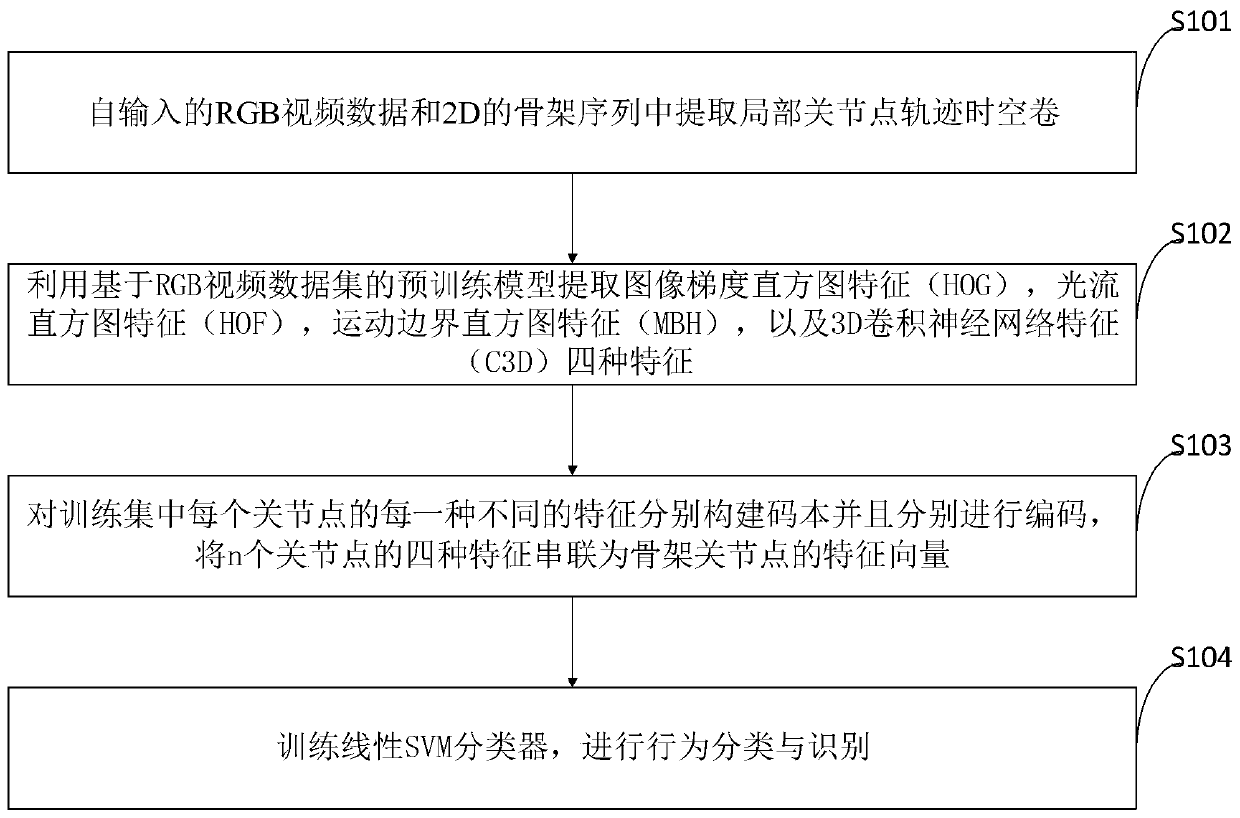

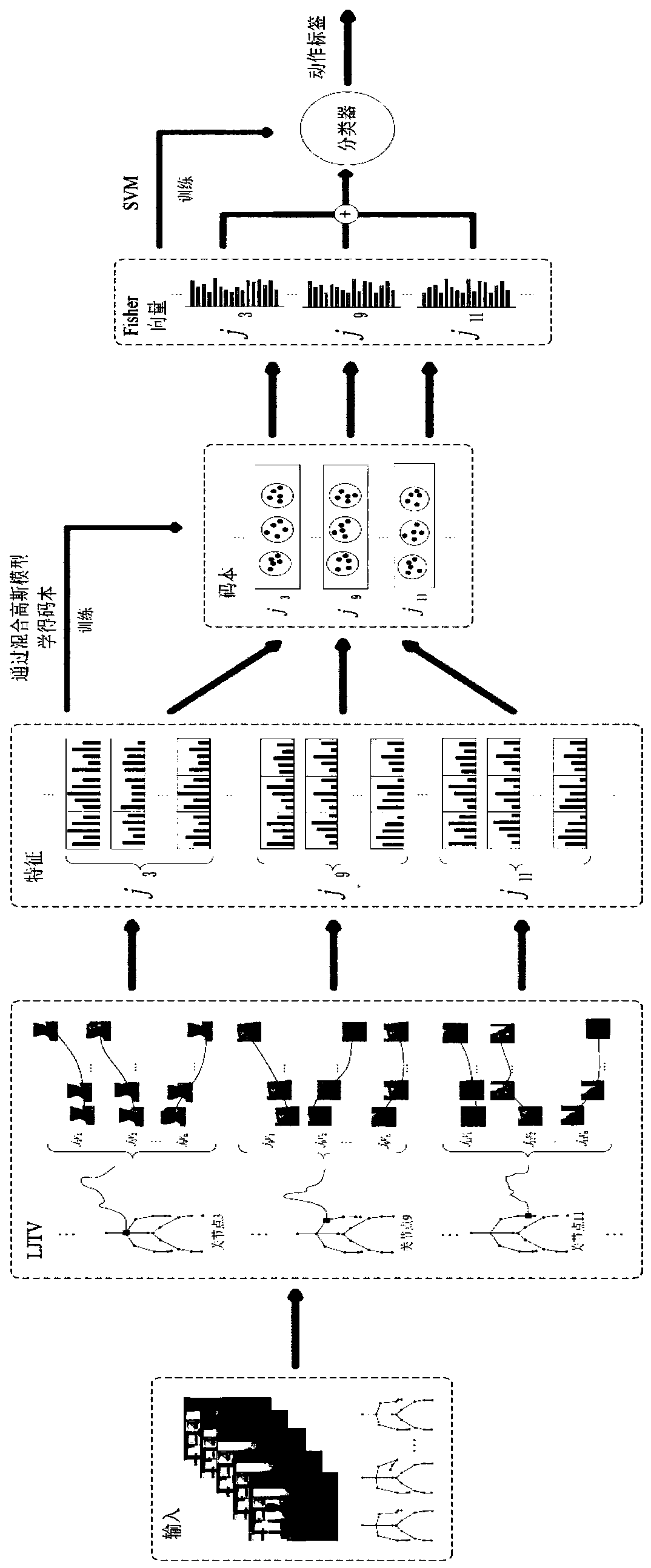

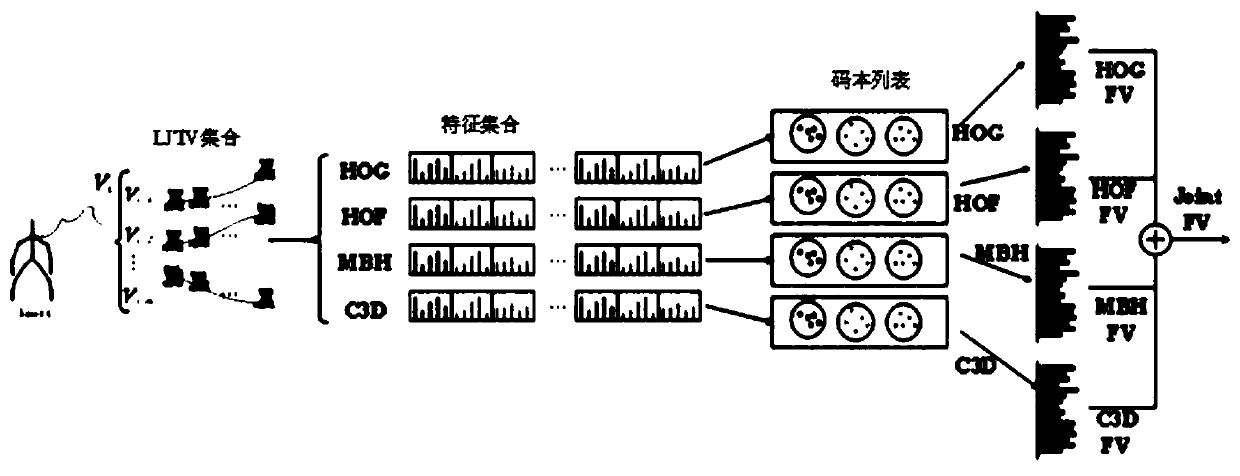

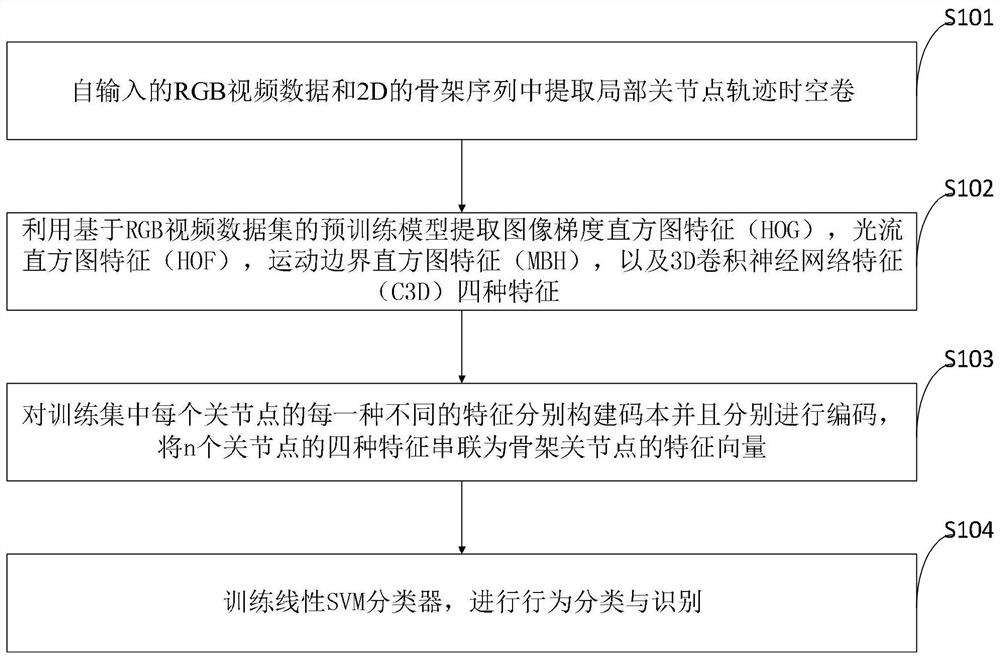

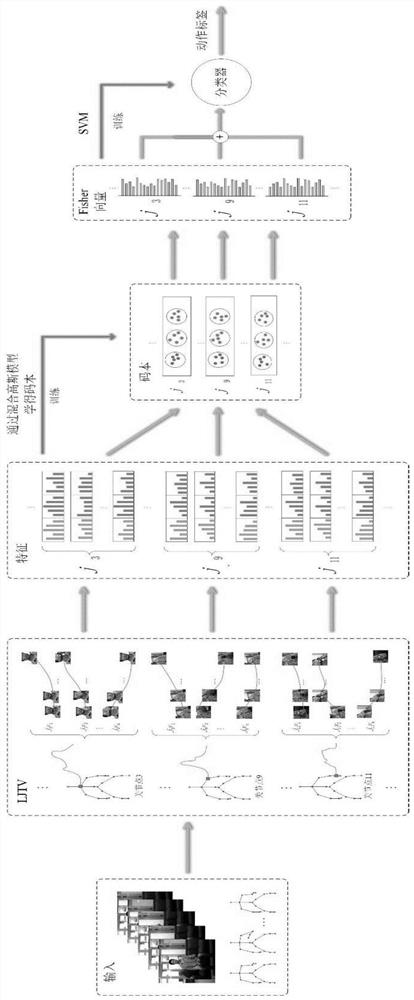

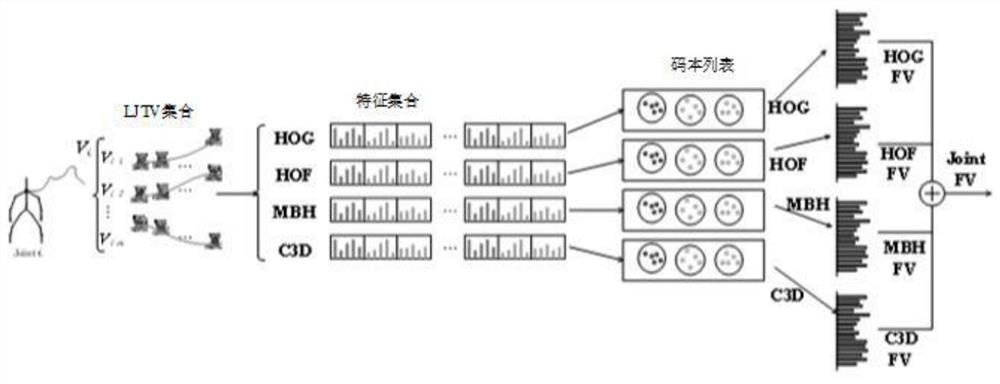

Behavior identification method based on local joint point track space-time volume in skeleton sequence

Active | CN110555387A | Stable and accurate recognition rate | Increase cost | Character and pattern recognition | Human body | Model extraction

The invention belongs to the technical field of artificial intelligence and discloses a behavior recognition method based on the local joint point trajectory space-time volume in a skeleton sequence. The method comprises the steps of: extracting the local joint point trajectory space-time volume from the input RGB video data and skeleton joint point data; extracting image features using a model pre-trained on the RGB video data set; constructing a codebook for each different feature of each joint point in the training set and performing encoding, then connecting the features of the n joint points in series to form a feature vector; and performing behavior classification and recognition with an SVM classifier. The method fuses manual features with deep learning features, extracts local features with a deep learning method, and achieves a stable and accurate recognition rate through the fusion of multiple features. Because the features are extracted from the 2D human skeleton estimated by a pose estimation algorithm and from the RGB video sequence, the cost is low and the precision is high, which gives the method important significance when applied to real scenes.

Owner:HUAQIAO UNIVERSITY

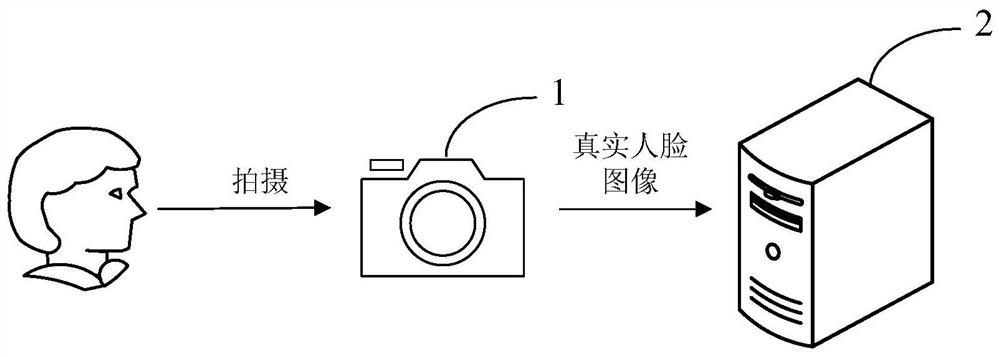

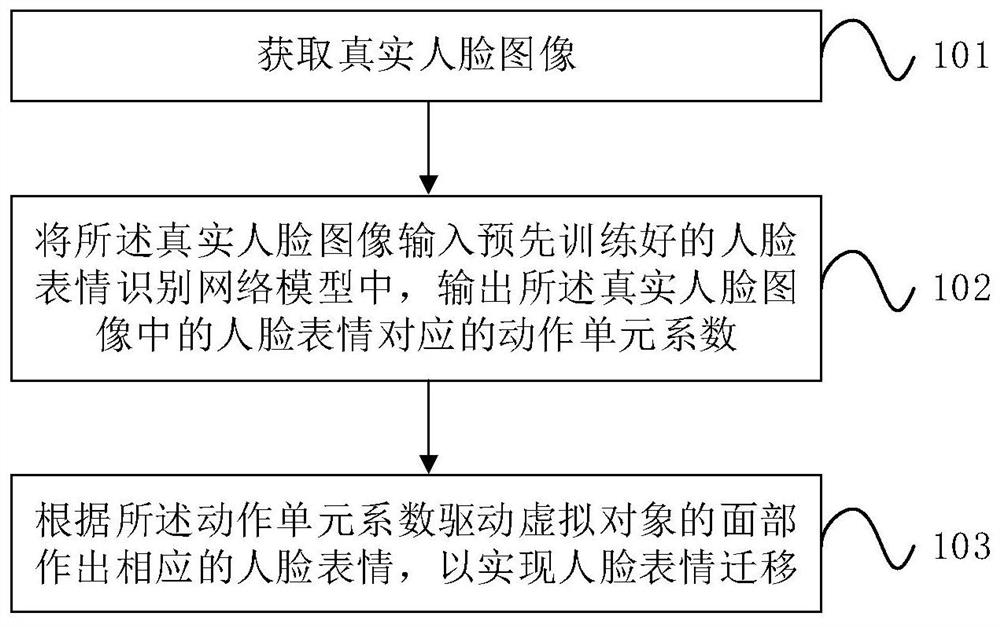

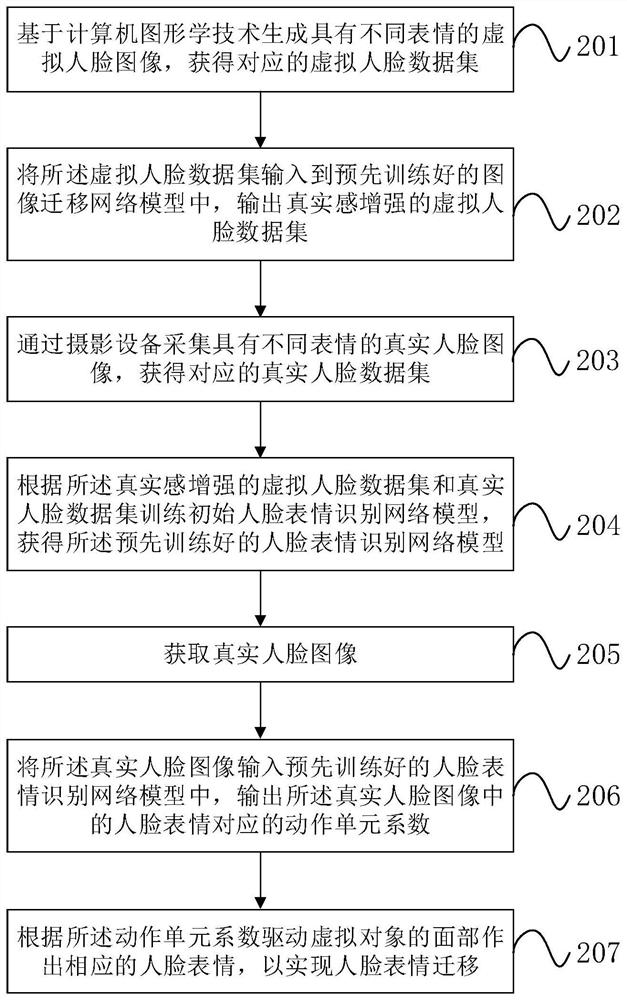

Facial expression migration method and device, electronic equipment and storage medium

Active | CN112541445A | Accurate recognition rate | Geometric image transformation | Energy efficient computing | Pattern recognition | Medicine

The invention provides a facial expression migration method and device, electronic equipment and a storage medium. The method comprises: obtaining a real face image; inputting the real face image into a pre-trained facial expression recognition network model, which outputs the action unit coefficients corresponding to the facial expression in the image, wherein the model is trained on a virtual face data set and a real face data set, and the action unit coefficients represent face data when a face shows different expressions; and driving the face of a virtual object to make the corresponding facial expression according to the action unit coefficients, thereby realizing facial expression migration. Because the facial expression recognition network model is obtained through joint training on the virtual and real face data sets, the recognition rate is more accurate, the accuracy of facial expression recognition is improved, and the virtual object can make more realistic facial expressions.

Owner:CHINA UNITED NETWORK COMM GRP CO LTD +1

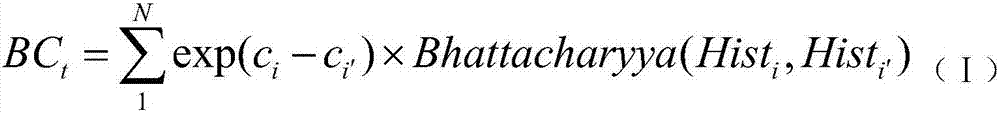

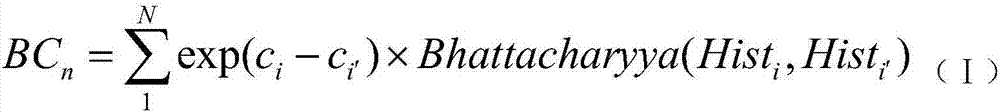

Novel video tracking method based on self-adaptive partitioning

Active | CN105118071A | Improve accuracy | Improve adaptability | Image analysis | Character and pattern recognition | Pattern recognition | Self adaptive

The invention relates to a novel video tracking method based on self-adaptive partitioning. The method fully considers the differences in pixel values inside the target area and, building on particle filtering, realizes efficient and self-adaptive video tracking, improving both accuracy and adaptivity. When occlusion, interference and similar problems occur during tracking, the method maintains a relatively accurate recognition rate. It fully considers the content information in the video and images and adaptively adjusts the partitioning strategy according to the characteristics of the tracked target area, thereby achieving highly intelligent, high-accuracy video tracking.

Owner:SHANDONG UNIV

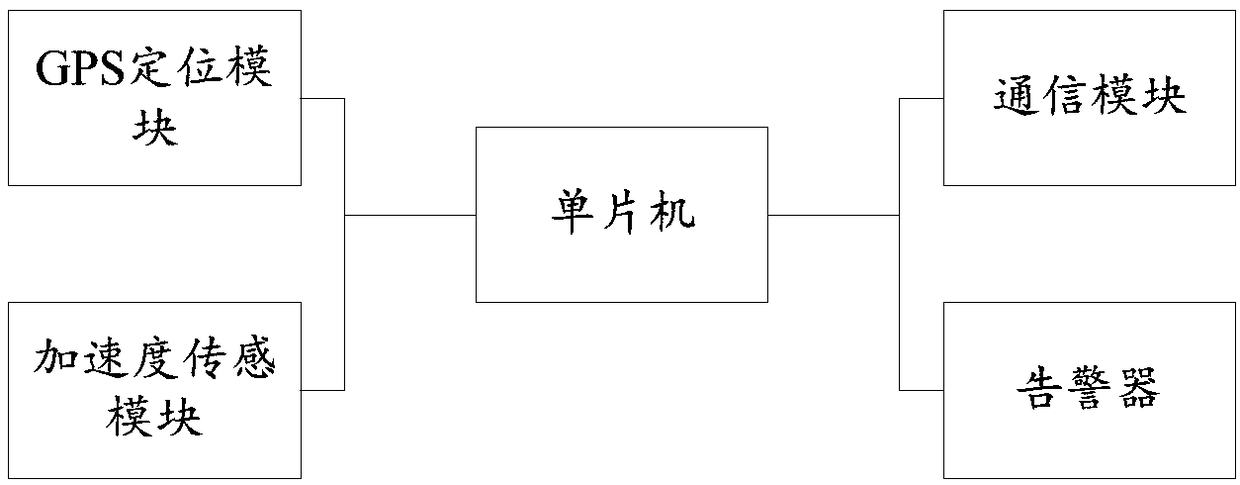

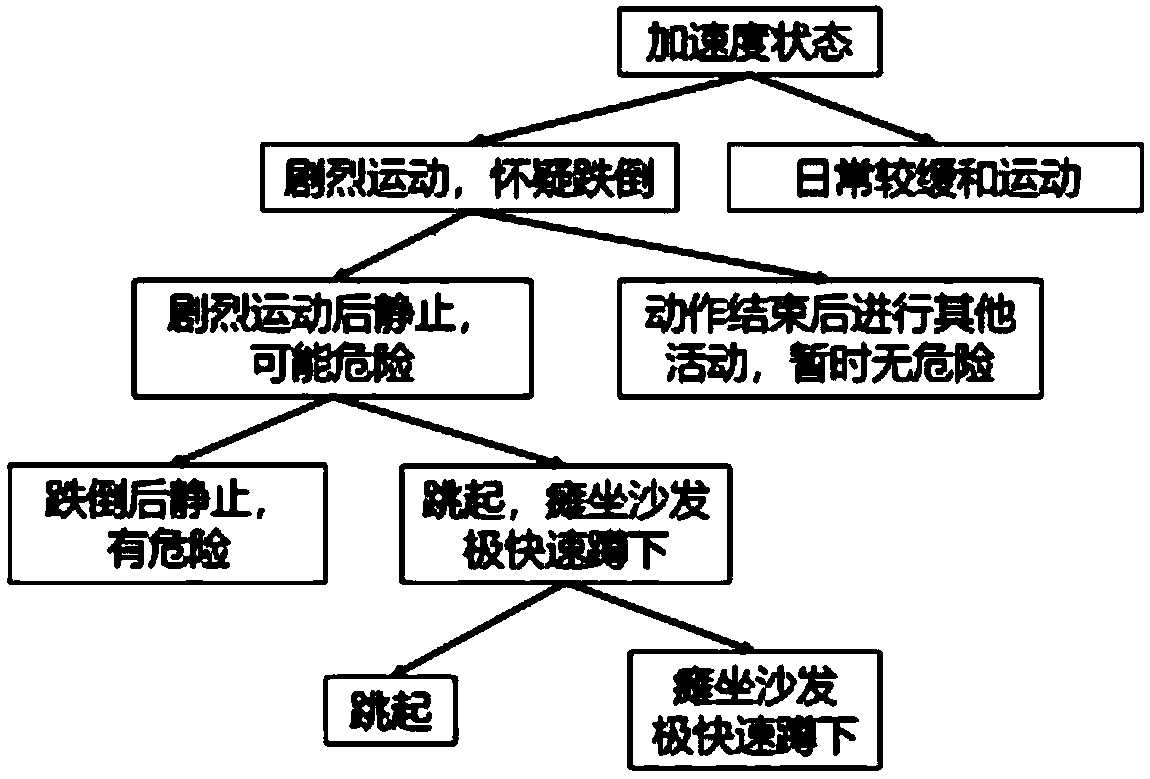

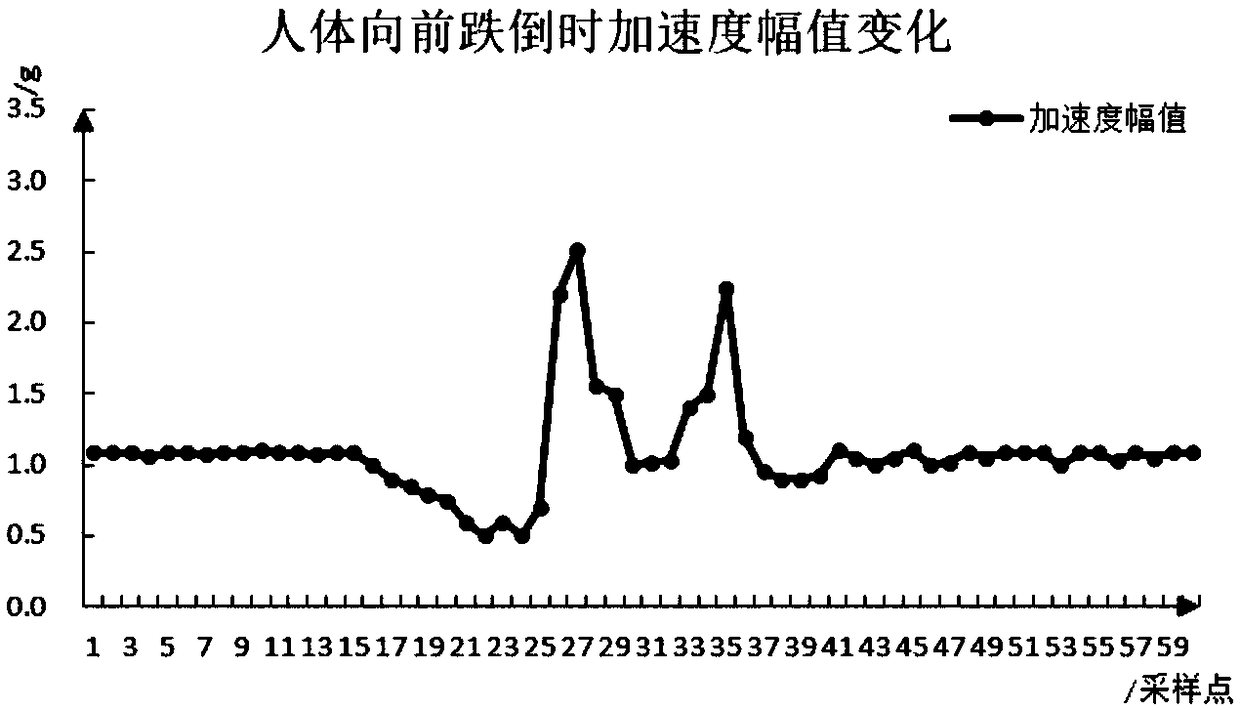

Safety protection terminal and system with tumbling recognition function

The invention discloses a safety protection terminal and system with a tumbling recognition function. The terminal comprises: an acceleration sensing module for obtaining the user's acceleration in real time; a GPS positioning module for obtaining the user's position information; a communication module for sending information under the control of a single-chip microcomputer; an alerter for issuing an alarm under the control of the single-chip microcomputer; and the single-chip microcomputer itself. The single-chip microcomputer calculates the acceleration amplitude and included angle from the acceleration obtained by the acceleration sensing module, takes them as state eigenvalues of the human body, determines the current user status from threshold conditions on the state eigenvalues in different human states in order to recognize the type of tumble, sends the recognized fall type and the position information through the communication module, and controls the alerter to issue the alarm. The terminal and system can provide good safety and security for the elderly, the disabled and similar users. The recognition accuracy rate is higher, and false alarms are reduced.
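The two state eigenvalues and a threshold test on them can be sketched as below. The abstract gives no formulas or threshold values, so measuring the included angle against the sensor's z axis, the thresholds of 2.5 g and 60 degrees, and the state names are all assumptions.

```python
import math

def state_features(ax, ay, az):
    """Return (amplitude, angle in degrees between acceleration and z axis)."""
    amplitude = math.sqrt(ax * ax + ay * ay + az * az)
    if amplitude == 0:
        return 0.0, 0.0
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, az / amplitude))
    return amplitude, math.degrees(math.acos(cos_angle))

def classify_state(ax, ay, az, impact_g=2.5, tilt_deg=60.0):
    """Classify one acceleration sample (in units of g) by threshold tests."""
    amplitude, angle = state_features(ax, ay, az)
    if amplitude >= impact_g and angle >= tilt_deg:
        return "fall"      # strong impact combined with a large tilt
    if amplitude >= impact_g:
        return "impact"    # strong impact while still roughly upright
    return "normal"

print(classify_state(0.0, 0.0, 1.0))  # standing still
print(classify_state(3.0, 0.0, 0.5))  # strong sideways acceleration
```

Requiring both conditions before reporting a fall is one plausible way to keep a hard step or a jump from triggering the alarm.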

Owner:SOUTHEAST UNIV

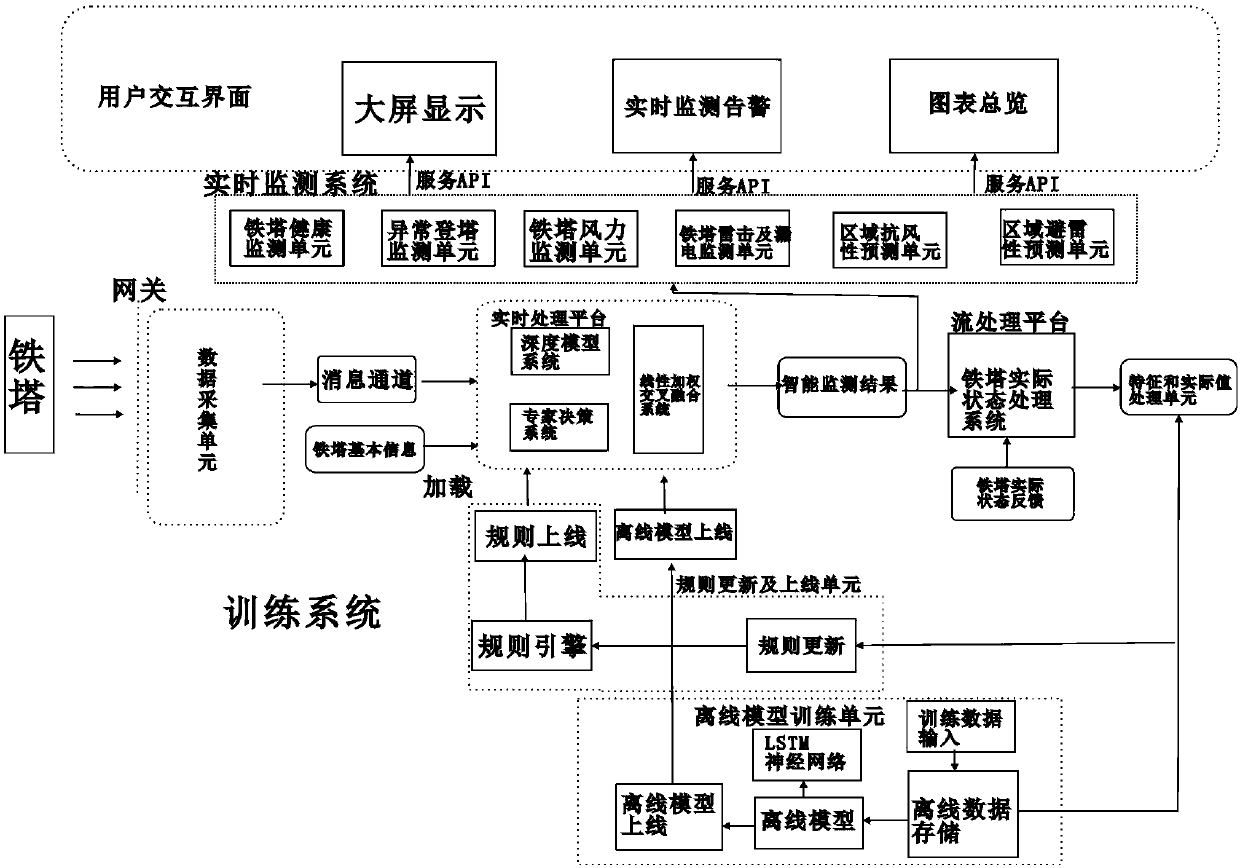

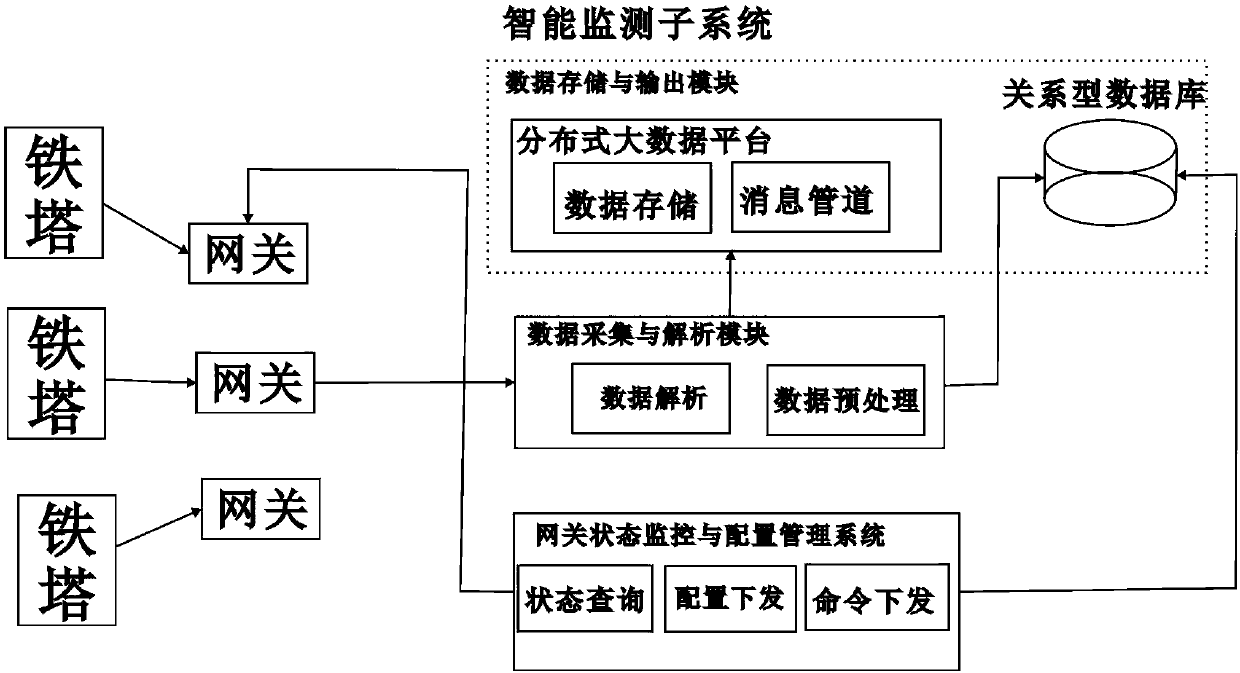

Intelligent detection system for monitoring real-time state of iron tower and method thereof

Inactive | CN109959820A | Accurate recognition rate | Strong real-time monitoring | Electrical measurements | Identification rate | Internet of Things

The invention provides an intelligent detection system for monitoring the real-time state of an iron tower, and a method thereof. The system comprises an iron tower, an iron tower intelligent monitoring platform, an intelligent monitoring subsystem and a user interaction interface. Four types of sensors are installed on the iron tower: an angle sensor for monitoring the tilt angle of the tower, a vibration sensor for monitoring its vibration, a Hall current sensor for monitoring lightning current, and an electric leakage detection sensor for detecting electric leakage. A gateway with a gateway monitoring management and configuration management system is also deployed on the iron tower; it collects the data and transmits them to the iron tower intelligent monitoring platform through an Internet of Things communication protocol. The data uploaded by the sensors on the tower can be intelligently analyzed, so that the state of the tower is monitored and judged in real time; through video acquisition, whether the tower is in a dangerous situation is also judged in real time. Compared with manual monitoring, the intelligent monitoring has a more accurate recognition rate.

Owner:广州奈英科技有限公司

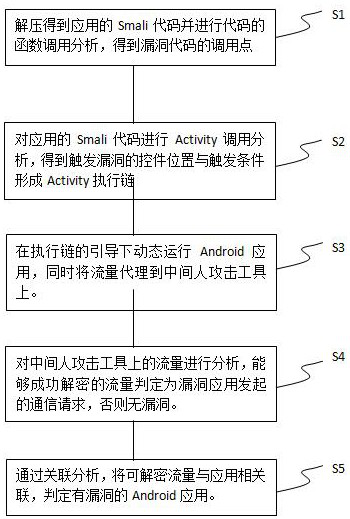

Android application digital certificate verification vulnerability detection system and method

Pending | CN112541179A | Avoid false positives | Accurate recognition rate | Digital data protection | Platform integrity maintenance | Application software | Embedded system

The invention belongs to the field of application software detection for Android terminals, and particularly relates to an Android application digital certificate verification vulnerability detection system and method. The system comprises a static detection module, a dynamic detection module and an intermediary agent module. The static detection module discovers applications that potentially have digital certificate verification vulnerabilities according to the static code characteristics of vulnerable applications. The dynamic detection module dynamically executes the applications to trigger the vulnerable code. The intermediary agent module initiates intermediary (man-in-the-middle) attacks and tries to decrypt HTTPS traffic to confirm whether digital certificate verification vulnerabilities really exist in the applications. By combining dynamic and static detection, the method makes up for the false alarms caused by static detection alone and the low efficiency caused by dynamic detection alone, achieves effective detection of applications, and addresses the low efficiency of manual auditing and the high cost of large-scale, market-level application detection.

Owner:STATE GRID HENAN ELECTRIC POWER ELECTRIC POWER SCI RES INST +1

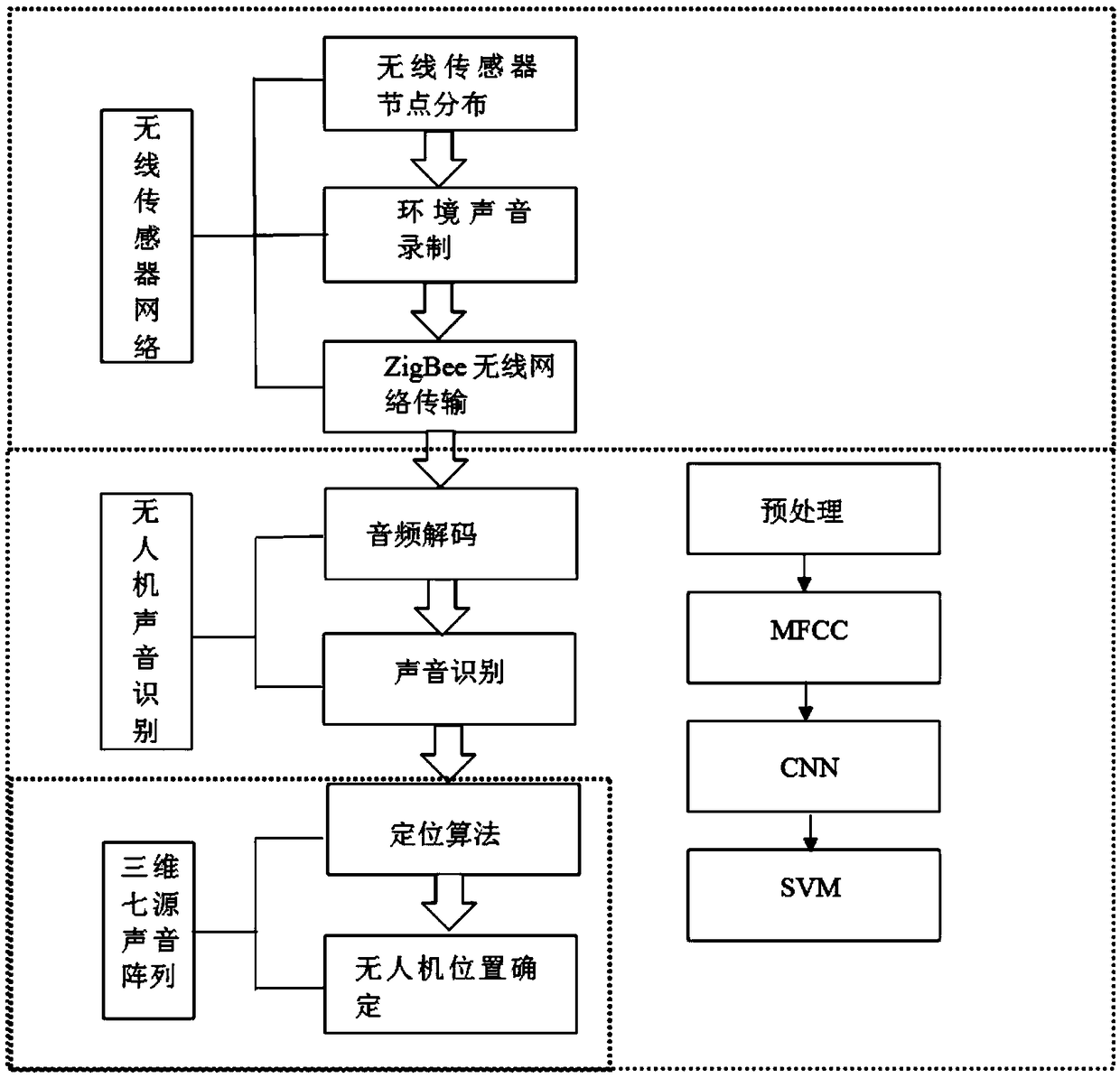

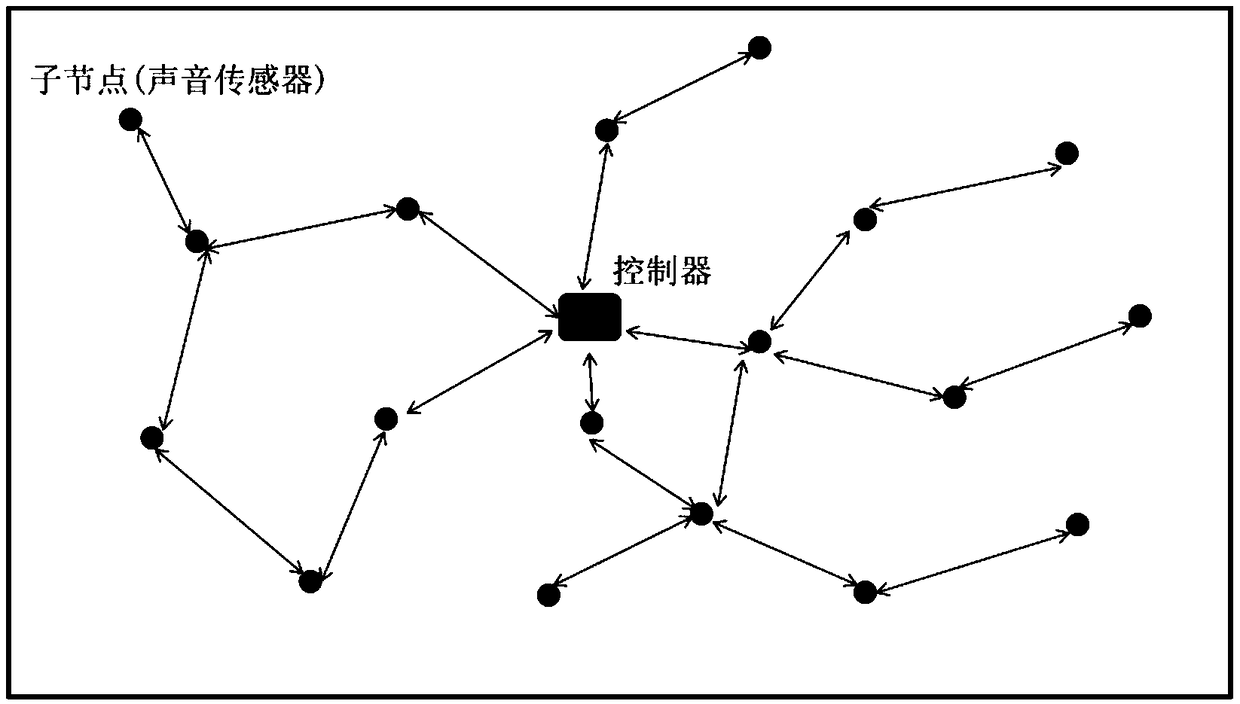

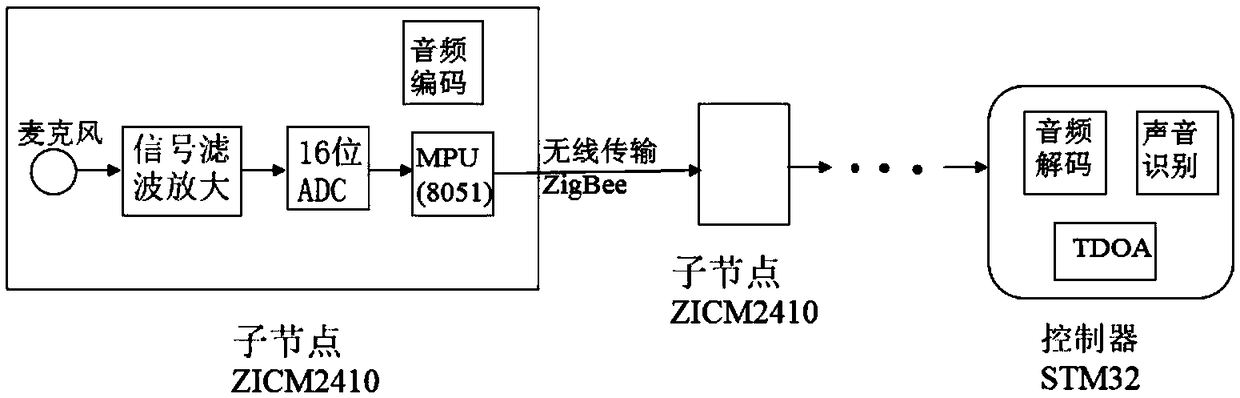

Sound recognizing and positioning device and method for unmanned aerial vehicle

Inactive | CN109343001A | Adaptable | Accurate recognition rate | Speech analysis | Position fixation | Sensor array | Sound sources

The invention discloses a sound recognizing and positioning device and method for unmanned aerial vehicles. The device comprises a wireless sound sensor network and a master controller. The wireless sound sensor network comprises a plurality of wireless sound sensor nodes, each mainly composed of seven sound sensors, a signal filtering and amplification module, an ADC module, an MCU and a wireless transmission module. The master controller comprises a decoding module, a sound recognition module, and a sound source positioning module based on a three-dimensional seven-element sound sensor array. Environmental sound monitoring and transmission are performed over the wireless sensor network, which is convenient, fast and highly adaptable. For sound recognition, in addition to MFCC feature extraction, the sound is also input into a CNN model, and an SVM then determines whether it contains the sound of an unmanned aerial vehicle, so that the recognition rate is more accurate. Finally, a sound source positioning algorithm based on the three-dimensional seven-element sound sensor array positions the unmanned aerial vehicle in three dimensions, so that the positioning is more accurate.

Owner:JIANGXI UNIV OF SCI & TECH

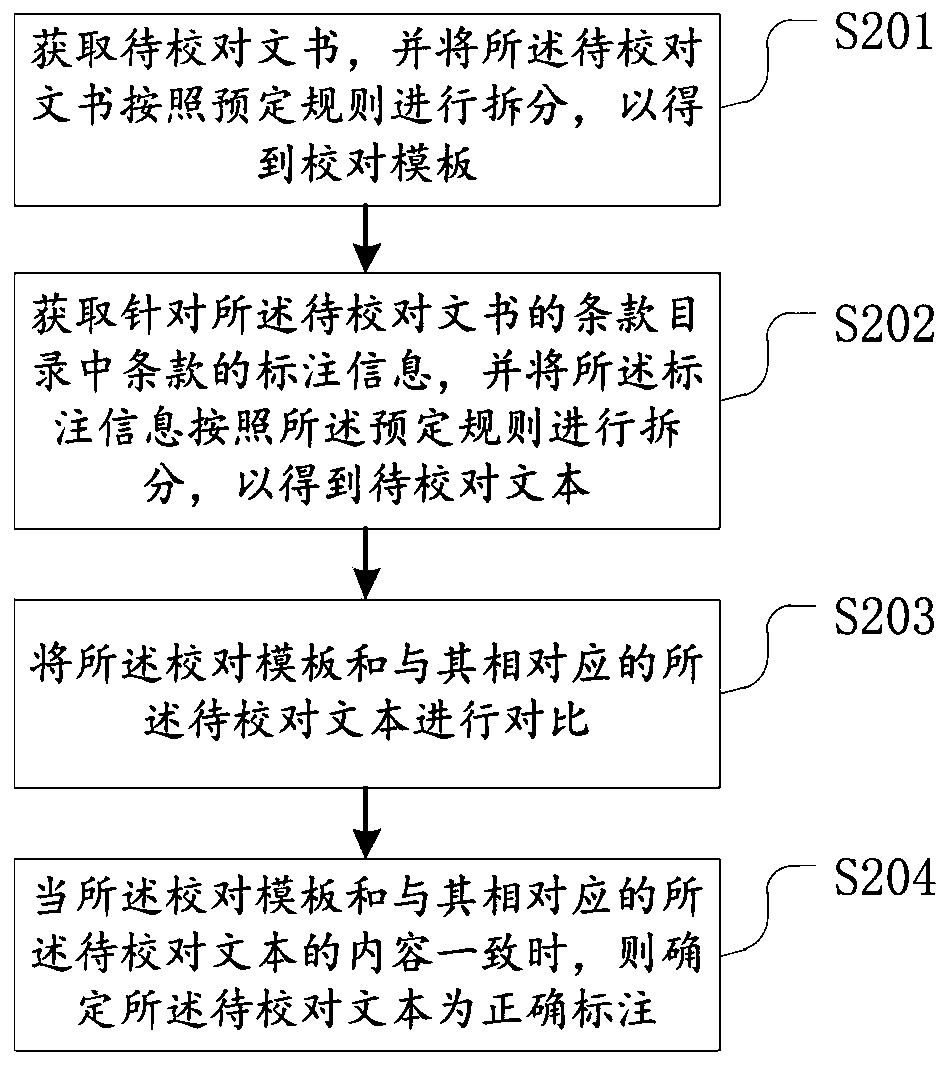

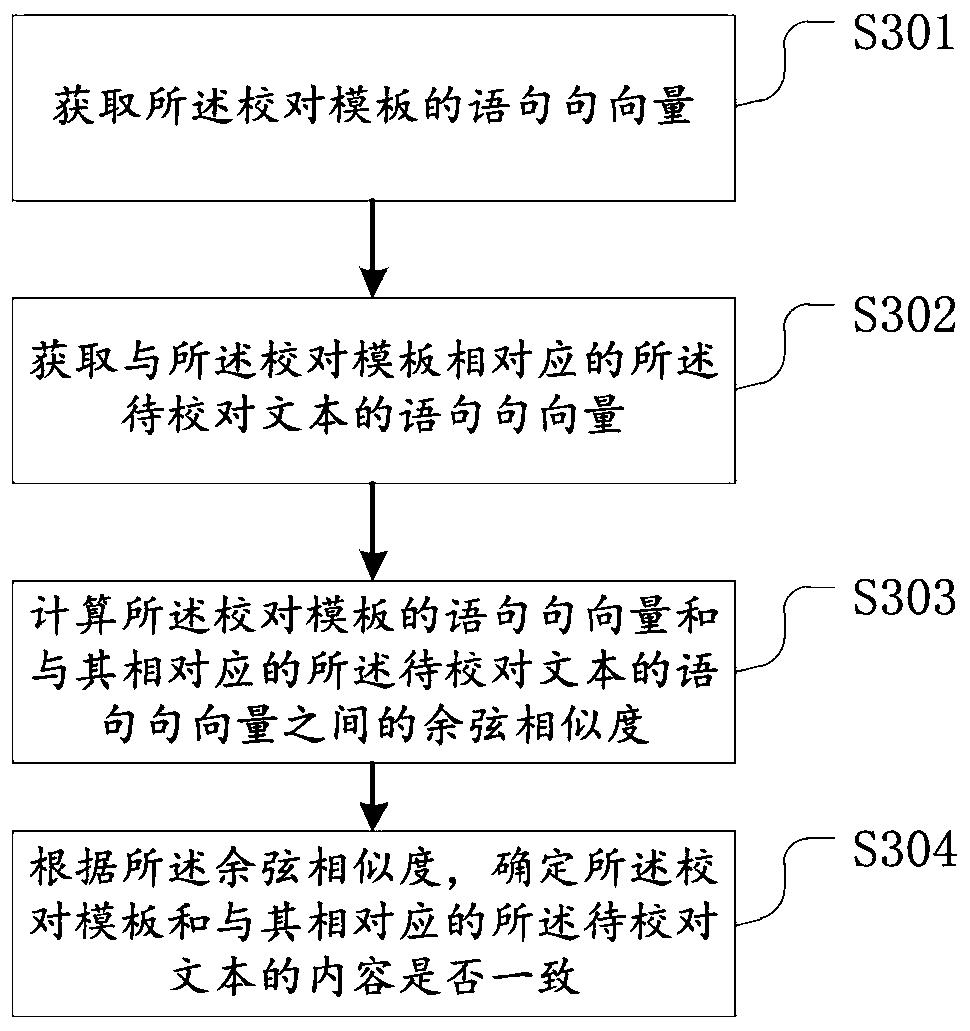

Document review proofreading method and device, storage medium and electronic equipment

Pending | CN110674633A | Accurate recognition rate | Guaranteed uniformity | Natural language data processing | Natural language processing | Engineering

The invention provides a document review proofreading method and device, and belongs to the technical field of similarity matching. The method comprises: obtaining a document to be proofread and splitting it according to a preset rule to obtain a proofreading template; obtaining annotation information for the terms in the term directory of the document and splitting the annotation information according to the preset rule to obtain a text to be proofread; comparing the proofreading template with the text to be proofread that corresponds to it; and, when the content of the proofreading template is consistent with that of the corresponding text, determining that the text to be proofread is correctly labeled. The method improves the efficiency of document review and proofreading and reduces the proofreading cost.

Owner:PING AN TECH (SHENZHEN) CO LTD

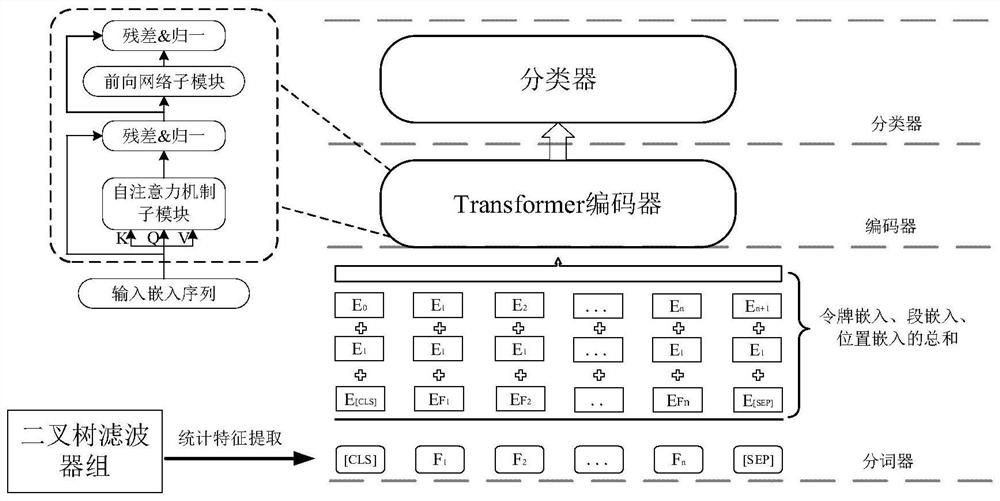

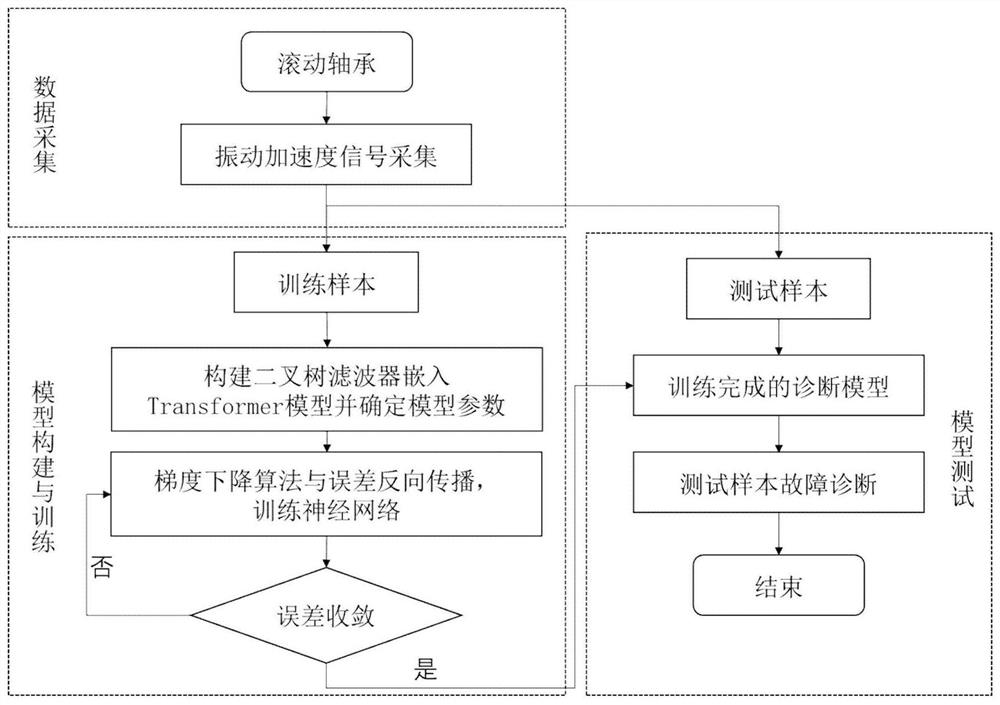

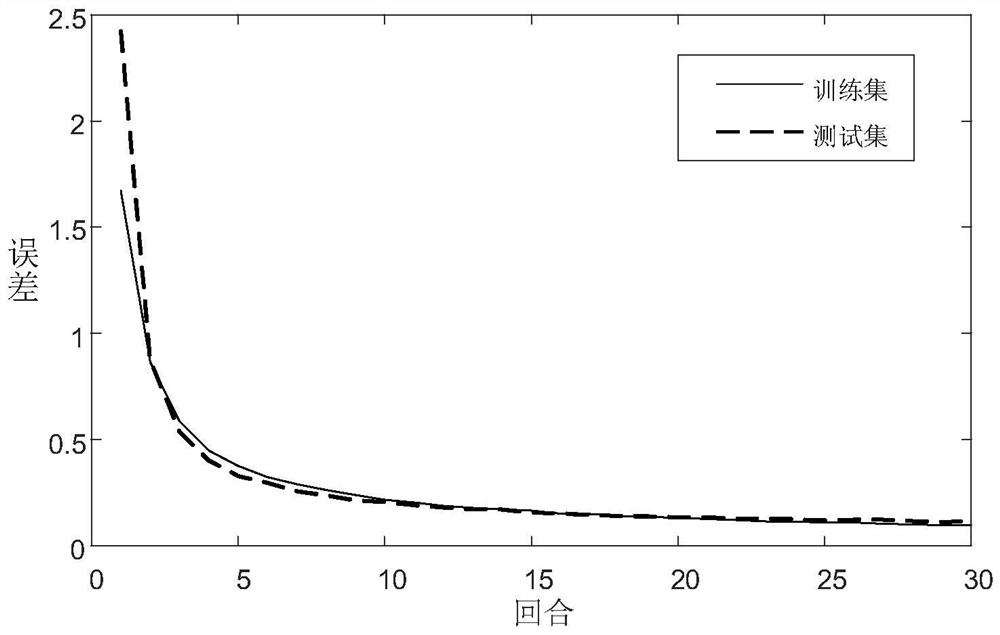

Binary tree filter Transform model and bearing fault diagnosis method thereof

Pending | CN114662529A | Efficient extraction | Easy to measure | Character and pattern recognition | Neural architectures | Engineering | Binary tree

The invention provides a binary tree filter Transform model and its application to bearing fault diagnosis. The word segmentation device part of the Transform model is improved by introducing a binary tree filter, so that the improved model can extract vibration signals; when the binary tree filter Transform model is used for bearing fault detection, it extracts the vibration signal and thereby improves detection accuracy. For an input vibration signal, a plurality of frequency bands can be effectively extracted and ranked from low to high frequency, so that the information in different frequency bands is effectively represented and fault information is measured better. In addition, compared with a traditional convolutional neural network or recurrent neural network, the Transform model can assign weights to the different frequency bands well, so as to obtain a more accurate recognition rate.

Owner:JIANGSU UNIV

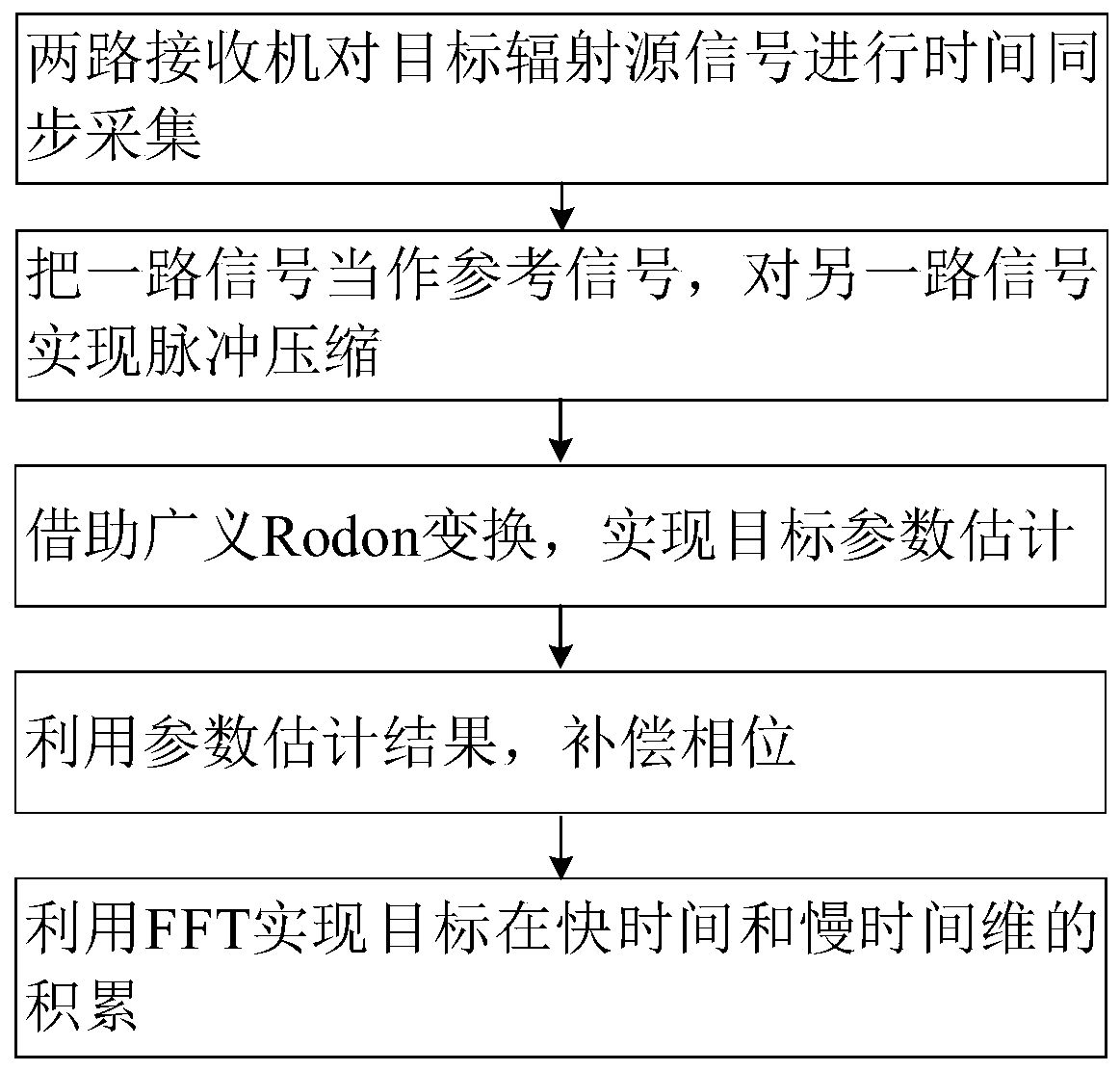

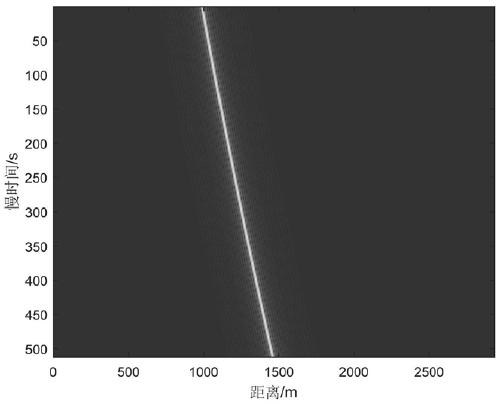

Method and device for target detection and parameter estimation of frequency hopping signals

Active | CN109001671B | Easy to handle | High positioning accuracy | Position fixation | Target signal | Software engineering

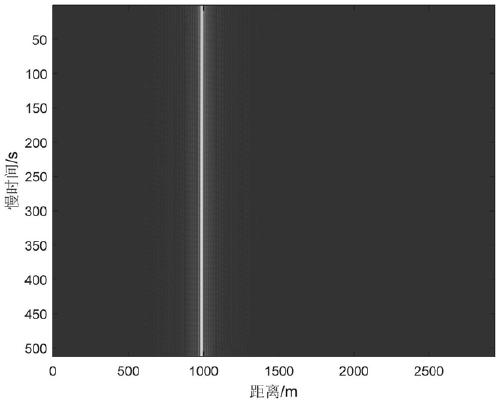

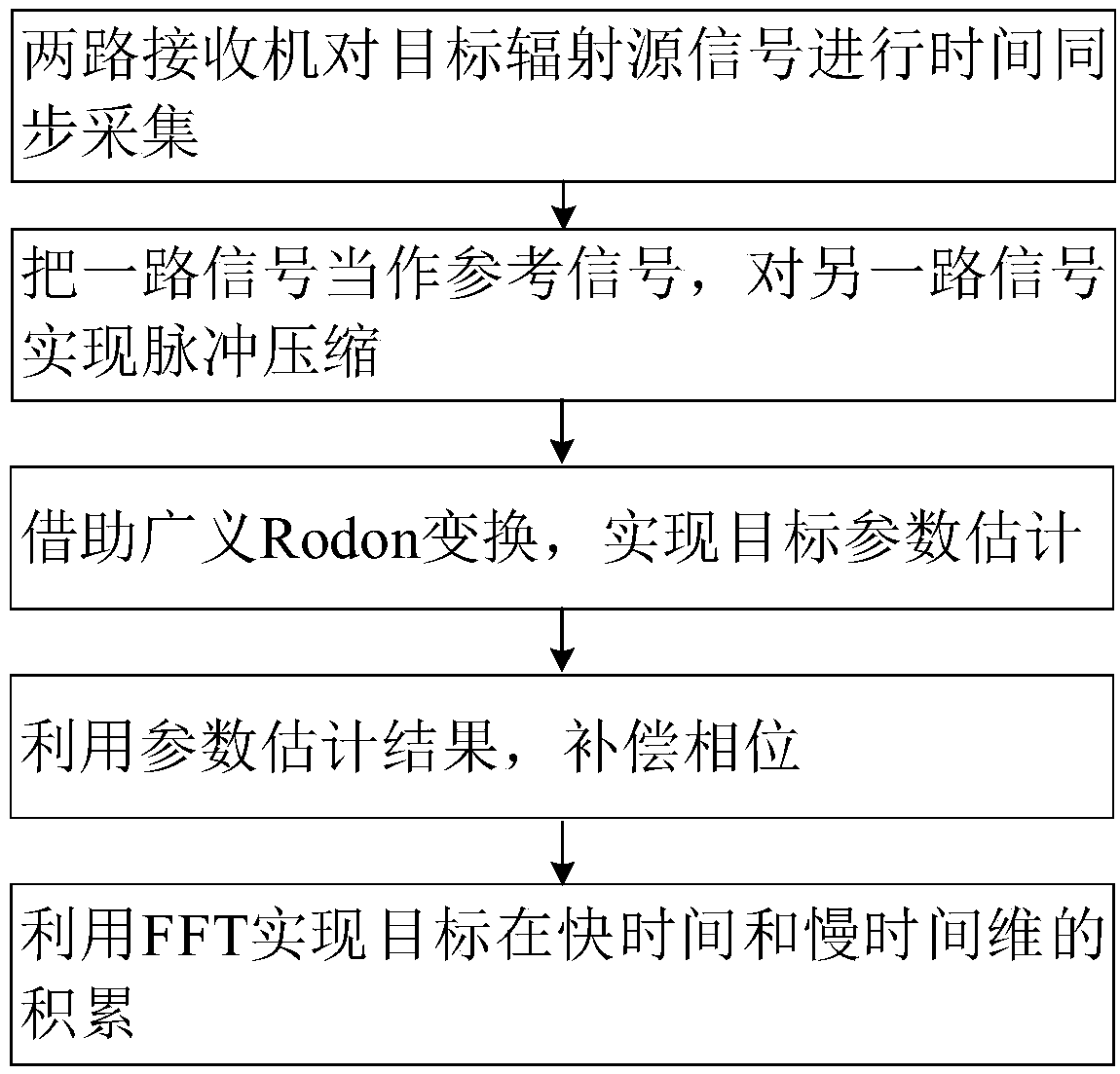

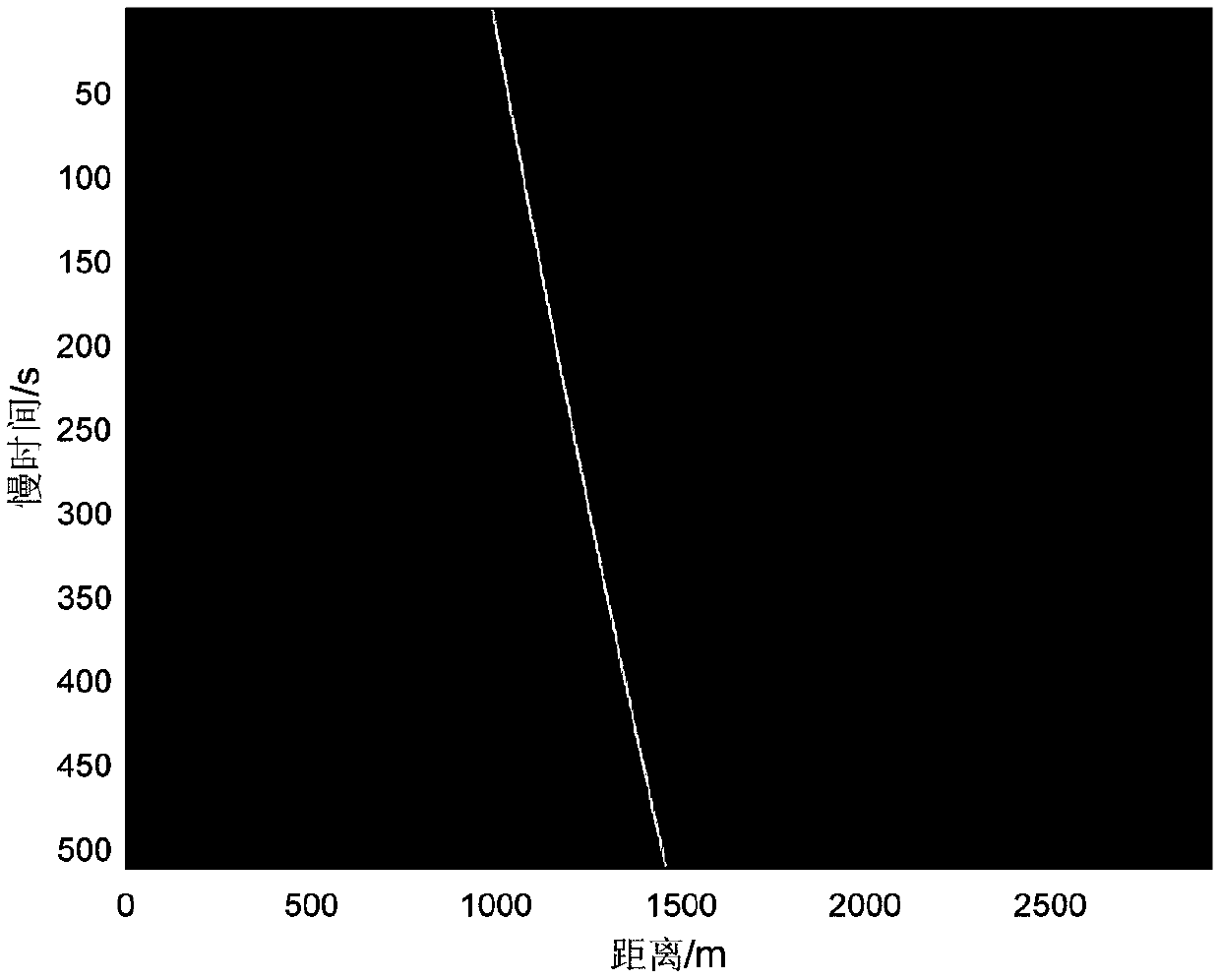

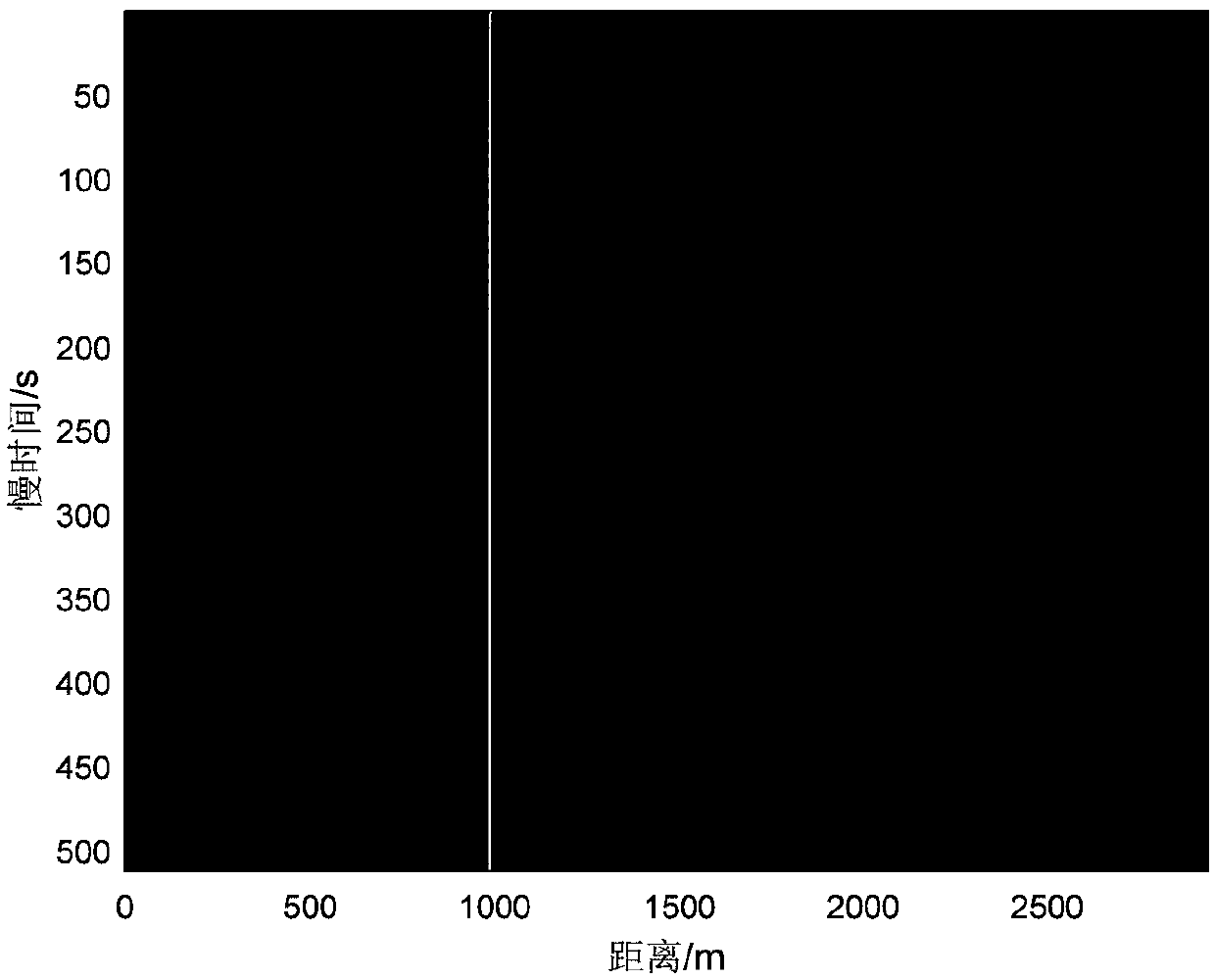

The invention relates to the field of signal processing, and in particular to a frequency hopping signal target detection and parameter estimation method and device. Two-way acquisition is performed on the signal of a target radiation source, and the collected signal is subjected to pulse compression; phase compensation is then carried out in combination with parameter estimation; finally, FFT accumulation is performed along the time dimension to obtain a target signal, and the position of the target radiation source is obtained from the target signal. The method and device can process frequency hopping signals effectively, improve the positioning precision of the target radiation source and the target detection probability, provide theoretical support for the detection of frequency hopping signals, adapt to relatively low signal-to-noise ratios, and achieve an accurate identification rate.

Owner:PLA STRATEGIC SUPPORT FORCE INFORMATION ENG UNIV PLA SSF IEU

Frequency hopping signal target detection and parameter estimation method and device

Active | CN109001671A | Easy to handle | High positioning accuracy | Position fixation | Signal-to-noise ratio (imaging) | Estimation methods

The invention relates to the field of signal processing, and in particular to a frequency hopping signal target detection and parameter estimation method and device. Two-way acquisition is performed on the signal of a target radiation source, and the collected signal is subjected to pulse compression; phase compensation is then carried out in combination with parameter estimation; finally, FFT accumulation is performed along the time dimension to obtain a target signal, and the position of the target radiation source is obtained from the target signal. The method and device can process frequency hopping signals effectively, improve the positioning precision of the target radiation source and the target detection probability, provide theoretical support for the detection of frequency hopping signals, adapt to relatively low signal-to-noise ratios, and achieve an accurate identification rate.

Owner:PLA STRATEGIC SUPPORT FORCE INFORMATION ENG UNIV PLA SSF IEU

Method, device and image acquisition equipment for statistics on rat situation

Active | CN109299703B | Accurate recognition rate | Fast detection calculation | Character and pattern recognition | Visual monitoring | Acquisition apparatus

The application discloses a method, a device and an image acquisition apparatus for counting the rat situation. The image acquisition apparatus includes an image acquisition device (110) configured to acquire images, and a processor (121) communicatively connected to the image acquisition device (110). The processor (121) is configured to perform the following operations: acquire video within a predetermined period of time from the image acquisition device (110); and analyze the video with a computing model based on a convolutional neural network to generate first statistical information related to the rat situation in the monitoring area monitored by the image acquisition device. The invention therefore solves the technical problems of existing machine vision monitoring methods: low detection efficiency, data volumes too large for fast acquisition of detection pictures, and an increased computing burden on the server as monitoring cameras are added.

Owner:思百达物联网科技(北京)有限公司

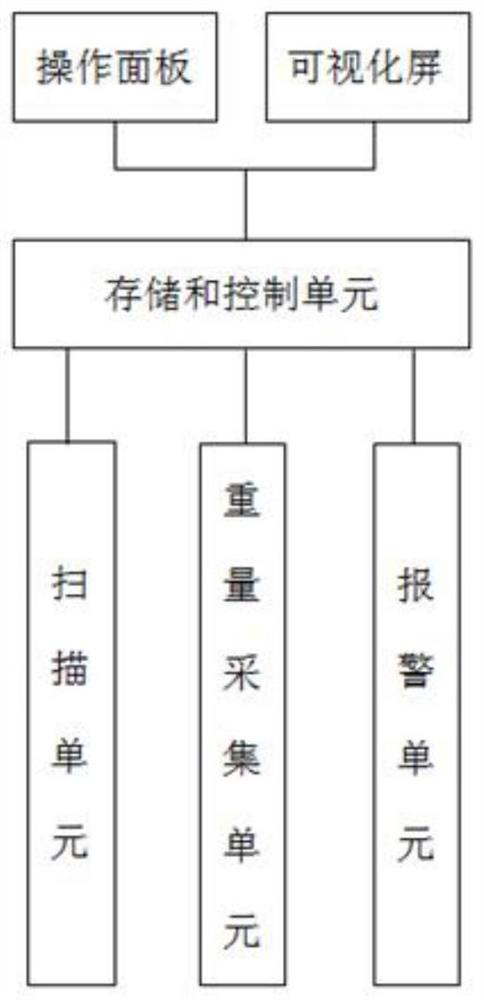

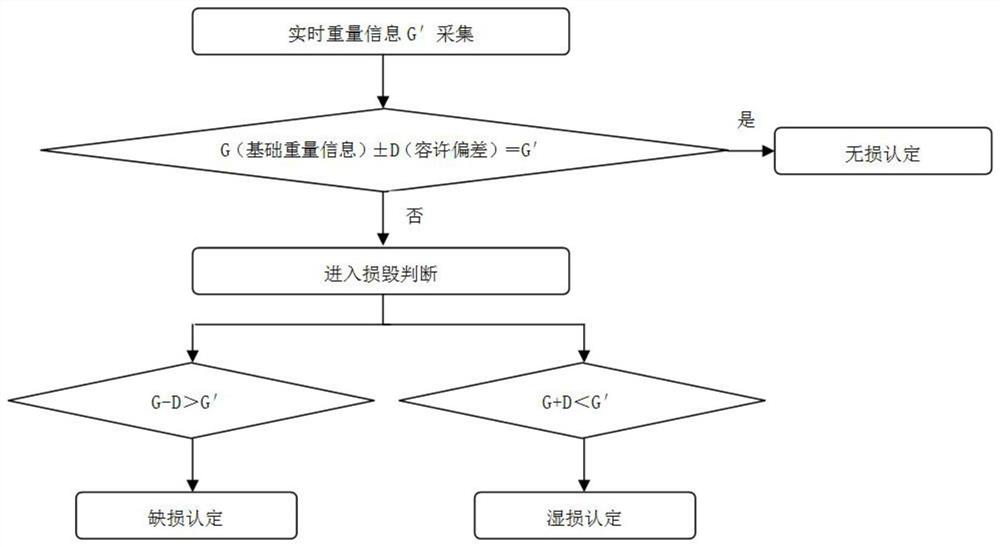

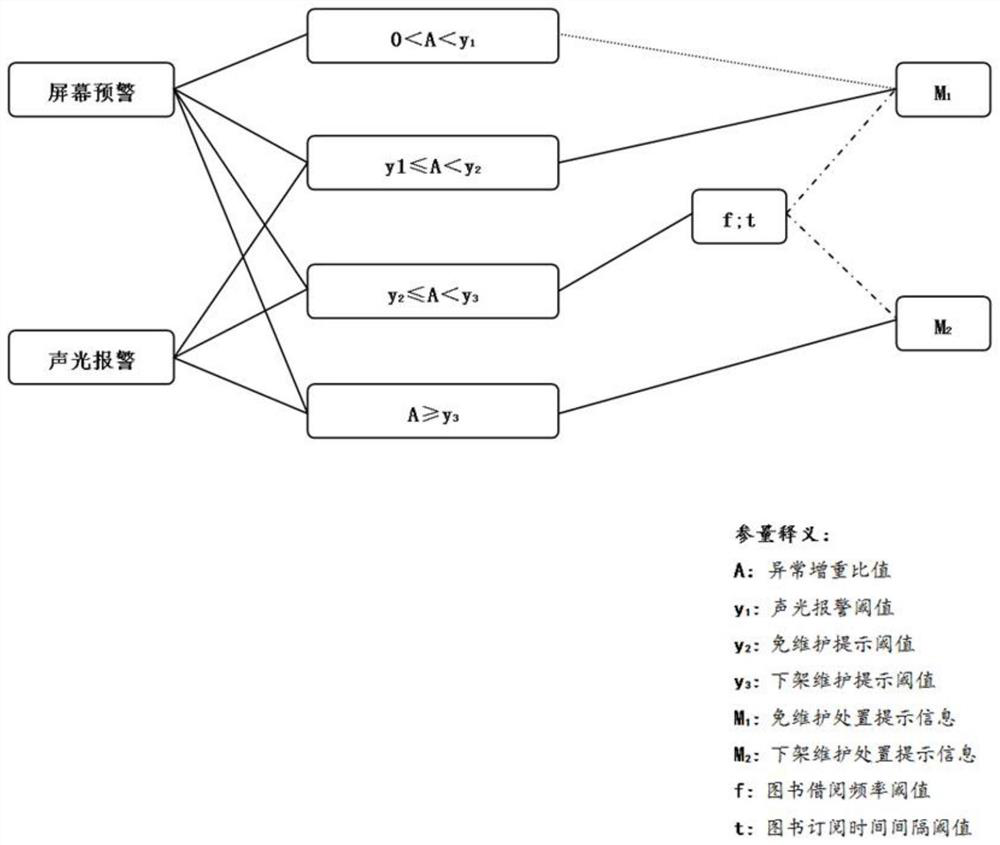

Scanning recognition system, scanning recognition method and book scanning equipment

Pending | CN114372542A | Achieving identifiability | Achieve disposal | Co-operative working arrangements | Cash registers | Algorithm | Control cell

The invention provides a scanning recognition system comprising a scanning unit, a weight acquisition unit, an alarm unit and a storage and control unit. The storage and control unit is set to automatically compare the pre-stored book basic weight information G with the book real-time weight information G' acquired by the weight acquisition unit. According to whether the difference between G and G' is forward or reverse, the book damage category is judged to be defect or wet damage. When the category is wet damage, the degree of wet damage is further identified as one of several wet damage levels according to the difference between G and G', and the signal of the alarm unit is controlled according to the identified wet damage level. The invention also provides a scanning identification method based on a threshold value. Wet damage identification, alarm and disposal are achieved during book scanning through the principle of weight detection and comparison, without large-scale hardware development or upgrading of book scanning equipment, and the method is efficient, accurate and low in cost.
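The weight comparison can be sketched as a single function. The abstract does not state which sign of the difference corresponds to which category or what the level thresholds are; treating a heavier book as wet damage and a lighter one as a defect, the 2 g tolerance, and the level limits of 10 g and 30 g are assumptions.

```python
def classify_book_damage(base_weight, measured_weight,
                         tolerance=2.0, wet_levels=(10.0, 30.0)):
    """Classify damage from basic weight G and real-time weight G' (grams).

    Returns (category, level); level is None unless the category is
    "wet damage". All thresholds are assumed values.
    """
    diff = measured_weight - base_weight
    if abs(diff) <= tolerance:
        return ("none", None)
    if diff < 0:
        # Lighter than recorded: assumed to mean missing material.
        return ("defect", None)
    # Heavier than recorded: assumed to mean absorbed moisture.
    # Level 1 plus one for every limit the difference exceeds.
    level = 1 + sum(diff > limit for limit in wet_levels)
    return ("wet damage", level)

print(classify_book_damage(500.0, 480.0))
print(classify_book_damage(500.0, 520.0))
```

The returned level could then select the alarm signal, matching the abstract's statement that the alarm unit is controlled according to the identified wet damage level.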

Owner:QINGDAO UNIV OF SCI & TECH

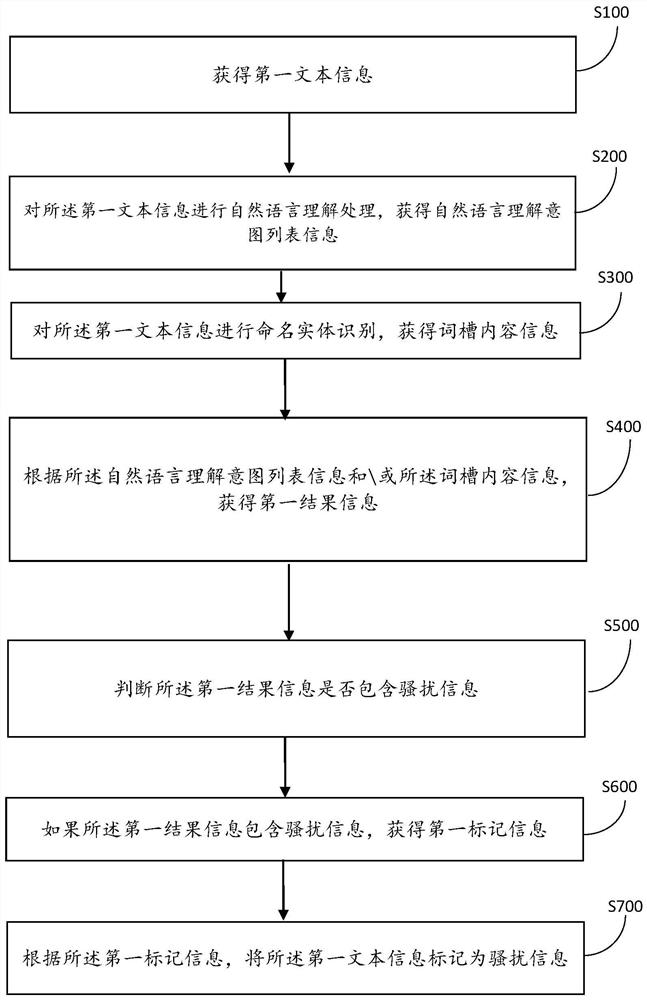

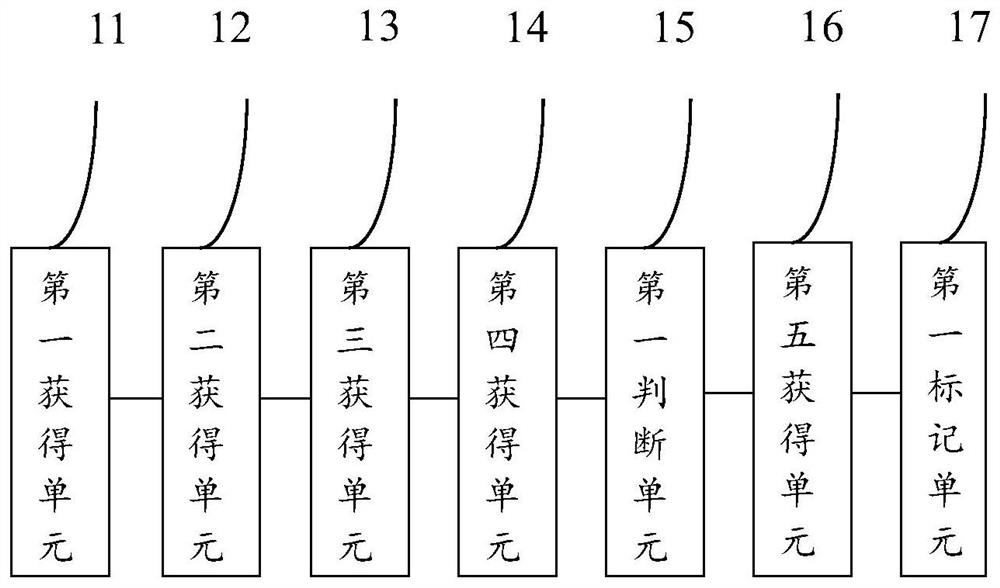

Disturbance information judgment method and system based on NER and NLU

Active | CN112651223A | Low cost | Quick response | Natural language data processing | Energy efficient computing | Natural language understanding | Named-entity recognition

The invention discloses a disturbance information judgment method and system based on NER and NLU. The method comprises: obtaining first text information; performing natural language understanding on the first text information to obtain natural language understanding intention list information; performing named entity recognition on the first text information to obtain word slot content information; obtaining first result information according to the intention list information and/or the word slot content information; judging whether the first result information contains harassment information; if so, obtaining first mark information; and marking the first text information as harassment information according to the first mark information. This solves the technical problems of the prior art: heavy consumption of time and energy, inability to respond quickly, weak pertinence, and inability to update at low cost.

Owner:浙江百应科技有限公司

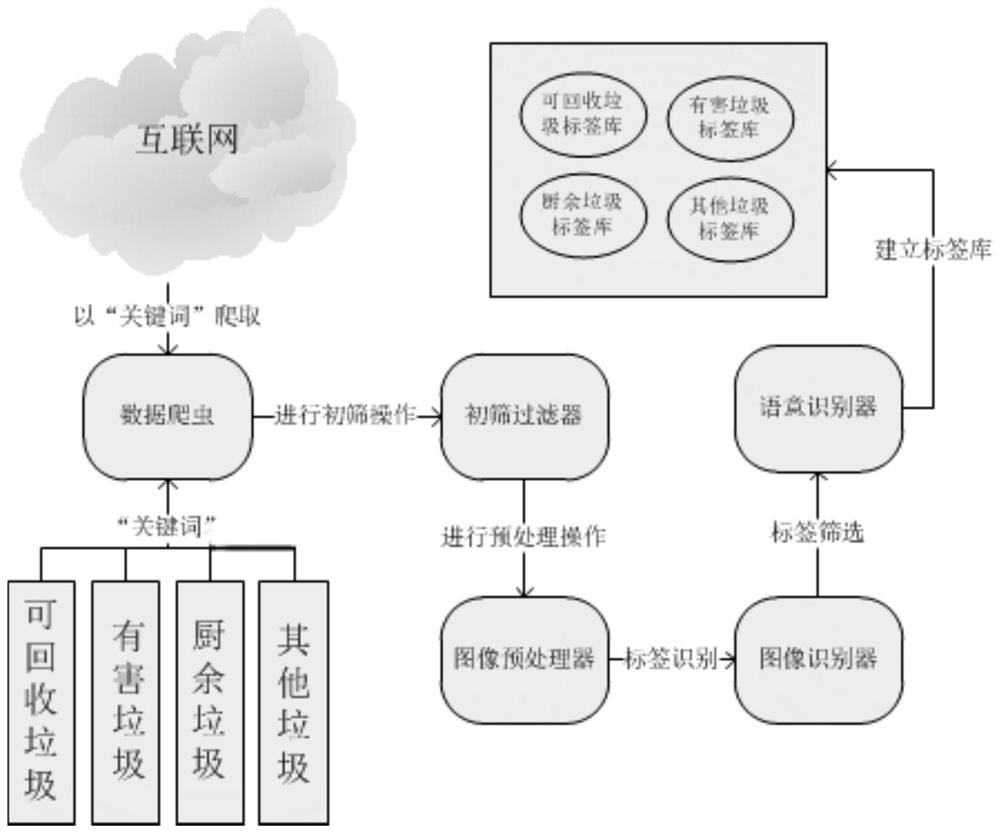

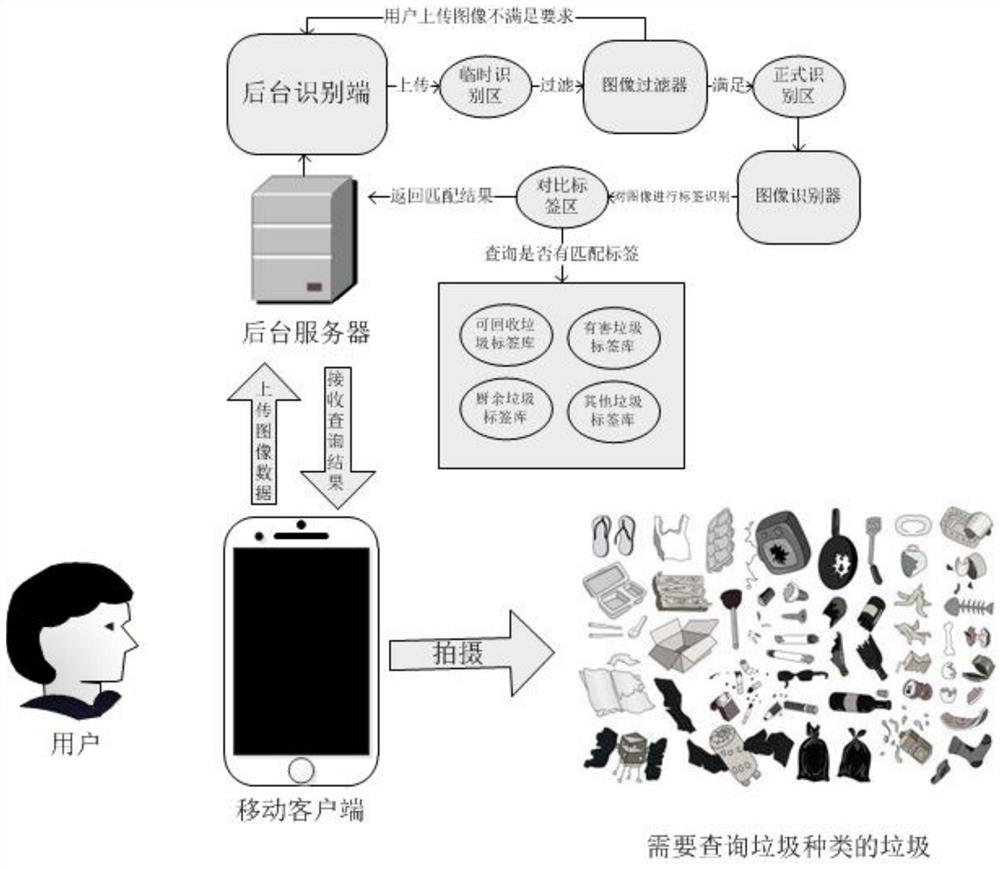

Garbage classification system and method based on garbage recognition

Pending | CN113076439A | Realize recognition and classification | Reduce manual labor | Web data indexing | Still image data indexing | Natural language processing | The Internet

The invention provides a garbage classification system and method based on garbage recognition, and relates to the field of garbage classification treatment. The system combines a data crawler, an image recognizer and a semantic recognizer. Pictures on the Internet related to recyclable garbage, harmful garbage, kitchen garbage and other garbage are crawled; the image recognizer labels the pictures; and the semantic recognizer retains the real object nouns, establishing a garbage classification label library. The labels of an image uploaded by a user are then retrieved in the label library, so that the garbage is recognized and classified. Training images do not need to be labeled manually, manual guidance in material screening is avoided, and blind areas can be identified. Meanwhile, through the word meaning similarity comparison function of the semantic recognizer, labels with different words but the same meaning can be matched, which greatly improves the generalization ability of the system.

Owner:四川九通智路科技有限公司
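
The label-library lookup described above can be illustrated with a small sketch. It assumes the image recognizer has already produced text labels for the uploaded picture; the library contents, the synonym groups (a crude stand-in for the semantic recognizer's word-meaning similarity), and the names `LABEL_LIBRARY`, `SYNONYM_GROUPS`, and `classify` are hypothetical.

```python
# Hypothetical label library: object noun -> garbage category.
LABEL_LIBRARY = {
    "bottle": "recyclable",
    "battery": "harmful",
    "banana peel": "kitchen",
    "cigarette butt": "other",
}

# Hypothetical synonym groups standing in for word-meaning similarity.
SYNONYM_GROUPS = [
    {"bottle", "flask"},
    {"battery", "cell"},
]


def canonical(label):
    """Map a label to a key of the library, directly or via a synonym."""
    label = label.lower().strip()
    if label in LABEL_LIBRARY:
        return label
    for group in SYNONYM_GROUPS:
        if label in group:
            for member in sorted(group):
                if member in LABEL_LIBRARY:
                    return member
    return None


def classify(image_labels):
    """Return the category of the first label found in the library."""
    for label in image_labels:
        key = canonical(label)
        if key is not None:
            return LABEL_LIBRARY[key]
    return "unknown"
```

For example, `classify(["Flask"])` resolves to the `bottle` entry through the synonym group, which is the "different words, same meaning" case the abstract mentions.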

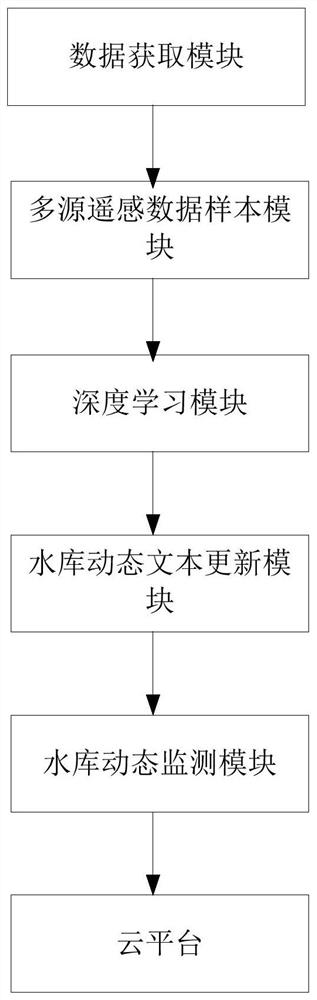

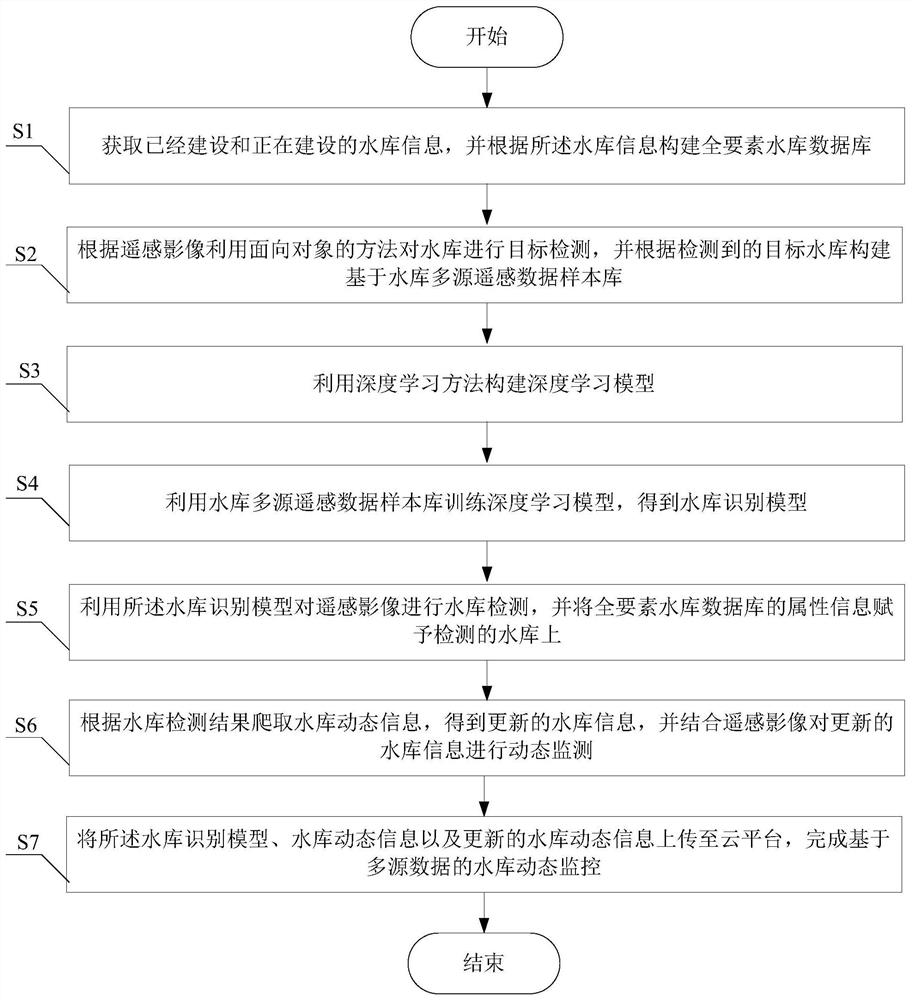

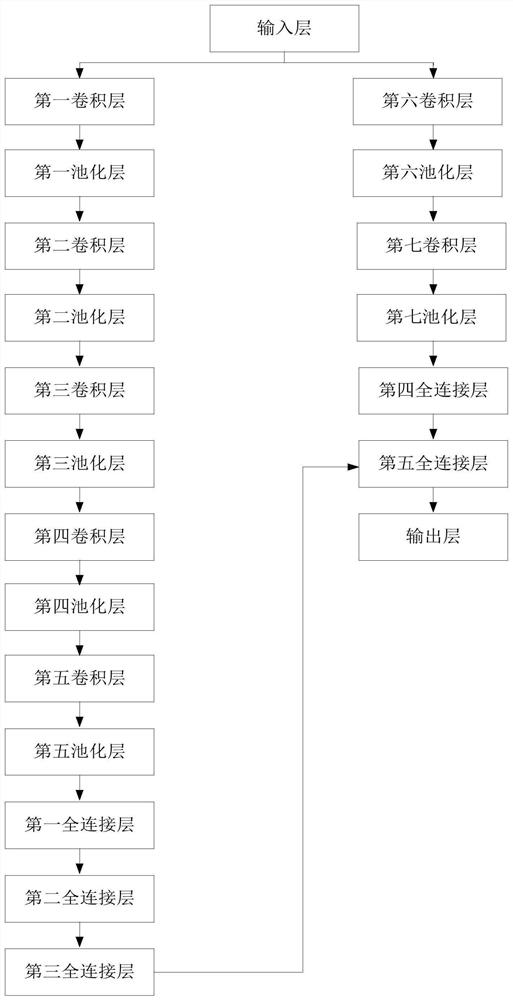

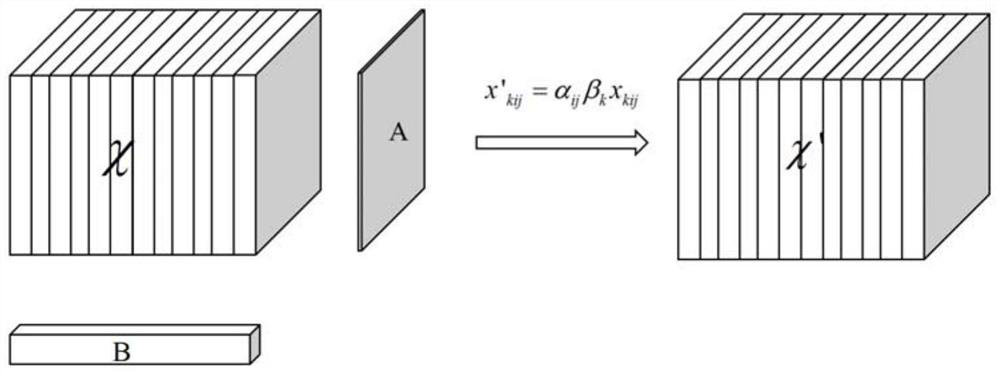

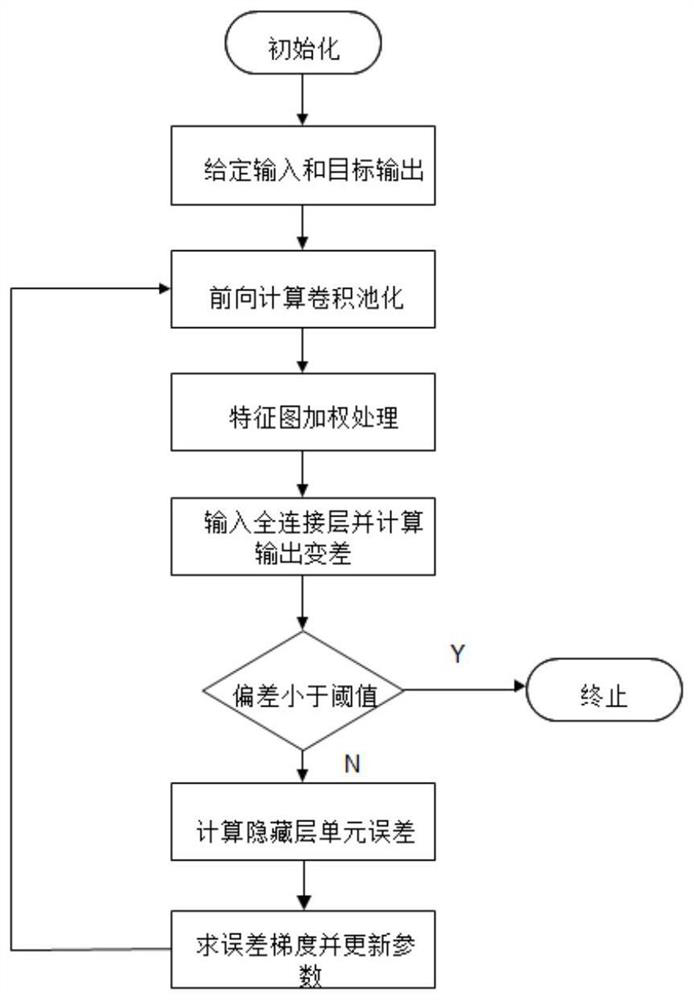

Reservoir dynamic monitoring system and method based on multi-source data

Active | CN112766146A | Wide range of build methods | Accurate recognition rate | Data processing applications | Climate change adaptation | Source data | Sensing data

The invention provides a reservoir dynamic monitoring system and method based on multi-source data, and belongs to the field of reservoir dynamic monitoring. The system comprises a data acquisition module, a multi-source remote sensing data sample module, a deep learning module, a reservoir dynamic text updating module, a reservoir dynamic monitoring module and a cloud platform. The method includes: constructing a nationwide total-factor reservoir information database; detecting reservoir targets with an object-oriented classification method combined with deep learning, and assigning reservoir attribute information; and updating the reservoir information database according to a remote sensing monitoring method and a ubiquitous network monitoring method. The nationwide active monitoring method can discover, track and lock onto hot spots and key targets in reservoir construction and management, extracts in-depth reservoir information automatically, and makes water conservancy information much easier to use.

Owner:YELLOW RIVER ENG CONSULTING +1

Feature extraction method of underwater target based on convolutional neural network

Active | CN107194404B | Make up for lost defects | Accurate recognition rate | Character and pattern recognition | Neural architectures | Feature vector | Feature extraction

The invention provides an underwater target feature extraction method based on a convolutional neural network. 1. Divide the sampling sequence of the original radiation noise signal into 25 consecutive parts, with 25 sampling points in each part; 2. Normalize and center the samples of the j-th segment of the data signal; 3. Perform a short-time Fourier transform to obtain the LoFAR image; 4. Assign the vector to the existing 3-dimensional tensor; 5. Input the obtained feature vector to the fully connected layer for classification, calculate the error against the label data, and check whether the loss error is below the error threshold; if it is, stop the network training, otherwise go to step 6; 6. Use the gradient descent method to adjust the network parameters layer by layer from back to front, and go to step 2. Compared with traditional convolutional neural network algorithms, the method performs a multi-dimensional weighting operation on spatial information at the feature-map layer to compensate for the spatial information lost through one-dimensional vectorization in the fully connected layer.

Owner:HARBIN ENG UNIV
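
Steps 1 to 3 of the abstract above (segmentation, normalization and centering, short-time Fourier transform) can be sketched in plain Python. The abstract does not give the exact normalization or window, so peak scaling, a rectangular window, and non-overlapping segments are assumptions here, and the function names are hypothetical.

```python
import cmath
import math


def split_segments(signal, n_segments=25, segment_len=25):
    """Step 1: cut the sample sequence into consecutive fixed-length parts."""
    needed = n_segments * segment_len
    if len(signal) < needed:
        raise ValueError(f"need at least {needed} samples, got {len(signal)}")
    return [signal[i * segment_len:(i + 1) * segment_len] for i in range(n_segments)]


def normalize_and_center(segment):
    """Step 2 (assumed form): scale by the peak magnitude, then subtract the mean."""
    peak = max(abs(x) for x in segment)
    scaled = [x / peak for x in segment] if peak else list(segment)
    mean = sum(scaled) / len(scaled)
    return [x - mean for x in scaled]


def magnitude_spectrum(segment):
    """DFT magnitudes of one segment for the non-negative frequency bins."""
    n = len(segment)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(segment)))
        for k in range(n // 2 + 1)
    ]


def lofar_gram(signal):
    """Step 3: one spectrum per segment, stacked into a time-frequency image."""
    return [magnitude_spectrum(normalize_and_center(seg)) for seg in split_segments(signal)]
```

Taking one DFT per non-overlapping segment is the simplest form of a short-time Fourier transform (hop equal to the window length). A practical LoFAR pipeline would typically use a tapered window and overlap.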

An RFID reader/writer with multiple antennas working at the same time and a method for identifying radio frequency data signals

Active | CN103324969B | Improve recognition rate | Accurate recognition rate | Co-operative working arrangements | Data signal | Radio frequency

Owner:XIAMEN XINDECO IOT TECH

Method and system for detecting file stealing Trojan based on thread behavior

Active | CN102394859B | Accurate recognition rate | No false positives | Platform integrity maintenance | Transmission | File transmission | Operating system

The invention provides a method for detecting file-stealing Trojans based on thread behavior, comprising the following steps: monitoring the file operations and network operations of a thread; forming a behavior-sequence buffer queue from the monitored thread, the process it belongs to, the intercepted file operations, the data read from files, the network operations and the data transmitted over the network; judging, from the behavior sequence in the buffer queue, whether a file read by the thread is a file transmitted over the network; if so, checking whether the thread and its process are concealed; and if so, determining that file-stealing Trojan behavior exists. The invention also provides a system for detecting file-stealing Trojans based on thread behavior. The method and system reduce false alarms on normal file transmission and improve the detection of file-stealing Trojan behavior.

Owner:HARBIN ANTIY TECH
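
The behavior-sequence check in the abstract above can be sketched as follows. This is an illustration under stated assumptions: events are fed in by some external monitor, "transmitted over the network" is approximated by a byte-substring match, and the concealment check is reduced to membership in a hypothetical `hidden_threads` set. The names `Event` and `BehaviorMonitor` are not from the patent.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    thread_id: int
    kind: str      # "file_read" or "net_send"
    target: str    # file path or remote address
    data: bytes    # bytes read from the file or sent over the network


class BehaviorMonitor:
    def __init__(self, max_events=1024, hidden_threads=()):
        # Bounded buffer queue holding the most recent behavior sequence.
        self.queue = deque(maxlen=max_events)
        # Hypothetical stand-in for the concealment check on thread and process.
        self.hidden_threads = set(hidden_threads)

    def record(self, event):
        self.queue.append(event)

    def suspicious_threads(self):
        """Threads that sent previously read file data and are concealed."""
        reads = {}
        flagged = set()
        for ev in self.queue:
            if ev.kind == "file_read":
                reads.setdefault(ev.thread_id, []).append(ev.data)
            elif ev.kind == "net_send":
                leaked = any(
                    chunk and chunk in ev.data
                    for chunk in reads.get(ev.thread_id, [])
                )
                if leaked and ev.thread_id in self.hidden_threads:
                    flagged.add(ev.thread_id)
        return flagged
```

Requiring both conditions is what keeps an ordinary visible file upload from being reported: a thread that reads and sends the same bytes but is not concealed is left unflagged.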

Behavior recognition method based on spatiotemporal volume of local joint point trajectory in skeleton sequence

Active | CN110555387B | Reduce time complexity | Solve problems such as perspective changes | Character and pattern recognition | Feature vector | Data set

The invention belongs to the technical field of artificial intelligence, and discloses a behavior recognition method based on the spatiotemporal volume of local joint point trajectories in a skeleton sequence. The method extracts the spatiotemporal volumes of local joint point trajectories from the input RGB video data and skeleton joint point data; extracts image features with a model pre-trained on a video data set; constructs a codebook for each feature type of each joint point in the training set and encodes each separately, concatenating the features of the n joint points into one feature vector; and performs behavior classification and recognition with an SVM classifier. The invention fuses hand-crafted features with deep learning features, using deep learning to extract local features, and the fusion of multiple features achieves a stable and accurate recognition rate. Features are extracted from the RGB video sequence and the 2D human skeleton estimated by a pose estimation algorithm, which is low in cost and high in accuracy and makes the method well suited to real scenes.

Owner:HUAQIAO UNIVERSITY
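
The codebook encoding and concatenation step above can be illustrated with a small sketch. The abstract does not say which encoding is used, so hard-assignment histogram encoding (bag of visual words) is an assumption here, and the codebooks are taken as given rather than learned. The function names are hypothetical.

```python
def nearest(codebook, descriptor):
    """Index of the codeword closest to the descriptor (squared Euclidean)."""
    return min(
        range(len(codebook)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(codebook[i], descriptor)),
    )


def encode(codebook, descriptors):
    """Normalized histogram of codeword assignments for one joint point."""
    hist = [0.0] * len(codebook)
    for d in descriptors:
        hist[nearest(codebook, d)] += 1.0
    total = sum(hist)
    return [h / total for h in hist] if total else hist


def video_feature(codebooks, descriptors_per_joint):
    """Concatenate the per-joint encodings into one feature vector."""
    feature = []
    for codebook, descriptors in zip(codebooks, descriptors_per_joint):
        feature.extend(encode(codebook, descriptors))
    return feature
```

The resulting fixed-length vector is what a classifier such as an SVM would consume; training the classifier and learning the codebooks (for example by clustering) are outside this sketch.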

A Video Tracking Method Based on Adaptive Blocking

Active | CN105118071B | Improve accuracy | Improve adaptability | Image analysis | Character and pattern recognition | Adaptive video | Video tracking

The invention relates to a video tracking method based on adaptive blocking. The method takes full account of the differences in pixel values within the target area and, building on particle-filter video tracking, achieves efficient adaptive tracking with improved accuracy and adaptability. Under occlusion and interference, the invention maintains a relatively accurate recognition rate: it makes full use of the content information in the video and images and adaptively adjusts the blocking strategy according to the characteristics of the tracked target area, achieving highly intelligent, high-accuracy video tracking.

Owner:SHANDONG UNIV

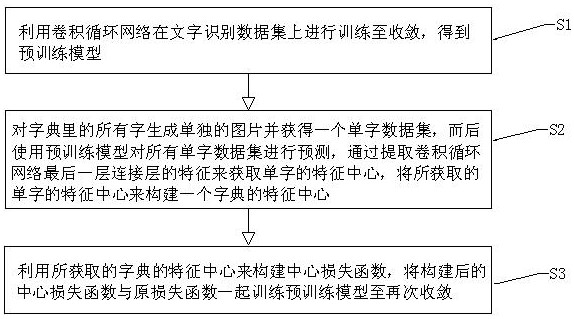

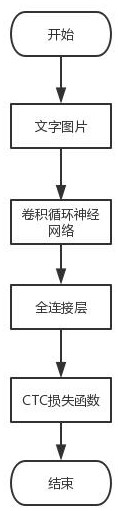

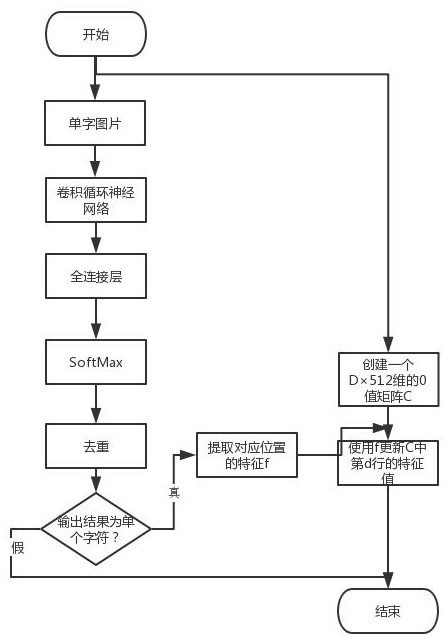

An Improved Training Method for Character Recognition Based on Center Loss

Active | CN113326833B | Prevent problems with not training correctly | Reduce distance | Neural architectures | Character recognition | Data set | Algorithm

The invention discloses an improved training method for character recognition based on center loss. The method comprises the following steps: S1, training on a character recognition data set until convergence to obtain a pre-trained model; S2, extracting the features of the last fully connected layer to obtain the feature center of each character, and using these feature centers to construct a dictionary of feature centers; S3, continuing to train the pre-trained model until convergence. Beneficial effects: the center loss training module makes the feature space of each character more compact and pulls similar-looking characters toward their respective feature centers, so that similar characters are easier to tell apart. This improves the accuracy of the model in character recognition without changing the model size or inference speed.

Owner:WHALE CLOUD TECH CO LTD
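
The feature-center dictionary of step S2 and the center loss term can be sketched in plain Python. This is a generic illustration of center loss (half the squared distance between each feature and the center of its class), not the patented training procedure. Averaging over the batch is an assumption, and the names `class_centers` and `center_loss` are hypothetical.

```python
def class_centers(features, labels):
    """Build a dictionary mapping each character to its mean feature vector."""
    sums, counts = {}, {}
    for vec, label in zip(features, labels):
        acc = sums.setdefault(label, [0.0] * len(vec))
        for i, v in enumerate(vec):
            acc[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def center_loss(features, labels, centers):
    """Mean over the batch of 0.5 * squared distance to the class center."""
    total = 0.0
    for vec, label in zip(features, labels):
        total += sum((v - c) ** 2 for v, c in zip(vec, centers[label]))
    return 0.5 * total / len(features)
```

During continued training (step S3), a term like this would usually be added to the recognition loss with a small weight, so that features of the same character are pulled toward their center while the main loss keeps classes separable.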

